Why Keymakr?

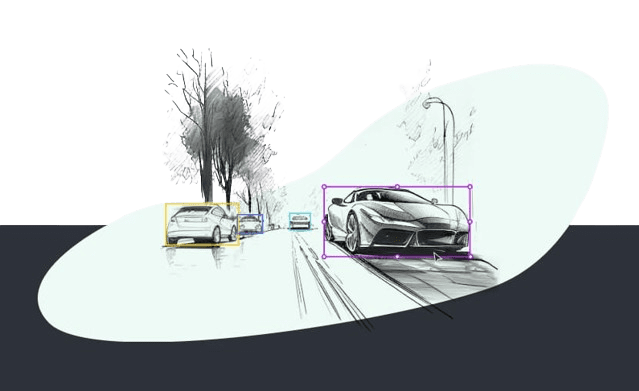

Keylabs annotation platform

70% more cost-effective

4 layers of quality assurance

Annotation experts

24/7 customer support