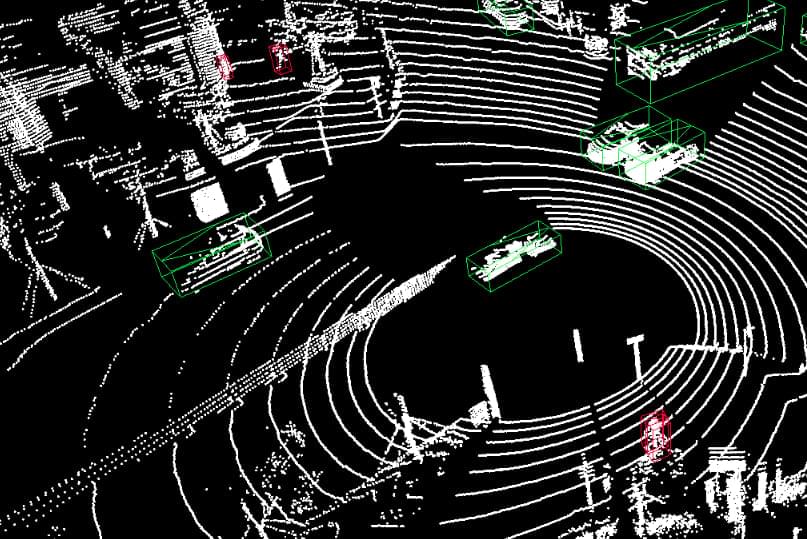

Video annotation is an incredibly challenging task. Unlike static images, videos contain large amounts of high-dimensional data, which makes it difficult for computer vision systems to recognize objects and events. The medium is also dynamic and complex, and often requires a human eye to interpret fully. Data management is another challenge, given the size of video files.
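To get a feel for the data sizes involved, here is a quick back-of-the-envelope calculation. It is a minimal sketch that assumes uncompressed RGB frames with 8 bits per channel. Stored video is normally compressed and much smaller, but frames are typically decoded to roughly this raw form before they are displayed, annotated, or fed to a model. The function name `raw_video_bytes` is purely illustrative.

```python
def raw_video_bytes(width: int, height: int, fps: int, seconds: float, channels: int = 3) -> int:
    """Return the uncompressed size in bytes of `seconds` of video.

    Assumes one byte (8 bits) per channel per pixel, e.g. 8-bit RGB.
    """
    bytes_per_frame = width * height * channels  # each value is one dimension of the frame
    frames = int(fps * seconds)
    return bytes_per_frame * frames


# A single 1080p RGB frame already holds over six million values.
frame = raw_video_bytes(1920, 1080, fps=1, seconds=1)
# One minute of 1080p video at 30 frames per second, uncompressed.
minute = raw_video_bytes(1920, 1080, fps=30, seconds=60)

print(f"one frame:  {frame:,} bytes")        # one frame:  6,220,800 bytes
print(f"one minute: {minute / 1e9:.1f} GB")  # one minute: 11.2 GB
```

Every frame is effectively a vector of millions of values, and a minute of footage contains 1,800 of them, which is why both recognition and storage become hard at video scale.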

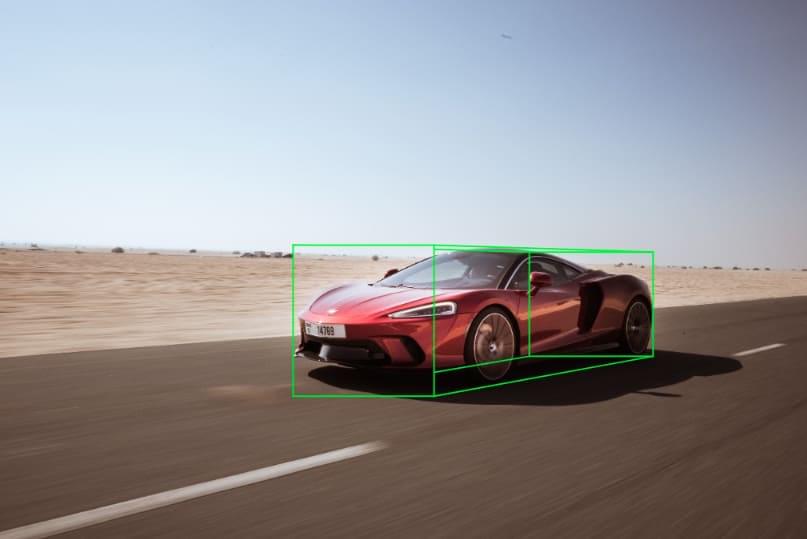

For example, if you want to detect people in footage automatically, you'll need to teach a computer vision model how people move. That requires many examples of people walking in different situations, so that the model can learn what constitutes a person (e.g., their size, skeleton, and speed), how people move relative to one another and to their environment, and how different kinds of motion differ (e.g., running versus walking).

Beyond the time it takes to annotate data manually, many other factors can affect accuracy and consistency. For example, if a tool offers multiple ways of tagging the same content (e.g., by providing different fields for each label), different annotators may apply different labels to similar content, leaving the dataset inconsistent.
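One common way to limit this kind of drift is to agree on a fixed label vocabulary and normalize every entry against it. The sketch below shows that idea. The vocabulary, the label names, and the `normalize_label` helper are all hypothetical examples, not features of any particular annotation tool.

```python
# Hypothetical canonical vocabulary: every accepted spelling maps to one label.
CANONICAL_LABELS = {
    "person": "person",
    "pedestrian": "person",
    "human": "person",
    "car": "vehicle",
    "automobile": "vehicle",
    "vehicle": "vehicle",
}


def normalize_label(raw: str) -> str:
    """Map an annotator's free-text label to its canonical form.

    Raises ValueError for labels outside the agreed vocabulary, so
    inconsistencies are caught when the label is entered rather than later.
    """
    key = raw.strip().lower()
    if key not in CANONICAL_LABELS:
        raise ValueError(f"unknown label: {raw!r}")
    return CANONICAL_LABELS[key]


annotations = ["Pedestrian", "person ", "HUMAN", "car"]
print([normalize_label(a) for a in annotations])
# ['person', 'person', 'person', 'vehicle']
```

Rejecting unknown labels outright, instead of silently accepting them, keeps the vocabulary closed so that similar content cannot end up under different names.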

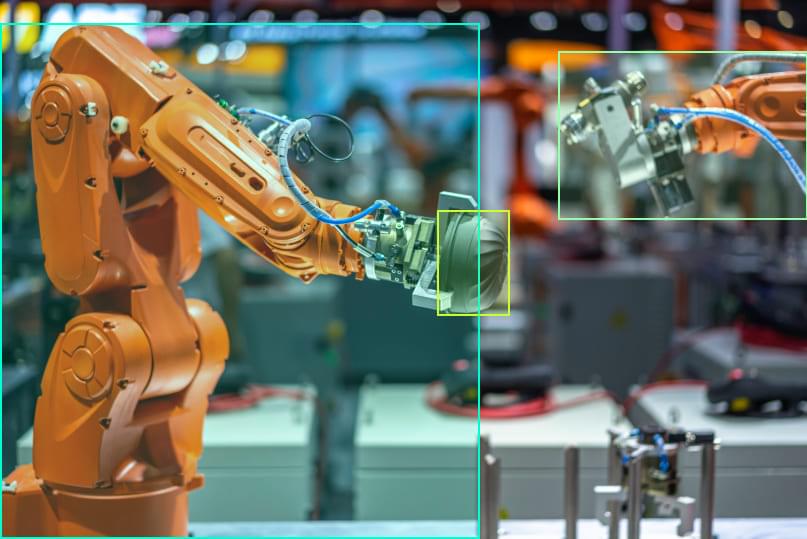

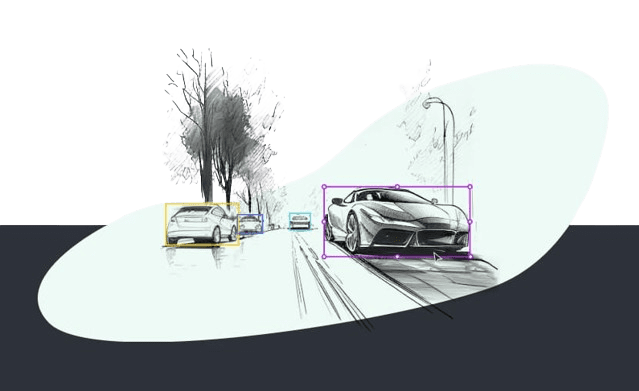

To overcome these challenges, researchers have developed several approaches that can automatically extract and mark up content. One of the most popular is based on machine learning (ML), which gives computers the ability to interpret data with little or no human intervention.

ML algorithms learn to solve problems from examples rather than being explicitly programmed by humans. For example, instead of requiring users to tag thousands of videos manually, an ML model can extract data and mark up content automatically, at a scale and consistency that is difficult to achieve by hand.
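As a toy illustration of "learning from examples instead of explicit rules", the sketch below proposes a label for an unlabeled frame using k-nearest neighbors: it finds the most similar already-labeled frames and takes a majority vote. This is one simple ML technique chosen for illustration, not the method any specific annotation tool uses. The two-dimensional feature vectors and labels are made up; real systems would use features from a trained model and far richer classifiers, such as object detectors.

```python
import math
from collections import Counter

# Hypothetical per-frame feature vectors with human-provided labels.
# The numbers are invented purely for illustration.
labeled = [
    ((0.9, 0.1), "person"),
    ((0.8, 0.2), "person"),
    ((0.1, 0.9), "vehicle"),
    ((0.2, 0.8), "vehicle"),
]


def propose_label(features, examples, k=3):
    """Propose a label by majority vote among the k nearest labeled examples."""
    # Sort labeled examples by Euclidean distance to the new frame's features.
    nearest = sorted(examples, key=lambda ex: math.dist(features, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(propose_label((0.85, 0.15), labeled))  # person
print(propose_label((0.15, 0.85), labeled))  # vehicle
```

No rule saying "high first feature means person" was ever written; the behavior comes entirely from the labeled examples, which is the property the paragraph above describes.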