Complete guide to RLHF for LLMs

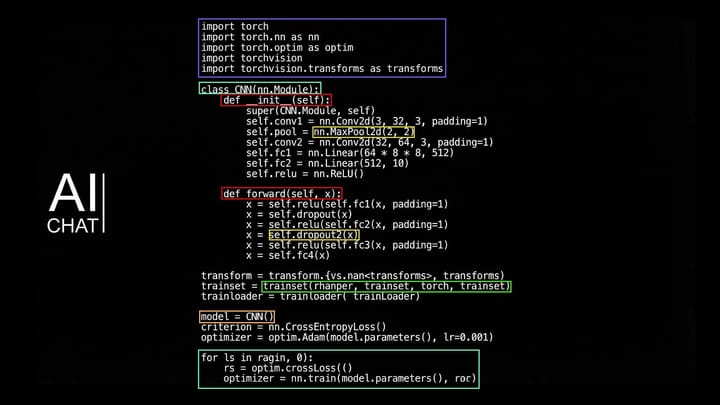

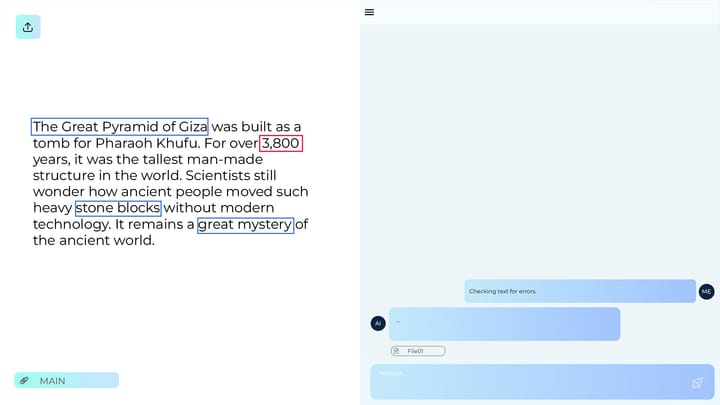

Reinforcement Learning from Human Feedback (RLHF) is a key approach to aligning large language models (LLMs) with human values and expectations. Rather than relying solely on automated metrics, RLHF uses human judgments of model outputs as a training signal. These judgments steer the model toward the responses people actually prefer.
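One common way to turn human ratings into a training signal is to collect pairwise comparisons, where a person picks the better of two responses. A reward model is then trained to score the preferred response higher. Below is a minimal sketch of the pairwise (Bradley-Terry style) loss often used for this step. It assumes the reward model already outputs one scalar score per response. The function names and the scores in the usage line are hypothetical. The sketch covers only the reward-modeling step, not the later reinforcement-learning stage (for example, PPO) that optimizes the LLM against the learned reward.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss for a reward model: -log(sigmoid(chosen - rejected)).

    The loss is small when the model scores the human-preferred ("chosen")
    response above the rejected one, and large when it ranks them the wrong way.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)) for any sign of margin.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_preference_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen_score, rejected_score) pairs from human comparisons."""
    return sum(preference_loss(c, r) for c, r in pairs) / len(pairs)


# Hypothetical reward-model scores for two human-labeled comparisons.
example_pairs = [(1.5, 0.2), (0.3, 0.9)]
print(mean_preference_loss(example_pairs))
```

The second pair contributes most of the loss because the model currently ranks the rejected response higher. Minimizing this loss pushes the reward model to agree with the human rankings.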

This method enables LLMs to generate grammatically correct text and exhibit