Prepping Data for Self-Supervised Learning: Labeling Less, Learning More

With datasets' growing complexity and size, the need for methods requiring less manual annotation has become critical. Learning from data without the traditional need for labeled samples pushes the boundaries of autonomous machine capabilities.

This approach allows machines to generate their control signals, making it possible to train models without explicit labels. This opens up the possibility of utilizing the vast amount of unlabeled data that is often more readily available. With fewer restrictions associated with labeling data, the industry is seeing innovations that could change the machine-learning landscape as we know it.

The Evolution of Learning Methods in AI

Early AI systems relied heavily on supervised learning, where models were trained using labeled data, making them dependent on much manual work for labeling. With the expansion of AI applications, this approach has shown its limitations, especially when labeled data is rare or expensive. In response, unsupervised learning methods have emerged that focus on identifying patterns and structures in data without the need for labeled examples.

With the development of this field, self-study began to attract attention as an intermediate option between teacher-assisted and unsupervised learning. Self-learning allows models to learn from unlabeled data by generating labels through pre-text tasks, such as predicting missing data or understanding relationships in the data. Over time, deep learning techniques, especially in natural language processing and computer vision, have further revolutionized the ability to learn from massive, unstructured data sets.

From Supervised to Unsupervised Approaches

Supervised learning, the dominant method in the early stages of AI development, involves training models on labeled datasets where each input is paired with the correct output. While this approach has been efficient for tasks such as image classification and language translation, it has a significant limitation due to the need for a considerable amount of labeled data.

Unsupervised learning moves away from the need for labeled data and focuses on discovering hidden patterns, structures, or relationships in the data. In this approach, models find groups, associations, or representations without predefined categories. Although unsupervised learning has challenges, such as difficulties in evaluating performance due to the lack of labels, it has proven to be a powerful tool for extracting information from large datasets.

Early Challenges in Labeled Data Requirements

One of the most significant challenges in the early stages of machine learning was the heavy reliance on labeled data. Supervised learning models require large, high-quality datasets for each input signal associated with a corresponding label or ground truth. This need for extensive labeled data has posed several obstacles, especially in industries where data collection and annotation have been expensive, time-consuming, or even impossible.

As the complexity of machine learning tasks grew, so did the demand for labeled data, leading to a bottleneck that slowed the pace of innovation. In many industries, the lack of labeled examples limited the ability of AI systems to generalize and perform well with new, unknown data. In addition, the manual labeling process was often not feasible for the massive amounts of data generated daily.

Fundamentals of Self-supervised Data Preparation

The fundamentals of supervised self-learning are to create conditions under which a model can learn meaningful representations from unlabeled data. In contrast to supervised learning, where data has predefined labels, self-learning generates its own supervised learning by designing tasks or "task-pretexts" that encourage the model to extract useful features from the raw data. The key to preparing data for self-learning is to identify ways to exploit the inherent structure in the data itself, allowing the model to predict or reconstruct missing parts, predict relationships between different parts, or generate meaningful representations without the need for human annotations.

For example, a common pre-text task in computer vision may involve masking parts of an image and asking the model to predict the missing areas based on the surrounding context. In natural language processing, tasks such as predicting the next word in a sentence or filling in missing words in a text allow the model to learn semantic relationships.

Defining Pre-text Tasks in Self-supervised Learning

Pretext-based tasks in self-learning refer to artificial tasks created to enable a model to learn useful representations from unlabeled data. These tasks are designed so that by solving them, the model indirectly learns the structure and patterns in the data, which can then be applied to more specific, subsequent tasks with minimal labeled data. The basic idea is that the model generates its control signals by predicting missing parts, identifying connections, or filling in gaps in the data. Examples of pretext-based tasks include:

- Image-based tasks. In computer vision, a common pretext-based task is to mask parts of an image and have the model predict the missing areas. Another example is image rotation prediction, where the model must determine the rotated image's correct orientation.

- Text-based tasks. In natural language processing, a typical pretext-based task is predicting the next word in a sentence (speech modeling) or filling in missing words in a text (masked speech modeling).

- Temporal tasks. For time series data, a pre-text task can include predicting the next frame in a video or determining the correct order of shuffled segments in a sequence.

Avoiding Trivial Solutions

Trivial solutions are those where the model "cheats" or learns to solve the pre-text problem in a simplistic way that does not reflect the underlying structure or features of the data. These solutions typically occur when the model uses abbreviations or artifacts in the data that do not generalize well to real-world problems.

For example, in an image-based pre-text task where the model is asked to predict missing parts, a trivial solution might involve learning to copy the surrounding pixels or always assume a constant value rather than truly understanding the image's content. Similarly, in a text-based task such as masked speech modeling, the model may always learn to predict the most common word in the dataset or rely on superficial word co-occurrences, which do not reflect a deeper understanding of the language.

SSL in Natural Language Processing

Self-learning (SSL) in natural language processing (NLP) has revolutionized how models are trained, significantly reducing the need for labeled data while achieving impressive performance across various tasks. In NLP, SSL allows models to learn rich, transferable representations of language using vast amounts of unlabeled text data. The basic concept is to develop pre-text tasks that encourage the model to predict missing or related parts of the text, learning deep linguistic patterns, syntactic structures, and semantic relations.

One of the most popular approaches to SSL in NLP is masked language modeling (MLM), where certain words or tokens in a sentence are randomly masked, and the model has to predict the missing words. A well-known example of this approach is BERT (Bidirectional Encoder Representations from Transformers), which uses MLM to learn contextual relationships between words. Another common SSL technique in NLP is next-sentence prediction (NSP), where the model is trained to predict whether one sentence logically follows another, helping with sentence-level comprehension.

Architectural Innovations in Language Models

One of the most important breakthroughs in language model architecture is the transformer model, introduced in the article "Attention is All You Need" (2017). Transformers with attention mechanisms replace the recurrent layers that existed in previous models, such as LSTM (long short-term memory) and GRU (closed recurrent units). This innovation allows models to process entire input sequences in parallel, significantly speeding up training and improving performance, especially for tasks requiring long-term text dependencies. A self-awareness mechanism in transformers allows models to weigh the importance of each word relative to others in a sentence, improving their ability to capture contextual relationships and meaning.

More recent innovations include multi-trial learning capabilities, as seen in models such as GPT-3, where the model can perform tasks with very little task-specific training data. This shift to fewer labeled examples has opened up new possibilities for real-time, on-demand speech processing without requiring massive datasets for each new task. In addition, research on multimodal models such as CLIP (Contrastive Language-Image Pre-training) and DALL-E has integrated vision and language understanding into a single architecture, pushing the boundaries of what language models can achieve in different domains.

SSL Techniques in Computer Vision

One standard SSL method in vision is contrast learning, where the model is trained to distinguish between pairs of similar and dissimilar images. Methods such as SimCLR and MoCo (Momentum Contrast) use data augmentation to create different "views" of the same image, treating them as positive and other images as negative. The model learns to bring together representations of similar images in the embedded space and to push unrelated ones apart, encouraging it to focus on meaningful features such as shape, texture, or structure.

Another class of methods includes pre-text tasks based on image transformation. For example, the model may be asked to predict the rotation applied to an image (e.g., 0°, 90°, 180°, 270°) or to solve puzzles by rearranging shuffled image regions. These tasks force the model to develop a sense of spatial perception and object structure without the need for any manual markings.

Image and Video Recognition

In image recognition, models are typically trained to classify or detect objects within a single static frame. Convolutional Neural Networks (CNNs) have long been the dominant architecture for this task, excelling at learning spatial hierarchies in image data. However, newer transformer-based models, such as Vision Transformers (ViT), have begun to outperform ANNs in many tests, especially after being pre-trained using SSL techniques. SSL has allowed these models to learn from many unlabeled images by solving pre-text tasks such as image reconstruction, contrast learning, or transformation prediction, making them more adaptive and efficient concerning the data.

Video recognition, on the other hand, involves understanding not only spatial information but also temporal information - what happens over time. This adds complexity as the model must capture motion, sequence, and time. Traditional approaches have used 3D ANNs or dual-threaded architectures to handle appearance and motion features. Today, transformer-based models adapted for temporal data, such as TimeSformer and VideoMAE, show strong results. SSL in video recognition often involves tasks such as frame order prediction, reconstruction of masked video segments, or learning from temporal frame alignment in different modalities such as audio and text.

Advanced SSL Methods: Contrastive Learning and Beyond

Contrastive learning works on the principle of learning by comparison. It trains the model to bring similar (or "positive") pairs of data closer together in the embedding space while pushing different (or "negative") pairs apart. Positive pairs are typically created by applying different augmentations to the same image or sample, ensuring the model learns features invariant to changes such as cropping, color jitter, or flipping. Well-known frameworks such as SimCLR, MoCo, and InfoNCE have demonstrated how powerful this method can extract high-quality, label-free features.

However, contrastive learning has limitations, such as its reliance on large packet sizes or memory banks to provide sufficient negative examples. To overcome these, newer approaches such as BYOL (Bootstrap Your Own Latent) and SimSiam eliminate the need for negative pairs. They rely on learning from two augmented representations of the same input data, using asymmetric architectures or stop-gradient tricks to prevent collapse into trivial solutions.

Overcoming the High Cost of Data Labeling

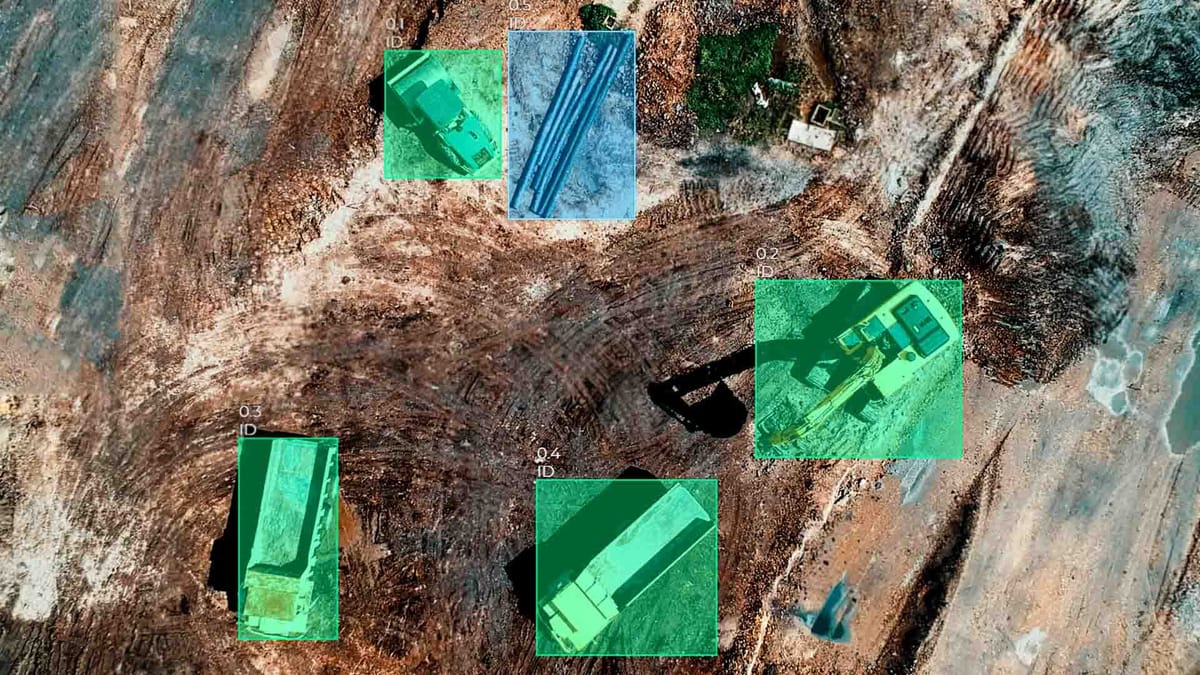

In many fields, collecting raw data is relatively easy, but obtaining accurate annotations is expensive, time-consuming, and often limited access to human experts. This creates a bottleneck for supervised model training, especially in specialized fields such as medical imaging, satellite analysis, or low-resource languages where expert annotations are expensive and rare.

Building Robust Training Pipelines for SSL Models

The first step is to develop effective data augmentation strategies, as most SSL techniques rely on transforming the same input data in different ways to create meaningful contrast or variation. For example, in vision tasks, this may include cropping, flipping, color distortion, or masking, while in speech, it may consist of token masking, reordering, or range removal. The pipeline needs to handle these transformations efficiently, often using libraries or custom modules that can perform additions on the fly during training.

Another key element is batch management and sampling. For comparative methods, it is essential to maintain a balance of positive and negative pairs, which often requires large batch sizes or memory banks to ensure sufficient diversity. Careful masking strategies and targeted sampling become more critical in non-contrast or reconstruction methods. As the model is trained, the pipeline can also include dynamic task scheduling or curriculum learning, gradually increasing the complexity of the tasks to help the model learn more efficiently.

Pre-training with Unlabeled Data

Pre-training aims to create generic representations that can be fine-tuned with less labeled data for specific downstream tasks. For example, a model that is pre-trained on millions of unlabeled images can be fine-tuned later to detect diseases in medical scans, or a model trained on books and web pages can be adapted to summarize legal documents. Because the model has already gained a broad understanding of visual or linguistic patterns, it can achieve high performance even with limited supervision.

The main advantage of pre-training with unlabeled data is scalability. Unlike supervised approaches, which depend on expensive and domain-specific labeling efforts, SSL methods can use vast amounts of freely available data - photos from the Internet, publicly available text, or sensor data from machines. This allows for creating powerful, domain-independent models that generalize well and adapt better to new tasks.

Best Practices for Optimizing SSL Performance

One of the key best practices is careful data augmentation, especially in vision-based SSL, where the variety and strength of transformations directly affect how well the model can learn invariant and robust features. The augmentations should be strong enough to prevent the model from depending on low-level signals but not so aggressive that they distort the underlying semantics of the input data. Similarly, introducing noise, masking, or reordering in speech and audio can help the model learn to handle uncertainty and context better.

Another critical area is hyperparameter tuning, which can be more delicate in SSL than in teacher-assisted learning. Parameters such as the learning rate, temperature in contrast loss, or the proportion of masked input data can significantly impact learning stability and convergence. Regular validation using proxy tasks, such as line probes or small labeled sets, helps detect collapse or under-tuning. In contrast and clustering methods, the batch size and embedding dimensionality should be tuned to balance representation diversity and computational efficiency.

Summary

Self-learning (SSL) is redefining how machine learning models are trained by allowing them to learn from vast amounts of unlabeled data. Traditionally, models required large labeled datasets that were expensive and time-consuming. SSL solves this problem by generating training signals from the data through tasks such as predicting masked inputs, matching augmented representations, or reconstructing corrupted data.

The success of SSL depends heavily on how the data is prepared and processed. From early challenges with labeled data requirements to modern innovations in task design with pre-text and contrast learning, SSL techniques have evolved into powerful frameworks applied in vision, speech, and video. Techniques such as masked modeling, clustering, and non-contrastive approaches have expanded its reach and improved performance.

FAQ

Why is data preparation critical in self-supervised learning?

Because the model learns from the structure of the data itself, how the data is prepared directly affects the quality of the learned representations.

What role do augmentations play in prepping data for SSL?

Augmentations create varied views of the same input, which is essential for contrastive and predictive tasks in SSL.

How do pre-text tasks influence data preparation?

They determine what information the model must learn to infer, guiding how data is masked, transformed, or split.

What types of transformations are commonly used in image-based SSL?

Cropping, flipping, color jittering, and masking frequently create diverse inputs from a single image.

How is the raw text prepared for SSL in NLP?

Text is often tokenized, and parts are masked or shuffled to create prediction tasks for the model.

Why is avoiding trivial solutions critical during data prep?

Poorly designed prep tasks can lead the model to exploit shortcuts or learn unhelpful patterns, limiting generalization.

How does unlabeled data need to be structured before SSL pre-training?

It should be cleaned, consistently formatted, and organized to support scalable augmentation and efficient sampling.

What's a common challenge when prepping data for SSL?

Balancing the complexity of pre-text tasks to be neither too easy nor too ambiguous for the model to learn useful features.

How does well-prepped data affect downstream performance?

Properly prepared data leads to stronger, more transferable representations, improving performance with minimal fine-tuning.