Precision and Recall for Evaluating Annotation Quality

In machine learning and data annotation, precision and recall are key metrics for understanding how an AI model performs. Together they describe the trade-off between two kinds of error: false positives and false negatives.

By learning the conceptual and mathematical foundations of precision and recall, you will see how they help improve the quality of data annotation. This knowledge lets you make informed decisions about both the performance of an AI model and the quality of its data.

Quick Take

- Precision and recall provide insight into the performance of an AI model.

- False positives and false negatives have different consequences in different applications.

- Understanding the formulas behind these metrics makes them easier to apply in practice.

- Precision and recall assess and improve the quality of data annotation in machine learning.

Defining Precision and Recall

Precision measures what proportion of predicted positives are correct. The formula is:

Precision = TP / (TP + FP)

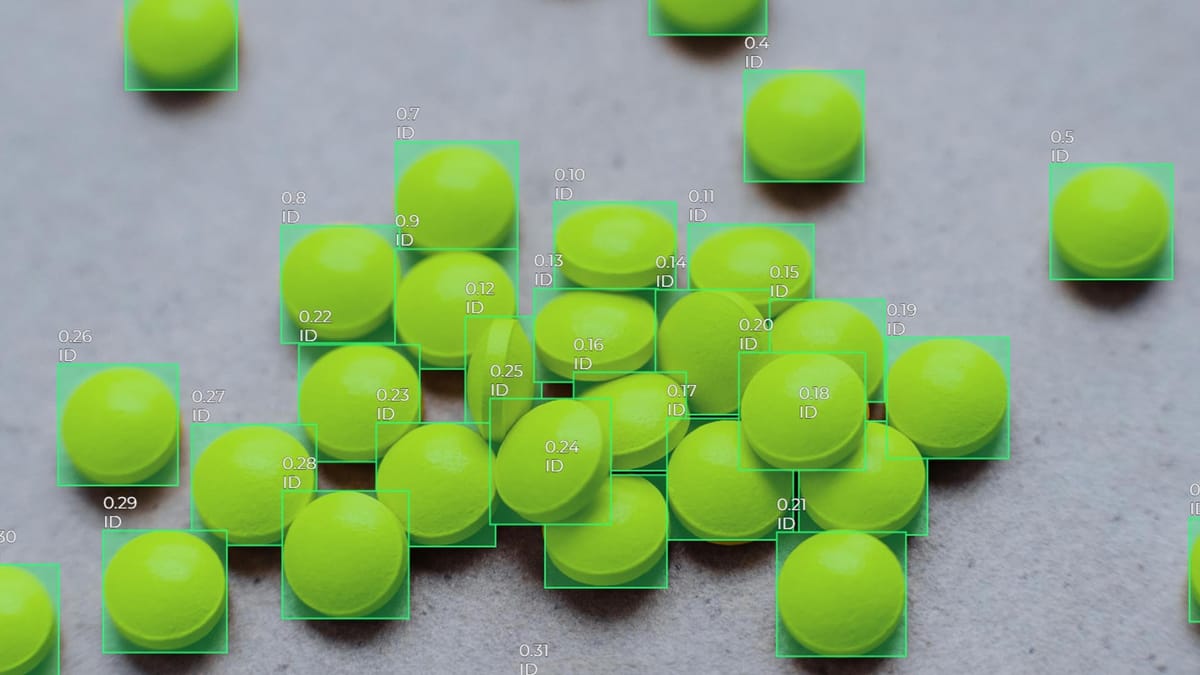

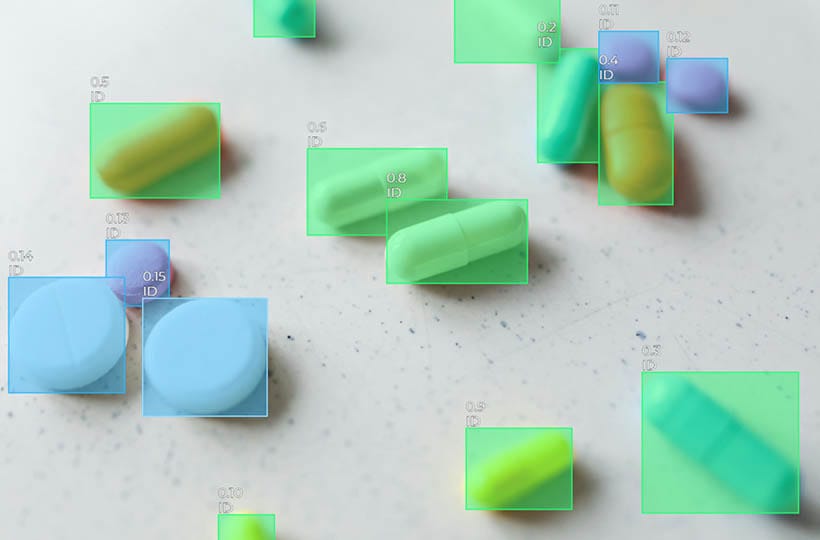

TP (True Positives) are objects correctly labeled as positive, and FP (False Positives) are objects labeled as positive that are actually negative. High precision means that there are few false positives among the annotated objects.

Recall measures what proportion of all positive objects were correctly found. The formula is:

Recall = TP / (TP + FN)

FN (False Negatives) are positive objects that were missed, that is, incorrectly labeled as negative. A high recall value means the system finds most of the positive objects.
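As a minimal sketch of the two formulas, the snippet below counts TP, FP, and FN by comparing predicted labels against reference labels. The function name `precision_recall` and the toy label lists are hypothetical, chosen only for illustration.

```python
def precision_recall(gold, predicted, positive=1):
    """Return (precision, recall) for one positive class.

    `gold` holds the reference labels, `predicted` the labels under review.
    Both names are illustrative, not taken from any library.
    """
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if g == positive and p == positive)
    fp = sum(1 for g, p in pairs if g != positive and p == positive)
    fn = sum(1 for g, p in pairs if g == positive and p != positive)
    # Guard against division by zero when nothing was predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Toy data: 4 real positives, 5 predicted positives, 3 of them correct.
gold_labels = [1, 1, 1, 1, 0, 0, 0, 0]
predicted_labels = [1, 1, 1, 0, 1, 1, 0, 0]

precision, recall = precision_recall(gold_labels, predicted_labels)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here TP = 3, FP = 2, and FN = 1, so precision is 3 / 5 and recall is 3 / 4.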

Improving one of these metrics often lowers the other, so balancing them is crucial for the performance of an AI model.
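One common way this balance shows up is through a decision threshold on a model's confidence score. The text does not name a mechanism, so the threshold, the scores, and the helper `precision_recall_at` below are assumptions made for this sketch.

```python
def precision_recall_at(scores, gold, threshold):
    """Label every score >= threshold as positive, then score the result."""
    predicted = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for g, p in zip(gold, predicted) if g == 1 and p == 1)
    fp = sum(1 for g, p in zip(gold, predicted) if g == 0 and p == 1)
    fn = sum(1 for g, p in zip(gold, predicted) if g == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical confidence scores and the true labels for the same objects.
scores = [0.95, 0.90, 0.80, 0.60, 0.55, 0.40, 0.30, 0.10]
gold = [1, 1, 0, 1, 1, 0, 0, 0]

for threshold in (0.5, 0.85):
    p, r = precision_recall_at(scores, gold, threshold)
    print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f}")
```

On this toy data the lower threshold finds every positive but admits one false positive, while the higher threshold removes the false positive and misses half of the positives.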

Understanding Annotation Quality in Data Science

The reliability of machine learning models depends on the quality of annotation. Inaccurate or inconsistent annotations reduce the performance of algorithms and distort the results. Metrics such as Precision, Recall, and F1-measure are used to assess the quality of annotations, typically by comparing them against a trusted reference set. Precision reflects how correct the annotations are, and Recall reflects how complete they are.

Consistency between annotators is another important factor, since disagreement in the labels carries over into the AI model's results. Automated quality checks and active learning help to avoid errors and improve the quality of annotations.
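Consistency between two annotators can be quantified in several ways. The sketch below uses Cohen's kappa, which is one widely used choice and an assumption here, since the text does not name a specific agreement measure. The function name and the sample labels are hypothetical.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Fraction of items on which the annotators agree.
    observed = sum(1 for a, b in zip(labels_a, labels_b) if a == b) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label
    return (observed - expected) / (1 - expected)


annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "dog"]
annotator_b = ["cat", "cat", "dog", "cat", "cat", "dog", "dog", "dog"]

kappa = cohen_kappa(annotator_a, annotator_b)
print(f"kappa={kappa:.2f}")
```

The annotators agree on 6 of 8 items (0.75), but with two evenly used labels half of that agreement is expected by chance, so kappa comes out at 0.5.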

Key Metrics in Annotation Evaluation

Precision, Recall, and F1-measure are the key labeling metrics for assessing annotation quality. Which of them matters most depends on the application.

Precision and Recall in Different Areas

- Medicine and healthcare. High precision is needed when diagnosing rare diseases, to reduce the number of false positives. High recall is needed when screening for dangerous diseases, so that real cases are not missed.

- Security and fraud detection. High precision means that the system rarely flags ordinary actions as threats. High recall means that few fraud cases slip through, which matters when missing even one case leads to losses.

- Search engines and information retrieval. Precision ensures that the results shown to the user are relevant. Recall ensures that as many of the relevant pages as possible are returned.

- Machine learning and pattern recognition. High precision is needed in tasks where a wrong detection is critical, such as autonomous driving. High recall is needed in biometric systems, such as facial recognition.

Understanding the Confusion Matrix

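A confusion matrix is a table used to evaluate the performance of an AI classification model. Precision, Recall, F1-measure, and overall Accuracy are all calculated from it. For a binary task, it contains four main values:

- True Positives (TP). Positive objects correctly labeled as positive.

- False Positives (FP). Negative objects incorrectly labeled as positive.

- False Negatives (FN). Positive objects incorrectly labeled as negative.

- True Negatives (TN). Negative objects correctly labeled as negative.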
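For a binary task, the four cells of a confusion matrix (TP, FP, FN, TN) can be tallied in a single pass over the labels. This is a minimal sketch: `confusion_counts` and the sample data are hypothetical names and values used only for illustration.

```python
def confusion_counts(gold, predicted, positive=1):
    """Tally the four cells of a binary confusion matrix."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for g, p in zip(gold, predicted):
        if p == positive:
            counts["TP" if g == positive else "FP"] += 1
        else:
            counts["FN" if g == positive else "TN"] += 1
    return counts


gold = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

counts = confusion_counts(gold, predicted)
# Accuracy uses all four cells; precision and recall ignore TN.
accuracy = (counts["TP"] + counts["TN"]) / len(gold)
precision = counts["TP"] / (counts["TP"] + counts["FP"])
recall = counts["TP"] / (counts["TP"] + counts["FN"])
print(counts, accuracy, precision, recall)
```

Every metric in this article can be derived from these four numbers, which is why the matrix is usually computed first.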

Handling Imbalanced Datasets in Annotation Tasks

Imbalanced datasets occur when one class has far more examples than another. This biases the AI model towards the prevalent class. There are several methods to deal with imbalanced data:

- Resampling. Oversampling adds examples of the smaller class, by duplicating existing ones or generating new ones, to balance the classes. Undersampling removes examples of the larger class to balance the distribution.

- Class Weights. Changing the class weights in the loss function compensates for the dominant class's influence on the AI model's training process.

- Data Augmentation. Creating modified copies of examples from the smaller class (shifted, rotated, mirrored) increases its size in the dataset.

- Generative Models (GANs, VAEs). Synthetic examples are generated to balance the dataset using generative adversarial networks (GANs) and variational autoencoders (VAEs).

- Evaluation Metrics. F1-measure, AUC-ROC, and balanced accuracy evaluate the quality of an AI model on imbalanced datasets more reliably than plain accuracy.
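Three of the methods above can be sketched in plain Python: random oversampling, inverse-frequency class weights, and balanced accuracy. All function names and the toy "ok"/"defect" data are hypothetical. The weight formula n / (k * count) is one common heuristic, assumed here because the text does not prescribe a formula.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate random minority examples until every class matches the largest."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    out_samples, out_labels = [], []
    for label, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        out_samples.extend(items + extra)
        out_labels.extend([label] * target)
    return out_samples, out_labels


def inverse_frequency_weights(labels):
    """Weight each class by n / (k * count), so rare classes weigh more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * count) for label, count in counts.items()}


def balanced_accuracy(gold, predicted):
    """Average of per-class recall, so each class counts equally."""
    recalls = []
    for cls in set(gold):
        total = sum(1 for g in gold if g == cls)
        hits = sum(1 for g, p in zip(gold, predicted) if g == cls and p == cls)
        recalls.append(hits / total)
    return sum(recalls) / len(recalls)


labels = ["ok"] * 8 + ["defect"] * 2
samples = list(range(10))

balanced_samples, balanced_labels = random_oversample(samples, labels)
weights = inverse_frequency_weights(labels)

# A model that always answers "ok" looks good on accuracy but not here.
always_ok = ["ok"] * len(labels)
plain_accuracy = sum(1 for g, p in zip(labels, always_ok) if g == p) / len(labels)
bal_accuracy = balanced_accuracy(labels, always_ok)
print(Counter(balanced_labels), weights, plain_accuracy, bal_accuracy)
```

On this toy data, a model that always predicts the majority class reaches 0.8 plain accuracy but only 0.5 balanced accuracy, which is why the choice of evaluation metric matters on imbalanced datasets.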

Summary

Precision and recall are important metrics for evaluating the quality of annotation. They can also be used to assess the performance of AI models, which is important in fields such as medical diagnostics and environmental monitoring. Combined metrics such as the F1 score give a more balanced assessment, especially on imbalanced datasets.

FAQ

What is the difference between precision and recall in evaluating machine learning models?

Precision measures what proportion of predicted positives are correct. Recall measures what proportion of all real positives an AI model correctly recognizes.

What role does a confusion matrix play in evaluating classification performance?

A confusion matrix helps to estimate the errors of an AI model, detect imbalances between classes, and calculate key metrics.

How does class imbalance affect the accuracy of an AI model?

Class imbalance causes a machine learning model to favor the more common class while ignoring the less represented one. This reduces the model’s ability to correctly recognize rare cases.

What is an F1 score?

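The F1 score is the harmonic mean of precision and recall: F1 = 2 × Precision × Recall / (Precision + Recall). It is high only when both metrics are high, and it is used to assess the quality of an AI model with a single number.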
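The F1 score is conventionally computed as the harmonic mean of precision and recall. The short sketch below contrasts it with a simple arithmetic average. The input values are made up for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical model: very precise, but it misses most positives.
precision, recall = 0.9, 0.1

arithmetic_mean = (precision + recall) / 2
f1 = f1_score(precision, recall)
print(f"arithmetic mean={arithmetic_mean:.2f} F1={f1:.2f}")
```

The arithmetic mean (0.50) hides the weak recall, while the F1 score (0.18) is pulled towards the lower of the two values.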

How does the choice of evaluation metrics affect the interpretation of the model’s performance?

Using different metrics allows for a deeper understanding of the strengths and weaknesses of an AI model in a specific context.