Managing Distributed Annotation at Scale: Tools and Techniques

As AI applications grow more sophisticated, the demand for high-quality, accurately labeled datasets has never been greater. Scalable data labeling tools are essential for managing distributed annotation workflows and, in turn, for building machine learning models that are accurate and reliable. Whether you are working with image, video, or audio data, the right approach to annotation can make all the difference.

Managing a distributed annotation pipeline requires more than assigning tasks to a global workforce. It involves coordination across time zones, consistent quality assurance, and robust version control. Tools that offer workflow customization, role-based access, and real-time monitoring can significantly streamline the process. By integrating these features, teams can reduce annotation drift, improve turnaround time, and maintain consistent labeling standards across different data types and contributors.

Key Takeaways

- Scalable data labeling tools are crucial for managing distributed annotation workflows.

- High-quality labeled datasets are essential for accurate and reliable machine learning models.

- Advanced annotation software and data management strategies can significantly improve efficiency.

- Innovative approaches to distributed annotation are key to streamlining workflows and achieving project goals.

Understanding the Data Labeling Process

The process starts with defining the type of data annotators will work with and the type of annotations the machine learning model requires. Instructions and task definitions must be clear so that annotators label consistently and make fewer errors. Poorly defined instructions lead to confusion and inconsistent labels, which in turn produce a less accurate model.
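
One way to make a task definition concrete is to encode it as a small spec that every submitted annotation is checked against. The sketch below is a hypothetical illustration, not any particular tool's API: `TaskSpec`, `validate`, and the field names are assumptions, and a real spec would also cover geometry, attributes, and edge-case rules from the guidelines.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TaskSpec:
    """A hypothetical task definition: what is being labeled and how."""
    data_type: str             # e.g. "image", "video", "audio"
    annotation_type: str       # e.g. "bounding_box", "segmentation"
    allowed_labels: frozenset  # the label set defined in the guidelines

def validate(spec, annotation):
    """Return a list of problems with one submitted annotation (empty list = OK)."""
    problems = []
    if annotation.get("annotation_type") != spec.annotation_type:
        problems.append(
            f"expected {spec.annotation_type!r}, got {annotation.get('annotation_type')!r}"
        )
    if annotation.get("label") not in spec.allowed_labels:
        problems.append(f"label {annotation.get('label')!r} is not defined in the guidelines")
    return problems

spec = TaskSpec("image", "bounding_box", frozenset({"car", "pedestrian"}))
print(validate(spec, {"annotation_type": "bounding_box", "label": "car"}))  # []
print(len(validate(spec, {"annotation_type": "polygon", "label": "bike"})))  # 2
```

Rejecting out-of-spec labels at submission time catches guideline misunderstandings early, before they spread through a large batch.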

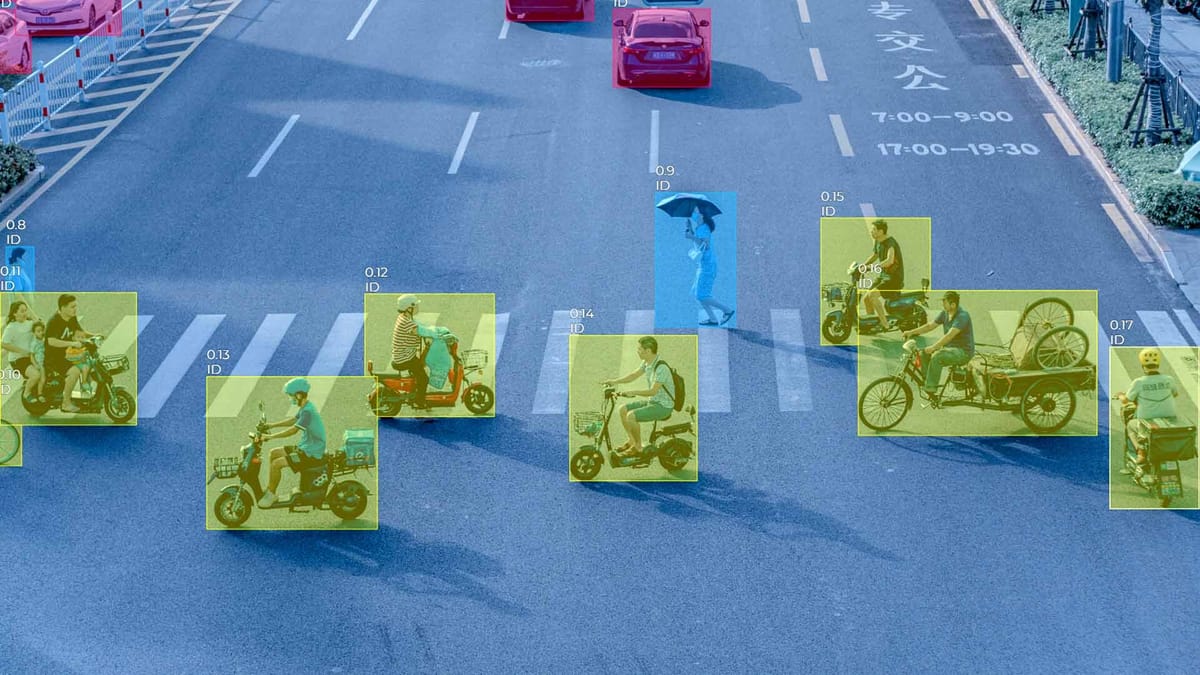

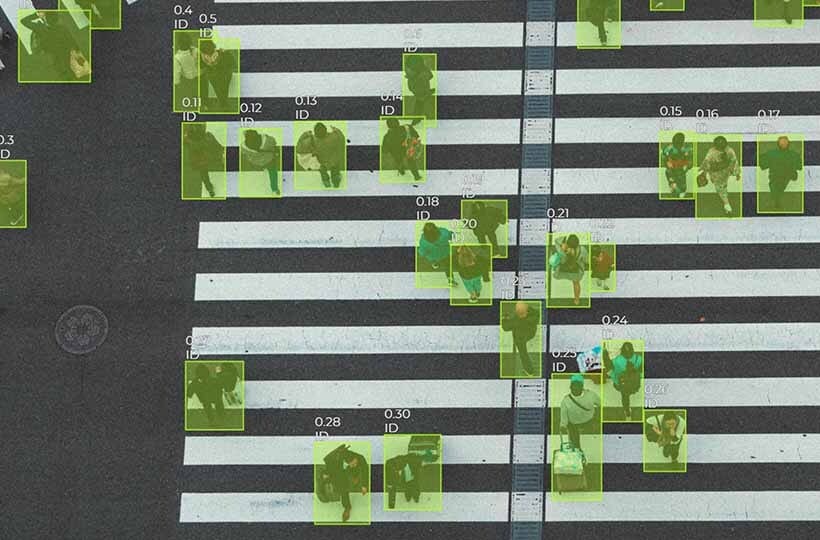

Annotation types

Manual versus Automated Approaches

Manual annotation relies entirely on human annotators to label the data. It offers high accuracy, contextual understanding, and the ability to handle edge cases. It is often used in the early stages of model development, when accuracy is paramount and datasets are relatively small or specialized. However, manual labeling is time-consuming, labor-intensive, and expensive, especially as data volumes grow.

Automated annotation, on the other hand, uses machine learning models or rule-based systems to create labels with minimal human intervention. It is especially useful for labeling large datasets quickly or for pre-labeling data that humans later correct and refine. Although automated methods are faster and more cost-effective, they can struggle with complex or ambiguous data and usually require human oversight to catch errors and improve the labeling model's predictions.
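
A common way to combine the two approaches is to route model pre-labels by confidence: high-confidence predictions are accepted, and the rest go to a human. This is a minimal sketch assuming the labeling model emits one confidence score per item; `PreLabel`, `route_prelabels`, and the 0.9 threshold are hypothetical choices, not a standard.

```python
from dataclasses import dataclass

@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model score for its predicted label, assumed to be in [0, 1]

def route_prelabels(prelabels, accept_threshold=0.9):
    """Split model pre-labels into an auto-accept queue and a human-review queue.

    accept_threshold is a hypothetical default; it would normally be tuned
    against a sample of human-verified labels.
    """
    auto_accepted, needs_review = [], []
    for p in prelabels:
        # Low-confidence predictions are where automated labeling tends to fail,
        # so they go to a human annotator for correction.
        if p.confidence >= accept_threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    return auto_accepted, needs_review

batch = [
    PreLabel("img-001", "cat", 0.97),
    PreLabel("img-002", "dog", 0.62),
    PreLabel("img-003", "cat", 0.91),
]
accepted, review = route_prelabels(batch)
print([p.item_id for p in accepted])  # ['img-001', 'img-003']
print([p.item_id for p in review])    # ['img-002']
```

Confidence scores can be miscalibrated, so even auto-accepted labels are worth spot-checking rather than trusting outright.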

Adapting to Complex ML Pipelines

Adapting to complex machine learning pipelines means aligning your data labeling strategy with the broader system for model development, deployment, and iteration. In these environments, data passes through several stages (ingestion, preprocessing, annotation, validation, and training), each of which can affect model performance. The annotation process must be flexible enough to integrate seamlessly into this cycle, supporting version control, traceability, and scalability.

As models evolve, labeling needs may change. The initial phase may require broad categorization, while later iterations require detailed distinctions or new types of annotations altogether. Annotation tools and workflows should be adaptable, allowing for schema changes and iterative feedback. Integration with tools for data management, model monitoring, and active learning becomes crucial, enabling continuous improvement throughout the pipeline. Adaptation ensures that the labeled data remains relevant and actionable at every stage of model development.
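
Schema changes are where versioning and traceability pay off. The sketch below assumes each stored annotation records the schema version it was created under; the class names (`person`, `pedestrian`, `vehicle`, `car`, `truck`) and the v1-to-v2 mapping are hypothetical. Labels that map one-to-one are migrated automatically, while labels whose meaning was split are sent back for re-annotation.

```python
from dataclasses import dataclass, replace

# Hypothetical schema change: v2 renames "person" to "pedestrian" and splits
# the broad v1 class "vehicle" into "car" and "truck".
RENAMES_V1_TO_V2 = {"person": "pedestrian"}
SPLIT_IN_V2 = {"vehicle"}

@dataclass(frozen=True)
class Annotation:
    item_id: str
    label: str
    schema_version: int

def migrate_v1_to_v2(annotations):
    """Return (annotations under schema v2, item_ids that need re-annotation)."""
    migrated, requeue = [], []
    for ann in annotations:
        if ann.schema_version != 1:
            migrated.append(ann)  # already on the current schema
        elif ann.label in SPLIT_IN_V2:
            requeue.append(ann.item_id)  # "vehicle" could be a car or a truck; a human must decide
        else:
            new_label = RENAMES_V1_TO_V2.get(ann.label, ann.label)
            migrated.append(replace(ann, label=new_label, schema_version=2))
    return migrated, requeue

anns = [
    Annotation("a", "person", 1),
    Annotation("b", "vehicle", 1),
    Annotation("c", "car", 2),
]
migrated, requeue = migrate_v1_to_v2(anns)
print(requeue)                      # ['b']
print([m.label for m in migrated])  # ['pedestrian', 'car']
```

Keeping the original records immutable (note `frozen=True` and `replace`) means the pre-migration labels can still be stored alongside the new ones for auditing.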

Best Practices in Distributed Data Labeling

Best practices for distributed data labeling focus on maintaining consistency, quality, and efficiency across a geographically distributed workforce. One of the most important steps is establishing clear, detailed annotation guidelines. These should include definitions, examples, edge cases, and instructions on how to handle ambiguity. Training annotators before they begin establishes a common understanding from the outset and reduces variability in labeling.

Regular quality control can include spot checks, consensus reviews, and feedback loops in which reviewers correct errors and explain them to annotators. A tiered workflow with dedicated roles for annotation, review, and quality control helps maintain high standards. Communication is also key: collaboration tools for sharing updates, answering questions, and coordinating teams across time zones keep the labeling process smooth and efficient.
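
A consensus review can be as simple as a majority vote over several annotators' labels for the same item, with low-agreement items escalated to a reviewer. This is a minimal sketch; `consensus_label` and the two-thirds agreement threshold are hypothetical choices rather than a fixed rule.

```python
from collections import Counter

def consensus_label(labels, min_agreement=2 / 3):
    """Majority vote over labels from several annotators for one item.

    Returns (label, agreement). label is None when no single label reaches
    min_agreement or when the top labels are tied, signalling that the item
    should go to a reviewer instead of being auto-resolved.
    """
    if not labels:
        raise ValueError("need at least one label")
    ranked = Counter(labels).most_common(2)
    top_label, top_count = ranked[0]
    agreement = top_count / len(labels)
    tied = len(ranked) > 1 and ranked[1][1] == top_count
    if tied or agreement < min_agreement:
        return None, agreement
    return top_label, agreement

print(consensus_label(["cat", "cat", "dog"])[0])   # cat
print(consensus_label(["cat", "dog", "bird"])[0])  # None
```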

Pre-labeling with models or scripts can speed things up, but human review of those pre-labels is crucial to ensure accuracy. Analytics dashboards can track performance metrics across annotators, identify bottlenecks, and highlight areas for improvement. With these elements in place, distributed labeling operations can scale efficiently without sacrificing quality.
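
The numbers behind such a dashboard can be computed from a plain task log. The sketch below assumes a hypothetical log format with `annotator`, `seconds`, and `corrected` fields (the last being True when a reviewer changed the label); a real platform's export will differ.

```python
from collections import defaultdict
from statistics import median

def annotator_metrics(task_log):
    """Aggregate per-annotator throughput, speed, and reviewer correction rate.

    task_log: iterable of dicts with the hypothetical keys
    'annotator', 'seconds', and 'corrected'.
    """
    per_annotator = defaultdict(list)
    for record in task_log:
        per_annotator[record["annotator"]].append(record)
    metrics = {}
    for name, records in per_annotator.items():
        metrics[name] = {
            "tasks": len(records),
            "median_seconds": median([r["seconds"] for r in records]),
            # Share of this annotator's tasks that a reviewer had to fix.
            "correction_rate": sum(r["corrected"] for r in records) / len(records),
        }
    return metrics

log = [
    {"annotator": "ana", "seconds": 30, "corrected": False},
    {"annotator": "ana", "seconds": 50, "corrected": True},
    {"annotator": "ben", "seconds": 20, "corrected": False},
]
print(annotator_metrics(log)["ana"])
# {'tasks': 2, 'median_seconds': 40.0, 'correction_rate': 0.5}
```

A high correction rate flags an annotator who may need extra support; an unusually fast median time alongside it can indicate rushed work.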

Project and Quality Management in Annotation

Managing annotation at the project level means beginning with a detailed plan that outlines the scope of work, including the types of data to be labeled, the specific annotation formats required, the intended use of the dataset, and a realistic timeline. Project leads must account for team size, tool capabilities, expected throughput, and the complexity of the labeling task itself.
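
A first-pass timeline is simple arithmetic: total labeling work divided by the team's daily capacity. The figures in this sketch (project size, team size, throughput, and a 10% rework rate for items sent back by QA) are hypothetical and only illustrate the calculation.

```python
import math

def estimate_working_days(num_items, annotators, items_per_hour, hours_per_day, rework_rate=0.0):
    """Rough timeline: total labeling work divided by team capacity per day.

    rework_rate is the assumed fraction of items that QA sends back for relabeling.
    """
    total_work = num_items * (1 + rework_rate)
    team_capacity_per_day = annotators * items_per_hour * hours_per_day
    return math.ceil(total_work / team_capacity_per_day)

# Hypothetical project: 100,000 images, 20 annotators at 60 items/hour,
# 6 productive hours/day, and 10% of items relabeled after QA.
print(estimate_working_days(100_000, 20, 60, 6, rework_rate=0.10))  # 16
```

Here the team handles 20 × 60 × 6 = 7,200 items per day, and 110,000 items of total work take about 15.3 days, rounded up to 16. Without rework the same project would take 14 days, which shows how much QA overhead matters when setting a realistic deadline.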

Distributed annotation teams benefit from tools that provide clear task visibility, role-based access, and real-time status updates. These allow project managers to quickly identify bottlenecks, underperforming areas, or annotators who need additional support. Agile management techniques, such as short sprints or weekly reviews, can also be applied to annotation work, helping teams remain flexible and responsive to shifting priorities or changes in model requirements.

Quality management runs alongside project coordination and functions as a continual process rather than a one-time check. At its core are clear, unambiguous annotation guidelines that are refined over time. These documents should evolve with the dataset, incorporating new edge cases or clarifications as they emerge. Annotation quality is typically measured using a combination of metrics, such as inter-annotator agreement (to check consistency across different contributors), accuracy against gold-standard samples, and the results of expert audits.
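
For two annotators labeling the same items, Cohen's kappa is one common inter-annotator agreement measure: it corrects raw agreement for the agreement expected by chance, kappa = (p_o - p_e) / (1 - p_e). The text does not prescribe a specific metric; Fleiss' kappa or Krippendorff's alpha are alternatives for more than two annotators. The sketch also scores an annotator against gold-standard items; the function names and dict-based inputs are assumptions.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label for every item; kappa is
        # undefined (0/0), and this sketch reports it as perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)

def gold_accuracy(annotations, gold):
    """Fraction of gold-standard items the annotator labeled correctly.

    Both arguments map item_id -> label; only gold items the annotator
    actually labeled are scored.
    """
    scored = [item for item in gold if item in annotations]
    if not scored:
        raise ValueError("annotator has not labeled any gold items")
    return sum(annotations[item] == gold[item] for item in scored) / len(scored)

a = ["cat", "cat", "dog", "dog"]
b = ["cat", "dog", "dog", "dog"]
print(cohens_kappa(a, b))  # 0.5 (observed 0.75, chance 0.5)
print(gold_accuracy({"x1": "cat", "x2": "cat", "x3": "dog"}, {"x1": "cat", "x2": "dog"}))  # 0.5
```

Raw agreement here is 75%, but because both annotators use "dog" often, half of that agreement would be expected by chance, so kappa is only 0.5.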

A layered quality control system is often used to maintain high standards. The first layer might involve peer reviews or built-in validation checks within the annotation tool. The second layer could include dedicated QA personnel who re-annotate or spot-check a subset of completed tasks. In more complex projects, a third layer might involve domain experts providing high-level guidance or resolving annotation disputes.
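
The second and third layers can be sketched as two small steps: draw a reproducible random sample of completed tasks for QA re-annotation, then escalate every item where QA disagrees with the original annotator. The function names, the 10% sampling rate, and the fixed seed are hypothetical.

```python
import random

def select_spot_checks(task_ids, rate, seed=0):
    """Layer 2: draw a reproducible random sample of completed tasks for QA re-annotation."""
    task_ids = list(task_ids)
    if not task_ids:
        return []
    k = min(len(task_ids), max(1, round(len(task_ids) * rate)))
    rng = random.Random(seed)  # fixed seed so the audit sample can be reproduced later
    return sorted(rng.sample(task_ids, k))

def escalate_disputes(annotator_labels, qa_labels):
    """Layer 3: items where QA disagrees with the original annotator go to a domain expert."""
    return sorted(item for item, qa in qa_labels.items() if annotator_labels.get(item) != qa)

completed = [f"task-{i:03d}" for i in range(50)]
sample = select_spot_checks(completed, rate=0.10)
print(len(sample))  # 5
print(escalate_disputes({"task-001": "cat", "task-002": "dog"},
                        {"task-001": "cat", "task-002": "cat"}))  # ['task-002']
```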

Integrating Active Learning in Annotation Workflows

Active learning is a strategy that improves annotation efficiency by using the model itself to prioritize which data points should be labeled next. Instead of indiscriminately labeling large datasets, active learning identifies the most informative samples: those the model is least confident about, or those most likely to improve its performance once labeled. By focusing effort where it has the most impact, teams can train high-performing models with fewer annotated examples.

To integrate active learning into an annotation workflow, you need a feedback loop between the annotation platform and the model training process. After each training round, the model scores the unlabeled data and flags samples for human labeling based on uncertainty measures such as entropy, margin sampling, or disagreement among the outputs of multiple models.
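
The three uncertainty measures mentioned above can each be computed in a few lines. This sketch assumes the model outputs a probability per class for each item, and, for the disagreement measure, that a small committee of models each votes a label (vote entropy). The function names are illustrative.

```python
import math
from collections import Counter

def entropy(probs):
    """Predictive entropy; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def margin(probs):
    """Gap between the two most likely classes; smaller means less certain."""
    if len(probs) < 2:
        raise ValueError("margin needs at least two classes")
    first, second = sorted(probs, reverse=True)[:2]
    return first - second

def vote_entropy(votes):
    """Disagreement among a committee of models' predicted labels; higher means more disagreement."""
    n = len(votes)
    return -sum((c / n) * math.log(c / n) for c in Counter(votes).values())

print(round(entropy([0.5, 0.5]), 3))                # 0.693
print(round(entropy([0.9, 0.1]), 3))                # 0.325
print(round(margin([0.6, 0.3, 0.1]), 3))            # 0.3
print(round(vote_entropy(["cat", "dog", "cat"]), 3))  # 0.637
```

Note the opposite directions: items are prioritized by high entropy or vote entropy, but by low margin.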

For the workflow to function smoothly, annotation tools must support dynamic task assignment and seamless data integration. It's also essential to establish review checkpoints, where model performance is evaluated after each round of active learning to ensure it's improving in the desired direction. Over time, this method reduces labeling costs and produces datasets that are better aligned with the model's actual decision boundaries.
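
One round of this loop can be sketched as two pieces: choose the next batch for annotators by uncertainty, then apply a review checkpoint once the retrained model has been evaluated. This is a minimal sketch assuming entropy-based selection and a single validation score per round; training and evaluation are left out, and `select_batch` and `passes_checkpoint` are hypothetical names.

```python
import heapq
import math

def select_batch(pool_probs, batch_size):
    """Pick the batch_size unlabeled items with the highest predictive entropy.

    pool_probs maps item_id -> the current model's class probabilities.
    """
    def entropy(probs):
        return -sum(p * math.log(p) for p in probs if p > 0)
    return heapq.nlargest(batch_size, pool_probs, key=lambda item: entropy(pool_probs[item]))

def passes_checkpoint(history, new_score, min_gain=0.0):
    """Review checkpoint after a labeling round.

    Records the new validation score and returns True only if it beats the
    best earlier score by more than min_gain; False is a cue for humans to
    inspect the round before continuing.
    """
    improved = not history or new_score > max(history) + min_gain
    history.append(new_score)
    return improved

pool = {
    "a": [0.98, 0.02],
    "b": [0.55, 0.45],
    "c": [0.70, 0.30],
    "d": [0.50, 0.50],
}
print(select_batch(pool, 2))  # ['d', 'b']

scores = []
print([passes_checkpoint(scores, s) for s in [0.71, 0.74, 0.73]])  # [True, True, False]
```

Selecting purely by uncertainty can pick many near-duplicate items; in practice teams often mix in some random or diversity-based samples, which fits naturally into the same `select_batch` step.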

Summary

Efficient data annotation is foundational to building reliable machine learning systems, and as projects scale, thoughtful design in both workflows and tools becomes critical. Whether using bounding boxes, segmentation, or custom labels, the choice of annotation type must align with the model's goals. Manual labeling offers high accuracy but is resource-intensive, while automated and semi-automated approaches provide speed and scalability, especially when supported by human oversight.

Managing distributed annotation efforts requires strong project and quality management practices. Clear guidelines, layered QA processes, transparent communication, and performance monitoring ensure consistency and reduce error rates across the team. Integrating these practices with complex ML pipelines means designing annotation workflows that are flexible, traceable, and able to evolve alongside model requirements.

FAQ

What is the importance of high-quality training data in machine learning?

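Models learn patterns directly from their labels, so noisy or inconsistent labels are learned along with the real signal. High-quality training data is therefore crucial for ensuring the accuracy and reliability of machine learning models.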

How does the data labeling process impact the performance of AI models?

The data labeling process directly affects AI model performance. Accurate and consistent labels enable models to learn patterns effectively, improving prediction accuracy and system reliability.

What role does expertise play in the data labeling process?

Expertise is critical for complex or domain-specific data. Annotators with specialized knowledge ensure that labels are accurate and contextually appropriate, which is vital for training reliable AI models.

How do active learning techniques improve data labeling efficiency?

Active learning selects the most informative data samples for human annotation, reducing the time and effort required to train accurate models.