Legal Tech: Annotating Contracts for Clause Extraction

The legal industry has traditionally been one of the most conservative regarding technological innovations, but today it faces a data overload crisis, too. Lawyers manually review hundreds of pages of complex contracts, especially during auditing. This not only consumes a lot of precious time but also increases risks. The human factor often leads to errors that can have catastrophic financial consequences for the client.

Quick Take:

- AI-powered Clause Extraction reduces manual review time, minimizing human error and accelerating due diligence.

- NLP models convert unstructured legal text into structured, analyzable data using NER, relation extraction, and text classification.

- Annotation requires mandatory domain expertise to correctly interpret ambiguities and classify complex clauses like Indemnity or force majeure.

- High-quality labeling is crucial for risk management, enabling the automatic detection of atypical or non-compliant boilerplate clauses.

Legal Tech is attempting to radically change this situation using Artificial Intelligence and Natural Language Processing models. The primary catalyst for this process is the extraction of provisions using artificial intelligence. Such models are trained on vast arrays of annotated legal documents.

High-quality annotated data allows Legal Tech instruments to automate most of lawyers' routine work. Thus, instead of mechanical proofreading, lawyers can focus on strategic analysis.

AI in Legal Contract Review

Legal teams regularly deal with thousands of multi-page contracts, especially during mergers and acquisitions or large-scale audits. Hours are spent on manual proofreading and comparing so-called boilerplate clauses:

- Contract duration terms.

- Terms of penalties and liability.

- Contract termination conditions.

- Confidentiality clauses.

In addition to its high cost, this monotonous work carries a significant risk of human error, which can lead to the omission of important terms. For example, unfavorable renewal terms or unclear indemnity provisions.

NLP as a Risk Management Tool

Natural Language Processing technology allows for the automation of contract analysis, transforming legal auditing into a rapid analytical function. It instantly detects anomalous or atypical provisions, such as inappropriate governing law or the absence of force majeure clauses in principal business agreements.

NLP provides proactive risk management in several areas:

- Automatic Detection of Atypical Provisions. NLP models trained on thousands of standard contracts can instantly identify provisions that deviate from internal or industry standards. The system automatically highlights an atypical clause in red and compares it with the "ideal" internal template, signaling to the lawyer the need for immediate review and negotiation.

- Ensuring Compliance and Regulatory Adherence. Companies must constantly check millions of documents for compliance with new laws and regulations. The model quickly scans the contract archive using Clause Extraction to pull out only the relevant sections. This allows the compliance team to determine which contracts require immediate updating in a significantly shorter time frame.

- Identification of Problematic Conditions. Text classification and attribute extraction models evaluate criticality and group contracts by risk level, prioritizing those with the highest potential threat.

- Outcome Prediction. The model analyzes how courts have interpreted similar force majeure clauses or indemnity terms in thousands of court decisions, providing lawyers with a statistically substantiated forecast.

Thus, NLP transforms the legal department from a routine operations center into a strategic risk management center.

The core of this process is Clause Extraction. This function transforms the unstructured contract text into data ready for analysis. AI models automatically extract critical terms, classify them, and enter them into a database. This allows for increased accuracy and reduced time spent on legal audits.

Technical Foundation of Contract Annotation

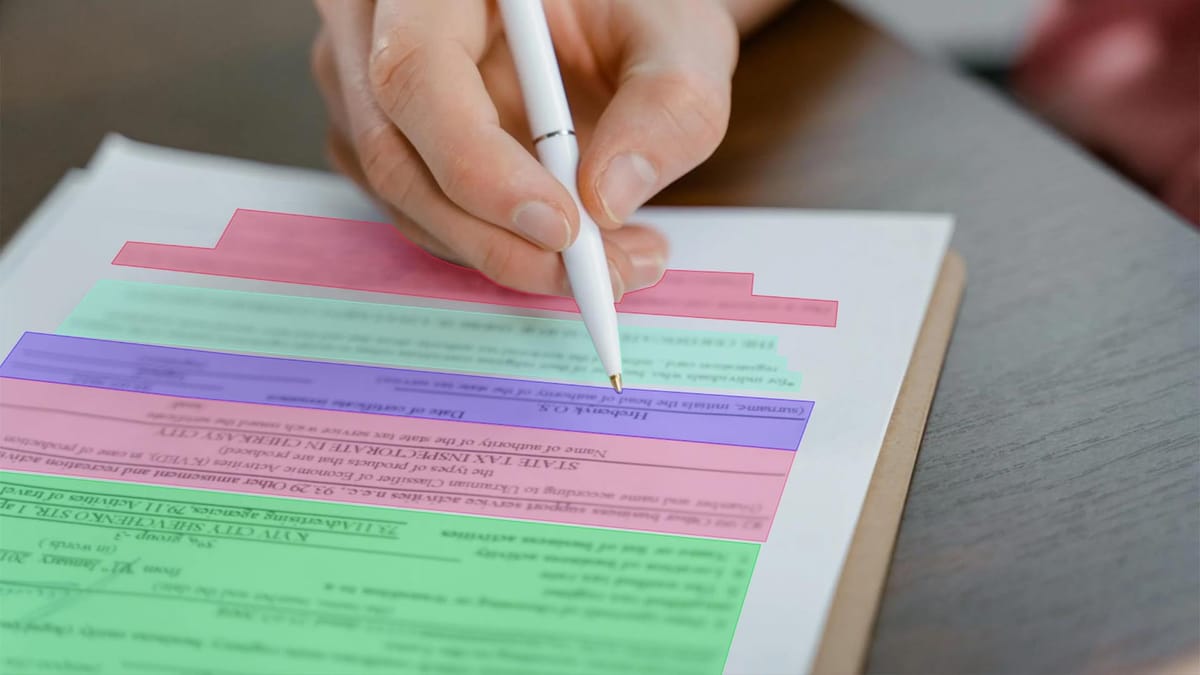

Contract annotation is the stage that converts complex, unstructured legal text into structured training data that the AI model understands. The essence of annotation in Legal Tech is to mark legally significant entities and relationships directly within the contract text. This is meant to train Natural Language Processing models to identify, classify, and extract these elements in new, unlabeled documents.

It creates the ground truth necessary for automated auditing and risk management, teaching AI models:

- What is a certain provision?

- Where is it located in the text?

- What are its types and criticality?

Legal annotation uses several specialized techniques:

- Named Entity Recognition is the basic level of labeling, which consists of highlighting and classifying key legal terms.

- Relation Extraction is a form of annotation that adds context by marking the functional links and dependencies between the highlighted entities.

- Text Classification is a technique applied to assign a label or category at the provision level or at the document level.

Together, these three techniques ensure a deep and structured understanding of legal documents, which is necessary for successful Clause Extraction.

Types of Legal Clauses and Labeling Structure

For effective Clause Extraction, the AI model must be trained on specific examples where each provision is accurately highlighted and classified. This converts the text into a structured "key-value" pair for the algorithm. The labeling structure is usually represented in a certain way:

- Span Highlight is the continuous highlighting of a key phrase or entire sentence that contains the provision, defining for the model where the clause is located.

- Tag is the main classification label that determines what kind of provision it is.

- An attribute is an additional structural element that clarifies details within the clause.

Examples of the most important legal clauses and how they might be labeled in the data:

Such a labeling structure allows NLP models to extract key parameters from the provisions for subsequent automatic analysis and comparison.

Specifics and Challenges of Legal Text Annotation

Annotating legal contracts for Clause Extraction is one of the most demanding tasks in NLP. This is due to the unique properties of legal language, which create significant obstacles for training AI models.

Ambivalence and Legal Jargon

Legal language is designed for precision, but its excessive formality creates algorithm difficulties.

Lawyers often use different phrases to denote the same concept to avoid ambiguity. For example, the model must understand that "if this happens," "in the event of such an occurrence," and "subject to the occurrence of the specified circumstances" functionally mean the same thing.

Legal texts often contain lengthy sentences with numerous subordinate clauses and negations. Annotators must highlight the exact boundaries of the entity or relationship in such complex syntactic constructions.

Experience Requirements

Unlike annotating photos or general text, a legal document annotator cannot be just anyone, as context is crucial. The annotator must interpret the legal meaning of words and the intent of the parties, which is impossible without relevant experience.

Thus, annotators must be lawyers or paralegals with the necessary deep industry knowledge. For example, only an expert can accurately distinguish a "Force Majeure Clause" from a "Termination Clause," as their functionality may overlap in the text.

Structural Challenges

The model must learn to recognize legal documents with an internal architecture to extract information correctly.

This also requires annotating the contract structure — clause numbers, sub-clauses, indentations, and headings. The model must know that a provision in "Section 3.2" is in a specific context.

Contracts often contain cross-references to other documents or external legal acts. The annotation must indicate that a specific condition depends on information outside the current document.

These challenges emphasize that successful annotation in Legal Tech requires not only powerful annotation tools but also a strict quality control methodology involving qualified specialists.

Business Value and the Future of Legal Tech

Quality annotation fuels Clause Extraction models and generates a direct economic benefit for law firms and corporate legal departments.

Automatic detection of missing, atypical, or risky provisions minimizes the likelihood of lawsuits and financial losses in the future. The system converts a potential threat into a measurable risk.

In the future, the Legal Tech sphere may integrate Large Language Models, fundamentally changing the role of annotation and the annotator. New platforms will use LLMs for automatic preliminary extraction and labeling of almost all key provisions in a contract.

The role of the lawyer-annotator will transform. They will no longer be involved in primary labeling but only in the validation, correction, and auditing of AI responses. This will allow the annotation team to process a far greater volume of data while maintaining expert quality.

Thus, quality annotation today guarantees scalability and is necessary for the lawyers of the future to use AI as a reliable, fast, and accurate partner.

FAQ

Why can't AI be simply trained on unlabelled contracts?

Models require a "Ground Truth" to know exactly what constitutes a "termination clause" and what does not. Without manual expert annotation, AI will confuse ordinary sentences with critical legal provisions, leading to catastrophic inaccuracy.

Is it possible to use LLM to extract provisions without annotations?

LLMs can offer responses, but their accuracy is not guaranteed, especially for complex or rare legal formulations. Annotation is necessary for fine-tuning LLMs to specific corporate jargon and, most importantly, for validating LLM results to ensure legal compliance and minimize risks.

What is the most expensive clause to annotate?

Clauses concerning indemnity and force majeure are among the most expensive. They often have many exceptions and cross-references, and their correct annotation requires not only text highlighting but also the labeling of complex functional relationships between different parts of the contract.

How will the prediction of the outcome of legal proceedings be made?

Through precedent analysis. Models can analyze how courts have interpreted thousands of similar boilerplate clauses in past judicial decisions, providing lawyers with a statistically substantiated forecast regarding their chances in future disputes.