Leading Tools of Modern Robotics Software

Modern robotics depends on specialized software that enables machines to perceive the world, make decisions, and interact safely with complex environments. As robots move from controlled laboratory settings to factories, warehouses, farms, medical facilities, and public spaces, the software infrastructure behind them becomes increasingly complex and interconnected. Modern robot software is a complete ecosystem that combines real-time performance, perception algorithms, decision-making modules, simulation, high-quality data, and cloud services.

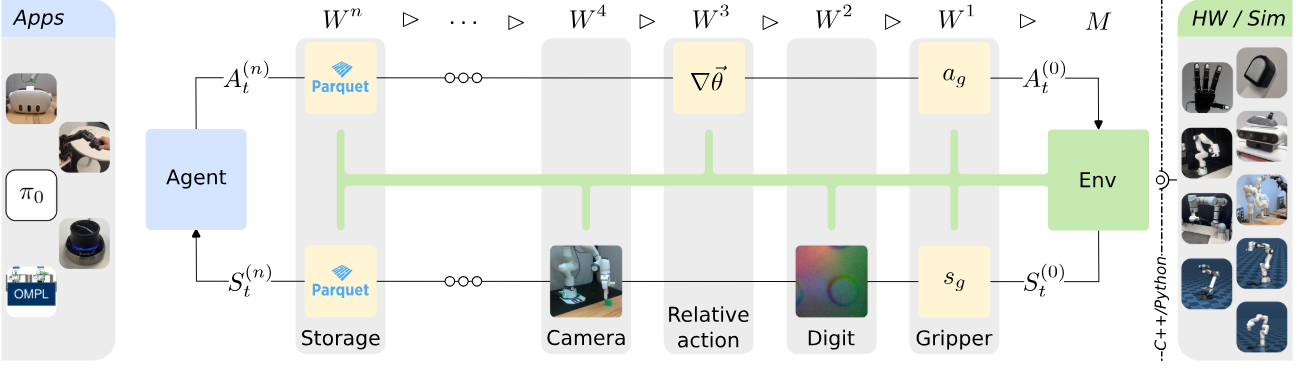

At the core of this ecosystem are operating and integration frameworks that coordinate every sensor, actuator, and software module inside the robot. They provide communication infrastructure and hardware abstraction, allowing navigation, motion control, sensor systems, and machine learning models to work together consistently and predictably. On top of this foundation sits a perception layer, where software tools convert raw data from cameras, LiDARs, and other sensors into a structured understanding of space.

Beneath all this lies the data infrastructure. Robotics relies heavily on ML models, which require high-quality datasets and tools for annotation, training, and evaluation. Modern data management platforms help keep model quality stable, accelerate development, and make robots more reliable and accurate.

Understanding this landscape allows the creation of robots that are safe, adaptable, and ready to operate in complex, dynamic environments. It is this technological layer that transforms mechanical systems into intelligent agents capable of autonomous operation – and it continues to develop rapidly.

Quick Take

- Middleware serves as the robot's nervous system, providing standardized communication between all its components and offering critical support for real-time performance and safety.

- Perception uses computer vision and 3D data processing stacks to convert raw sensor data into a meaningful understanding of the scene and a three-dimensional reconstruction of the environment.

- Navigation frameworks are responsible for path planning, localization, and collision avoidance, typically relying on SLAM for mapping and localization.

- Simulation provides a virtual environment for safe testing, fast AI training, and the collection of synthetic data.

- ML Data Ops is the mandatory infrastructure that manages the data lifecycle, ensuring the supply of high-quality training data for ML models.

- Motion planning tools calculate safe trajectories, and control stacks ensure the precise execution of these commands by motors, often with a focus on human collaboration.

Robotics Middleware & Integration Platforms

Middleware is the foundation of any modern robot's software. These tools act as the "nervous system", connecting the robot's brain to its body. They let the different parts of the robot communicate with each other: sensors that see, motors that move, and algorithms that make decisions. The result is a single, integrated operational system.

ROS / ROS 2

The Robot Operating System (ROS) is the de facto standard in robotics, especially in academic research and new product development. Despite its name, it is not an operating system in the traditional sense but a meta-operating system: a rich collection of tools and libraries for creating robotic applications.

ROS encourages modularity: complex tasks are broken down into separate nodes that exchange messages over named topics. The new generation, ROS 2, offers improved real-time support, enhanced security, and a communication layer built on the industry-standard DDS protocol, making it better suited to commercial deployment.
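To make the node idea concrete, here is a minimal sketch of the publish/subscribe pattern in plain Python. It does not use the ROS API: the `Bus` class, the node classes, and the topic names `scan` and `cmd_vel` are hypothetical stand-ins chosen for illustration, and a real system would use the middleware's own client library and transport.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class Bus:
    """Toy in-process message bus (an assumed stand-in for real middleware)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[object], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[object], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: object) -> None:
        # Deliver the message to every callback registered on this topic.
        for callback in list(self._subscribers[topic]):
            callback(message)


class RangeSensorNode:
    """Publishes distance readings (metres) on the "scan" topic."""

    def __init__(self, bus: Bus) -> None:
        self.bus = bus

    def read(self, distance_m: float) -> None:
        self.bus.publish("scan", distance_m)


class SafetyNode:
    """Listens to "scan" and publishes a speed command on "cmd_vel"."""

    def __init__(self, bus: Bus, stop_distance_m: float = 0.5) -> None:
        self.bus = bus
        self.stop_distance_m = stop_distance_m
        bus.subscribe("scan", self.on_scan)

    def on_scan(self, distance_m: float) -> None:
        speed = 0.0 if distance_m < self.stop_distance_m else 0.3
        self.bus.publish("cmd_vel", speed)


class MotorNode:
    """Records every speed command it receives on "cmd_vel"."""

    def __init__(self, bus: Bus) -> None:
        self.commands: List[float] = []
        bus.subscribe("cmd_vel", self.commands.append)


def run_demo() -> List[float]:
    bus = Bus()
    sensor = RangeSensorNode(bus)
    SafetyNode(bus)
    motor = MotorNode(bus)
    for distance in (2.0, 1.0, 0.4):
        sensor.read(distance)
    return motor.commands
```

None of the three nodes knows about the others; each only knows topic names. That decoupling is what lets a team swap a sensor driver or a planner without touching the rest of the system.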

NVIDIA Isaac ROS

This software stack, developed by NVIDIA, is specifically optimized for GPUs. Since many robotics tasks, such as computer vision, navigation, and path planning, require intensive parallel computing, Isaac ROS lets developers make full use of NVIDIA hardware. It is a strong choice for robotics software focused on artificial intelligence, especially where high-speed sensor data processing is required.

AWS RoboMaker

AWS RoboMaker is a cloud-based ROS infrastructure from Amazon Web Services focused on developing, simulating, and managing large numbers of robots. Using AWS cloud resources, RoboMaker lets developers run large-scale simulations to test algorithms and manage software updates for an entire fleet of robots already operating in real-world conditions. AWS has announced the end of support for the service, so teams evaluating it should check its current availability first.

Apex.AI Apex.OS

Apex.OS is a safety-certified middleware built on ROS 2. It is aimed at industries with high demands for reliability and safety, such as automotive and industrial automation. It provides strict control and real-time guarantees, which functional safety and accident avoidance depend on. This makes it a reliable foundation for safety-critical robotics software.

Sensor Processing & Computer Vision Stacks

The tools and libraries belonging to this section are responsible for converting raw data from various sensors into meaningful information that the robot can use for navigation, manipulation, and decision-making. The main tasks here are image processing, point cloud analysis, three-dimensional reconstruction of the environment, and scene understanding – that is, figuring out exactly what is in front of the robot.

OpenCV / Open3D

OpenCV is the classic and arguably the most important foundation for any robotics software that uses vision. It is a large library offering thousands of algorithms for image processing, object recognition, tracking, and camera calibration.

Open3D is a modern counterpart specifically focused on working with three-dimensional data, particularly point clouds from LiDAR or 3D cameras. It provides basic operations for filtering, segmentation, and registration of 3D scans, which are necessary for accurate environmental modeling.
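As an illustration of the kind of filtering applied to point clouds, the sketch below implements voxel-grid downsampling in plain Python: points are grouped into cubic cells and each cell is replaced by its centroid. This is a simplified stand-in written for this article, not Open3D code; the function name `voxel_downsample` is hypothetical, and libraries such as Open3D provide optimized versions of this kind of operation.

```python
from collections import defaultdict
from math import floor
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points that fall into the same cubic voxel by their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Group points by the integer index of the voxel that contains them.
    buckets: Dict[Tuple[int, int, int], List[Point]] = defaultdict(list)
    for x, y, z in points:
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        buckets[key].append((x, y, z))

    # Emit one centroid per occupied voxel, in a deterministic order.
    result: List[Point] = []
    for key in sorted(buckets):
        members = buckets[key]
        count = len(members)
        cx, cy, cz = (sum(axis) / count for axis in zip(*members))
        result.append((cx, cy, cz))
    return result
```

For example, with a voxel size of 1.0 the points (0.25, 0.25, 0.25) and (0.75, 0.75, 0.75) share a voxel and collapse to (0.5, 0.5, 0.5), while a point at (1.5, 0.0, 0.0) stays on its own. Downsampling like this reduces the data volume before heavier steps such as segmentation or registration.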

DeepStream

NVIDIA dominates the field of AI-focused computing and offers specialized stacks that leverage the power of its graphics processors.

DeepStream SDK is a framework for developing applications based on video and sensor data that operate at a high frame rate. It allows robots to process data from multiple cameras or sensors in real-time, which is important for fast autonomous systems.

Slamtec SDK

Slamtec is a well-known manufacturer of affordable 2D and 3D LiDAR sensors, and its SDK provides simple, reliable libraries for integrating these sensors into a robot's software stack. The Slamtec SDK often includes basic SLAM functions, so mobile robots can begin mapping and localizing themselves quickly, which is ideal for the initial stages of development.

Mapping, Localization & Navigation

For a robot to move, it must answer three main questions: Where am I? Where should I be? How do I get there?

Tools in this category provide a complete stack from building an internal map of the world to safely moving within it, often using SLAM technology.

Nav2

Nav2 is the standard navigation framework in the ROS 2 ecosystem. It is a complete framework that allows the robot to:

- Find a path. Determine the optimal route from the current point to the goal.

- Avoid obstacles. Dynamically bypass people, objects, and other moving entities.

- Work with various sensors. Integrate with LiDAR, cameras, and other sensors to gather environmental information.

Essentially, Nav2 is a ready-made "navigator" for the robot that developers can quickly customize for their needs.
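The "find a path" step can be sketched with a classic grid search. The example below runs A* on a small occupancy grid (0 = free, 1 = obstacle) with 4-connected moves and a Manhattan-distance heuristic. It is a generic textbook illustration under those assumptions, not Nav2's planner; the function name `astar` and the grid layout are made up for this example.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return a shortest 4-connected path from start to goal, or None if blocked."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from: Dict[Cell, Cell] = {}
    best_cost: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if cost > best_cost.get(current, float("inf")):
            continue  # stale heap entry, a cheaper route was found already
        if current == goal:
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            n_row, n_col = nxt
            if 0 <= n_row < rows and 0 <= n_col < cols and grid[n_row][n_col] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


GRID = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
```

Calling `astar(GRID, (0, 0), (2, 0))` has to route around the wall in the middle row, so the path passes through the single gap in the rightmost column. A production navigation stack adds costmaps, robot footprint checks, and continuous replanning on top of this basic idea.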

Cartographer

Cartographer is a high-performance open-source library from Google that specializes in real-time SLAM. It works well both in 2D, for simple mobile robots, and in complex 3D environments, for autonomous cars or drones.

Its key advantage is global consistency. It is capable of creating very accurate, "loop-closed" maps, automatically correcting errors that accumulate as the robot travels long distances.
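The idea behind loop closure can be shown with a deliberately simplified sketch. When the robot recognizes that it has returned to a known place, the gap between where it thinks it is and where that place actually lies is the accumulated drift. The code below spreads that drift linearly along the trajectory. This is an assumption made for illustration only: real SLAM systems solve a pose-graph optimization instead, and the function name `close_loop` is hypothetical.

```python
from typing import List, Tuple

Pose2D = Tuple[float, float]


def close_loop(poses: List[Pose2D], loop_target: Pose2D) -> List[Pose2D]:
    """Shift poses so that the last one lands on loop_target.

    The correction grows linearly from zero at the first pose to the full
    drift at the last pose (a crude stand-in for pose-graph optimization).
    """
    count = len(poses)
    if count < 2:
        return list(poses)
    drift_x = poses[-1][0] - loop_target[0]
    drift_y = poses[-1][1] - loop_target[1]
    corrected = []
    for index, (x, y) in enumerate(poses):
        fraction = index / (count - 1)
        corrected.append((x - drift_x * fraction, y - drift_y * fraction))
    return corrected
```

If a robot drives a square and odometry reports it ending at (0.2, 0.2) instead of back at the origin, `close_loop` pulls the final pose onto (0.0, 0.0) and moves the midpoint of the trajectory by half of the drift, while the starting pose stays fixed.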

Slamcore

Slamcore is a commercial company offering a ready-made, high-precision solution for visual SLAM. Instead of integrating complex algorithms themselves, developers get an optimized software module that runs quickly and reliably on small embedded computers.

It offers localization as a ready-to-use service that lets robots determine their location quickly using only cameras and inertial sensors, which is crucial for service robots and drones.

Applied Intuition

Applied Intuition does not produce end-user navigation software; instead, it provides powerful tools for developing and testing autonomous vehicles. Its products focus on:

- Simulation. Testing navigation algorithms in millions of virtual scenarios.

- Validation. Checking whether the navigation stack works safely and reliably in all conditions.

This is useful for companies building their own highly complex navigation stacks.

Autoware

Autoware is a large open-source project that provides a complete software stack for autonomous vehicles. It represents the most complex level of navigation: it includes not only mapping and localization but also high-level mission planning and low-level behavior planning. It is based on ROS 2 and aims to provide standardized, safe code for the entire autonomous driving industry.

Simulation, Testing & Digital Twins

This category of robot software is a virtual "sandbox" where robots are born, trained, and tested before interacting with the real world. Simulation is necessary for:

- Safety. Testing dangerous scenarios without the risk of damaging the robot or harming people.

- Training Speed. Collecting millions of data points and running training algorithms much faster than real time.

- Cost-Effectiveness. Developing and debugging software for robotics without needing an expensive physical prototype.

Modern simulators create so-called digital twins: accurate virtual copies of the robot, its sensors, and the environment. These make it possible to generate synthetic data that closely resembles real data.

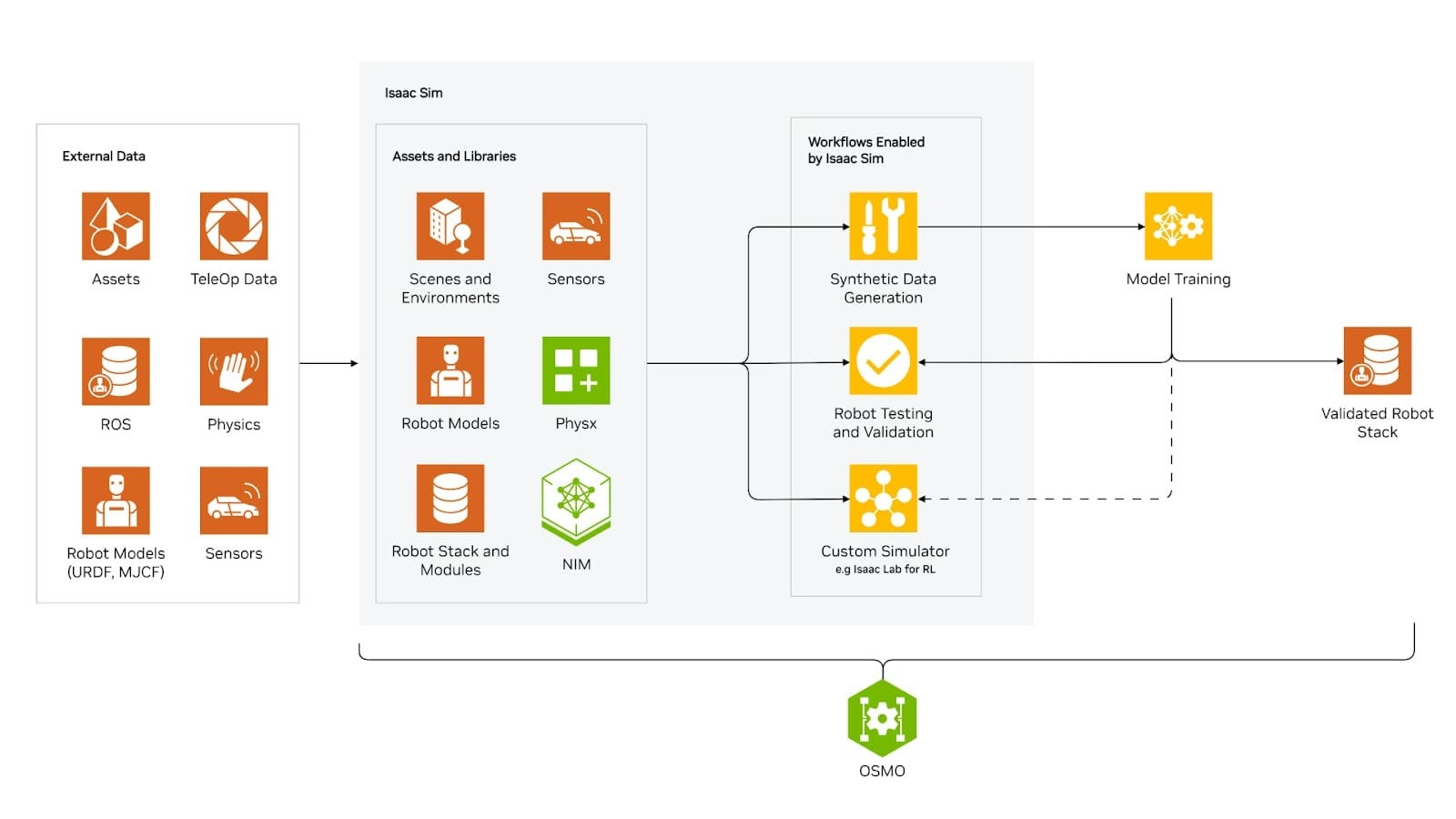

NVIDIA Isaac Sim

NVIDIA Isaac Sim is a leader in creating photorealistic and physically accurate simulations. It is built on the NVIDIA Omniverse platform, ensuring:

- High physical accuracy. Uses the NVIDIA PhysX engine for very realistic modeling of collisions, friction, and dynamics.

- Synthetic Data. Has powerful tools for automatically generating large volumes of training data for computer vision algorithms, significantly accelerating the training of AI models.

This is a strong choice for companies developing complex autonomous systems where visual accuracy is crucial.

Gazebo

Gazebo is the de facto standard for simulation in the ROS / ROS 2 community. It is open-source software that integrates tightly with ROS-based frameworks.

Its advantage is the ease of integration with existing robotics software and the ability to model sensors and robot dynamics from URDF or SDF description files. It is well suited to testing navigation and motion planning algorithms in both simple and complex scenarios.

MuJoCo

MuJoCo is a full-fledged simulator built around a high-accuracy physics engine. Its main strength is fast, stable modeling of complex contacts and the dynamics of multi-joint systems. It is a leading tool for reinforcement learning, where algorithms try millions of movements in simulation to learn optimal control.

RoboDK

RoboDK is a specialized simulator focused on industrial robotics, particularly robot manipulators. It allows engineers to create, simulate, and verify programs for specific industrial robots offline, on an ordinary computer. Its key capability is generating code that can then be loaded and run on the real robot controller, which simplifies deployment.

ML Data Ops

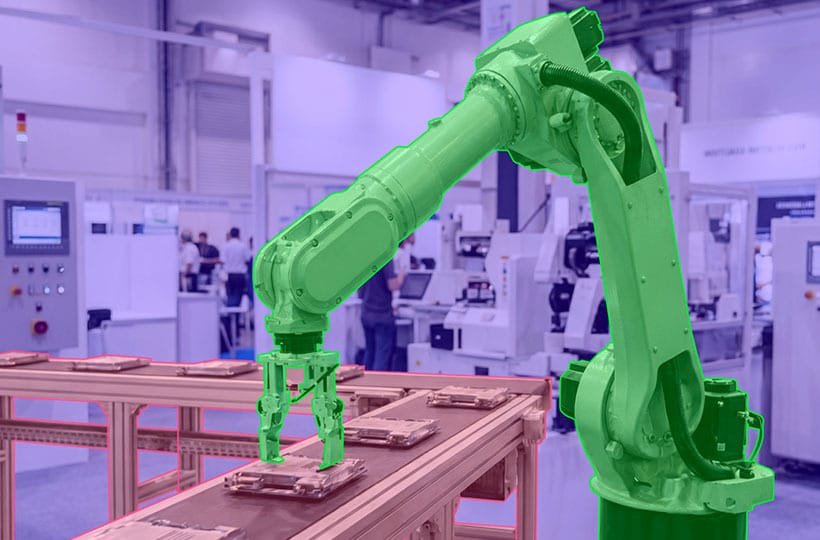

Modern robotics heavily relies on machine learning, particularly for perception tasks such as object recognition, surface classification, and scene understanding.

ML Data Ops is the discipline that ensures a continuous, efficient, and automated data workflow: collecting raw data from robots, cleaning and annotating it, training models, and finally deploying them on the robot. Without such tools, it is very difficult to build high-quality robotics software.

Segments.ai

Segments.ai is a data labeling platform that focuses on segmentation and annotation efficiency. Its tools use automation and AI assistance to speed up annotation, and it specializes in pixel-accurate masks and 3D data.

The platform uses AI models to pre-label data, which significantly reduces manual work. It suits computer vision teams that need highly detailed annotation for training complex deep learning models.

Kognic

Kognic is a Data Ops platform created specifically for managing the data lifecycle in autonomous vehicles and robotics. It treats data as the most valuable asset and aims to automate everything that happens to it after collection.

Kognic provides tools for collecting, synchronizing, visualizing, annotating, and validating sensor data. Synchronization aligns the streams from different sensors so that they can be used together correctly. The platform puts a strong focus on Data Ops automation and on complex, multi-modal datasets that combine several sensor types.
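Sensor synchronization usually starts with matching frames by timestamp. The sketch below pairs each camera frame with the nearest LiDAR sweep and drops pairs that are too far apart in time. It is a generic illustration, not Kognic's method; the function name `match_nearest` and the tolerance value are assumptions made for this example.

```python
from bisect import bisect_left
from typing import List, Tuple


def match_nearest(
    camera_ts: List[float], lidar_ts: List[float], tolerance_s: float
) -> List[Tuple[float, float]]:
    """Pair each camera timestamp with the closest LiDAR timestamp.

    Both lists must be sorted in ascending order. Camera frames with no
    LiDAR sweep within tolerance_s seconds are skipped.
    """
    pairs = []
    for stamp in camera_ts:
        index = bisect_left(lidar_ts, stamp)
        # Only the neighbours just before and just after can be the nearest.
        candidates = lidar_ts[max(index - 1, 0): index + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda other: abs(other - stamp))
        if abs(nearest - stamp) <= tolerance_s:
            pairs.append((stamp, nearest))
    return pairs
```

With camera frames at 0.00, 0.10, 0.20, and 0.30 s, LiDAR sweeps at 0.01, 0.12, and 0.29 s, and a 30 ms tolerance, the frame at 0.20 s is left unmatched because the closest sweep is 80 ms away. Note that this simple version allows one LiDAR sweep to be matched to more than one camera frame; a stricter pipeline would enforce one-to-one pairing.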

It is positioned as a strategic partner for companies that operate large fleets of autonomous robots and need a reliable, scalable pipeline for managing millions of kilometers or hours of collected data.

Keymakr

Keymakr specializes in high-quality data annotation services for AI projects, particularly in autonomous vehicles and robotics. It offers not only an annotation platform but also a qualified workforce capable of handling large volumes and complex data formats.

Keymakr is often utilized to ensure the fast and accurate creation of annotated datasets on demand, allowing robot development teams to quickly scale their training data without establishing their own internal annotation department.

Scale AI

Scale AI offers a comprehensive approach to data annotation, focused on the most complex challenges of autonomous systems. It provides both a technology platform for annotation and services from qualified annotators.

It can process huge volumes of data, including complex 3D point clouds and synchronized video from multiple cameras. In effect, it acts as an outsourced Data Ops partner for many leading developers of autonomous technologies, supplying their perception models with labeled training data.

Weights & Biases

Weights & Biases (W&B) brings order to ML model development. When engineers train dozens or hundreds of versions of perception models, W&B provides a unified platform for tracking, comparing, and visualizing the results of all these experiments. This helps the team know which model is the best and why.
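The core idea of experiment tracking fits in a few lines: every training run stores its configuration next to its metrics, so runs can be compared later. The sketch below is a toy in-memory version written for this article. It does not use the W&B API, and the names `Run`, `log`, and `best_run` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Run:
    """One training run: its hyperparameters and the metrics it produced."""

    name: str
    config: Dict[str, float]
    metrics: Dict[str, float] = field(default_factory=dict)

    def log(self, **values: float) -> None:
        # Later values for the same metric overwrite earlier ones.
        self.metrics.update(values)


def best_run(runs: List[Run], metric: str, higher_is_better: bool = True) -> Optional[Run]:
    """Return the run with the best value of the metric, or None if nobody logged it."""
    scored = [run for run in runs if metric in run.metrics]
    if not scored:
        return None
    pick = max if higher_is_better else min
    return pick(scored, key=lambda run: run.metrics[metric])
```

Because the configuration travels with the metrics, the answer to "which model is best" also carries the answer to "with which settings", which is the part that tends to get lost when results live in spreadsheets or notebook output.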

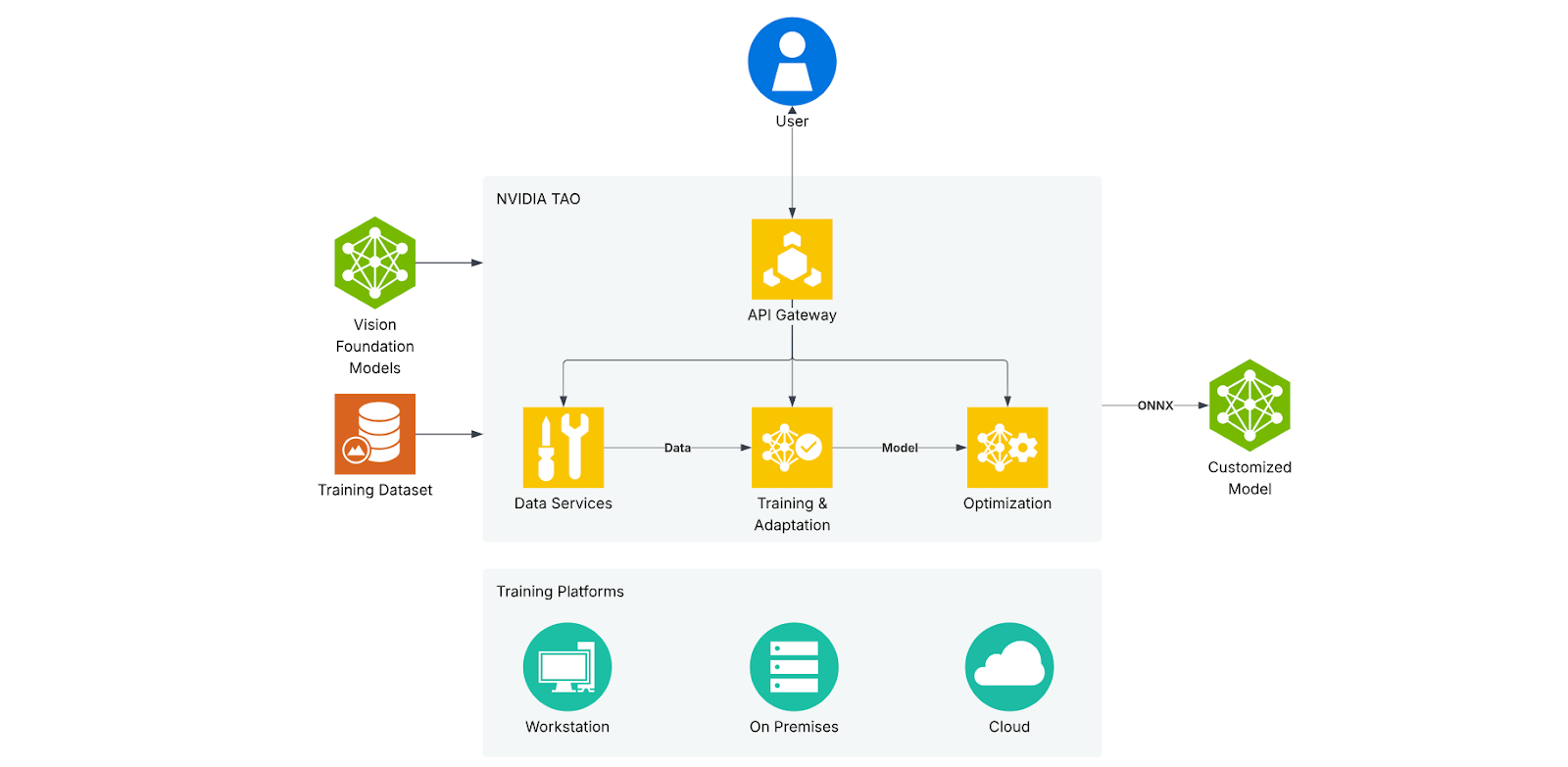

NVIDIA TAO Toolkit

The NVIDIA TAO Toolkit is a set of tools that significantly simplifies and accelerates the model training stage. It allows developers to:

- Use pre-trained NVIDIA models optimized for robotics tasks.

- Fine-tune these models on proprietary data without needing to write complex code.

- Automatically optimize models for the fastest possible operation on NVIDIA embedded devices on the robot.

TAO shows how tooling can shorten the ML cycle and make it accessible even to smaller teams.

Low-Level Control & Motion Planning

This category of robotics software is responsible for how the robot physically moves in the real world. Low-level control involves sending direct commands to the motors to ensure the robot achieves the desired speed or position, and motion planning is the determination of the optimal and safe trajectory for performing a task while avoiding collisions.
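A common building block of low-level control is the PID loop, which turns the error between the desired and measured value into a motor command. The sketch below pairs a PID controller with a very simple first-order motor model to show a speed setpoint being reached. The gains, the time constant `tau`, and the motor model are assumptions chosen for illustration, not values from any real drive.

```python
from typing import Optional


class PID:
    """Textbook PID controller with a running integral and finite-difference derivative."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        if dt <= 0:
            raise ValueError("dt must be positive")
        error = setpoint - measurement
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_motor(target_speed: float, steps: int = 200, dt: float = 0.01, tau: float = 0.1) -> float:
    """Run the controller against an assumed first-order motor model.

    The model is d(speed)/dt = (command - speed) / tau, integrated with
    explicit Euler steps. Returns the speed after the last step.
    """
    controller = PID(kp=2.0, ki=30.0, kd=0.0)
    speed = 0.0
    for _ in range(steps):
        command = controller.update(target_speed, speed, dt)
        speed += (command - speed) / tau * dt
    return speed
```

With these example gains, `simulate_motor(1.0)` settles very close to the 1.0 target within the two simulated seconds. The integral term is what removes the steady-state error that a purely proportional controller would leave on this model.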

MoveIt

MoveIt is the standard motion planning framework in the ROS ecosystem. It is an open-source set of tools, tightly integrated with ROS.

It allows a robot manipulator to calculate complex movement trajectories, taking into account its kinematics and the environment to avoid collisions with itself and external objects. It is the tool that turns a high-level goal like "pick up object A" into a joint trajectory that the robot's controllers then execute as low-level motor commands.
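The two ingredients mentioned here, kinematics and collision checking, can be sketched for a two-link planar arm. The code computes where the elbow and the tip are for given joint angles, interpolates between two joint configurations, and rejects the path if either link comes too close to a circular obstacle. This is a toy model under stated assumptions (two links of length 1.0, a single circular obstacle, straight-line joint interpolation) and not how MoveIt is implemented; all function names are hypothetical.

```python
from math import cos, hypot, sin
from typing import List, Tuple

Point = Tuple[float, float]
Joints = Tuple[float, float]


def forward_kinematics(q1: float, q2: float, l1: float = 1.0, l2: float = 1.0) -> Tuple[Point, Point]:
    """Return the elbow and tip positions of a planar arm based at the origin."""
    elbow = (l1 * cos(q1), l1 * sin(q1))
    tip = (elbow[0] + l2 * cos(q1 + q2), elbow[1] + l2 * sin(q1 + q2))
    return elbow, tip


def interpolate(q_start: Joints, q_goal: Joints, steps: int) -> List[Joints]:
    """Straight-line interpolation in joint space, including both endpoints."""
    return [
        (q_start[0] + (q_goal[0] - q_start[0]) * i / steps,
         q_start[1] + (q_goal[1] - q_start[1]) * i / steps)
        for i in range(steps + 1)
    ]


def _distance_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return hypot(p[0] - a[0], p[1] - a[1])
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def path_is_collision_free(path: List[Joints], center: Point, radius: float) -> bool:
    """Check every sampled configuration: no link may touch the circular obstacle."""
    base = (0.0, 0.0)
    for q1, q2 in path:
        elbow, tip = forward_kinematics(q1, q2)
        for a, b in ((base, elbow), (elbow, tip)):
            if _distance_to_segment(center, a, b) <= radius:
                return False
    return True
```

Sweeping the straightened arm from pointing along the x-axis to pointing along the y-axis collides with an obstacle of radius 0.3 at (1.5, 1.5), because the tip passes within about 0.12 of its center. A real planner would respond by searching for an alternative path, and it would also check the motion between samples rather than only at the sampled configurations.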

Realtime Robotics

Realtime Robotics offers a commercial hardware and software platform that solves one of the most difficult problems: real-time collision-free motion planning.

Thanks to specialized hardware and algorithms, its software can replan the robot's trajectory almost instantly when a person or another object suddenly enters the workspace. This is important for industrial environments where high operating speed and flexible human-robot collaboration are required.

Universal Robots UR+ Ecosystem

Universal Robots (UR) is a leader in the collaborative robot market. Its UR+ ecosystem is a platform of software and hardware modules for its manipulators. The focus is on ease of programming and safety.

UR's software allows operators to program complex movements through a graphical interface, and built-in safety features stop the cobot upon contact with a human. This is a holistic approach to control, integrated with the hardware.

Open Robotics Control Stack

This is a set of tools and libraries developed by the Open Robotics organization to provide standardized and reliable low-level control of actuators. It includes a framework that standardizes the interface between complex high-level algorithms and hardware motor drivers.

It allows developers to write control code that works across different robots, regardless of the motor manufacturer, as long as a driver implementing the standard interface exists. This significantly increases the portability of the robot software.
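The value of a standardized interface is easiest to see in code. In the sketch below, the controller function `drive_to` is written once against an abstract `JointInterface`, and two simulated drivers with different internal units (radians and encoder ticks) plug into it unchanged. All class and method names are hypothetical and invented for this illustration; they are not taken from any real control framework.

```python
from abc import ABC, abstractmethod


class JointInterface(ABC):
    """What the controller is allowed to know about a joint (assumed interface)."""

    @abstractmethod
    def read_position(self) -> float:
        """Current joint angle in radians."""

    @abstractmethod
    def command_velocity(self, velocity: float, duration: float) -> None:
        """Apply a velocity in rad/s for the given number of seconds."""


class RadianMotor(JointInterface):
    """Simulated driver whose hardware already works in radians."""

    def __init__(self) -> None:
        self._angle_rad = 0.0

    def read_position(self) -> float:
        return self._angle_rad

    def command_velocity(self, velocity: float, duration: float) -> None:
        self._angle_rad += velocity * duration


class TickMotor(JointInterface):
    """Simulated driver whose hardware counts encoder ticks internally."""

    TICKS_PER_RAD = 1000.0

    def __init__(self) -> None:
        self._ticks = 0.0

    def read_position(self) -> float:
        return self._ticks / self.TICKS_PER_RAD

    def command_velocity(self, velocity: float, duration: float) -> None:
        self._ticks += velocity * self.TICKS_PER_RAD * duration


def drive_to(joint: JointInterface, target_rad: float,
             gain: float = 2.0, dt: float = 0.01, steps: int = 500) -> float:
    """Proportional position controller that only uses the abstract interface."""
    for _ in range(steps):
        error = target_rad - joint.read_position()
        joint.command_velocity(gain * error, dt)
    return joint.read_position()
```

Both `drive_to(RadianMotor(), 1.0)` and `drive_to(TickMotor(), 1.0)` converge to roughly 1.0 rad. The unit conversion lives inside the driver, so the control code never needs to know which vendor's motor it is talking to.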

FAQ

What are the typical vulnerabilities of robotics middleware, and how do commercial platforms address the cybersecurity issue in integration frameworks?

The main vulnerability of ROS 1 is the lack of built-in encryption and authentication. ROS 2 addresses this by building on the DDS standard, whose security extensions support authentication, encryption, and access control based on security certificates. Commercial solutions, such as Apex.AI, add certification of the code and strict access control.
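To show what message authentication means in practice, the sketch below signs each message with a shared secret key using HMAC-SHA256 from Python's standard library, so that a receiver can reject tampered or forged commands. This is a simplified illustration of the concept only: DDS security in ROS 2 relies on certificates and its own plugins rather than this scheme, and the function names `sign` and `verify` are hypothetical.

```python
import hashlib
import hmac


def sign(key: bytes, topic: str, payload: bytes) -> bytes:
    """Compute an authentication tag that covers both the topic name and the payload."""
    message = topic.encode("utf-8") + b"\x00" + payload
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, topic: str, payload: bytes, tag: bytes) -> bool:
    """Return True only if the tag matches; the comparison is constant-time."""
    return hmac.compare_digest(sign(key, topic, payload), tag)
```

A receiver that holds the same key accepts a message only when `verify` returns True. Changing a single byte of the payload, replaying the tag on a different topic, or signing with a different key all make verification fail. Note that HMAC provides authenticity and integrity but not confidentiality; hiding the content requires encryption on top.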

What is the balance in modern robots between on-device computing and using cloud resources for training and heavy computations?

Most critical functions that require real-time operation run on the robot itself (edge computing). The cloud is used for non-critical tasks, including large-scale simulations, data collection, retraining of AI models, and remote monitoring.

Are there standards for robotics software that regulate the ethical behavior of autonomous systems, and how are they integrated into the motion planning stages?

There are no direct global standards yet, but developers often use value-sensitive design concepts. This means that "priority rules" are embedded in motion planning algorithms, which affect the choice of trajectory in critical situations.
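One way such priority rules can be encoded is as a lexicographic ordering over candidate trajectories: keeping clear of humans comes first, keeping clear of objects second, and speed only breaks ties. The sketch below is a hypothetical illustration of that idea. The `Candidate` fields, the safety margins, and the ordering itself are assumptions made for this example, not an established standard.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Candidate:
    """A planned trajectory summarized by its clearances and duration."""

    name: str
    min_human_distance_m: float
    min_object_distance_m: float
    duration_s: float


def choose_trajectory(
    candidates: List[Candidate],
    human_margin_m: float = 0.5,
    object_margin_m: float = 0.1,
) -> Candidate:
    """Pick the candidate that is best under a fixed priority order."""

    def priority(candidate: Candidate) -> Tuple[bool, bool, float]:
        # Tuples compare element by element and False sorts before True,
        # so a human-margin violation outweighs everything that follows.
        return (
            candidate.min_human_distance_m < human_margin_m,
            candidate.min_object_distance_m < object_margin_m,
            candidate.duration_s,
        )

    return min(candidates, key=priority)
```

Given a fast trajectory that passes 0.3 m from a person, a slower one that scrapes past a shelf, and the slowest one that respects both margins, the function picks the slowest. If the fully safe option is removed, it prefers the trajectory that risks the shelf over the one that comes too close to the person.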

What software tools are used for developing a natural and intuitive interface for human-robot interaction, beyond simple motion control?

Tools for real-time data visualization and specialized gesture and voice frameworks are used. For cobots, for example, tactile sensors and graphical interfaces are often employed, allowing a person to program movements by physically guiding the robot's arm.