Ethical Considerations in AI Model Development

The development of artificial intelligence models has rapidly changed many aspects of our lives, creating new opportunities and challenges. As AI systems are increasingly integrated into everyday decision-making, questions about how they are created and used are attracting growing attention. Developers, researchers, and organizations now navigate a complex landscape where technology meets human values, and discussions about AI span technical, social, and philosophical perspectives.

Among the many AI-related topics, a handful of ethical considerations have attracted particular interest because of their potential implications. These considerations affect how AI systems are designed, tested, and deployed across sectors. Technical details matter, but so does the context in which AI operates. Ensuring that AI meets broader societal expectations requires constant reflection and adaptation, which is why these topics are essential to understanding the current AI landscape.

Key Takeaways:

- Bias and discrimination in AI systems can perpetuate unfair outcomes and hinder inclusivity.

- Transparency and accountability are vital to understanding and addressing potential AI model biases.

- The ownership of AI-generated art raises complex ethical questions that require legal clarity.

- Social manipulation and misinformation pose significant risks to democratic processes.

- Privacy, security, and surveillance concerns must be addressed to protect individuals' rights.

Bias and Discrimination in AI Systems

Bias and discrimination in AI systems occur when these technologies treat people or groups unfairly. This often stems from the data that AI learns from, which can carry the same social and historical biases found in the real world. Instead of solving these problems, AI can sometimes make them worse by repeating these unfair patterns. This is especially concerning in high-stakes areas such as hiring or law enforcement, where fairness is essential. It's a reminder that AI doesn't work in a vacuum: it reflects our world.

When AI discriminates, certain groups may be unfairly disadvantaged because of how data is collected or how algorithms are designed. Sometimes these problems are obvious, but sometimes they are subtle and harder to spot. This makes it difficult for people to trust these systems and to hold them accountable. Fighting discrimination isn't just about coding; it also requires understanding the ethical and social impact. That's why developers, users, and communities must work together to identify risks and create safeguards that make AI fair for everyone.
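One way to make subtle disparities visible is to compare how often a system gives a favorable outcome to each group. The sketch below is a minimal illustration, assuming hypothetical screening data and function names: it computes per-group selection rates and the ratio of the lowest rate to the highest. The 0.8 threshold is the "four-fifths" rule of thumb from US employment-selection guidelines, used here only as an example trigger for human review, not as a definitive fairness test.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the system produced a favorable outcome (e.g. shortlisted).
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so selection rates do not differ
    return min(rates.values()) / highest

# Hypothetical screening results: (group label, shortlisted?)
decisions = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 24 + [("B", False)] * 76
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.4, 'B': 0.24}
print(round(ratio, 2))  # 0.6
# 0.8 is the "four-fifths" rule of thumb, used here as an illustrative threshold
print("flag for review" if ratio < 0.8 else "within rule of thumb")
```

A low ratio does not prove discrimination on its own, and a high one does not rule it out; the point is that a simple, repeatable measurement turns a vague worry into something a team can track and investigate.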

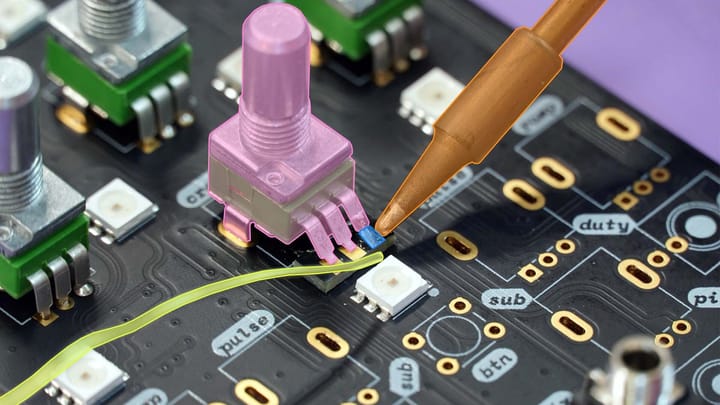

Example: Bias in Facial Recognition Technology

A prominent example of bias and discrimination in AI systems is the use of facial recognition technology. Studies have shown that these systems tend to have higher error rates for women and people with darker skin tones, leading to biased outcomes and potential harm to these individuals. This highlights the importance of addressing bias and discrimination in AI algorithms to ensure fair and unbiased decision-making processes.
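Disparities like these are found by evaluating a system separately for each demographic group instead of reporting a single overall accuracy figure. A minimal sketch, assuming a hypothetical face-verification evaluation in which each trial records the person's group, whether the two images truly show the same person, and whether the system declared a match (the group labels and counts are invented for illustration):

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Trial:
    group: str          # demographic group label (hypothetical)
    same_person: bool   # ground truth: do both images show the same person?
    matched: bool       # system output: did it declare a match?

def error_rates_by_group(trials):
    """Return false match and false non-match rates for each group."""
    counts = {}
    for t in trials:
        c = counts.setdefault(t.group, {"impostor": 0, "false_match": 0,
                                        "genuine": 0, "false_non_match": 0})
        if t.same_person:
            c["genuine"] += 1
            if not t.matched:
                c["false_non_match"] += 1  # genuine pair wrongly rejected
        else:
            c["impostor"] += 1
            if t.matched:
                c["false_match"] += 1      # different people wrongly matched
    return {
        group: {
            "false_match_rate": c["false_match"] / c["impostor"] if c["impostor"] else 0.0,
            "false_non_match_rate": c["false_non_match"] / c["genuine"] if c["genuine"] else 0.0,
        }
        for group, c in counts.items()
    }

# Hypothetical evaluation: 100 impostor and 100 genuine pairs per group
trials = (
    [Trial("X", False, True)] * 1 + [Trial("X", False, False)] * 99
    + [Trial("X", True, False)] * 2 + [Trial("X", True, True)] * 98
    + [Trial("Y", False, True)] * 5 + [Trial("Y", False, False)] * 95
    + [Trial("Y", True, False)] * 8 + [Trial("Y", True, True)] * 92
)

for group, rates in error_rates_by_group(trials).items():
    print(group, rates)
```

On this made-up data, group Y's false match rate is five times group X's, a gap that an aggregate accuracy number would hide. Separating the two error types matters because they cause different harms: a false match can wrongly implicate someone, while a false non-match can wrongly lock someone out.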

Transparency and Accountability in AI Systems

Because AI systems can be complex and difficult to understand, people often worry about how they make decisions and whether those decisions might be biased. That's why researchers and developers are working to make these systems more transparent and accountable, with the goal of building AI that people can trust.

When it's clear how AI reaches its conclusions, people feel more comfortable relying on it, especially for important choices. Transparency also helps identify errors or unfair treatment early, giving developers the opportunity to fix problems before they cause damage. It's not just about technology; it's about building AI that respects the people it serves. In this way, AI can become a useful tool that supports better decision-making without leaving anyone behind.
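For simple models, showing how AI reaches a conclusion can be quite literal. The sketch below assumes a hypothetical linear scoring model (the feature names, weights, and baseline values are invented for illustration) and breaks one applicant's score into per-feature contributions relative to a baseline applicant, listing the most influential factors first. More complex models need dedicated explanation techniques; this only illustrates the idea.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * value)
WEIGHTS = {"income_k": 0.04, "debt_ratio": -2.5, "late_payments": -0.6}
BIAS = 1.0
# Reference point against which contributions are measured (e.g. a typical applicant)
BASELINE = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 1.0}

def score(applicant):
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)

def explain(applicant):
    """Per-feature contributions to the gap between this score and the baseline score.

    For a linear model these contributions add up exactly to
    score(applicant) - score(BASELINE).
    """
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    # Largest absolute effect first, so the main reasons appear at the top
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

applicant = {"income_k": 42.0, "debt_ratio": 0.5, "late_payments": 3.0}
for feature, effect in explain(applicant):
    print(f"{feature:>14}: {effect:+.2f}")
```

Because each contribution is measured against a stated baseline, a reviewer can check that the explanation accounts for the whole difference in score, which is what makes this kind of output auditable rather than just descriptive.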

The Importance of Transparency

- Builds trust between users and AI systems by making decisions more understandable.

- Helps identify and correct errors or biases in AI models.

- Supports accountability by clarifying who is responsible for AI outcomes.

- Enables better regulation and oversight of AI technologies.

- Encourages ethical use by making AI processes visible and open to scrutiny.

- Improves user engagement by providing clear explanations of AI-generated results.

- Facilitates collaboration between developers, users, and stakeholders.

- Supports better AI performance over time through continuous feedback.

- Helps prevent misuse or harm by exposing potentially problematic behavior.

- Aligns AI systems with societal values and expectations.

Enhancing Accountability

Accountability is a key part of ensuring AI's responsible and ethical use. It means that everyone involved in building and running AI systems is responsible for the results those systems produce and can be held accountable for them. When systems are transparent, it is easier to identify biases, errors, or unfair practices in AI models, and with that awareness, people can intervene early to correct problems and avoid causing harm.

To promote proper accountability, several essential elements need to be put in place:

- Clear roles and responsibilities, so everyone knows what they are accountable for in the AI lifecycle.

- Open communication between developers, users, and regulators to share information and concerns.

- Regular audits and assessments to evaluate AI performance and ethical standards.

- Feedback and correction mechanisms that allow for the quick resolution of issues.

- Legal and ethical frameworks that support holding parties accountable when things go wrong.

Having these elements in place helps build trust and encourages a culture where ethical AI development is a shared goal. It also means that when AI systems make decisions that affect people's lives, a safety net is in place to identify and correct problems. Ultimately, accountability creates a more transparent and equitable AI landscape, where every participant feels responsible for the impact of their work on society.
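Several of these elements (clear ownership, regular audits, and correction mechanisms) depend on being able to reconstruct what a system decided and who was responsible for it. The sketch below is a minimal, in-memory decision log; the class name, field names, and example values are hypothetical, and a real deployment would need durable, access-controlled storage and care about which inputs are safe to retain. Each entry stores a SHA-256 hash of its contents plus the previous entry's hash, a simple hash-chaining technique that makes silent edits to past records detectable.

```python
import hashlib
import json
from datetime import datetime, timezone

class DecisionLog:
    """Append-only, hash-chained log of automated decisions (in-memory sketch)."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, owner, inputs, decision):
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,  # which model produced the decision
            "owner": owner,                  # team accountable for this model
            "inputs": inputs,
            "decision": decision,
            "prev_hash": prev_hash,          # links this entry to the one before it
        }
        payload = json.dumps(entry, sort_keys=True).encode()
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; return False if any entry was altered or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode()
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = DecisionLog()
log.record("screening-v2", "hiring-ml-team", {"applicant_id": 17}, "shortlist")
log.record("screening-v2", "hiring-ml-team", {"applicant_id": 18}, "reject")
print(log.verify())                    # True
log.entries[0]["decision"] = "reject"  # simulate tampering with a past record
print(log.verify())                    # False
```

Hash chaining only detects tampering; it does not prevent it, and whoever controls the whole log could rebuild the chain. It is one building block for audits, not a substitute for the legal and organizational frameworks listed above.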

The Role of Copyright Law and Intellectual Property Rights

Copyright law plays a vital role in protecting creators' rights. However, AI-generated art challenges traditional copyright principles. Copyright laws are designed to protect human creations, leaving AI-generated artwork in a legal gray area.

To address this challenge, lawmakers and legal experts must explore adaptations to copyright legislation that consider AI-generated art.

Developing guidelines and policies that account for AI's role in the creative process can help ensure a fair and balanced approach to ownership and protection.

The Importance of Ethical Considerations

Ethical considerations are important because they form the basis for developing and deploying artificial intelligence technologies. As AI systems become more powerful and pervasive, their impact touches nearly every aspect of our lives, from healthcare and education to finance and public safety. Without a strong ethical framework, artificial intelligence could unintentionally cause harm, deepen social divisions, or violate individual rights. Ethical awareness encourages developers and organizations to anticipate these risks and take proactive measures to minimize negative consequences.

Moreover, ethical considerations foster a culture of responsibility and care in the AI community. They encourage constant reflection on who benefits from AI and who may be left out. This reflection drives efforts to make AI more inclusive, accessible, and transparent, and it supports the creation of standards and policies that hold creators accountable for the impact of their systems.

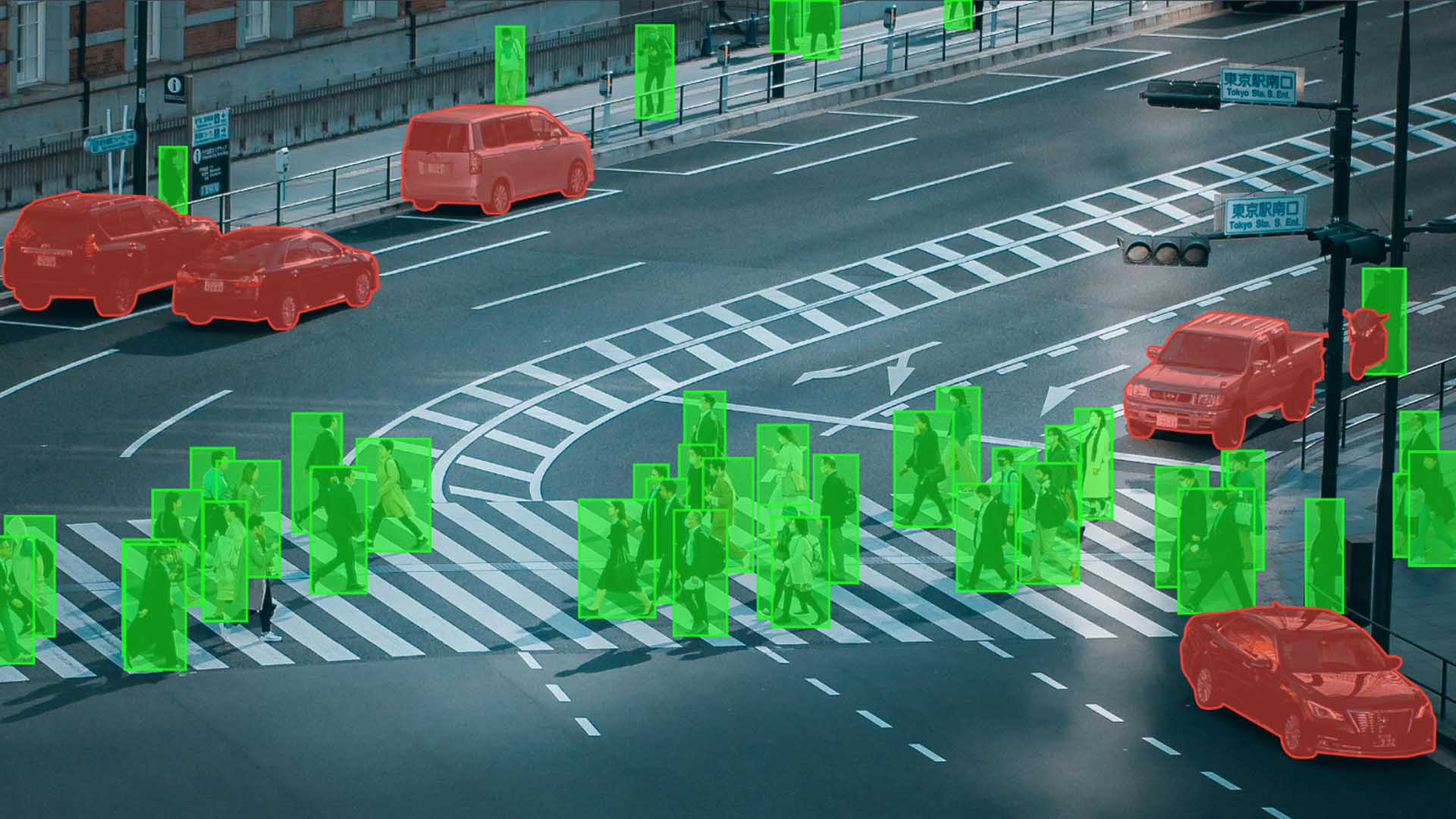

Privacy, Security, and Surveillance in AI

Artificial intelligence systems often rely on large amounts of personal data to function effectively, which raises important questions about how this information is collected, stored, and used. Protecting privacy means ensuring that individuals keep control over their data and that sensitive information is handled carefully. At the same time, security measures should be in place to protect AI systems and the data they process against unauthorized access or misuse.

AI-powered surveillance adds another layer of complexity. While it can improve security and efficiency, it also risks violating individual freedoms if not carefully regulated. The balance between using artificial intelligence for security purposes and respecting privacy rights is a delicate and often debated issue. Clear guidelines and oversight are needed to prevent misuse and maintain public trust. Addressing privacy, security, and surveillance issues is essential to creating AI technologies that protect people rather than expose them to harm or exploitation.

Key Considerations for Privacy, Security, and Surveillance in AI:

- Data Protection. Implementing strong security measures, encryption protocols, and access controls to prevent data breaches and unauthorized access.

- Transparency and Accountability. Ensuring that AI systems are transparent and explainable, allowing individuals to understand how their data is processed and decisions are made.

- Ethical Frameworks. Organizations should adopt and adhere to ethical frameworks, prioritizing privacy, security, and responsible use of AI.

- Law and Regulation. Governments and regulatory bodies should establish comprehensive statutes and regulations to protect individuals' privacy rights and regulate the use of AI technologies.
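As one concrete data-protection measure, direct identifiers can be pseudonymized before data enters an AI pipeline, so records can still be linked without exposing who they belong to. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library); the key handling, function names, and field names are hypothetical. Pseudonymized data can still count as personal data under laws such as the GDPR, so this complements encryption and access controls rather than replacing them.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret key; in practice it would be kept in a key-management
# service, stored separately from the pseudonymized dataset.
PSEUDONYM_KEY = secrets.token_bytes(32)

def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a stable keyed hash (HMAC-SHA256).

    The same identifier always maps to the same token under the same key,
    so records can still be joined, but without the key the token cannot be
    recomputed from a guessed identifier (unlike a plain, unkeyed hash).
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def strip_identifiers(record: dict, id_fields=("email", "name")) -> dict:
    """Return a copy of `record` with its direct identifiers pseudonymized."""
    cleaned = dict(record)
    for field in id_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(str(cleaned[field]))
    return cleaned

raw = {"email": "ana@example.com", "name": "Ana", "age_band": "30-39"}
safe = strip_identifiers(raw)
print(safe["age_band"])                                  # 30-39
print(safe["email"] == pseudonymize("ana@example.com"))  # True: stable token
```

Using a keyed hash instead of a plain hash is the key design choice: common identifiers such as email addresses are easy to guess, so an unkeyed hash could be reversed by hashing candidate values and comparing.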

Job Displacement by AI Automation

AI automation is fundamentally changing the workforce. Because machines and algorithms can handle repetitive or routine tasks more efficiently, many traditional roles are being transformed or phased out. This emphasizes the need for people to remain adaptable and to keep developing new skills in a changing labor market. The pace of change means that employees and employers must prepare for a future in which collaboration between humans and AI becomes the norm.

Despite concerns about job losses, AI automation also has the potential to create new types of jobs and industries. Roles focused on developing, maintaining, and improving artificial intelligence systems are growing, alongside jobs that require human creativity, emotional intelligence, and complex problem-solving. Automation can take over mundane tasks, freeing people to focus on higher-value work that artificial intelligence cannot easily replicate. However, this transition is not automatic or guaranteed; it requires investment in education and training programs that equip workers with skills suited to the changing economy. Without such support, the benefits of AI-driven innovation may not reach everyone equally.

Governments, businesses, and educational institutions all have a role in creating social safety nets and opportunities for workers affected by automation. This includes retraining and upskilling programs and stronger social protection during transitional periods. Open dialogue among stakeholders helps identify new needs and shape strategies that balance economic growth with social equity. By addressing these issues proactively, society can use AI to boost productivity while ensuring a fairer and more inclusive future for the workforce.

Summary

Addressing the ethical challenges of AI requires collaboration and open dialogue among many different groups (technologists, policymakers, ethicists, and society at large) working together to ensure that these powerful tools are developed and used in ways that genuinely serve people's best interests. Clear, enforceable rules for creating and deploying AI systems are essential to prevent harm while still encouraging innovation. Transparency is an integral part of this: when people understand how AI works, they are more likely to trust it and to hold those responsible accountable.

This ethical reflection cannot be a one-time event. It must be an ongoing conversation that evolves with the technology itself, in which stakeholders keep learning, adapting, and re-examining the moral implications of artificial intelligence as it changes the world around us. When ethical concerns are addressed directly and built into how AI is created and used, it becomes possible to realize the technology's benefits while respecting the values and principles that matter most to society. Responsible AI adoption means striking a balance: encouraging creativity and progress without losing sight of the risks and real-world human consequences that come with them.

FAQ

What are some ethical considerations in AI model development?

Ethical considerations in AI model development include addressing bias and discrimination, ensuring transparency and accountability, clarifying ownership of AI-generated art, combating social manipulation and misinformation, protecting privacy and security, addressing job displacement, and promoting responsible AI deployment.

How do AI systems perpetuate bias and discrimination?

AI systems can perpetuate bias and discrimination when they are trained on biased data, which leads to unfair or discriminatory outcomes. For example, an AI system used for job screening may discriminate against candidates whose profiles differ from those the company has historically hired.

What is the importance of transparency and accountability in AI systems?

Transparency and accountability are crucial for understanding how AI systems work and for making informed decisions about their use. A lack of transparency hinders accountability when errors or harm occur.

Who owns the rights to AI-generated art?

The ownership of AI-generated art raises complex ethical and legal questions. As AI systems become more involved in artistic creation, it becomes unclear who owns the rights to the resulting work and who can commercialize it.

How can AI be misused for social manipulation and misinformation?

AI algorithms can be used to spread fake news, manipulate public opinion, and amplify social divisions. Technologies like deepfakes generate realistic yet fabricated audiovisual content, posing significant risks of election interference and threats to political stability.

What are the concerns regarding privacy, security, and surveillance in AI?

The advancement of AI relies on the availability of personal data, raising concerns about privacy, security, and surveillance. Excessive use of facial recognition technology for surveillance has led to discrimination and repression in some countries.

How does AI automation impact job displacement?

AI automation can replace some human jobs, which may lead to unemployment and worsen economic inequality. However, some argue that AI can create more jobs than it replaces.

How can ethical issues in AI be addressed?

Addressing ethical issues in AI requires collaboration among various stakeholders, including technologists, policymakers, ethicists, and society. It involves implementing robust regulations, ensuring transparency in AI systems, promoting diversity and inclusivity in development, and encouraging ongoing discussions.