Data Annotation for Foundation AI Models

Foundation model annotation is used to create large, annotated datasets for AI development.

Foundation models reduce the need for labeled data: tasks that previously required far larger labeled sets can often be handled by annotating only thousands of examples.

Foundation models have proven cost-effective, which is encouraging companies to adopt automated, AI-based annotation.

Key Takeaways

- Foundation model annotation is essential for training AI programs.

- Foundation model annotation reduces the amount of labeled data needed for practical training.

- Foundation model annotation facilitates AI-based automation.

- Using foundation model annotation helps improve accuracy.

Definition and Foundation Concepts

A foundation model is a pre-trained machine learning model that serves as a basis for refinement or adaptation to a specific task. These models can perform a wide range of functions, including generative tasks. They can generate text, images, and music by learning patterns from data.

Evolution of Foundation Models

BERT, one of the first foundation models, influenced the field of AI by learning language representations from large amounts of unlabeled text. Today, models combine natural language processing and computer vision. Each generation builds on the previous one, expanding the capabilities of AI.

The Role of Data Annotation in AI Development

Data annotation is the foundation for training an AI model. It provides AI systems with labeled data on which to train. High-quality annotations are essential for the development and accuracy of AI models.

What is Data Annotation?

Data annotation is the process of tagging, labeling, or categorizing data. It helps models understand and interpret input data correctly. Common annotation types cover text, images, audio, and video.

Importance of Foundation Models

Data labeling underpins both foundation models and the robust performance of the applications built on them. Cloud platforms let teams collaborate on data annotation in real time.

Quality of Data and Model Performance

High-quality annotations reduce bias and improve model performance. The main challenge is managing the volume of data, which calls for advanced machine-learning annotation tools. Automating annotation helps maintain accuracy on large datasets with minimal human intervention.

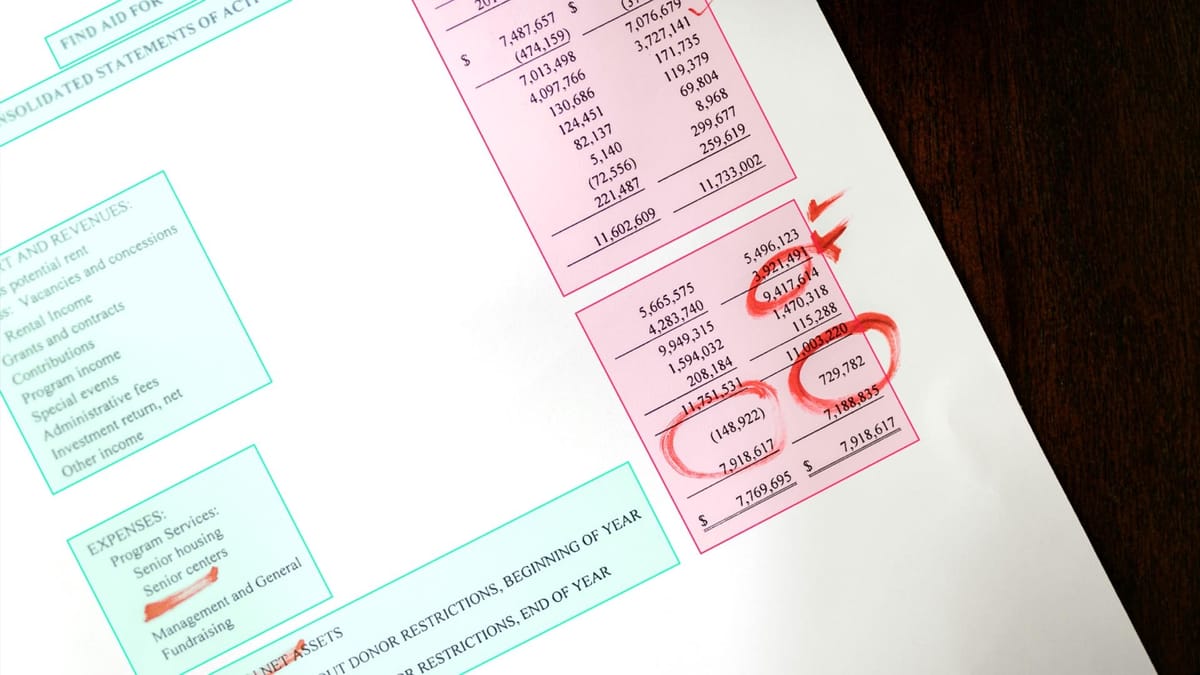

The table below shows the key factors that affect data annotation performance:

Types of Annotation Techniques

Data annotation helps AI models understand and recognize complex patterns. Each annotation technique extends AI capabilities in its own modality, whether text, images, video, or audio.

Text Annotation

Text annotation is key for natural language processing (NLP). Techniques such as named entity recognition (NER) and sentiment tagging produce the labeled data used to train models, helping them grasp semantics and context. Tasks such as text classification, part-of-speech tagging, and summarization are also critical for improving machine-learning annotation algorithms.
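To make NER annotation concrete, here is a minimal sketch of how character-offset entity spans marked by an annotator might be converted into token-level BIO tags (B = beginning, I = inside, O = outside) for training. It assumes simple whitespace tokenization and hypothetical labels (PER, LOC); real pipelines usually use the tokenizer of the target model.

```python
# A minimal sketch: convert annotator-marked character spans into BIO tags.
# Assumptions: whitespace tokenization; spans are (start, end_exclusive, label).
import re
from typing import List, Tuple

Span = Tuple[int, int, str]


def spans_to_bio(text: str, spans: List[Span]) -> List[Tuple[str, str]]:
    """Return (token, tag) pairs using the BIO scheme."""
    tagged = []
    for match in re.finditer(r"\S+", text):
        tok_start, tok_end = match.start(), match.end()
        tag = "O"
        for start, end, label in spans:
            # A token belongs to an entity if it lies entirely inside the span.
            if tok_start >= start and tok_end <= end:
                tag = ("B-" if tok_start == start else "I-") + label
                break
        tagged.append((match.group(), tag))
    return tagged


if __name__ == "__main__":
    text = "Ada Lovelace lived in London"
    spans = [(0, 12, "PER"), (22, 28, "LOC")]
    for token, tag in spans_to_bio(text, spans):
        print(token, tag)
```

Keeping the annotator's character offsets as the source of truth and deriving tags from them means the same annotations can be re-tokenized later without relabeling.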

Image and Video Annotation

Image and video annotation are vital for computer vision. Common techniques include bounding boxes, object tagging, and image classification. They help models detect, recognize, and track objects, which is essential for applications such as autonomous vehicles.
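Bounding-box annotations are often compared using intersection over union (IoU), for example to check whether two annotators, or a model pre-label and a human, drew the same box. The sketch below assumes boxes in (x_min, y_min, x_max, y_max) pixel format; the 0.5 agreement threshold is an illustrative assumption, not a universal standard.

```python
# A minimal sketch: IoU for two axis-aligned boxes (x_min, y_min, x_max, y_max).
# The box format and the 0.5 threshold are illustrative assumptions.
from typing import Tuple

Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    # Overlap rectangle; its width or height is clamped to 0 if boxes don't meet.
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    annotator_1 = (10, 10, 50, 50)
    annotator_2 = (20, 20, 60, 60)
    score = iou(annotator_1, annotator_2)
    print(f"IoU = {score:.3f}", "agree" if score >= 0.5 else "review")
```

Here the two boxes overlap by 900 px² out of a 2,300 px² union, so the pair would be sent for review.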

Audio Annotation

Audio annotation is important for voice AI systems. It involves transcribing speech, listening closely, and analyzing intonation to understand how people speak. This supports applications ranging from speech recognition to virtual assistants. A strong annotation platform is needed to keep the data accurate and relevant.
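Audio annotations are usually stored as time-stamped segments. The sketch below uses a hypothetical `Segment` record (start, end, transcript, intonation label) and checks each segment for a valid time range, a non-empty transcript, and overlap with the previous segment. It assumes single-speaker audio where segments should not overlap; multi-speaker datasets may legitimately allow overlap.

```python
# A minimal sketch: validate time-stamped audio annotation segments.
# The Segment fields and intonation labels are hypothetical.
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    start_s: float      # segment start, in seconds
    end_s: float        # segment end, in seconds
    transcript: str     # transcribed speech
    intonation: str     # e.g. "question" or "statement" (illustrative labels)


def find_problems(segments: List[Segment], duration_s: float) -> List[str]:
    """Report problems, indexing segments in time order."""
    problems = []
    ordered = sorted(segments, key=lambda s: s.start_s)
    for i, seg in enumerate(ordered):
        if not (0 <= seg.start_s < seg.end_s <= duration_s):
            problems.append(f"segment {i}: invalid time range")
        if not seg.transcript.strip():
            problems.append(f"segment {i}: empty transcript")
        # Assumes single-speaker audio, so segments must not overlap.
        if i > 0 and seg.start_s < ordered[i - 1].end_s:
            problems.append(f"segment {i}: overlaps previous segment")
    return problems


if __name__ == "__main__":
    segments = [
        Segment(0.0, 2.5, "Hello there", "statement"),
        Segment(2.0, 4.0, "How are you?", "question"),
        Segment(4.0, 4.0, "", "statement"),
    ]
    for problem in find_problems(segments, duration_s=5.0):
        print(problem)
```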

Challenges in Data Annotation

Scalability is a major problem: the sheer volume of data makes purely manual annotation inefficient. Unstructured data, including text, images, audio, and video, also requires specialized annotation methods and tools.

Linguistic ambiguity, cultural nuances, and the need for specialized knowledge in different domains complicate annotation.

Quality Control Techniques

Effective quality control processes are vital for the accuracy and usefulness of annotated data. Quality control must address human subjectivity, which can cause inconsistencies even when multiple annotators label the same data. Red teaming, in which a separate team independently verifies annotations, can help reduce these issues.
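One common way to handle disagreement between multiple annotators is majority voting with escalation: accept a label when enough annotators agree, and send the remaining items to an independent reviewer. This is a minimal sketch; the two-thirds agreement threshold is an illustrative assumption.

```python
# A minimal sketch: majority-vote adjudication with escalation to review.
# The 2/3 agreement threshold is an illustrative assumption.
from collections import Counter
from typing import Dict, List, Tuple


def adjudicate(
    labels_by_item: Dict[str, List[str]], min_agreement: float = 2 / 3
) -> Tuple[Dict[str, str], List[str]]:
    accepted: Dict[str, str] = {}
    needs_review: List[str] = []
    for item_id, labels in labels_by_item.items():
        if not labels:
            needs_review.append(item_id)  # no annotations at all
            continue
        top_label, top_count = Counter(labels).most_common(1)[0]
        if top_count / len(labels) >= min_agreement:
            accepted[item_id] = top_label
        else:
            needs_review.append(item_id)  # no clear majority
    return accepted, needs_review


if __name__ == "__main__":
    votes = {"img1": ["cat", "cat", "dog"], "img2": ["cat", "dog", "bird"]}
    accepted, needs_review = adjudicate(votes)
    print(accepted, needs_review)
```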

Radiology illustrates the point: there, semi-automated approaches have helped keep annotations consistent, reduced manual workload, and improved model performance. This underlines the ongoing need for quality control in machine learning annotation.

Variability in Annotation Standards

Variations in annotation standards arise from differences in task definitions, annotator subjectivity, and a lack of clear instructions. When annotating sensitive information, complying with regulations such as GDPR is important.

Setting guidelines, regularly training annotators, and automating repetitive tasks all help avoid annotation problems and achieve better results. These measures also aim to protect workers and reduce bias in AI models.

Tools and Technologies for Annotation

Specialized platforms and tools have simplified the annotation of large datasets, and annotation platforms have become widely adopted thanks to their features and capabilities.

Keylabs, the data annotation platform from Keymakr, provides machine learning tools. It supports image, audio, and video annotation, making it a versatile solution for AI projects. With a user-friendly interface and automation capabilities, Keylabs reduces the time and cost of data labeling.

Machine Learning-Assisted Annotation

Machine learning helps automate the annotation process. Automated algorithms can process large amounts of data much faster than manual annotation, which lowers costs and reduces the need for a large team of annotators. Modern models can annotate images, videos, audio files, and text with high accuracy.
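A typical machine learning-assisted workflow has a model propose labels with confidence scores, auto-accepts the confident ones, and routes the rest to human annotators. This sketch is not any specific tool's API; the `PreLabel` structure and the 0.9 threshold are hypothetical choices.

```python
# A minimal sketch of confidence-based routing for model pre-labels.
# PreLabel and the 0.9 threshold are hypothetical, not a real tool's API.
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route(
    pre_labels: List[PreLabel], threshold: float = 0.9
) -> Tuple[List[PreLabel], List[PreLabel]]:
    """Split pre-labels into auto-accepted and human-review queues."""
    auto_accepted = [p for p in pre_labels if p.confidence >= threshold]
    to_humans = [p for p in pre_labels if p.confidence < threshold]
    return auto_accepted, to_humans


if __name__ == "__main__":
    predictions = [
        PreLabel("a", "car", 0.97),
        PreLabel("b", "truck", 0.62),
        PreLabel("c", "car", 0.91),
    ]
    auto, humans = route(predictions)
    print([p.item_id for p in auto], [p.item_id for p in humans])
```

Model confidence scores are not always well calibrated, so it is sensible to spot-check a sample of auto-accepted items as well.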

Open-Source Solutions

- Open-source data annotation tools do not require licensing fees, reducing the annotation cost.

- The code can be modified to meet the specific needs of the project.

- Open tools can be easily integrated with other ML frameworks.

- Open-source tools are suitable for labeling images, text, video, and audio.

Best Practices for Annotation

Effective data annotation is key to creating top-notch AI models. Following best practices ensures that data labeling services provide the consistency and accuracy needed for robust machine learning outcomes.

Guidelines for Annotators

Clear guidelines should be established for annotators. These guidelines should be detailed but easy to understand.

- Define the project goals to achieve the desired results.

- Use simple language and examples.

- Include diagrams and flowcharts for spatial data to improve understanding.

- Highlight special cases to reduce labeling inconsistencies.
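Parts of the guidelines can also be encoded as data so that submitted annotations are checked automatically. The sketch below assumes a hypothetical image-labeling project; the label set and edge-case notes are illustrative, not taken from any real specification.

```python
# A minimal sketch: machine-checkable annotation guidelines.
# The label set and edge-case notes are hypothetical examples.
from typing import Dict, List, Optional

ALLOWED_LABELS = {"car", "truck", "pedestrian", "cyclist"}

EDGE_CASES: Dict[str, str] = {
    "cyclist": "A person riding a bicycle is 'cyclist', not 'pedestrian'.",
    "truck": "Pickup trucks are labeled 'truck'.",
}


def check_annotation(record: Dict[str, str]) -> List[str]:
    """Return guideline violations for one annotation record."""
    label = record.get("label", "").strip()
    if not label:
        return ["missing label"]
    if label not in ALLOWED_LABELS:
        return [f"unknown label '{label}'"]
    return []


def edge_case_hint(label: str) -> Optional[str]:
    """Return the guideline note for a label with a known special case."""
    return EDGE_CASES.get(label)


if __name__ == "__main__":
    for record in [{"label": "car"}, {"label": "bus"}, {}]:
        print(record, check_annotation(record))
    print(edge_case_hint("cyclist"))
```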

Ensuring Consistency

Implementing quality control in annotation workflows helps maintain consistency across datasets. To achieve this:

- Train annotators before they start any assignment, and repeat the training regularly.

- Maintain communication to resolve issues and clarify instructions.

- Use double-checks or multiple annotators to verify accuracy.

- Update instructions so they stay aligned with project goals.

Regular Review Process

Regular reviews and updates to annotation workflows can improve the quality of training data. This includes:

- Spot checks and assessments to monitor quality.

- Feedback loops to correct annotations.

- Maintain communication to quickly resolve annotation issues.
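A spot check can be as simple as drawing a reproducible random sample of annotated items for expert review and estimating the error rate from it. In this sketch, the 5% sample rate, the fixed seed, and the `review_fn` callback (standing in for a human reviewer's verdict) are assumptions.

```python
# A minimal sketch of a spot check on annotated items.
# The 5% rate, the seed, and review_fn are illustrative assumptions.
import random
from typing import Callable, List, Sequence, Tuple


def spot_check(
    item_ids: Sequence[str],
    review_fn: Callable[[str], bool],  # True if the annotation is judged correct
    rate: float = 0.05,
    seed: int = 42,
) -> Tuple[List[str], float]:
    """Review a random sample; return the failing items and the sample error rate."""
    if not item_ids:
        return [], 0.0
    rng = random.Random(seed)  # fixed seed makes the sample reproducible
    k = max(1, round(len(item_ids) * rate))
    sample = rng.sample(list(item_ids), k)
    errors = [item for item in sample if not review_fn(item)]
    return errors, len(errors) / k


if __name__ == "__main__":
    ids = [f"item{i}" for i in range(200)]
    # Hypothetical reviewer verdict: pretend items ending in "7" are mislabeled.
    errors, error_rate = spot_check(ids, lambda item: not item.endswith("7"))
    print(errors, f"{error_rate:.0%}")
```

The failing items feed directly into the feedback loop: they are corrected and, if a pattern emerges, the guidelines are updated.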

We integrate these practices into the data annotation process to produce high-quality training data.

Leveraging Collaboration in Annotation Projects

By combining different methods with teamwork, we can obtain more reliable data, which is an important part of improving AI and ML models.

Multidisciplinary Teams

Multidisciplinary teams are groups of experts from different fields who combine their knowledge and skills to jointly solve complex tasks or projects. This has the following advantages:

- Diversity of perspectives.

- Diversity of options for solving problems.

Assessing the Impact of Annotation on Model Performance

Accurate and consistent data annotation is key to improving model performance. Evaluation methods are used to determine how annotation affects AI model performance; they include a range of metrics, case studies, and strategies for improvement.

Measurement Metrics for Success

In AI model evaluation, several metrics are used to assess the impact of annotation. Precision and recall are critical for evaluating accuracy. Cohen's kappa (for two annotators) and Fleiss' kappa (which extends to multiple annotators) measure inter-annotator agreement, showing how well annotators align on classifications beyond what chance alone would produce.
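As a minimal sketch of these metrics, the code below computes precision and recall of one annotator against a gold set for a chosen positive class, and Cohen's kappa between two annotators, using the standard definition κ = (p_o − p_e) / (1 − p_e), where p_o is observed agreement and p_e is agreement expected by chance. The spam/ham labels are made-up illustration data; Fleiss' kappa is not implemented here.

```python
# A minimal sketch: precision/recall against gold labels, and Cohen's kappa
# between two annotators. Example labels are made up for illustration.
from collections import Counter
from typing import List, Tuple


def precision_recall(pred: List[str], gold: List[str], positive: str) -> Tuple[float, float]:
    tp = sum(p == positive and g == positive for p, g in zip(pred, gold))
    fp = sum(p == positive and g != positive for p, g in zip(pred, gold))
    fn = sum(p != positive and g == positive for p, g in zip(pred, gold))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def cohens_kappa(a: List[str], b: List[str]) -> float:
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement if each annotator labeled independently at their own rates.
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # convention: both used one identical label throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    annotator_a = ["spam", "spam", "ham", "ham", "spam", "ham"]
    annotator_b = ["spam", "ham", "ham", "ham", "spam", "ham"]
    print(precision_recall(annotator_b, annotator_a, positive="spam"))
    print(round(cohens_kappa(annotator_a, annotator_b), 3))
```

In this example the annotators agree on 5 of 6 items (p_o ≈ 0.833), but their label frequencies imply p_e = 0.5, so kappa is about 0.667, noticeably lower than raw agreement.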

Incomplete annotations can lead to biased models and incorrect predictions. Quality checks during annotation ensure the reliability of the data.

Future Trends in Foundation Model Annotation

Automation and AI will significantly change annotation processes. Machine learning can reduce data preparation effort and speed up manual annotation.

Automating repetitive tasks will allow annotators to focus on complex cases.

Eliminating bias, ensuring transparency, and protecting privacy are important ways to maintain ethics in AI models. Ethical and robust governance frameworks are also needed to ensure that models benefit society.

FAQ

What is data annotation?

Data annotation is the process of labeling data to create large, accurately labeled datasets. These datasets are essential for training AI models effectively and for teaching them specific tasks accurately.

Why are foundation models significant in AI development?

These models can perform various tasks with less task-specific training data. They capitalize on large-scale, diverse datasets and advanced architectures, making them versatile across numerous industries.

How does data annotation impact model performance?

Quality annotated data directly influences the accuracy and reliability of AI models. It ensures high performance across varied applications. Accurate annotations help the models learn more effectively from real-world data.

What are some common types of annotation techniques?

Common annotation techniques include text annotation using NLP tools, image and video annotation using object recognition and tagging methods, and audio annotation involving transcription and intonation analysis for voice AI.

What challenges are associated with data annotation?

Data annotation challenges include scalability issues, maintaining quality control, and variability in annotation standards. To overcome these challenges, it is essential to implement robust platforms and tools and effective quality control processes.

What tools and technologies are commonly used for data annotation?

Popular tools and technologies for data annotation include various annotation platforms, machine learning-assisted annotation methods, and open-source solutions. These make AI development more accessible across industries.

What are the best practices for practical annotation?

Best practices for annotation include setting clear guidelines for annotators, ensuring consistency in the annotation process, and conducting regular reviews. Together, these maintain the accuracy and relevance of the training data.

How important is collaboration in annotation projects?

Collaboration in annotation projects is critical. Multidisciplinary teams bring different perspectives and expertise. Feedback loops and iterative processes are essential for continuous improvement, aligning with advanced AI model requirements.

How can the impact of annotation on model performance be evaluated?

The impact of annotation on model performance can be evaluated through success metrics, case studies on annotation's impact, and continuous improvement strategies. This keeps evaluation in step with evolving AI technologies and methodologies.

What future trends are expected in foundation model annotation?

The future of foundation model annotation lies in increased automation and deeper integration with AI. It emphasizes ethical considerations such as addressing bias, ensuring privacy, and maintaining transparency. Preparing for next-generation AI involves adapting to technological advances with scalable and efficient annotation methods.