Complete guide to RLHF for LLMs

Reinforcement Learning from Human Feedback (RLHF) is a key approach to aligning large language models (LLMs) with human values and expectations. Rather than relying solely on automated metrics, RLHF uses human ratings of model outputs to train models to prefer relevant, helpful responses.

This method enables LLMs not only to generate grammatically correct text but also to behave in ways that match real-world user needs, which improves their effectiveness across a variety of applications.

Key Takeaways

- Next-token prediction training alone is not enough to achieve subjective goals such as helpfulness and safety.

- Human preference data and reward models provide targeted alignment.

- PPO with KL penalties helps stabilize the updates in reinforcement learning.

- Open-source tools lower the barriers to fine-tuning for production.

- High-quality labels and robust evaluation are essential for safe deployment.

What is Reinforcement Learning from Human Feedback?

RLHF is an approach to training AI models in which their behavior is adjusted based on human evaluations and preferences. It combines reinforcement learning with preference learning to teach the model to act in ways that people consider correct, safe, and appropriate. A reward model trained on these preferences then guides the model toward high-quality responses.

The RLHF process consists of several stages:

- The model, pre-trained on a large text corpus, is fine-tuned with supervised learning on example responses (supervised fine-tuning, SFT).

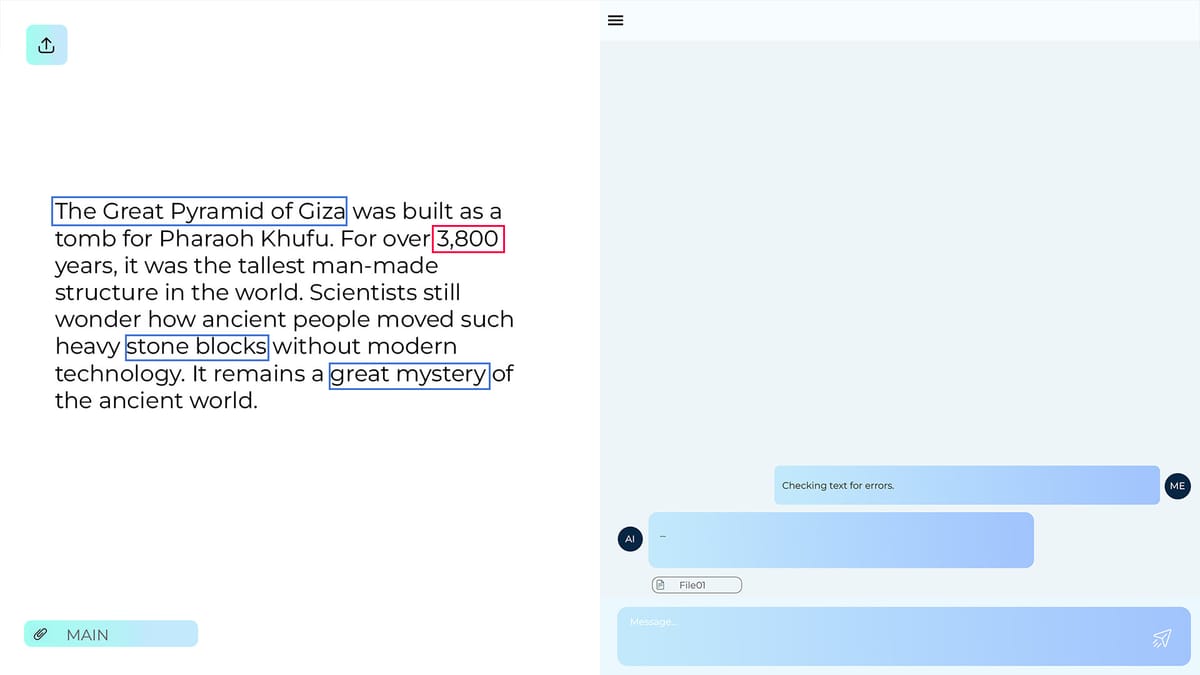

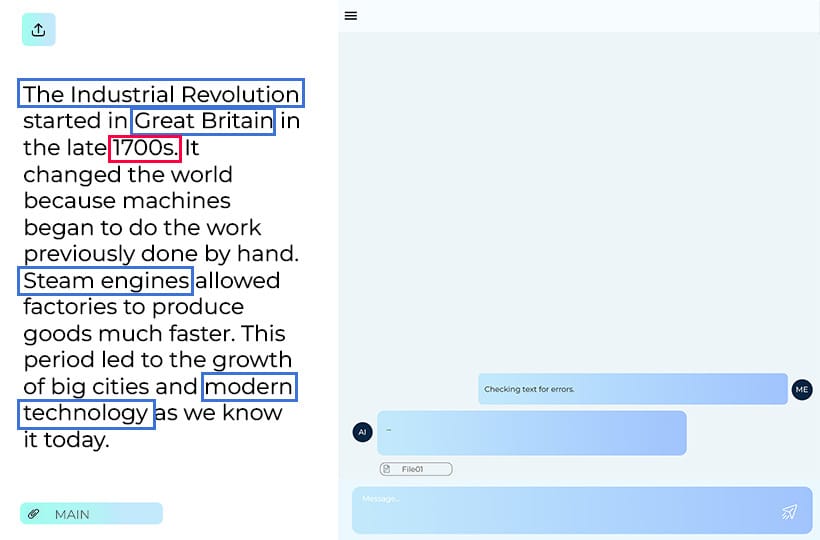

- Annotators evaluate the model's responses, compare several options, and determine the best ones.

- Based on these comparisons, a reward model is built that reflects human preferences.

- The main model is further trained using reinforcement learning to generate responses that humans consider high-quality more often.

As a result, RLHF enables you to align model behavior with human values, reduce unwanted responses, and increase the practical usefulness of artificial intelligence systems in real-world applications.

Building a quality dataset

Building a quality preference dataset is the foundation of RLHF, because this is the data the model uses to learn what people consider best. A well-constructed dataset should have consistent labels and be representative of the real-world scenarios where the language model will be used, so that subsequent reinforcement learning produces stable and predictable results.
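As a minimal sketch, assuming each raw item holds a prompt, two candidate responses, and a list of annotator votes ("A" or "B"), the hypothetical helpers below turn votes into (prompt, chosen, rejected) pairs and drop comparisons where annotators disagree too much. The `PreferencePair` type, the vote format, and the 0.66 agreement threshold are illustrative choices, not a standard.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class PreferencePair:
    """One comparison: annotators preferred `chosen` over `rejected` for `prompt`."""
    prompt: str
    chosen: str
    rejected: str


def majority_label(votes: list[str]) -> tuple[str, float]:
    """Return the most common vote and the fraction of annotators who cast it."""
    label, count = Counter(votes).most_common(1)[0]
    return label, count / len(votes)


def build_pairs(items, min_agreement: float = 0.66) -> list[PreferencePair]:
    """Convert (prompt, response_a, response_b, votes) items into preference pairs,
    skipping comparisons where annotator agreement is below `min_agreement`."""
    pairs = []
    for prompt, resp_a, resp_b, votes in items:
        label, agreement = majority_label(votes)
        if agreement < min_agreement:
            continue  # too contested to be a reliable training signal
        chosen, rejected = (resp_a, resp_b) if label == "A" else (resp_b, resp_a)
        pairs.append(PreferencePair(prompt, chosen, rejected))
    return pairs


# Hypothetical raw annotations: each vote is "A" or "B".
items = [
    ("Explain recursion.", "A function that calls itself on a smaller input.", "Recursion is bad.", ["A", "A", "A"]),
    ("Best pizza topping?", "Pineapple", "Mushrooms", ["A", "B", "A", "B"]),
    ("Translate 'hola'.", "Goodbye", "Hello", ["B", "B", "A"]),
]
pairs = build_pairs(items)
for p in pairs:
    print(p.prompt, "->", p.chosen)
```

The evenly split pizza comparison is dropped, which trades dataset size for label consistency.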

Training a reward model

The reward model maps human preferences to scalar scores, allowing reinforcement learning from human feedback to optimize the model's responses.

From human preferences to a scalar reward function

Transforming human preferences into a scalar reward function allows a reinforcement learning algorithm to optimize the model's behavior. In this step, data about which responses people consider best is used to train a separate reward model.

This model takes a prompt and a candidate response as input and assigns a numerical score reflecting how closely the response matches human preferences. This transforms complex subjective ratings into a formal metric that can be used to optimize the underlying language model automatically.
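A common way to train the reward model on such comparisons is a pairwise Bradley-Terry loss, −log σ(r_chosen − r_rejected), which pushes the score of the preferred response above the score of the rejected one. The sketch below assumes a toy linear reward model over three hand-made features (the hypothetical `reward` and `train` functions); in practice the reward model is usually a language model with a scalar output head.

```python
from __future__ import annotations

import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def pairwise_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry loss: -log P(chosen preferred) = -log sigmoid(r_chosen - r_rejected)."""
    return -log_sigmoid(r_chosen - r_rejected)


def reward(w: list[float], x: list[float]) -> float:
    """Hypothetical linear reward model: a dot product over hand-made features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train(pairs, dim: int, lr: float = 0.5, epochs: int = 200) -> list[float]:
    """Plain gradient descent on the pairwise loss."""
    w = [0.0] * dim
    for _ in range(epochs):
        for x_c, x_r in pairs:
            d = reward(w, x_c) - reward(w, x_r)
            # dLoss/dw = -sigmoid(-d) * (x_c - x_r), so we step in the opposite direction.
            g = math.exp(log_sigmoid(-d))
            w = [wi + lr * g * (c - r) for wi, c, r in zip(w, x_c, x_r)]
    return w


# Hypothetical features: [on_topic, polite, contains_insult]; each pair is (chosen, rejected).
pairs = [
    ([1.0, 1.0, 0.0], [0.0, 1.0, 0.0]),  # on-topic beats off-topic
    ([1.0, 1.0, 0.0], [1.0, 0.0, 1.0]),  # polite beats insulting
    ([0.0, 1.0, 0.0], [0.0, 0.0, 1.0]),
]
w = train(pairs, dim=3)
loss_before = sum(pairwise_loss(0.0, 0.0) for _ in pairs)  # all scores are 0 at init
loss_after = sum(pairwise_loss(reward(w, c), reward(w, r)) for c, r in pairs)
print(w, loss_before, loss_after)
```

After training, the learned weights reward being on-topic and penalize insults, so every chosen response scores higher than its rejected counterpart.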

Calibration layers, temperature scaling, and score normalization

These techniques improve the consistency and stability of reward models in RLHF.

Calibration layers help the model correctly represent the relative scores of responses and reduce systematic bias in predictions.

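Temperature scaling divides the scores (logits) by a temperature T before they are converted to probabilities, which adjusts the "sharpness" of the distribution: T > 1 makes the model less confident in its estimates, and T < 1 makes it more confident.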

Score normalization equalizes the reward values across different examples, which ensures stable optimization during reinforcement learning and allows you to compare the quality of responses across contexts.
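The sketch below illustrates two of these techniques on plain Python floats, under stated assumptions: `preference_prob` turns a reward difference into a preference probability with a temperature T, `fit_temperature` picks T from a small grid by minimizing negative log-likelihood on held-out human labels (one simple form of post-hoc calibration), and `normalize` z-scores a batch of rewards. All names and the grid values are hypothetical.

```python
import math
import statistics


def preference_prob(r_a: float, r_b: float, temperature: float = 1.0) -> float:
    """P(a preferred over b) = sigmoid((r_a - r_b) / T).
    T > 1 pulls probabilities toward 0.5 (less confident); T < 1 sharpens them."""
    z = (r_a - r_b) / temperature
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_temperature(diffs, labels, grid=(0.25, 0.5, 1, 2, 4, 8)):
    """Pick the T from `grid` that minimizes negative log-likelihood of human labels
    (1 = humans preferred a, 0 = humans preferred b) on held-out comparisons."""
    def nll(t):
        total = 0.0
        for d, y in zip(diffs, labels):
            p = min(max(preference_prob(d, 0.0, t), 1e-12), 1 - 1e-12)
            total -= y * math.log(p) + (1 - y) * math.log(1 - p)
        return total
    return min(grid, key=nll)


def normalize(rewards, eps: float = 1e-8):
    """Z-score normalization: shift to zero mean and scale to unit variance."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Held-out reward differences look very confident, but humans agreed only 3 times out of 4.
diffs, labels = [4.0, 4.0, 4.0, 4.0], [1, 1, 1, 0]
t = fit_temperature(diffs, labels)
print(t, preference_prob(4.0, 0.0), preference_prob(4.0, 0.0, t))
print(normalize([1.0, 2.0, 3.0]))
```

Here the raw reward difference implies σ(4) ≈ 0.98 confidence while annotators agreed only 75% of the time, so the fitted temperature (T = 4) softens the estimate to about 0.73.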

Policy optimization with PPO and KL control

PPO clips the probability ratio between the new and old policies to the range [1−ε, 1+ε], which limits how far each update can move the policy. This keeps training stable on noisy reward signals, and PPO is simpler to implement than TRPO.
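The clipped surrogate objective fits in a few lines. This sketch assumes per-token log-probabilities under the new and old policies and precomputed advantage estimates are already available; the function name `ppo_clip_objective` is hypothetical, and ε = 0.2 is a commonly used value rather than a requirement.

```python
import math


def ppo_clip_objective(logp_new, logp_old, advantages, eps: float = 0.2) -> float:
    """Mean of min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A), to be maximized.
    ratio = pi_new(a|s) / pi_old(a|s), computed from log-probabilities."""
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Ratio 1.5 with positive advantage: the gain is capped at 1 + eps.
print(ppo_clip_objective([math.log(1.5)], [0.0], [1.0]))   # ≈ 1.2
# Ratio 0.5 with negative advantage: clipping at 1 - eps gives the more pessimistic value.
print(ppo_clip_objective([math.log(0.5)], [0.0], [-1.0]))  # ≈ -0.8
# Ratio 1.5 with negative advantage: the unclipped (worse) value is kept.
print(ppo_clip_objective([math.log(1.5)], [0.0], [-1.0]))  # ≈ -1.5
```

Taking the minimum of the clipped and unclipped terms makes the objective pessimistic: the policy gains nothing from moving the ratio outside [1−ε, 1+ε], while losses are not capped, as the third call shows.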

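KL penalties help prevent reward hacking. Penalizing the KL divergence between the policy and a frozen reference model (usually the SFT model) anchors the policy's behavior to the reference model's logits and reduces reward-induced drift that can lead to unsafe shortcuts.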
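One common way to apply the penalty, sketched below, is to subtract β times the log-ratio between the policy and the reference model from the reward. It assumes per-token log-probabilities of the sampled response are available from both models; the summed log-ratio is then a single-sample estimate of the KL divergence. The function names and β = 0.1 are hypothetical.

```python
def kl_penalized_reward(rm_score, logp_policy, logp_ref, beta: float = 0.1) -> float:
    """Sequence-level reward: r_RM - beta * sum_t [log pi(y_t) - log pi_ref(y_t)].
    The summed log-ratio is a single-sample estimate of KL(pi || pi_ref)."""
    kl_estimate = sum(lp - lr for lp, lr in zip(logp_policy, logp_ref))
    return rm_score - beta * kl_estimate


def per_token_rewards(rm_score, logp_policy, logp_ref, beta: float = 0.1) -> list:
    """Same penalty spread over tokens, with the reward-model score added at the last token."""
    rewards = [-beta * (lp - lr) for lp, lr in zip(logp_policy, logp_ref)]
    rewards[-1] += rm_score
    return rewards


# Hypothetical log-probabilities for a 3-token response.
logp_policy = [-1.0, -0.5, -2.0]
logp_ref = [-1.2, -1.0, -2.0]
print(kl_penalized_reward(1.0, logp_policy, logp_ref))  # ≈ 0.93
print(per_token_rewards(1.0, logp_policy, logp_ref))    # ≈ [-0.02, -0.05, 1.0]
```

Tokens the policy has made more likely than the reference model receive a small negative reward, so a high reward-model score only pays off if the response stays close to the reference distribution.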

Freezing vs. parameter adaptation

When fine-tuning large language models with RLHF, special attention is paid to the balance between freezing and adapting parameters. Freezing the model's base parameters, either partially or entirely, preserves the language ability and knowledge acquired during pre-training. However, adapting all parameters can be too resource-intensive and risks instability during optimization.

Instead of changing all of the model's parameters, LoRA (Low-Rank Adaptation) keeps the pretrained weights frozen and adds small trainable low-rank matrices. This allows the model to learn from new data or user preferences with far fewer trainable parameters and much lower memory requirements than full fine-tuning.

This approach preserves the base model's capabilities, reduces resource requirements, and enables fast adaptation to human preferences.
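A minimal sketch of a LoRA-adapted linear layer in plain Python, assuming the usual formulation y = Wx + (α/r)·BAx with W frozen, A randomly initialized, and B initialized to zero so that training starts from the base model's behavior. `LoRALinear`, `param_counts`, and the sizes below are illustrative; libraries such as Hugging Face PEFT provide production implementations.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A, B trainable."""

    def __init__(self, W, r: int, alpha: float, seed: int = 0):
        d_out, d_in = len(W), len(W[0])
        rng = random.Random(seed)
        self.W = W  # frozen pretrained weights, shape d_out x d_in
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # r x d_in
        self.B = [[0.0] * r for _ in range(d_out)]  # d_out x r, zero so B A = 0 at start
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self) -> int:
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def param_counts(d_out: int, d_in: int, r: int):
    """(full fine-tuning parameters, LoRA parameters) for one weight matrix."""
    return d_out * d_in, r * (d_in + d_out)


layer = LoRALinear(W=[[1.0, 2.0], [3.0, 4.0]], r=1, alpha=2.0)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: identical to W x because B starts at zero
print(param_counts(4096, 4096, 8))  # (16777216, 65536)
```

For a 4096 × 4096 weight matrix with rank r = 8, LoRA trains 65,536 parameters instead of 16,777,216, about 0.4% of the original.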

Where RLHF improves LLM systems

RLHF enables LLMs to operate safely and in ways that are relevant to users' needs. Based on people's ratings and preferences, the model learns to prioritize high-quality responses, avoid unwanted or harmful outputs, and adapt to the specific context of use.

By combining human feedback with preference learning, RLHF helps LLMs provide safe, relevant, and context-aware responses.

Challenges, risks, and limitations

While RLHF significantly improves the performance of large language models, it also poses several challenges and limitations. These are related to the training process itself, as well as data quality, human factors, and technical limitations.

Evaluating and benchmarking aligned models

Evaluation and benchmarking let you check that an aligned model generates useful, safe answers and measure its performance objectively.

After training with RLHF or other alignment methods, the model is tested using metrics and benchmarks that assess the quality of its answers, its consistency with human preferences, and its ability to solve specific tasks.

Metrics for evaluating models

- Accuracy measures the correctness of answers compared to expected results.

- Usefulness assesses the extent to which an answer meets the user's needs or solves their query.

- Clarity measures how easy the answer is to read and how well it is structured.

- Consistency checks whether the answer is coherent and free of internal contradictions.

- Ethical alignment assesses the risk of harmful content.

- Human preference alignment shows how well the model chooses answers that people consider best.

Metrics help measure current performance and identify weaknesses to improve the model.
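Human preference alignment is often reported as a win rate against a baseline model, and reward-model quality as pairwise accuracy on held-out comparisons. The sketch below assumes judgments have already been collected as the strings "win", "loss", or "tie", and counts ties as half a win; both helper names and that tie convention are assumptions.

```python
def win_rate(judgments):
    """Share of comparisons the model wins against a baseline; ties count as half a win."""
    wins = judgments.count("win")
    ties = judgments.count("tie")
    return (wins + 0.5 * ties) / len(judgments)


def preference_accuracy(score_pairs):
    """Fraction of held-out (chosen_score, rejected_score) pairs ranked correctly."""
    correct = sum(1 for chosen, rejected in score_pairs if chosen > rejected)
    return correct / len(score_pairs)


print(win_rate(["win", "win", "loss", "tie"]))                     # 0.625
print(preference_accuracy([(0.9, 0.1), (0.2, 0.5), (1.0, 0.3)]))  # 0.666...
```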

Benchmarks for evaluating models

- General linguistic benchmarks assess basic language skills.

- Logical and cognitive benchmarks test the ability to reason, solve problems, and draw conclusions.

- Specialized domain benchmarks test knowledge in specific areas such as medicine, law, and finance.

- User interaction benchmarks assess whether the model provides practical and safe answers in a dialogue setting.

- Multimodal benchmarks test the model's ability to work with other data types, such as images and audio.

Benchmarks allow you to compare models, monitor their stability, and determine how well they align with human expectations.

FAQ

What is RLHF, and why is it important now?

RLHF is a method that trains large language models to favor responses that humans find useful and safe. This is important for improving the accuracy, reliability, and ethics of AI in real-world applications.

How does RLHF move models from predicting the next token to aligning with human preferences?

RLHF moves models from simply predicting the next token to aligning with human preferences by using human ratings to train the reward model and optimizing policies so that the generated responses match those preferences.

How does RLHF steer language models toward harmless behavior?

RLHF adapts language models by training them to favor responses that humans rate as safe, ethical, and contextually relevant.

What are the main stages of the RLHF life cycle for language models?

Pre-training, supervised fine-tuning (SFT), collecting human feedback, training the reward model, optimizing the policy with reinforcement learning, and iterative evaluation.

How does RLHF training use preference learning and a reward model to achieve human alignment?

RLHF training leverages human feedback to teach the model to prioritize responses that humans consider correct and safe. The reward model translates these preferences into scalar scores, guiding reinforcement learning for alignment with human values.

How does a reward model learn from human preferences?

A reward model learns from human preferences by predicting which answers people think are best, using data from annotators' ratings or comparisons of answers.

What are the challenges, risks, and limitations of RLHF?

RLHF faces several problems: subjectivity in human assessments, the high cost and complexity of scaling, the risk of bias, noisy data, limited generalizability, and difficulty keeping the model's policy stable.