CARLA Simulator for Autonomous Driving Data Labeling

Creating reliable autonomous driving systems requires enormous amounts of labeled data. However, collecting real-world data on public roads has significant limitations. Test drives are expensive because of fuel, equipment, and driver labor, and annotating the recordings can take months. Furthermore, it is nearly impossible to safely capture critical situations on public roads, such as accidents or a pedestrian suddenly appearing in the dark, which leaves real-world datasets incomplete.

Simulators like CARLA address these problems by creating virtual testing grounds for training artificial intelligence. In a digital environment, the class of every pixel, the distance to every object, and the speed of every vehicle are known to the system in advance. This makes it possible to generate precisely labeled datasets quickly and at a scale limited mainly by available compute, without putting anyone in danger.

Quick Take

- The simulator allows for the creation of rare and dangerous situations that are impossible or too risky to film on real roads.

- Data from cameras, LiDARs, and radars can be synchronized to the same simulation step, giving AI models a consistent, complete view of the world.

- Texture randomization and hybrid datasets help models trained in simulation transfer to the real world.

- Millions of virtual kilometers cost several times less than real-world drives, which involve fuel, drivers, and the risk of accidents.

CARLA Capabilities for Autonomous Transport Development

Simulators turn data preparation from labor-intensive manual work into an automated pipeline. Instead of waiting for rare fog or icy roads, developers can create these conditions with the press of a key. As a result, an autonomous driving system can cover millions of virtual kilometers before it ever drives on real asphalt.

Virtual Environment for Annotation

CARLA is an open-source simulator designed specifically to support autonomous driving research. The main reason for its popularity is the ability to create a wide range of synthetic scenarios. Weather, time of day, the number of pedestrians, and intersection complexity can all be adjusted with a few lines of code through CARLA's Python API.

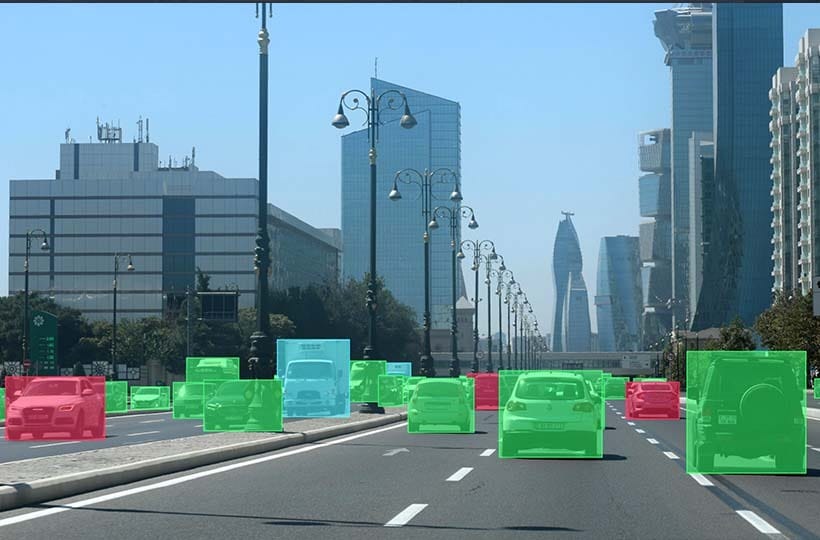

For data labeling, CARLA is convenient because it automatically provides ground truth for every object. In real life, an annotator must manually draw boxes on video frames, whereas in the simulator the computer already knows the coordinates of every car. This makes simulation-based training highly productive: developers receive gigabytes of precise data without manual annotation.
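Turning a known 3D position into an image-space label comes down to the pinhole camera model. The sketch below is a minimal illustration, assuming the point has already been transformed into the camera's local frame using Unreal-style axes (x forward, y right, z up). The focal length is derived from the horizontal field of view and image width, which correspond to the `fov`, `image_size_x`, and `image_size_y` attributes of CARLA's camera blueprints. The function names are hypothetical.

```python
import math
from typing import Optional, Tuple


def focal_length_px(image_width: int, fov_deg: float) -> float:
    """Focal length in pixels for a pinhole camera with horizontal FOV fov_deg."""
    return image_width / (2.0 * math.tan(math.radians(fov_deg) / 2.0))


def project_point(point_cam: Tuple[float, float, float],
                  image_width: int, image_height: int,
                  fov_deg: float) -> Optional[Tuple[float, float]]:
    """Project a point given in the camera frame (x forward, y right, z up,
    metres) to pixel coordinates (u, v). Returns None if the point is
    behind the camera."""
    x_fwd, y_right, z_up = point_cam
    if x_fwd <= 0.0:
        return None
    f = focal_length_px(image_width, fov_deg)
    cx, cy = image_width / 2.0, image_height / 2.0  # assumes centred principal point
    # Pinhole model: right maps to +u, up maps to -v (image rows grow downward).
    u = cx + f * y_right / x_fwd
    v = cy - f * z_up / x_fwd
    return (u, v)
```

For example, with an 800x600 image and a 90 degree FOV, a point 10 m ahead, 2 m to the right, and 1 m up lands at roughly (480, 260). Applying the same projection to the eight corners of an object's 3D box and taking the min/max of the results gives a 2D bounding box.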

Because CARLA is built on Unreal Engine, it renders photorealistic images that closely resemble real camera footage. This helps artificial intelligence learn to recognize the world as if it were looking through a real car camera. The ability to repeat the same complex scenario thousands of times with slight variations lets developers refine driving algorithms systematically.
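Repeating a scenario "with slight variations" works best when each variation is reproducible. A common approach, sketched here under the assumption of a hypothetical `Scenario` record (the field names are illustrative, not CARLA settings), is to derive every perturbation from a per-run seed, so any interesting run can be replayed exactly. In CARLA itself, the Traffic Manager's `set_random_device_seed()` plays a similar role for background traffic.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    """Hypothetical scenario description; field names are illustrative."""
    ego_speed_kmh: float
    pedestrian_count: int
    sun_altitude_deg: float
    spawn_offset_m: float


def make_variation(base: Scenario, seed: int) -> Scenario:
    """Return a slightly perturbed copy of `base`. The same seed always
    yields the same variation, so every run can be replayed."""
    rng = random.Random(seed)  # local RNG: no global random state is touched
    return replace(
        base,
        ego_speed_kmh=base.ego_speed_kmh + rng.uniform(-5.0, 5.0),
        pedestrian_count=max(0, base.pedestrian_count + rng.randint(-3, 3)),
        sun_altitude_deg=min(90.0, max(-90.0, base.sun_altitude_deg + rng.uniform(-10.0, 10.0))),
        spawn_offset_m=base.spawn_offset_m + rng.uniform(-2.0, 2.0),
    )


base = Scenario(ego_speed_kmh=50.0, pedestrian_count=10,
                sun_altitude_deg=30.0, spawn_offset_m=0.0)
variations = [make_variation(base, seed) for seed in range(1000)]
```

Storing only the base scenario and the seed is enough to regenerate any of the thousand variations later.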

Types of Data Obtainable from CARLA

The simulator can imitate the sensors commonly installed on real vehicles, including RGB, depth, and segmentation cameras, LiDAR, radar, GNSS, and IMU. When CARLA runs in synchronous mode, all sensors produce data for the same simulation step, so camera frames line up exactly with LiDAR and radar sweeps.
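In synchronous mode, every piece of sensor data CARLA delivers carries a `frame` number, and callbacks for different sensors may still arrive in any order. The sketch below shows one way to assemble complete multi-sensor samples, assuming each callback has already been reduced to a hypothetical `(sensor_name, frame, payload)` tuple. A bundle is released only once every expected sensor has reported for that frame.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Iterator, Tuple


def bundle_by_frame(messages: Iterable[Tuple[str, int, Any]],
                    sensors: Iterable[str]) -> Iterator[Tuple[int, Dict[str, Any]]]:
    """Group (sensor_name, frame, payload) messages into per-frame bundles.
    A bundle is yielded only when all expected sensors have reported for
    that frame, so downstream code never sees a half-filled sample."""
    expected = set(sensors)
    pending: Dict[int, Dict[str, Any]] = defaultdict(dict)
    for name, frame, payload in messages:
        pending[frame][name] = payload
        if expected.issubset(pending[frame]):
            yield frame, pending.pop(frame)
```

Frames that never become complete, for example because a sensor dropped data, simply stay in `pending`; a production pipeline would also evict stale entries.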

In addition to visual data, CARLA provides precise movement trajectories for all road participants. Because every simulation step can be logged, the dataset records not only where an object is now, but also where it was a second ago and where it goes next. Such synchronization makes it possible to build highly complex datasets in which data from ten cameras and five radars work as a single unit, giving the artificial intelligence a complete picture of its surroundings.
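Once positions are logged at every tick (for example from each actor's `get_transform().location`), the past and future of a trajectory become plain array slices. The hypothetical helper below cuts one actor's track into (history, target) training pairs for trajectory prediction; at a fixed step of 0.05 s, `past=20` would correspond to one second of history.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def trajectory_windows(track: Sequence[Point], past: int,
                       future: int) -> List[Tuple[List[Point], List[Point]]]:
    """Slice one actor's logged positions (one entry per simulation step)
    into (history, target) pairs. `history` holds the current step plus
    `past - 1` earlier ones; `target` holds the next `future` steps."""
    samples = []
    for t in range(past - 1, len(track) - future):
        history = list(track[t - past + 1: t + 1])
        target = list(track[t + 1: t + 1 + future])
        samples.append((history, target))
    return samples
```

Every position in `target` is exact simulator state, so the prediction labels carry no annotation noise.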

Automation Mechanisms

Automatic labeling makes it possible to build huge datasets, but to apply what a model learns in the real world, developers must account for the difference between rendered graphics and real roads.

The Process of Creating Automatic Labels

Automatic annotation in the simulator relies on direct access to the engine. When an object appears in the CARLA world, the system already knows its exact coordinates, class, and dimensions. The user does not need to outline cars or pedestrians manually, because the simulator generates CARLA annotations together with the image itself. Since the labels come straight from the engine's internal state, the ground truth stays consistent from frame to frame.
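As an illustration of building labels directly from engine state, the sketch below turns an object's center, half-extents, and yaw into the eight corners of its oriented 3D box. In CARLA, an actor's `bounding_box` exposes `location` and `extent` (half-sizes), and recent versions also provide `BoundingBox.get_world_vertices()` for this step; the code here is a library-independent version. It assumes yaw is measured from +x toward +y, matching CARLA's rotation convention, and the label dictionary layout is hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def box_corners(center: Vec3, half_extent: Vec3, yaw_deg: float) -> List[Vec3]:
    """Eight corners of an oriented 3D box given its centre, half-sizes along
    the object's own axes, and heading (yaw) around the vertical axis."""
    cx, cy, cz = center
    ex, ey, ez = half_extent
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    corners = []
    for dx in (-ex, ex):
        for dy in (-ey, ey):
            for dz in (-ez, ez):
                # Rotate the local offset by yaw, then translate to the centre.
                corners.append((cx + c * dx - s * dy,
                                cy + s * dx + c * dy,
                                cz + dz))
    return corners


def to_label(class_name: str, center: Vec3, half_extent: Vec3, yaw_deg: float) -> dict:
    """Hypothetical label record built straight from simulator state."""
    return {
        "class": class_name,
        "center": center,
        "size_lwh": tuple(2.0 * e for e in half_extent),
        "yaw_deg": yaw_deg,
        "corners": box_corners(center, half_extent, yaw_deg),
    }
```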

Full control over the environment lets developers request data that is physically impossible to obtain in real life. For example, the simulator can provide a precise mask of a car partially hidden behind a tree, indicating exactly where the car ends and the leaves begin. This gives simulation-based training exceptionally clean labels, free of the inconsistencies and inaccuracies that often arise when real camera footage is annotated by hand.
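One common way to derive occlusion labels from simulator output is a depth test: an object point is visible only if nothing in the depth image is closer to the camera at that pixel. The sketch below assumes object points have already been projected to pixels with their depth along the camera's forward axis (CARLA's depth camera stores planar depth). The decoding formula follows CARLA's documented depth encoding, which packs a 24-bit value into the RGB channels with a 1000 m far plane; the function names and the 0.5 m tolerance are hypothetical choices.

```python
from typing import List, Sequence, Tuple


def decode_carla_depth(r: int, g: int, b: int) -> float:
    """Depth in metres from one pixel of CARLA's depth camera."""
    normalized = (r + g * 256 + b * 256 * 256) / (256 ** 3 - 1)
    return 1000.0 * normalized


def visible_fraction(samples: Sequence[Tuple[int, int, float]],
                     depth_map: List[List[float]],
                     tolerance_m: float = 0.5) -> float:
    """Share of an object's sampled points that are not hidden. Each sample
    is (u, v, depth): pixel column, pixel row, and depth in metres. A point
    counts as visible if the depth image is not noticeably closer there."""
    if not samples:
        return 0.0
    height, width = len(depth_map), len(depth_map[0])
    visible = 0
    for u, v, d in samples:
        if 0 <= u < width and 0 <= v < height and d <= depth_map[v][u] + tolerance_m:
            visible += 1
    return visible / len(samples)
```

For a car 20 m away with one of four sampled points hidden behind a tree trunk 5 m from the camera, the function reports 75 percent visibility.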

Realism and the Domain Gap

Despite high graphics quality, there remains what is called the domain gap: the difference between virtual images and the real world. Artificial intelligence can become accustomed to the idealized colors or specific lighting of the simulator and start making mistakes on a real road. To overcome this barrier, developers use special techniques.

One such technique is domain randomization, in which textures, lighting, and object positions in the simulator are deliberately varied, often in unrealistic ways. This forces the model to focus on the shape of objects rather than on surface details such as color. In addition, developers build hybrid datasets that mix synthetic data with real data. This helps the neural network adapt to the imperfections of real cameras, such as image grain or motion blur, while keeping the advantage of precise annotation from the virtual world.
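Domain randomization is often implemented as a sampler that draws a fresh configuration for every episode. The sketch below samples a few fields that `carla.WeatherParameters` actually exposes (`cloudiness`, `precipitation`, `fog_density`, `wetness`, `sun_altitude_angle`); the ranges are an illustrative choice, and the two appearance knobs at the end are hypothetical parameters that your own tooling would have to apply.

```python
import random
from typing import Dict

# Fields that exist on carla.WeatherParameters; the bounds are illustrative.
WEATHER_RANGES = {
    "cloudiness": (0.0, 100.0),
    "precipitation": (0.0, 100.0),
    "fog_density": (0.0, 100.0),
    "wetness": (0.0, 100.0),
    "sun_altitude_angle": (-90.0, 90.0),
}


def sample_randomized_episode(rng: random.Random) -> Dict[str, object]:
    """Draw one randomized episode configuration."""
    weather = {k: rng.uniform(lo, hi) for k, (lo, hi) in WEATHER_RANGES.items()}
    return {
        "weather": weather,
        # Hypothetical appearance knobs, applied by custom tooling:
        "vehicle_color_rgb": tuple(rng.randint(0, 255) for _ in range(3)),
        "camera_gain": rng.uniform(0.6, 1.6),
    }


rng = random.Random(0)
episodes = [sample_randomized_episode(rng) for _ in range(100)]
# With a live simulator, the weather part could then be applied, e.g.:
# world.set_weather(carla.WeatherParameters(**episodes[0]["weather"]))
```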

Hybrid Data Use in Training

The best results in autonomous driving come from combining simulator capabilities with real-world drives. CARLA is often used at the pretraining stage, the initial phase of model training, where the model learns basic traffic rules and behavioral logic. This can save hundreds of hours of real-world driving, because the car enters the road with a basic understanding of what the world looks like.
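One possible way to move from synthetic-heavy pretraining toward real-data training is to shrink the share of simulator samples in each batch as training progresses. The sketch below is a hypothetical schedule, not a method prescribed by CARLA: the synthetic fraction decays linearly from `start` to `end`, and each batch is drawn from the two pools in that proportion.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def synthetic_fraction(epoch: int, total_epochs: int,
                       start: float = 0.9, end: float = 0.1) -> float:
    """Linearly decay the share of synthetic samples from `start` to `end`."""
    if total_epochs <= 1:
        return end
    progress = min(max(epoch / (total_epochs - 1), 0.0), 1.0)
    return start + (end - start) * progress


def mixed_batch(synthetic: Sequence[T], real: Sequence[T], batch_size: int,
                frac_synthetic: float, rng: random.Random) -> List[T]:
    """Build one shuffled batch containing about `frac_synthetic` simulator samples."""
    n_syn = round(batch_size * frac_synthetic)
    batch = rng.choices(synthetic, k=n_syn) + rng.choices(real, k=batch_size - n_syn)
    rng.shuffle(batch)
    return batch
```

Early epochs then see mostly precisely labeled synthetic frames, while later epochs are dominated by real footage with its camera imperfections.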

The simulator is especially valuable for recreating rare, complex situations known as edge cases. For example, a situation in which a child runs into the road in icy conditions can be modeled again and again. In real life, collecting such data would be dangerous and unethical. Fine-tuning on such critical moments in CARLA makes the final system significantly safer, preparing it for situations that happen once in a million kilometers.
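Edge cases like the icy-road example can be found systematically by sweeping scenario parameters and keeping only the combinations that are genuinely hard. The sketch below uses the textbook stopping-distance formula, reaction distance plus braking distance $v^2 / (2 \mu g)$, to flag cases where a pedestrian appearing at the trigger distance cannot be avoided by braking alone. The friction coefficients (about 0.7 for dry asphalt, 0.1 for ice) and the 1 s reaction time are rough assumptions, and the function names are hypothetical.

```python
from itertools import product
from typing import Iterable, List, Tuple

G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance_m(speed_kmh: float, friction: float,
                        reaction_s: float = 1.0) -> float:
    """Reaction distance plus braking distance v^2 / (2 * mu * g)."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_s + v * v / (2.0 * friction * G)


def critical_cases(speeds_kmh: Iterable[float], frictions: Iterable[float],
                   trigger_distances_m: Iterable[float]) -> List[Tuple[float, float, float]]:
    """(speed, friction, trigger distance) combinations where braking alone
    cannot stop the car before the point where the pedestrian appears."""
    return [(s, mu, d)
            for s, mu, d in product(speeds_kmh, frictions, trigger_distances_m)
            if stopping_distance_m(s, mu) > d]
```

At 36 km/h the car needs roughly 17 m to stop on dry asphalt but about 61 m on ice, so a pedestrian appearing 30 m ahead is a critical case only on the icy road.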

Practical Application and Technical Challenges

The simulator covers the full development cycle, from object recognition to the final steering decision. However, working with such a complex tool has nuances that must be considered for the technology to transfer successfully to real life.

Typical Use Cases

In modern autonomous vehicle development, CARLA serves as the primary testing ground for training and verifying every level of the autonomous stack. Thanks to its high data precision, the simulator is used for three major tasks:

- Training perception models. Using automatically labeled frames to train neural networks to recognize cars, pedestrians, and road signs in difficult conditions.

- Trajectory prediction. Modeling the movement of dozens of cars simultaneously to teach AI to predict where a vehicle in the neighboring lane will be five seconds from now.

- Behavior planning. Testing driving logic in a safe environment. For example, how a car should behave at an unregulated intersection or when an obstacle appears on a high-speed highway.
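For the trajectory prediction task, simulator logs provide exact future positions, so any predictor can be scored automatically. The sketch below, with hypothetical function names, evaluates the simplest baseline, constant-velocity extrapolation, using average displacement error (ADE) and final displacement error (FDE), two widely used metrics for this task.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def constant_velocity_forecast(history: Sequence[Point], steps: int) -> List[Point]:
    """Extrapolate the last observed per-step displacement for `steps` steps."""
    (x0, y0), (x1, y1) = history[-2], history[-1]
    dx, dy = x1 - x0, y1 - y0
    return [(x1 + dx * k, y1 + dy * k) for k in range(1, steps + 1)]


def ade_fde(pred: Sequence[Point], truth: Sequence[Point]) -> Tuple[float, float]:
    """Average and final Euclidean displacement error for equal-length paths."""
    errors = [math.dist(p, t) for p, t in zip(pred, truth)]
    return sum(errors) / len(errors), errors[-1]


pred = constant_velocity_forecast([(0.0, 0.0), (1.0, 0.0)], steps=3)
ade, fde = ade_fde(pred, [(2.0, 0.0), (3.0, 1.0), (4.0, 2.0)])  # a car drifting left
```

A learned model is only worth deploying if it clearly beats such a baseline on scenarios where vehicles change lanes or turn.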

This lets developers test the autonomous stack end to end, checking the car's entire logic at once without risking damage to expensive equipment.

Limitations and Practical Nuances

Despite all the advantages, CARLA is complex software that requires significant resources and attention to detail. One of the main challenges is the high hardware load: running a photorealistic simulation with many sensors requires powerful graphics workstations.

Sensor calibration also matters. In the simulator, cameras and LiDARs work perfectly, but real sensors have errors, tilts, and noise. If virtual sensors are not configured to match the real hardware (focal length, viewing angles), the trained model will not work on a real car. Finally, integrating CARLA with ML pipelines requires a large amount of auxiliary code to launch simulations automatically, collect data, and send it to the server for neural network training.
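Matching a virtual camera to a real one starts with the focal length. CARLA's camera blueprints are configured by horizontal field of view (the `fov` attribute, set for example with `camera_bp.set_attribute("fov", str(fov))`) rather than by an intrinsic matrix, so a calibrated focal length has to be converted. The hypothetical helpers below do that conversion, assuming square pixels and a centred principal point; a real camera whose principal point is off-centre would need extra cropping or remapping.

```python
import math


def carla_fov_from_intrinsics(fx_px: float, image_width_px: int) -> float:
    """Horizontal field of view (degrees) that reproduces a real camera's
    focal length fx, given in pixels, at the same image width."""
    return math.degrees(2.0 * math.atan(image_width_px / (2.0 * fx_px)))


def fx_from_physical(focal_length_mm: float, sensor_width_mm: float,
                     image_width_px: int) -> float:
    """Convert a lens focal length in millimetres to pixels."""
    return focal_length_mm * image_width_px / sensor_width_mm


# Example: a 1920-px-wide camera calibrated at fx = 960 px needs fov = 90 degrees.
fov = carla_fov_from_intrinsics(960.0, 1920)
```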

FAQ

What are the system requirements for running CARLA with high realism?

For comfortable operation with many sensors, plan for a high-end graphics card (roughly NVIDIA RTX 3080 class) and at least 32 GB of RAM, since the simulator runs on the resource-intensive Unreal Engine.

How does CARLA work with maps of real cities?

CARLA road networks are described in the ASAM OpenDRIVE format. Tools such as MathWorks RoadRunner can build digital twins of real interchanges from geodata and export them as maps that can be imported into the simulator for tests.

Does the simulator support night vision or thermal imaging cameras?

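CARLA's built-in cameras include RGB, depth, semantic and instance segmentation, optical flow, and event (DVS) cameras, but there is no dedicated thermal or night-vision sensor. Developers can imitate thermal imaging or infrared effects through custom shaders.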

What is "ScenarioRunner" in CARLA?

It is a separate tool for scripting traffic events, for example: "the car in front brakes sharply when a traffic light goes out at an intersection".

How is the problem of the simulator's "too clean" world solved?

Developers add post-processing to the pipeline: digital sensor noise, motion blur, and camera lens distortion.
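Such post-processing can be as simple as the sketch below, which adds clipped Gaussian sensor noise and a horizontal box-filter motion blur to a grayscale image stored as nested lists. The function names are hypothetical, and a real pipeline would use an image library and calibrate noise levels against the target camera. CARLA's RGB camera blueprint also exposes some built-in post-processing attributes, such as `motion_blur_intensity`.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale rows, pixel values in [0, 255]


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add per-pixel Gaussian noise, clipped back to the valid range."""
    return [[min(255.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row]
            for row in img]


def horizontal_motion_blur(img: Image, kernel: int) -> Image:
    """Average each pixel with its row neighbours (box kernel of width
    `kernel`), imitating blur from sideways camera motion. At the image
    edges only the pixels that exist are averaged."""
    half = kernel // 2
    out = []
    for row in img:
        w = len(row)
        blurred = []
        for i in range(w):
            lo, hi = max(0, i - half), min(w, i + half + 1)
            blurred.append(sum(row[lo:hi]) / (hi - lo))
        out.append(blurred)
    return out
```

Applying blur before noise roughly follows the physical order in a real camera, where motion smears the scene before the sensor adds its own noise.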

Does CARLA support interaction with V2X?

This can be implemented through the API: models receive data on the status of all traffic lights and the positions of other cars directly from the simulation server.

How does CARLA help in testing ethical dilemmas?

The simulator makes it possible to safely script no-win scenarios in which the car must choose between bad outcomes, so developers can analyze the AI's decision logic.