What is an LLM? Complete Guide to Large Language Models [2026]

![What is an LLM? Complete Guide to Large Language Models [2026]](/blog/content/images/size/w1200/2025/12/KMmainX--8-.jpg)

In recent years, large language models (LLMs) have become the backbone of modern AI. They power chatbots, assistants, search engines, business automation tools, scientific research, and even creative platforms. What was an experiment only a few years ago has matured into robust infrastructure for working with information.

Modern LLM-based systems perform a wide range of tasks, including text generation, document analysis, code generation, business process automation, and support for complex research workflows. To understand their potential, it helps to know how they learn, which language model fundamentals underlie their work, and why the transformer architecture has become the standard for such systems.

Key Takeaways

- Large language models learn from massive corpora to generate natural language and enable many applications.

- APIs and open-source foundations lower barriers for enterprise experimentation.

- Practical implementation links architecture, training, and operations to measurable business outcomes.

What is an LLM? A simple definition for beginners

An LLM (large language model) is a type of AI model designed to understand, generate, and transform text. In its simplest form, a large language model is a statistical model trained on vast sets of text data to predict the next word (more precisely, the next token, which can be a word or a word fragment) in a given context.

Such models do not “know” information the way humans do, but they can detect patterns in language, logic, and text structure. This lets them answer questions, write articles, analyze data, create code, translate languages, summarize documents, and even hold a dialogue in a natural style.

What Their Work Is Built On

- Probabilistic text prediction. The model estimates which words are most likely to appear next, based on patterns learned during training.

- Scale of data and parameters. Modern LLMs are trained on trillions of tokens and can contain billions or even trillions of parameters: learned numerical values that encode language patterns.

- The transformer architecture. Most modern models are built on the transformer, which processes text in parallel and interprets each part of it in context.
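The following minimal Python sketch makes "probabilistic text prediction" concrete. It assumes the model has already produced one raw score (a logit) for each candidate token. The tiny vocabulary and the scores are made up for illustration. A softmax turns those scores into a probability distribution over possible next tokens.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the max improves numerical stability without changing the result.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores a model might assign to candidate next tokens
# after the context "The cat sat on the".
vocab = ["mat", "roof", "dog", "banana"]
logits = [3.2, 2.1, 0.3, -1.5]

probs = softmax(logits)
for token, p in sorted(zip(vocab, probs), key=lambda t: -t[1]):
    print(f"{token:>7}: {p:.3f}")
```

The model never picks a word with certainty. It outputs a full distribution, and a separate decoding step chooses from it.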

Why LLMs have become so powerful

The key to their effectiveness is the combination of:

- Huge size of data corpora.

- Powerful computing resources.

- Flexible transformer architecture.

- Fine-tuning for specific tasks.

- Integration with search engines, logic tools, and external modules.

How large language models work

To understand how large language models work, it is worthwhile to consider the processes that underlie their learning, operation, and interaction with text. Although on the surface the LLM appears to be an “intelligent conversationalist,” in reality, the model performs complex mathematical operations built on the fundamental principles of machine learning.

- Training on large-scale data corpora.

Any LLM begins with a pre-training stage, during which the model learns to predict the next token in a sequence. This is the essence of the basic large language model definition: the system learns the structures of language by analyzing trillions of tokens.

At this stage, key language model fundamentals are formed:

- Understanding of grammar.

- Logical connections between words.

- Semantic patterns.

- Factual associations found in texts.

None of this makes the model “conscious”; it simply optimizes the parameters to better predict the text.
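Real pre-training adjusts billions of neural-network weights by gradient descent. The objective itself can be illustrated with a much simpler stand-in. The toy sketch below is not how any production LLM is trained. It estimates next-word probabilities by counting word pairs (a bigram model) in a tiny made-up corpus. It then scores a sentence with the average negative log-likelihood, also called cross-entropy, which is the quantity pre-training tries to minimize.

```python
import math
from collections import Counter, defaultdict

# Tiny made-up training corpus.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat lay on the rug",
]

# Count how often each word follows each preceding word.
pair_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for prev, nxt in zip(words, words[1:]):
        pair_counts[prev][nxt] += 1

def next_word_prob(prev, nxt):
    """P(nxt | prev) estimated from counts (no smoothing, so unseen pairs get 0)."""
    counts = pair_counts[prev]
    total = sum(counts.values())
    return counts[nxt] / total if total else 0.0

def cross_entropy(sentence):
    """Average negative log-probability of each next word; lower is better."""
    words = sentence.split()
    losses = [-math.log(next_word_prob(p, n)) for p, n in zip(words, words[1:])]
    return sum(losses) / len(losses)

print(next_word_prob("the", "cat"))
print(round(cross_entropy("the cat sat on the rug"), 3))
```

There is no smoothing here, so a sentence containing an unseen word pair makes `math.log(0)` raise an error, which corresponds to an infinite loss. Neural LLMs avoid this by assigning every token some nonzero probability.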

- Transformer architecture: the core of an LLM.

To explain how LLMs work, it is necessary to understand the role of the transformer architecture. The transformer is built around two key mechanisms:

- Self-attention: lets the model interpret each word in the context of the entire sentence or document. This is how the LLM handles long-range dependencies, for example, what a pronoun refers to or how several paragraphs are related.

- Feed-forward networks: process the representation at each position independently, turning the context gathered by attention into richer mathematical representations.

This architecture enabled scaling the models to billions and trillions of parameters, making them effective in a wide range of tasks.
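The two mechanisms can be sketched in plain Python. This is a minimal, single-head illustration under simplifying assumptions. The token vectors and weight matrices are small made-up numbers. Real transformers add several things this sketch leaves out:

- multiple attention heads;
- much larger learned projections;
- residual connections and layer normalization;
- in decoder-only LLMs, a causal mask.

Scaled dot-product attention lets each position mix in information from every other position. The feed-forward network then transforms each position on its own.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def softmax(row):
    mx = max(row)
    exps = [math.exp(x - mx) for x in row]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence x (n x d)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Score every query position against every key position.
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # each row sums to 1
    return matmul(weights, v), weights

def feed_forward(x, w1, w2):
    """Position-wise feed-forward network: ReLU(x W1) W2, applied to each row."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

# Three toy token vectors of dimension 2 (made-up values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]

attended, weights = self_attention(x, identity, identity, identity)
out = feed_forward(attended,
                   [[1.0, -1.0, 0.5], [0.5, 1.0, -1.0]],   # W1: 2 x 3
                   [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])  # W2: 3 x 2
print([round(w, 3) for w in weights[0]])
print(len(out), len(out[0]))
```

Every position's attention weights are computed at once from matrix products. This is what allows the parallel processing mentioned above.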

- Mathematical prediction, not “thinking”.

During a response, the LLM determines the most likely next token. This is a key point: the model does not think or make decisions in the human sense; it calculates a probability distribution. The process looks like this:

- The user gives a query.

- The model splits the text into tokens and converts them into internal vectors (embeddings).

- Self-attention determines the context.

- The model predicts the probability of each possible next token.

- A token is selected from that distribution and appended to the text, and the steps repeat until the response is complete.
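The steps above repeat once per generated token: each new token is appended to the context and fed back in. The sketch below shows that autoregressive loop with greedy decoding, which always takes the most likely token. `TOY_TABLE` and `toy_next_token_probs` are hypothetical stand-ins for a real model's forward pass. They simply look up a hand-written table.

```python
# Hypothetical stand-in for a trained model: maps the last token to a
# probability distribution over the next token. A real LLM conditions on
# the whole context through self-attention, not just the last token.
TOY_TABLE = {
    "<start>": {"Large": 0.7, "Small": 0.3},
    "Large": {"language": 0.9, "models": 0.1},
    "language": {"models": 0.95, "<end>": 0.05},
    "models": {"predict": 0.6, "<end>": 0.4},
    "predict": {"tokens": 0.8, "<end>": 0.2},
    "tokens": {"<end>": 1.0},
    "Small": {"models": 1.0},
}

def toy_next_token_probs(context):
    return TOY_TABLE[context[-1]]

def generate_greedy(max_new_tokens=10):
    """Autoregressive generation: predict, pick, append, repeat."""
    context = ["<start>"]
    for _ in range(max_new_tokens):
        probs = toy_next_token_probs(context)
        next_token = max(probs, key=probs.get)  # greedy: most likely token
        if next_token == "<end>":
            break
        context.append(next_token)
    return context[1:]  # drop the start marker

print(" ".join(generate_greedy()))  # Large language models predict tokens
```

Greedy decoding is only one option. Many systems sample from the distribution instead, which is where generation parameters such as temperature come in.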

- Additional training and specialization.

- Instruction tuning: models are trained to respond correctly to clear instructions.

- Reinforcement Learning from Human Feedback (RLHF): people evaluate the answers, and the model learns from that feedback.

- Focused additional training: adaptation to narrow areas such as law, medicine, or cybersecurity.
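In RLHF, human judgments are often collected as comparisons between two answers. A reward model is then trained so that the preferred answer scores higher. The sketch below shows one common formulation, a Bradley-Terry style pairwise loss. The reward scores are made-up numbers standing in for a reward model's outputs. The later reinforcement-learning step, which optimizes the LLM against this reward, is not shown.

```python
import math

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)).

    Small when the human-preferred answer already scores higher, large when
    the ranking is wrong. This direct formula is fine for moderate margins;
    very negative margins would overflow math.exp.
    """
    margin = reward_chosen - reward_rejected
    # log(1 + exp(-margin)) is equal to -log(sigmoid(margin)).
    return math.log1p(math.exp(-margin))

# Hypothetical reward-model scores for two answers to the same prompt.
print(round(pairwise_preference_loss(2.0, -1.0), 4))  # ranking agrees with humans
print(round(pairwise_preference_loss(-1.0, 2.0), 4))  # ranking disagrees
```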

- Integration with tools and modalities.

- Search engines,

- External knowledge bases,

- Modular tools (code analysis, planning, logic),

- Multimodal capabilities (images, video, audio).
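Integration with an external knowledge base often takes the form of retrieval-augmented prompting: relevant documents are found and placed in the model's context ahead of the question. The sketch below makes several simplifying assumptions:

- `KNOWLEDGE_BASE` is a tiny hypothetical in-memory collection.
- Simple word overlap stands in for the embedding-based search that production systems typically use.
- The prompt template is hypothetical.
- The step that sends the prompt to a model is omitted.

```python
import re

# Hypothetical in-memory knowledge base.
KNOWLEDGE_BASE = [
    "The transformer architecture relies on self-attention.",
    "LoRA fine-tunes a model by training small low-rank matrices.",
    "RLHF uses human preference comparisons to train a reward model.",
]

def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, docs, top_n=1):
    """Rank documents by how many words they share with the question."""
    q = words(question)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:top_n]

def build_prompt(question, docs):
    """Place retrieved documents in the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {question}"

print(build_prompt("How does LoRA fine-tune a model?", KNOWLEDGE_BASE))
```

Because the retrieved text is supplied at query time, the knowledge base can be updated without retraining the model.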

Training, fine‑tuning, and alignment

Performance, infrastructure, and developer access

1. Performance of Large Language Models

The high performance of LLMs is the result of a combination of advanced algorithms, a scalable transformer architecture, and a huge number of parameters. The term performance refers not only to the speed of text generation but also to the quality of answers, the accuracy of predictions, and the ability to perform complex cognitive tasks. Key aspects of performance:

- Scale of parameters and data. Modern LLMs range from billions to trillions of parameters. Larger models trained on enough data generally capture more language patterns during pre-training, which tends to improve accuracy and flexibility across different contexts.

- Contextual memory. Thanks to self-attention in the transformer architecture, LLMs can take long contexts into account when generating text. Depending on the model, this ranges from thousands to hundreds of thousands of tokens, up to a fixed context-window limit. This lets the model interpret multi-step queries and long dialogues more accurately.

- Generation quality. How well the text matches reality, logic, and the intended style depends heavily on fine-tuning and alignment. Methods such as supervised fine-tuning (SFT), direct preference optimization (DPO), and reinforcement learning from AI feedback (RLAIF) help shape the model's behavior and keep its answers relevant.

- Multimodal capabilities. Many modern LLMs can process not only text but also images, audio, and video. This significantly expands their scope, for example to generating video explanations, automated document analysis, and multimodal chat assistants.
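For a feel of where parameter counts come from, there is a common back-of-the-envelope estimate for a GPT-style decoder. The transformer blocks hold roughly 12 × L × d² weights, where L is the number of layers, d is the model width, and the feed-forward width is 4d. The token embedding table adds V × d, where V is the vocabulary size. The sketch below applies that approximation. It ignores biases, layer norms, and positional embeddings, and it assumes the output layer shares weights with the embeddings. Treat the result as an estimate, not an exact count.

```python
def estimate_params(n_layers, d_model, vocab_size):
    """Approximate parameter count of a GPT-style decoder-only transformer.

    Assumes, per layer, 4*d^2 for the attention projections (Q, K, V, output)
    and 8*d^2 for a feed-forward block with hidden width 4*d. Also assumes one
    embedding matrix of size vocab_size*d shared with the output layer.
    Biases, layer norms, and positional embeddings are ignored.
    """
    per_layer = 4 * d_model**2 + 8 * d_model**2
    return n_layers * per_layer + vocab_size * d_model

# A configuration in the style of GPT-2 small: 12 layers, width 768, ~50k vocabulary.
print(f"{estimate_params(12, 768, 50257):,}")  # 123,532,032
```

The result is close to the roughly 124 million parameters commonly cited for GPT-2 small. Growing the width d has a quadratic effect on the count.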

2. Infrastructure for LLMs

To understand how LLMs work at real-world scale, it is important to consider the infrastructure behind them: the hardware and software used to train, run, and scale them. Components of modern infrastructure:

Computational resources.

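- GPU/TPU clusters: training trillion-parameter models requires thousands of specialized processors.

- Distributed training: the workload is split across many nodes, for example by sharding the data, the model's layers, or individual weight matrices. This speeds up computation and makes it possible to train models too large for a single device.

- Memory optimization: two techniques are widely used. ZeRO partitions optimizer states, gradients, and parameters across data-parallel workers. FlashAttention computes exact attention with less memory traffic. Together, they make larger models and longer contexts practical without sacrificing accuracy.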
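Distributed training comes in several forms. The simplest, data parallelism, gives each worker a copy of the model and a different slice of the batch, then averages the workers' gradients. The sketch below simulates this on one machine. It uses a made-up setup chosen so the arithmetic is easy to check: a one-parameter linear model with a mean-squared-error loss. With equal-sized shards, the averaged gradient matches the full-batch gradient exactly. Real systems perform the averaging with a collective all-reduce across devices.

```python
def mse_gradient(w, xs, ys):
    """d/dw of mean((w*x - y)^2) over a batch."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n

# Toy batch generated from y = 3x; the model starts at w = 0.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [3 * x for x in xs]
w = 0.0

n_workers = 4
shard = len(xs) // n_workers  # assumes the batch divides evenly

# Each simulated worker computes a gradient on its own slice of the batch.
worker_grads = [
    mse_gradient(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
    for i in range(n_workers)
]
averaged = sum(worker_grads) / n_workers  # stands in for the all-reduce step

full_batch = mse_gradient(w, xs, ys)
print(averaged, full_batch)

# One synchronized SGD step, identical on every worker.
learning_rate = 0.01
w -= learning_rate * averaged
```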

Cloud platforms and services.

- Cloud providers offer ready-made infrastructure for pre-training, fine-tuning, and inference.

- Autoscaling adjusts capacity to demand, which is crucial for handling a large number of simultaneous requests.

Data pipelines and storage.

- High-throughput data processing and storage systems handle pre-training corpora of trillions of tokens.

- LLM applications can connect to streaming or frequently updated data sources, for example through retrieval at query time. This provides current information without retraining the model.

API and service layers.

- Services provide REST or gRPC APIs for integrating LLMs into business processes.

- Containerization (Docker) and orchestration (Kubernetes) enable the rapid deployment of models across various environments.

3. Developer Access and Usability

No explanation of LLMs is complete without considering how developers access models and integrate them into their products. Key aspects of access:

API access.

- Developers can connect to LLMs via REST or gRPC APIs, which allow fast integration into web and mobile applications.

- APIs typically expose generation parameters, such as temperature, maximum tokens, and top-k sampling, that control how text is produced. These are set per request and are distinct from fine-tuning, which changes the model's weights.
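Temperature and top-k act on the model's next-token distribution at generation time. The sketch below shows one common way they are applied:

1. The logits are divided by the temperature; lower values sharpen the distribution.
2. Every token outside the k highest-scoring ones is discarded.
3. A token is sampled from what remains.

The vocabulary and logits are invented for illustration. Individual APIs may implement or name these controls differently, and some treat a temperature of 0 as greedy decoding rather than rejecting it as this sketch does.

```python
import math
import random

def sample_next_token(vocab, logits, temperature=1.0, top_k=None, rng=random):
    """Sample one token using temperature scaling and optional top-k filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Keep only the k highest-scoring candidates (all of them if top_k is None).
    ranked = sorted(range(len(vocab)), key=lambda i: scaled[i], reverse=True)
    keep = ranked[:top_k] if top_k else ranked
    # Softmax weights over the surviving candidates (choices() normalizes them).
    m = max(scaled[i] for i in keep)
    weights = [math.exp(scaled[i] - m) for i in keep]
    choice = rng.choices(keep, weights=weights, k=1)[0]
    return vocab[choice]

vocab = ["mat", "roof", "dog", "banana"]
logits = [3.2, 2.1, 0.3, -1.5]

rng = random.Random(0)  # fixed seed so the run is repeatable
print([sample_next_token(vocab, logits, temperature=0.7, top_k=2, rng=rng) for _ in range(5)])
```

With `top_k=1`, sampling always returns the single most likely token, which is equivalent to greedy decoding.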

SDKs and libraries.

- Official SDKs (for example, for Python, JavaScript, Java, and C#) let developers use LLMs without in-depth knowledge of the internal transformer architecture.

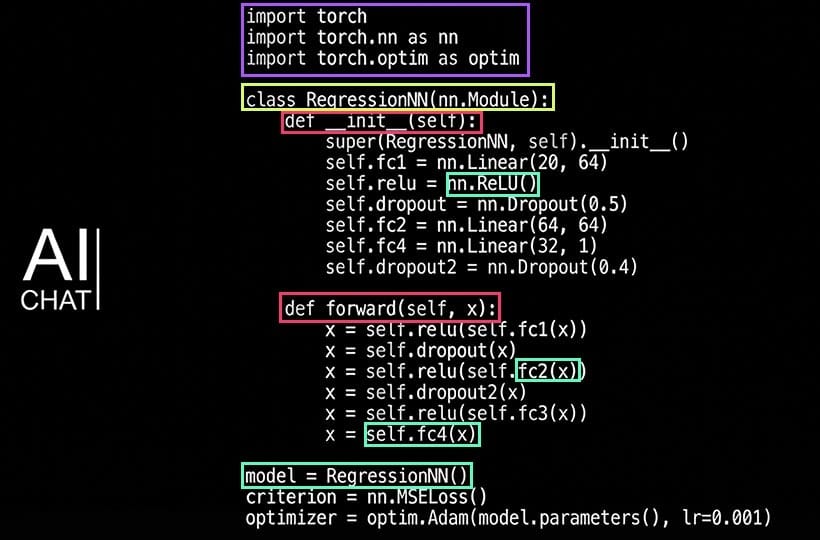

- Support for popular ML frameworks (PyTorch, TensorFlow, JAX) provides flexibility in fine-tuning and developing custom solutions.

Learning Resources and Community Support.

- Documentation, code examples, and integrated pipelines for fine-tuning and alignment help developers understand the basics of LLMs.

- Communities actively share best practices for using large language models in production.

Security and access control.

- Multi-level authentication and model access restrictions are critical for enterprise applications.

- Customizable content filters and alignment settings help keep text generation safe.

Summary

Large language models continue to evolve toward greater versatility, security, and integration with different data types. The future of LLMs is likely to include multimodal models that work with text, images, audio, and video at the same time. It also points toward closer integration with external knowledge bases and decision-making tools.

Model development aims to improve accuracy, consistency, and adaptability through new fine-tuning and alignment methods, automated learning, and AI feedback. Developers will gain even more options to customize models for specific business and scientific tasks, while LLMs remain flexible, scalable, and capable of performing complex cognitive tasks.

FAQ

What is a Large Language Model (LLM)?

A Large Language Model is an AI system trained on vast amounts of text to predict and generate language. It captures linguistic patterns and context, which it applies to tasks such as text generation and analysis.

How do LLMs work?

LLMs work by predicting the next token in a sequence using probabilities learned during training. The process relies on self-attention and feed-forward layers in the transformer architecture, which enable models to understand context and long-range dependencies.

What are the basics of LLMs?

The basics of LLMs include training on massive datasets and core language model fundamentals such as tokenization, embeddings, and contextual prediction. Together, these allow models to generate coherent and contextually relevant text.
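Tokenization and embeddings can be illustrated with a toy example. The sketch assumes a simple word-level tokenizer with a hand-built vocabulary and a small random embedding table. Real LLMs differ in two ways. They use learned subword tokenizers, such as byte-pair encoding. Their embedding tables are learned during training, and each vector often has thousands of dimensions.

```python
import random

def build_vocab(texts):
    """Assign an integer ID to every distinct word; ID 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

def tokenize(text, vocab):
    """Map each word to its ID, falling back to <unk> for unseen words."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

vocab = build_vocab(["language models predict the next token"])

# Embedding table: one vector per token ID. Random here; learned in a real model.
dim = 4
rng = random.Random(42)
embeddings = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(len(vocab))]

ids = tokenize("Models predict the future", vocab)
vectors = [embeddings[i] for i in ids]
print(ids)  # [2, 3, 4, 0]
print(len(vectors), len(vectors[0]))  # 4 4
```

Every later stage of the model, including self-attention, operates on these vectors rather than on the raw text.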

What is the role of transformer architecture in LLMs?

Transformer architecture provides the structural foundation for LLMs, using self-attention to process sequences in parallel. It enables models to capture complex dependencies in text efficiently, making them highly scalable.

What is pre-training in LLM development?

Pre-training exposes the model to large text corpora so that it learns general language patterns. This stage establishes a foundation for later adaptation through fine-tuning and alignment.

What is fine-tuning, and why is it important?

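Fine-tuning adapts an LLM to specific tasks or domains. Common methods include supervised fine-tuning (SFT), low-rank adaptation (LoRA), direct preference optimization (DPO), and reinforcement learning from AI feedback (RLAIF). This tailoring helps the model deliver accurate and relevant outputs for its intended use.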
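LoRA shows how fine-tuning can be made cheaper. The pretrained weight matrix W (d × k) stays frozen. Only two small matrices are trained: A (r × k) and B (d × r). The layer then computes h = W·x + (alpha / r)·B·A·x. B starts at zero, so the adapted layer initially behaves exactly like the original. The class name `LoRALinear`, the stand-in weights, and the chosen sizes are hypothetical. No training loop is shown.

```python
import random

def matvec(m, v):
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

class LoRALinear:
    """Linear layer h = W x + (alpha / r) * B (A x), with W frozen.

    Only A (r x k) and B (d x r) would be trained. B starts at zero, so the
    adapted layer initially behaves exactly like the pretrained one.
    """

    def __init__(self, W, r, alpha, seed=0):
        rng = random.Random(seed)
        d, k = len(W), len(W[0])
        self.W = W  # frozen pretrained weights, d x k
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

d, k, r = 64, 64, 4
W = [[0.01 * (i - j) for j in range(k)] for i in range(d)]  # stand-in for pretrained weights
layer = LoRALinear(W, r=r, alpha=8)
x = [1.0] * k

print(layer.forward(x) == matvec(W, x))  # True: B is zero at initialization
print(layer.trainable_params(), "trainable vs", d * k, "frozen")  # 512 trainable vs 4096 frozen
```

In this configuration, only 512 values are trainable, compared with 4,096 frozen weights in W. The savings grow with larger matrices and small ranks r.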

What is alignment in LLMs?

Alignment shapes LLM behavior to match human expectations and safety standards. Proper alignment helps the model follow ethical guidelines in practical use.

How does infrastructure affect LLM performance?

High-performance LLMs rely on GPU/TPU clusters, distributed training, and cloud services. The right infrastructure enables fast inference, scalability, and reliable access for developers building on these models.

How do developers access and use LLMs?

Developers typically access LLMs via APIs, SDKs, or libraries that provide tools for integration, fine-tuning, and parameter control. This accessibility lets them apply LLMs without a deep understanding of their internal mechanics.

What is the future direction of LLMs?

LLMs are moving toward multimodal capabilities, deeper alignment, and seamless integration with external tools. As they evolve, these core fundamentals will continue to drive practical AI applications.