Training AI Models with Synthetic Data: Best Practices

The growing importance of synthetic data in AI training and model development is undeniable. As the demand for robust AI solutions continues to surge, artificial data is becoming a game-changer in the field.

Synthetic data refers to artificially created information that mimics real-world patterns without using actual observations. It's revolutionizing AI training by offering a cost-effective, scalable, and diverse alternative to traditional data collection methods. This approach is particularly valuable when real-world data is limited, expensive, or sensitive.

The success of AI models hinges on large, diverse, and high-quality datasets. Synthetic data fills this need by providing AI developers with an endless supply of training material. From healthcare to finance, industries are leveraging synthetic data to push the boundaries of what's possible in AI development.

Key Takeaways

- Synthetic data is predicted to make up 60% of AI training data by 2024

- It offers cost-effectiveness, scalability, and diversity in AI development

- Synthetic data is crucial when real-world data is limited or sensitive

- It enables AI models to encounter a wide range of scenarios and edge cases

- Industries like healthcare and finance are already benefiting from synthetic data

Understanding Synthetic Data in AI

Synthetic data is vital for training AI models. It's artificially generated and mimics real-world data, opening up new avenues for innovation. Let's delve into the realm of synthetic data types and their effects on AI advancement.

Definition and Types of Synthetic Data

Synthetic data is artificially created to replicate real-world data attributes. There are three primary types:

- Fully synthetic: Created without using real data

- Partially synthetic: Replaces sensitive elements in real datasets

- Hybrid synthetic: Combines real and artificial data

These types meet various needs in AI model training, from privacy to data augmentation.

Importance in AI Model Training

Synthetic data is crucial for AI development. It tackles issues like data scarcity, privacy, and bias.

Synthetic data speeds up analytics development, eases regulatory hurdles, and cuts data acquisition costs.

Comparison with Real-World Data

Real and synthetic data each have unique benefits. Synthetic data is flexible, scalable, and allows control over data characteristics. Real-world data, however, offers authenticity and mirrors actual scenarios. The choice between them hinges on project-specific needs.

| Aspect | Real Data | Synthetic Data |

|---|---|---|

| Privacy | May contain sensitive information | Privacy-friendly, no PII |

| Availability | Often limited or restricted | Can be generated as needed |

| Bias | May contain inherent biases | Can be controlled for fairness |

| Cost | Can be expensive to acquire | Cost-effective to produce |

Advantages of Using Synthetic Data for AI Training

Synthetic data brings significant benefits to AI development and training. This innovative method of data creation tackles the challenges of traditional data collection. It helps overcome the limitations of real-world datasets, thereby improving AI model performance.

One key advantage of synthetic data is its ability to produce vast amounts of training examples. You can generate from 10,000 to a billion examples, providing AI models with a rich, diverse dataset. This is especially beneficial for training autonomous systems like self-driving cars, where companies like Waymo and Cruise use simulations to create synthetic LiDAR data.

Synthetic data is exceptional at simulating rare events, essential for AI training. It also ensures perfect annotation, automatically labeling each object in a scene. This significantly cuts down on the costs of data labeling, making it a cost-effective solution over time.

- Improves data diversity in AI training

- Reduces bias in AI models

- Optimizes privacy and security

- Enables performance testing and scalability scenarios

- Aids in trend forecasting and business outcome prediction

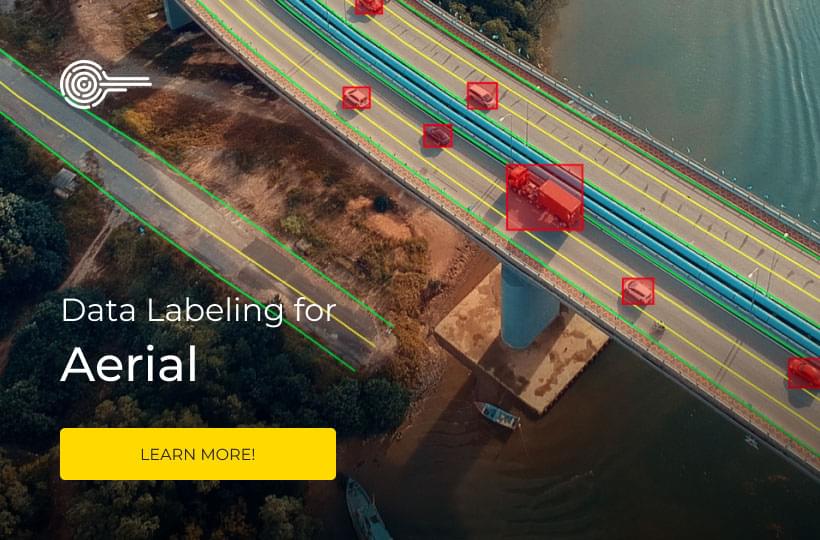

Synthetic data's versatility is invaluable across industries like automotive, drones, medicine, and fields requiring privacy, such as healthcare. It's particularly beneficial when real historical data is scarce or hard to obtain. By integrating synthetic data into AI development, you can foster innovation, enhance research, and build more robust, reliable AI systems.

Synthetic Data in Model Training: Techniques and Approaches

Synthetic data is vital in modern AI training. We'll delve into the essential techniques and approaches for creating and integrating artificial datasets into machine learning frameworks.

Generative Models for Synthetic Data Creation

Generative models lead the way in synthetic data creation. Generative Adversarial Networks (GANs) are the top choice for synthesizing data across various domains. They are adept at producing realistic images, videos, and even tabular data.

Data Augmentation Strategies

Data augmentation boosts existing datasets by generating new, slightly altered versions of original data points. This method enhances model robustness and generalization. Common strategies include image rotation, flipping, and adding noise to audio signals.

Quality Control Measures

Ensuring synthetic data quality is crucial for effective AI training. Researchers validate synthetic datasets against real-world benchmarks and check that statistical properties align with authentic data. This validation process ensures the integrity and usefulness of the artificially generated information.

| Technique | Application | Benefit |

|---|---|---|

| GANs | Image synthesis | High-quality, diverse outputs |

| Variational Autoencoders | Text generation | Improved data privacy |

| SMOTE | Balancing datasets | Addresses class imbalance |

By utilizing these techniques, AI researchers and developers can create high-quality synthetic datasets. These datasets improve model performance while addressing data scarcity and privacy concerns.

Ensuring Data Quality and Representativeness

Data quality and representativeness are key when using synthetic data for AI training. It's essential to test and validate your synthetic datasets thoroughly. This ensures they mirror real-world scenarios accurately. Such an approach keeps your AI models reliable and boosts their performance across different applications.

For top-notch data quality, following industry standards and best practices is a must. This means conducting detailed data quality checks, using various data sources, and regularly reviewing synthetic datasets. By doing so, you can create synthetic data that closely matches real-world data.

Representativeness is vital in synthetic data validation. Your synthetic datasets must capture the diversity and complexity of real-world situations. This includes demographic variations, edge cases, and rare events often missing in traditional datasets.

Key Strategies for Synthetic Data Validation

- Compare statistical properties with real-world data

- Use domain-specific metrics to assess accuracy

- Employ expert evaluations for qualitative assessment

- Validate execution results for code-related synthetic data

- Implement model audit processes to detect biases

By prioritizing data quality and representativeness, synthetic data can speed up AI model development. It ensures ethical and responsible AI practices. This not only improves model performance but also fosters fairness and equity in AI use across industries.

| Aspect | Real Data | Synthetic Data |

|---|---|---|

| Privacy Protection | Limited | Enhanced |

| Data Acquisition Speed | Slow | Fast |

| Cost | High | Lower |

| Scalability | Limited | High |

| Bias Mitigation | Challenging | Controllable |

Overcoming Challenges in Synthetic Data Generation

Synthetic data is a promising tool for training Large Language Models (LLMs), yet it faces significant challenges. This section delves into the main obstacles and strategies for synthetic data generation.

Addressing Bias and Fairness Issues

Ensuring bias mitigation in synthetic data generation is paramount. Industry experts highlight ethical concerns, particularly biases that could result in incorrect decision-making. To address this:

- Implement model audit processes to uncover biases in synthetic datasets

- Diversify data sources to increase dataset representativeness

- Ensure synthetic data accurately represents real-world scenarios

Maintaining Data Privacy and Security

Data privacy is a critical concern when utilizing synthetic data. LLMs necessitate vast, diverse datasets, yet these must be meticulously curated to avert private information breaches. Strategies for privacy include:

- Using anonymization and de-identification techniques

- Implementing privacy-preserving machine learning solutions

- Adopting federated learning approaches to keep sensitive data secure

Scaling Synthetic Data Production

Scaling synthetic data production is vital for the increasing demands of AI model training. A Gartner study reveals that about 50% of organizations generate synthetic data through custom-built solutions with open-source tools. To scale effectively:

- Develop sophisticated algorithms for data generation

- Invest in computational resources to handle large-scale data production

- Balance quality control with scalability to ensure reliable synthetic datasets

By tackling these challenges, organizations can fully leverage synthetic data in AI model training. This ensures ethical standards and data integrity are maintained.

Best Practices for Integrating Synthetic Data in AI Pipelines

Integrating synthetic data into AI pipelines demands meticulous planning and execution. By adhering to synthetic data best practices, you can significantly boost your model training and elevate AI performance.

Start by prioritizing data quality. Validate your synthetic data against real-world benchmarks to confirm its accuracy. This step is paramount for sustaining the integrity of your AI pipeline integration.

Diversity is essential in synthetic datasets. Strive to prevent bias and overfitting by incorporating a broad spectrum of scenarios. Such an approach fosters more resilient models and enhances real-world efficacy.

Data privacy and security must never be compromised. Employ anonymization techniques to safeguard sensitive information while offering valuable training data for your AI models.

"Synthetic data can be tailored to specific requirements, such as balanced representation of different classes for improved model performance."

Consider these model training tips:

- Combine synthetic and real data when feasible

- Develop sophisticated evaluation metrics

- Conduct rigorous testing to ensure accuracy

By adopting these practices, you'll forge a more potent AI pipeline. This pipeline harnesses synthetic data's strengths while mitigating its potential pitfalls.

| Synthetic Data Advantage | Impact on AI Training |

|---|---|

| Scalability | Abundant training data, especially in scarce domains |

| Customization | Tailored datasets for specific model requirements |

| Privacy Protection | Anonymized data for sensitive applications |

Industry Applications

Synthetic data has transformed various sectors, offering groundbreaking solutions to intricate problems. It's essential to see how different industries utilize this technology for progress and efficiency.

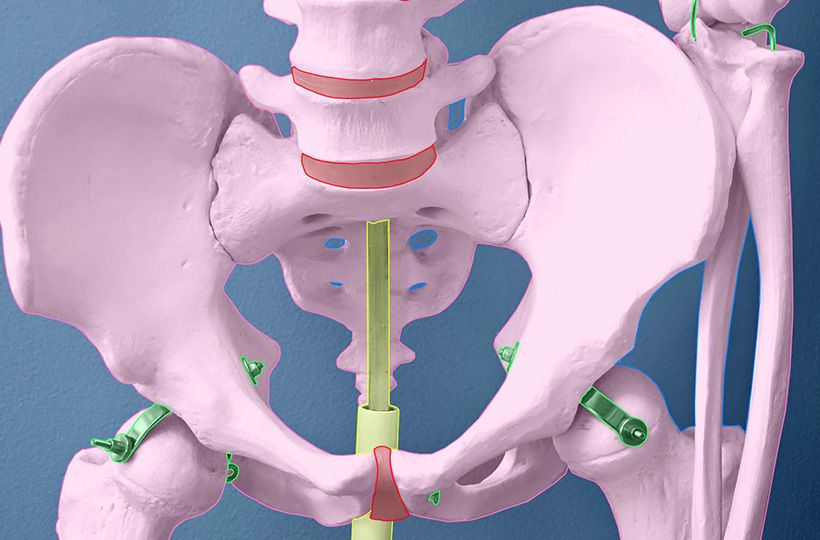

Healthcare and Medical Research

In healthcare AI, synthetic data is pivotal for advancing medical research and training. It allows for the generation of realistic yet anonymous medical images. This supports personalized medicine and disease prevention strategies. Roche, a top pharmaceutical firm, employs synthetic medical data for clinical research. This ensures patient privacy while advancing medical knowledge.

Financial Services and Fraud Detection

Financial fraud detection has notably improved with synthetic data. Banks and credit card companies leverage it to enhance their machine learning and fraud detection algorithms. American Express and J.P. Morgan have adopted synthetic financial data to strengthen their fraud prevention efforts. This method enables them to simulate various fraud scenarios without using real customer data.

Autonomous Vehicles and Robotics

Autonomous vehicle training heavily depends on synthetic data for testing and development in virtual environments. Companies like Waymo use it to shorten testing times and reduce costs. In robotics, synthetic data helps in developing perception, manipulation, and grasping models. This accelerates AI model development in manufacturing, automotive, and industrial inspection sectors.

These case studies highlight the versatility and strength of synthetic data across industries. From healthcare AI to financial fraud detection and autonomous vehicle training, synthetic data is propelling innovation. It's enabling companies to navigate data privacy challenges while enhancing their technological prowess.

Future Trends in Synthetic Data for AI Training

Synthetic data is transforming AI training, addressing privacy and data scarcity concerns. This artificially created data mirrors real-world data, enabling machine learning models to thrive across industries. As synthetic data advancements progress, we're witnessing the dawn of new AI trends.

Let's delve into key emerging AI technologies and their effects:

- Non-AI data simulation and AI-generated synthetic data

- Structured and unstructured data formats for AI training

- Hypothetical scenario representation beyond real-world data

These innovations are reshaping various sectors:

| Industry | Application | Benefit |

|---|---|---|

| Automotive | Autonomous vehicle testing | Reduced risks |

| Healthcare | Privacy-compliant patient data | Improved diagnostics |

| Finance | Transactional data analysis | Enhanced fraud detection |

| Retail | Virtual inventory management | Optimized supply chains |

Despite the benefits, synthetic data faces challenges. Ensuring realism and accuracy is paramount, as datasets that don't mirror real-world complexity can cause problems. Ethical concerns, particularly in areas like facial recognition, must be tackled.

Future trends suggest a rise in synthetic data use for regulatory testing and advancements in generative adversarial networks. These developments are making high-quality synthetic data more accessible and affordable, setting the stage for the next AI breakthroughs.

Synthetic data is the key to unlocking powerful, ethical AI models that can adapt to unforeseen circumstances faster than humans.

Summary

Synthetic data is transforming the future of AI, offering substantial benefits for innovation. It significantly accelerates data science projects and cuts software development costs. For a deeper dive into the synthetic data impact on AI training, explore this insightful article.

Despite challenges in privacy and accurately capturing outliers, synthetic data offers solutions. It enhances fairness, corrects bias, and strengthens machine learning systems. Industries like finance, healthcare, and automotive are increasingly using synthetic data to tackle AI challenges.

In the future of AI, synthetic data will be key in addressing data scarcity and privacy issues. Through techniques like variational autoencoders and generative adversarial networks, experts can create realistic synthetic datasets. These datasets will fuel AI innovation while protecting data privacy and security. The ongoing exploration of synthetic data's potential will define the future of AI and data-driven solutions.

FAQ

What is synthetic data, and why is it important in AI training?

Synthetic data is artificially created to mimic real-world patterns without actual observations. It's vital for training and testing AI models, especially when real data is scarce, expensive, or sensitive. Synthetic data ensures AI models have large, diverse, and high-quality datasets for accurate and robust training.

How is synthetic data generated?

Synthetic data is often produced using generative models like GANs and variational autoencoders, or simulation software. Techniques such as semantic rephrasing and backward reasoning are also employed to create synthetic data.

What are the advantages of using synthetic data for AI training?

Synthetic data has many benefits, including addressing data shortages and representation issues, protecting privacy by not including identifiable information, reducing bias by balancing datasets, ensuring data protection compliance, and being more cost-effective than real data collection.

How is the quality of synthetic data ensured?

Ensuring synthetic data quality is key, which involves validating it against real-world benchmarks and matching statistical properties of authentic data. This includes comparing statistical properties, using domain-specific metrics, and expert evaluations.

What challenges are associated with synthetic data generation?

Challenges include the risk of bias amplification, privacy concerns, and scaling issues. To address bias, generative models must be carefully designed and validated. Privacy is maintained through anonymization and de-identification. Scaling requires sophisticated algorithms and significant computational resources.

What are the best practices for integrating synthetic data in AI pipelines?

Best practices include validating data quality against real-world benchmarks, ensuring dataset diversity to prevent bias and overfitting, prioritizing privacy and security, and combining synthetic and real data when feasible. Rigorous testing and advanced evaluation metrics are essential.

In which industries is synthetic data commonly used?

Synthetic data is widely used in healthcare (medical imaging, patient diagnosis), financial services (fraud detection, risk assessment), automotive (testing autonomous vehicles), retail (inventory management, customer behavior analysis), and climate science (weather forecasting, climate modeling).

What are the future trends in synthetic data for AI training?

Future trends include advancements in generative models and simulation techniques, broader adoption across industries, and integration with emerging technologies like federated learning and differential privacy. There's a growing focus on developing sophisticated methods to ensure synthetic data's factuality and fidelity.