Specific Legal AI Issues: Evolving Frameworks

The increasing use of AI in our systems raises questions that span the whole fabric of our societies. We’ve already discussed the ethical and legal implications of artificial agents in a broader sense - this time we’ll talk about specific issues and the evolving frameworks designed around them.

Key Takeaways

- When discussing AI, legal professionals wrestle with issues like accountability, transparency, and bias.

- Generative AI introduces specific challenges, including inaccuracy, hallucinations, and security concerns.

- Legal AI usage brings about several legal quandaries: data privacy, intellectual property, discrimination, and adherence to laws.

- Maintaining the accuracy and reliability of AI is key to reducing potential legal risks and upholding standards.

AI Liability

The issue of AI's accuracy is a major roadblock to its full acceptance. The American Bar Association went as far as creating a Task Force on Law and Artificial Intelligence to explore what it means for the legal profession, education, and justice as a whole.

In 2020, just before the current generative AI boom, the European Union released a report on liability that touches on AI, IoT, and robotics. The Product Liability Directive establishes that producers of defective products, including AI systems integrated into products, should take full responsibility if they cause physical or material damage. However, AI poses unique challenges:

- Complexity: The integration of AI into other products and services makes it difficult to identify the liable person and prove causation in the event of harm. This calls for more comprehensive regulation.

- Connectivity and Openness: Cybersecurity vulnerabilities in connected AI systems raise questions about the scope of product liability and the allocation of responsibility between various individuals and organizations in the ecosystem.

- Autonomy and Opacity: The "black box" nature of some AI algorithms makes it difficult to prove fault or understand how damage occurred.

A dedicated AI Liability Directive was proposed to address all these challenges. Under the current framework of the EU AI Act, damage caused by an AI system, or by the failure of an AI system to produce a specific output, falls under liability. If injured parties can demonstrate a causal link to the relevant regulatory bodies in the member states, they can prove damages and receive compensation.

At the time of writing, other major regulators like the United States lag behind the EU in this regard, so AI laws are more decentralized and set at the state level. There’s a compelling case for introducing strict AI liability standards, but the situation remains uncertain.

AI Liability Trends

A recent study published in Computer Law & Security Review argues that AI systems, especially those with unpredictability and autonomy, pose challenges for existing liability frameworks and need an evolved approach. Increasingly agentic AI is unpredictable and harder to control. Some types of harm it could cause include:

- Physical harm either from direct AI action or misinformation.

- Data loss caused by system vulnerabilities.

- Privacy harm and failure to comply with PII laws.

- Algorithmic bias and discrimination, directly leading to bad outcomes.

In order to define this, we need to expand the very definition of what a “software product” means to include digitally manufactured files, such as images, text, video, and so on. In addition, companies that create AI aren’t just producers - they’re economic operators that run artificial agents.

In essence, the first step to establishing AI liability is transparency and explainability of these systems. This is why the conversation about ethics in algorithms is so important, and why the solution to AI liability isn’t just for lawyers - it involves dozens of stakeholders.

With AI lobbying having spiked 185% in 2023, the industry is already preparing for the massive changes ahead. Finding a balance between promoting innovation and ensuring AI's responsible use is crucial. While countries with a traditional legal background may not urgently need new laws to guide AI's liability, addressing these issues early is important for AI's continued existence.

| Risk Factor | Potential Impact | Mitigation Strategies |
|---|---|---|
| Algorithmic Bias | Perpetuating or amplifying existing prejudices | Explainable AI, AI governance frameworks |
| AI-caused Harm | AI systems causing damage to individuals and organizations | Clear liability guidelines, compliance requirements |
| Intellectual Property Rights | Uncertainties in protecting AI-generated work | Staff training, securing indemnification in contracts |

The legal battle got far more heated in one particular field:

AI and Intellectual Property

The quick evolution of AI has left legal frameworks behind, demanding a rethink and possible reforms in patent law and copyright protection. This disparity between AI advancements and legal regulations has opened up various issues regarding the ownership of generated content, such as articles written by ChatGPT, images made by DALL-E and Midjourney, and so on.

There have been a series of high-profile legal battles and complaints in this area:

- Microsoft, GitHub, and OpenAI allegedly used licensed code to train GitHub Copilot, which could potentially qualify as software piracy.

- Getty Images sued Stability AI on allegations of misusing 12 million images to train its AI.

- Comedian Sarah Silverman joined a class-action lawsuit against OpenAI and another lawsuit against Meta, claiming that they illegally added her property to a training dataset.

- Scarlett Johansson’s lawyers allege that the voice of OpenAI’s personal assistant was copied from the actress’ performance in the movie “Her”.

- There are many other lawsuits and cases, with the number growing seemingly every month.

While the issue of training AI on real-world data and associated copyrights is still being discussed, we still face another major question:

Who does AI-generated content belong to?

The United States Copyright Office has launched several inquiries into the role of AI and the copyright practices related to it. Currently, it does not extend copyright protection to works created solely by AI, because copyright is the domain of human authorship. However, the degree of “human input” in a work determines the degree to which it can enjoy copyright protection.

This framework is far from perfect, and a recent precedent of Elisa Shupe has potentially served to reduce the stigma around using AI for creative pursuits. This disabled veteran managed to write a novel and became an owner of a “selection, coordination, and arrangement of text generated by artificial intelligence.”

In most countries, as well as the European Union, the situation is just as murky and up for debate. Notably, the EU AI Act referenced earlier doesn’t define copyright ownership, which means that human-made prompts may enjoy the same copyright protection as literary works. However, as with many things in the field, there’s still no unifying response.

The Thaler v. Vidal case determined that AI cannot be considered an inventor because it doesn’t qualify as human, showcasing a major legal obstacle. In an interesting precedent, South Africa actually granted a patent that names Thaler’s AI as the inventor of a fractal food container. Yes, it’s the same Stephen Thaler from the United States case - one of the first inventors pushing the boundaries of the legal personhood of artificial agents.

So, what’s the state of AI-generated data? It’s currently a gray area in most jurisdictions. Discussions are ongoing with regulators, including the US Senate, about legal safeguards for AI-created inventions, with some suggesting the creation of an "AI-IP" category.

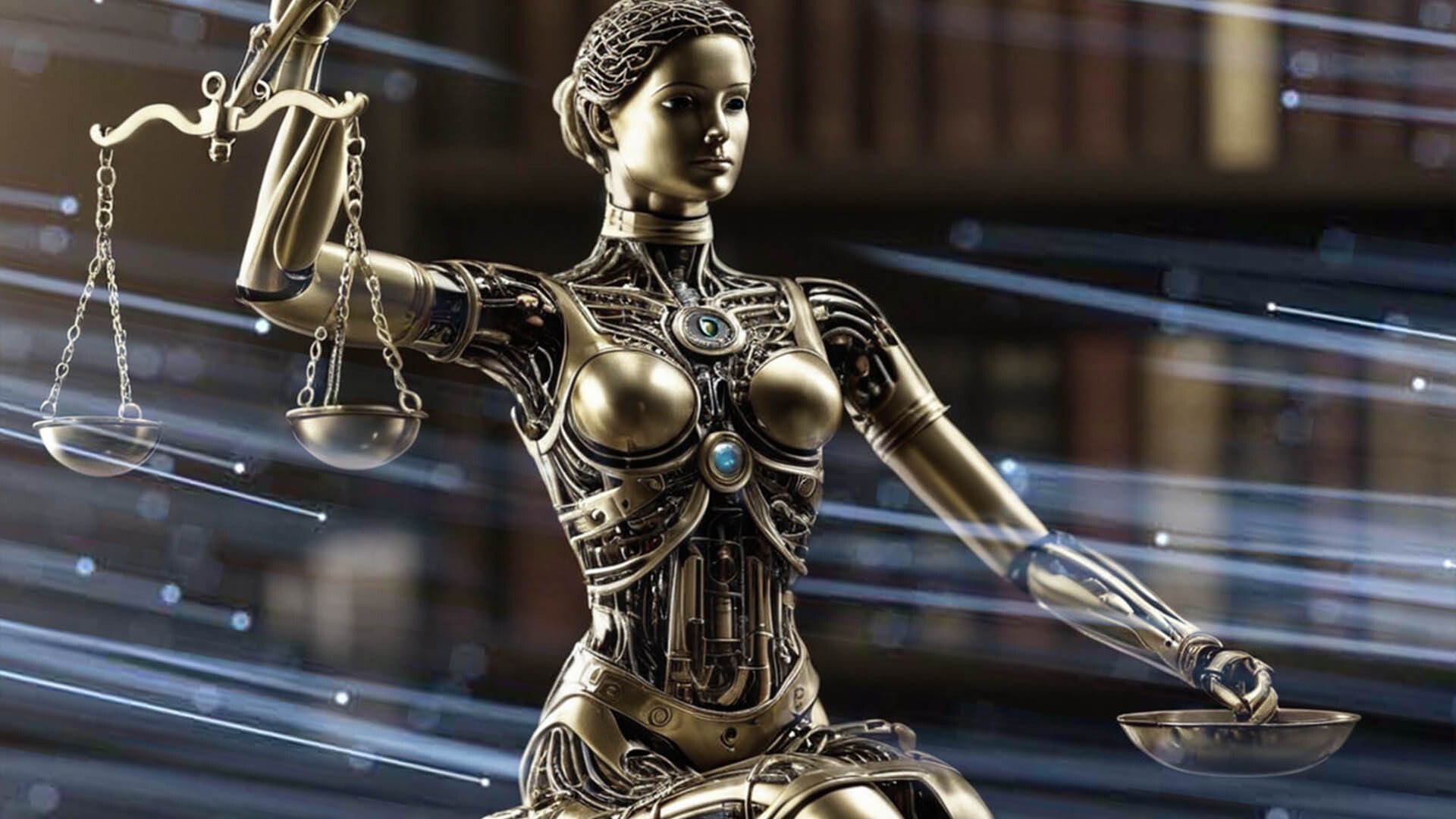

AI and Criminal Justice

The integration of AI in criminal justice has marked a substantial change. It notably includes the application of forensic DNA to solve cold cases. This has also led to the liberation of wrongly convicted individuals, a practice that started in the late 1980s. AI boosts the capability of forensic labs, enabling the analysis of DNA that was previously challenging to use. This includes cases where DNA levels were low or where the evidence was degraded.

These are just a few of the applications. AI is widely used in:

- Judicial Proceedings, analyzing legal data, and making recommendations, with scientists going as far as trying to teach AI legal reasoning.

- Law Enforcement, to effectively distribute resources and predict high-crime areas and at-risk locations. This particular application is often criticized by various outlets.

- Ballistics and firearm examination, comparing everything from gunshot samples to various angles, models, and tracking of weapons, with many niche uses in the field.

- Recidivism Assessment, with AI sentencing used for calculating risks of reoffending and general calculations of crime statistics. This application is also criticized for potential bias.

- Specific Applications, such as crime scene reports, recognizing bomb components, financial fraud detection, and so on.

In general, the use of AI in predictive evaluations and in predicting risks of criminality has sparked concerns about racial bias and the targeting of vulnerable groups. Some studies point to the impact of historical data, which has led to uneven enforcement and disproportionate sentencing of individuals of color.

Despite these difficulties, AI continues to offer significant improvements to the criminal justice system. Efforts are underway to diversify the tech sector for a broader, more inclusive deployment of AI in criminal justice. Notably, studies highlighting the mixed predictive performance of AI risk assessment tools call for ongoing improvement and scrutiny.
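One form this scrutiny can take is comparing a tool's error rates across groups. The sketch below is only an illustration under stated assumptions: the function name `false_positive_rates`, the record layout, and the sample records are all hypothetical and made up for this example, and real audits rely on far larger datasets and more than one fairness metric. It computes, for each group, the share of people who did not reoffend but were still flagged as high risk.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return the false positive rate for each group.

    Each record is a tuple: (group, predicted_high_risk, reoffended).
    A false positive is a person flagged as high risk who did not reoffend.
    """
    flagged = defaultdict(int)    # non-reoffenders flagged as high risk
    negatives = defaultdict(int)  # all non-reoffenders
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / n for g, n in negatives.items() if n > 0}


# Made-up illustrative records (hypothetical, not real data).
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", True, True),
]

rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate {rate:.2f}")
```

In this toy data, group B's non-reoffenders are flagged twice as often as group A's. A gap like that is the kind of signal an audit would investigate further, not proof of bias on its own.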

AI in Autonomous Vehicles

The introduction of AI into autonomous vehicles is transforming how we get around. By 2035, this market could grow to between $300 and $400 billion. However, with this exciting technology comes complex legal hurdles, like who is responsible if a self-driving car causes harm. Is it the individual at the wheel, the company producing the car, the company producing the software, or all of the above? These questions get surprisingly more complex the further you go.

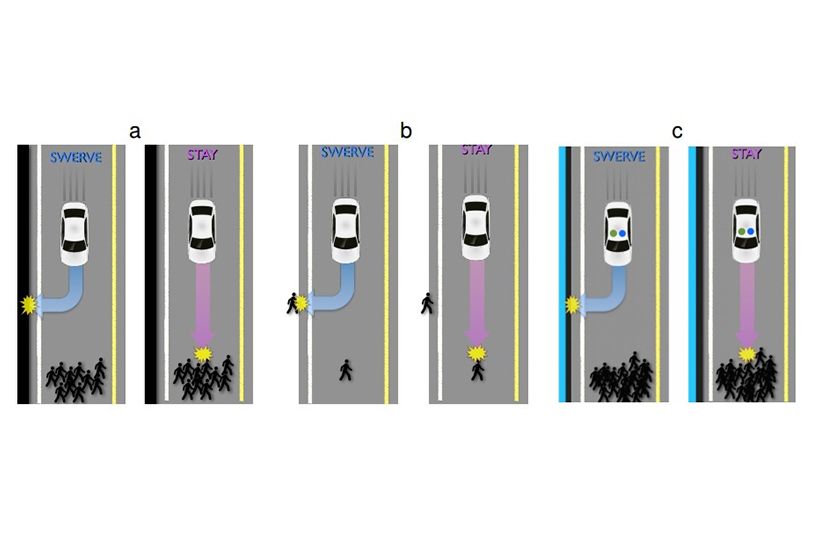

Initial testing shows autonomous vehicles may be safer than those driven by people, with lower accident rates. Yet, concerns about technological failures and unpredictable road scenarios persist. For example, should the car prioritize the safety of its passengers or pedestrians? And how does it behave in potentially lethal scenarios with no objectively easy answers?

The questions of accident liability and autonomous car behavior are intrinsically linked. Complex algorithms are designed by computer scientists to determine the best behavior in a wide variety of scenarios. However, since many of these questions are ethical in nature, they require a lot of stakeholders to come together - from policymakers to scientists, engineers, and even philosophers.

Changing how we think about product liability and insurance is essential in this new era.

Regulating autonomous vehicles is new and complex, with broad regulations still in the works.

Around the globe, governments are addressing the legal framework needed for autonomous vehicles. In the U.S., more than 80 state laws focusing on these vehicles have passed or are pending. At the national level, the proposed “SELF DRIVE” Act would establish the federal role in the safety and deployment of highly autonomous vehicles. The NHTSA has also laid out new guidelines for these systems that require specific safety benchmarks, as well as expectations of system maturity.

Germany and China are setting their own rules. Germany pioneered a framework for autonomous cars in 2022, which goes through the procedure of granting licenses for vehicles with autonomous functions. China has bold plans for 2030, aiming to sell vehicles that are completely self-operating. Japan and South Korea are also heavily investing in the industry, aiming to catch up.

It’s inevitable that, much like with AI in other industries, different countries will come up with varied regulations and decision trees for autonomous vehicles. A recent survey shows that 93% of Americans have concerns about self-driving vehicles, so legal concerns aren’t the only thing standing in the way of autonomous cars - the court of public opinion has deemed them unsafe in their current state, and a major shift is necessary for this to change.

AI and Facial Recognition

The forward march of facial recognition technology has ignited heated discussions on privacy and data safeguarding in the age of AI surveillance. While this tech offers advancements in law enforcement and security, it stirs up deep worries about gathering and utilizing biometric data.

Facial recognition systems come with a significant concern: they can be biased and inaccurate, especially when attempting to recognize people from varied racial and ethnic groups. As per federal tests, most algorithms fall short of identifying people who aren’t white men. This issue can result in wrongful arrests and discrimination against minorities.

One example of this is the story of Robert Williams, a Black man and father, who spent 30 hours in a Detroit jail cell because facial recognition technology falsely deemed him a criminal in 2020. Evidence indicates that computer vision algorithm biases exist primarily due to the over-representation of Caucasian faces in the data used to develop U.S. algorithms.

Still, more than a quarter of local and state police forces and almost half of federal agencies employ these systems.

"Concerns exist around the lack of transparency and standards regarding the use of AI in law enforcement and criminal justice."

At the national level, a "Blueprint for an AI Bill of Rights" was released in 2022. Congressional Democrats also put forth the Facial Recognition and Biometric Technology Moratorium Act. These initiatives aim to tackle the rising worries about unchecked AI surveillance and its implications for civil rights.

Companies in the private sector are also under a magnifying glass for their use of facial recognition technology. For instance, Rite Aid was banned from using it for five years in surveillance due to thousands of false hits that affected certain groups disproportionately. The FTC complaint underscored Rite Aid's negligence in addressing risks, verifying technology accuracy, avoiding low-quality images, and properly training staff.

As AI and machine learning expand in the justice field, tackling the hurdles posed by facial recognition technology is vital. Defense lawyers meet significant barriers in challenging AI evidence due to the lack of transparency and the stringent requirements for obtaining crucial information for court cases. Even worse, the prosecution typically obstructs requests to share the source code of AI algorithms, further complicating matters - this process has been dubbed a ‘black box’ at the heart of the US legal system.

To promote the ethical use of facial recognition technology, the following steps are essential:

- Comprehensive legal frameworks that protect privacy and balance with public safety

- Clear guidelines for gathering, storing, and using biometric data

- Ensuring transparency and oversight in AI surveillance deployment

- Dealing with algorithmic bias and accuracy issues through broad and quality training data

- Establishing legal limits for facial recognition and biometric data usage in different cases

As concerns about fairness and transparency linger in AI beyond facial recognition, a concerted effort is needed to address the overarching problems of skewed predictions from potentially flawed data.

AI and Weaponry

In 2020, the DOD set AI Ethical Principles, emphasizing accountability, fairness, transparency, dependability, and controllability. Yet, a critical assessment revealed gaps in areas such as exemptions, control measures, cross-border technology transfers, and the approval process for creating and using lethal autonomous weapons.

A recent report by Public Citizen raises concerns about the dangers of AI in the military, some of which include:

- Use of force and decisions to take human lives made by autonomous systems

- AI control over highly destructive weaponry, such as nuclear arms

- Financial agendas of firms that develop algorithms and contract them to the military

- Systemic inaccuracies of generative AI like chatbots and predictive software

- Battlefield use of deepfakes and misinformation

The introduction of autonomous weapons disrupts established norms of international law. At the 2024 meeting of the Group of Governmental Experts (GGE) on lethal autonomous systems, concerns about AI bias in particular were raised. Algorithmic bias can distort legal and ethical judgments, especially in assessing combatants based on characteristics like age or gender.

This bias is an issue since it can persist through all stages of developing military AI, and the limited data availability only exacerbates the problem.

Contrary voices in the US military argue for the strategic benefits of autonomous weaponry, including enhancing military power, expanding operational ranges, and minimizing human risk in perilous tasks. The DOD outlines in its Unmanned Systems Roadmap that robots excel in hazardous terrain and long missions. It expects that as autonomy grows, so will the capability of these systems in key areas like recognition, planning, learning, and interaction with humans.

However, thousands of AI experts have urged a ban on these weapons due to fears about the implications of AI militarization. It is essential to maintain human oversight over the use of AI weaponry and to establish stringent arms control protocols.

Much like with the issues of criminal justice and the use of AI in judicial matters, there’s no one-size-fits-all governance. Notably, the EU’s recent rules on artificial intelligence specifically exclude military AI from their scope, and defense applications are absent from most regulations globally. Think tanks and activists continue to demand that governments step up and comprehensively regulate the use of AI in defense due to increasing concerns about autonomous systems making decisions about human lives on and off the battlefield.

FAQ

What are the specific legal issues associated with AI?

The legal world faces a host of AI-related challenges. These include holding AI accountable, managing risks, ensuring transparency, and reducing bias. Oversight, auditing, and establishing regulatory frameworks are vital. Legal professionals are tasked with using AI responsibly and ethically within a shifting technological terrain.

How does AI liability impact the legal landscape?

AI systems can cause harm or errors, raising complex liability issues. Algorithmic bias and explainable AI are critical concerns. To address these, robust AI governance and ethical development practices are essential, reducing risks and ensuring accountability.

What are the challenges at the intersection of AI and intellectual property law?

The merging of AI with intellectual property presents challenges in content and invention ownership. Adapting copyright and patent laws for AI-created works is a necessity. Legal minds must deal with the complexities of attributing authorship and inventiveness to AI.

What are the legal and ethical concerns surrounding the use of AI in criminal justice?

AI’s role in criminal justice sparks worries over fairness, transparency, and due process. Systems like predictive policing can perpetuate biases and disparities. It's essential to ensure fairness and transparency when AI influences the criminal justice system.

What are the legal challenges associated with autonomous vehicles powered by AI?

Autonomous vehicles introduce unique legal issues, especially in accidents. It’s hard to pinpoint liability—whether it lies with the maker, the developer, or the owner. Legal evolution in product liability and insurance is likely necessary for AI-driven vehicles.

What are the privacy and data protection concerns related to AI-powered facial recognition technology?

AI facial recognition technology poses privacy and data protection issues through potential mass surveillance and privacy violations. Strict personal data protection laws must be followed regarding biometric data use. Despite ongoing legal developments, frameworks for the ethical use of facial recognition are still in their early phases. The European Union is a pioneer here, placing the use of facial recognition and biometrics under ‘High-Risk’ in the AI Act and heavily regulating the use of such systems.

What are the legal and ethical implications of AI-powered weaponry?

The creation of AI weaponry, including autonomous systems, brings about significant ethical and legal dilemmas. Such weapons challenge international laws and can increase armed conflict risks. It's vital to ensure human oversight and implement strong arms control measures for AI weaponry.