Regional and International AI Regulations and Laws in 2025

AI governance became a watchword in 2024, with an estimated 42% of companies using AI. Without proper oversight, this widespread adoption can lead to biased outcomes, heightened inequalities, and violations of individual rights.

AI is particularly difficult to regulate because it touches on many aspects of society and includes many stakeholders. In this article, we'll move from specific legal issues of AI to the general regulatory landscape, focusing on the EU and the US as global trendsetters for other regulators.

Key Takeaways

- The EU's AI Act sets a global precedent for comprehensive AI regulation, focusing on high-risk applications. In contrast, the US has taken a more decentralized approach, allowing states to draft their own rules.

- International organizations are collaborating to develop multilateral AI governance frameworks.

- The UN Resolution A/78/L.49 emphasizes the importance of ethical AI principles and adherence to international human rights law.

- Private sector companies must prioritize data privacy, transparency, and legal liability in AI development and deployment.

- AI regulatory compliance is crucial for ensuring responsible and sustainable use of AI technologies. Compliance will differ across continents, and the global AI ecosystem will likely fracture along jurisdictional lines.

The Urgency for AI Regulation

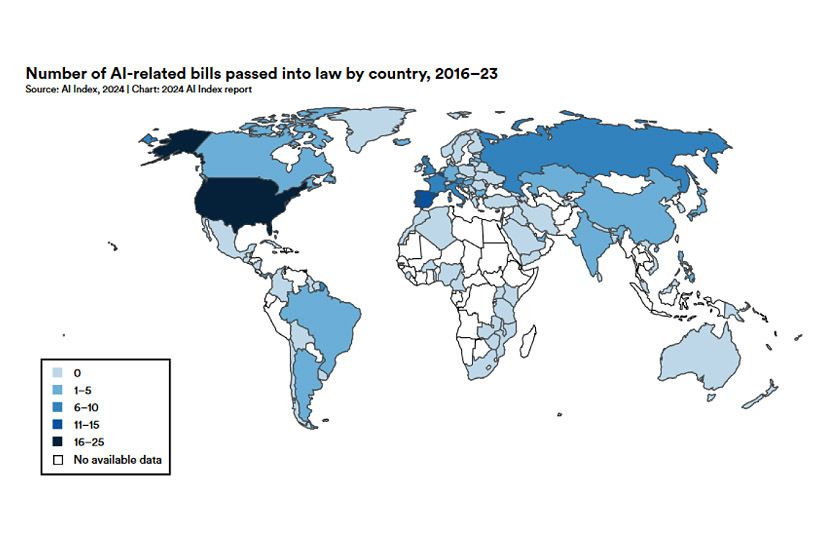

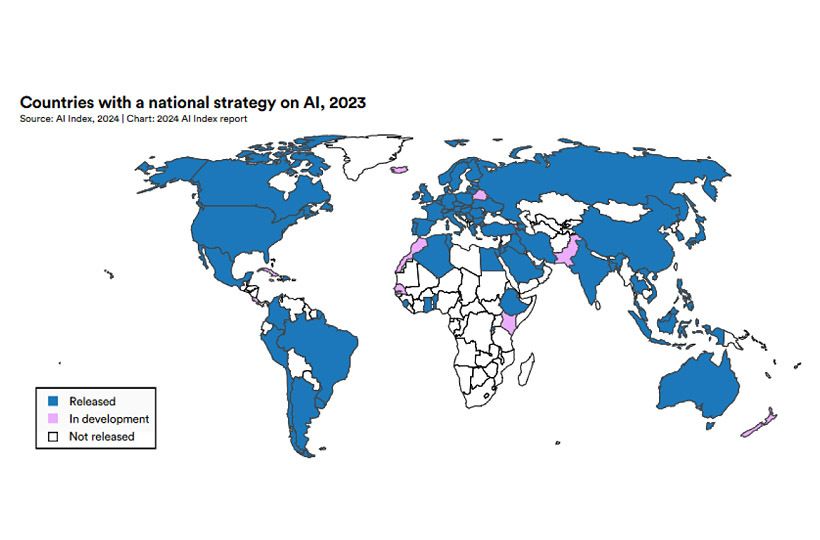

The push for AI regulation is a worldwide affair, evident in laws and policies on six continents. International bodies such as UNESCO and the International Organization for Standardization (ISO), along with regional groups like the African Union, are all working on this, aiming to create global frameworks for governing AI and addressing its challenges.

The United Nations took a significant step by adopting Resolution A/78/L.49, "Seizing the opportunities of safe, secure and trustworthy AI," on March 21, 2024. Led by the United States and co-sponsored by 125 member states, the resolution highlights the importance of human rights and of minimizing bias in AI systems. The goal is to prevent these technologies from reinforcing social inequalities.

Regulating AI is complex, primarily due to the absence of clear-cut definitions, which we discussed in detail in a separate article. Additionally, AI development draws on many inputs, including labor, data, software, and financing. Effective regulation therefore requires a nuanced approach that can keep pace with this swiftly moving field.

Let's dive deeper into different jurisdictions to see how things are evolving.

United States AI Policy Landscape

The United States' AI regulation landscape involves the federal and state governments, industry players, and the courts. Currently, policymakers are trying to balance the promotion of innovation against security concerns and the potential impacts of AI on society.

President Biden's Executive Orders on AI

The US Federal Government has issued two important Executive Orders on AI, EO 14110 and EO 14105, alongside two key guidance documents: the Blueprint for an AI Bill of Rights and the AI Risk Management Framework.

Executive Order 14110

In October 2023, President Joe Biden signed Executive Order (EO) 14110, "On the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence." It outlined the general approach of the US government to regulating AI, although it was revoked in January 2025 by the incoming administration. Here are the eight guiding principles it set out for the Federal Government:

- AI must be safe and secure, which requires robust, standardized evaluations of AI systems and mechanisms to mitigate their risks.

- Promoting responsible competition and collaboration. The aim is to invest in AI-related education, training, and development while dealing with IP-related challenges.

- A commitment to using AI to support American workers and augment human work rather than disrupt the labor market.

- Policies about AI must be consistent with advancing equity and civil rights, which means reducing algorithmic bias. This echoes the Blueprint for an AI Bill of Rights and its emphasis on equitable design.

- Protecting consumers who use AI daily by enforcing existing consumer protections and holding organizations accountable for their AI products and services.

- Respecting the privacy and civil liberties of Americans in AI development. This relates to the proper collection of personal data and the protection of sensitive information.

- The Federal Government's use of AI must be managed carefully. The goal is to attract and retain public-oriented professionals to help develop and govern AI.

- The federal government aims to lead the way to global societal, economic, and technological progress through its future policies.

These principles form less of a governing framework and more of a commitment to future AI regulations. Individual states are widely expected to develop specific laws, which are covered later in the article.

Executive Order 14105

Another EO, 14105, signed in August 2023, aims to limit US investment in certain technologies in specific countries of concern, including semiconductors and microelectronics, quantum information technologies, and AI. It defines AI as a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments. The country of concern currently identified is China, including the special administrative regions of Hong Kong and Macau.

Under the implementing regulations, some investments are prohibited outright, while others require notification to the government. The Treasury Department finalized these rules in October 2024, after a proposed rulemaking (NPRM) process, and they took effect on January 2, 2025.

This EO allows the administration to widen the scope of sensitive technologies, so new areas like biotechnology and battery technology might also be considered. For now, however, the focus on AI stands out: the US government treats investment in AI in countries of concern as a significant national security issue and is translating that view into policy.

Blueprint for AI Bill of Rights

The Blueprint for an AI Bill of Rights is a non-binding framework that outlines five principles to guide the design, use, and deployment of automated systems to protect the American public in the age of AI. These principles are:

- Safe and Effective Systems. Systems should be developed in consultation with diverse communities, stakeholders, and domain experts to identify potential risks and impacts in advance.

- Algorithmic Discrimination Protections. Biases in automated systems should be addressed: algorithms should not disfavor people based on race, color, ethnicity, sex, gender identity, disability, or other protected characteristics.

- Data Privacy. You should have agency over how and when algorithms use and process your data.

- Notice and Explanation. You should understand how automated systems contribute to outcomes that impact you, such as hiring, credit, monitoring, etc.

- Human Alternatives, Consideration, and Fallback. You should be able to opt out, where appropriate, and access a human who can help with your issues.

The AI Risk Management Framework

The AI Risk Management Framework (AI RMF) is a voluntary set of guidelines developed by the National Institute of Standards and Technology (NIST) to help organizations address AI risks. NIST released version 1.0 in January 2023, and the framework continues to evolve, for example through a Generative AI Profile published in July 2024.

Key aspects of the AI RMF:

- Voluntary use and flexibility for different organizations and use cases.

- Promoting trustworthy and responsible AI by addressing risks to individuals, organizations, and society.

- Iterative process for managing AI risks throughout the entire lifecycle of AI systems.

- Clear roles and responsibilities for managing AI risks and ensuring accountability.

- Identifying and assessing potential risks associated with AI systems, including those related to fairness, bias, transparency, and safety.

- Implementing technical controls, establishing governance mechanisms, and engaging stakeholders.
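The AI RMF organizes its core activities into four functions: Govern, Map, Measure, and Manage. As a rough illustration of how an organization might track risks against those functions with clear owners, here is a minimal risk register sketch in Python. The field names, the 1-to-5 scales, and the likelihood-times-impact score are our own assumptions, not something NIST prescribes.

```python
from dataclasses import dataclass
from enum import Enum


class RmfFunction(Enum):
    """The four functions of the NIST AI RMF Core."""
    GOVERN = "govern"
    MAP = "map"
    MEASURE = "measure"
    MANAGE = "manage"


@dataclass
class RiskEntry:
    # Hypothetical register fields; the AI RMF does not define a schema.
    risk_id: str
    description: str
    function: RmfFunction
    owner: str       # accountable role ("clear roles and responsibilities")
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple likelihood x impact heuristic, an assumption, not NIST guidance.
        return self.likelihood * self.impact


def prioritize(register: list[RiskEntry], threshold: int = 12) -> list[RiskEntry]:
    """Return risks scoring at or above the threshold, highest score first."""
    urgent = [r for r in register if r.score >= threshold]
    return sorted(urgent, key=lambda r: r.score, reverse=True)


# Hypothetical entries for illustration only.
register = [
    RiskEntry("R1", "Hiring model under-selects older applicants",
              RmfFunction.MEASURE, "ML lead", likelihood=3, impact=5),
    RiskEntry("R2", "No named owner for model sign-off",
              RmfFunction.GOVERN, "CTO", likelihood=4, impact=3),
    RiskEntry("R3", "Chatbot misstates refund policy",
              RmfFunction.MANAGE, "Support lead", likelihood=2, impact=2),
]

for risk in prioritize(register):
    print(risk.risk_id, risk.function.value, risk.owner, risk.score)
```

Re-running the prioritization after each review cycle mirrors the iterative, lifecycle-wide process the framework describes.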

One interesting aspect of the AI RMF is its risk-based approach to AI systems. As we'll see later, the European Union takes the same risk-based approach, but within a far stricter and more detailed regulatory framework.

AI Laws in Different States

At the state level, most states are looking into AI-related regulation, and fifteen have already enacted laws. For example, California has rules requiring disclaimers on political ads that show fake images created with AI. Similarly, Michigan and Washington require disclaimers on all AI-generated ads, regardless of any intent to deceive.

These laws span various industries, though they most commonly concern consumer protection, economics, finance, education, and civil liberties. Three California bills aim to protect actors and singers from generative AI tools such as DALL-E and deepfake video software, which can allow studios and labels to abuse artists' likenesses.

The regulatory landscape is changing rapidly. Many new laws, such as Utah's recently passed SB149, require companies to "clearly and conspicuously" disclose their use of generative AI to customers, aiming to increase transparency. Another general trend is the tightening of privacy laws, especially regarding personal information.

Congressional Proposals and Legislative Prospects

Congress has actively debated AI regulation. Senate Majority Leader Chuck Schumer of New York launched a bipartisan effort to draft AI laws, stressing the importance of the US staying ahead of China in AI. Hearings have discussed challenges in the Department of Defense's adoption of AI and the threat of hostile AI. Congress has also raised concerns about AI's impact on jobs and the need to retrain workers. Lawmakers have put forward various legislative proposals addressing issues like transparency and deepfakes.

The future success of these bills, however, remains uncertain.

European Union's Groundbreaking AI Act

In 2024, the Council of the European Union made history by approving the EU AI Act, the first comprehensive law of its kind for artificial intelligence. This legislation is designed to mitigate the risks and challenges of AI systems across the 27 EU member states.

Risk - Key Component of the EU AI Act

As a general guiding principle, entities must not use AI in ways that endanger safety, rights, and livelihoods. The EU AI Act takes a risk-based approach, categorizing AI systems into four levels of risk:

- Unacceptable Risk

Cognitive behavioral manipulation, social scoring systems, systems that exploit the vulnerabilities of specific groups and cause harm, predicting the likelihood of a person committing a criminal offense based solely on profiling, biometric categorization systems that infer sensitive characteristics (from race to sexual orientation and political opinions), and emotion recognition in workplaces and educational institutions, except for medical or safety reasons. These uses are banned outright.

- High Risk

AI systems with potential negative impacts on safety or fundamental rights, like those used in critical infrastructure, healthcare, education, employment, or law enforcement, are subject to stricter regulation.

- Limited Risk

AI systems like chatbots and image generators are subject to transparency obligations. The act requires users to be informed that they are interacting with AI. Disclosure is an integral part of the act, as it aims to combat AI's 'black box' nature.

- Minimal or No Risk

This tier covers the majority of AI applications currently in use. Classic examples include spam filters and simple AI in video games. These face no new obligations under the AI Act and can continue operating without change.
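The four tiers behave like a lookup from use case to obligations. The Python sketch below encodes a few of the examples named above; the enum, the mapping, and the `obligations_for` helper are hypothetical illustrations, and a real classification must follow the Act's own text (Article 5 and the high-risk annexes) rather than a keyword table.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict obligations (testing, documentation, human oversight)"
    LIMITED = "transparency obligations (disclose AI use)"
    MINIMAL = "no new obligations"


# Illustrative examples drawn from the tiers described in the article;
# this is not a legal classification tool.
EXAMPLE_USE_CASES: dict[str, RiskTier] = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "cognitive behavioral manipulation": RiskTier.UNACCEPTABLE,
    "critical infrastructure control": RiskTier.HIGH,
    "medical device diagnostics": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "image generator": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "video game AI": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Describe the tier and obligations for a known example use case."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        # Unknown cases are flagged for review instead of defaulting to minimal risk.
        return "unclassified: needs legal review"
    return f"{tier.name}: {tier.value}"


for case in ("spam filter", "customer service chatbot", "route optimization"):
    print(case, "->", obligations_for(case))
```

Defaulting unknown cases to "needs review" rather than "minimal risk" is a deliberate design choice: under a risk-based regime, a misclassification downward is the costlier error.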

Roles for compliance under the EU AI Act include providers (those who develop AI systems or place them on the market), deployers, importers, and distributors, each with distinct responsibilities. There's also a push for non-binding codes of conduct for AI, which would help ensure AI transparency and accountability.

Impact on AI Companies and Compliance Requirements

Strict standards apply for AI with significant risks, like autonomous vehicles or medical devices. These include thorough testing, documentation, and human oversight to protect health, safety, and individual rights.

AI companies face specific obligations based on their system's risk level. Providers of high-risk AI must manage risks, ensure data quality, offer transparency and human oversight, conduct regular testing, and bolster cybersecurity. Fines for the most serious violations, such as using prohibited AI practices, can reach €35 million or 7% of worldwide annual turnover, whichever is higher.

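Providers of general-purpose AI (GPAI) models, such as the GPT-4 model behind ChatGPT, must meet specific requirements, including technical documentation, copyright compliance, and, for the most capable models, additional testing for systemic risks. The Act entered into force on August 1, 2024, and its obligations phase in over 36 months: prohibitions apply from February 2025, GPAI obligations from August 2025, most other provisions from August 2026, and rules for high-risk AI embedded in regulated products from August 2027.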
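The phase-in schedule lends itself to a small date lookup. The sketch below assumes the simplified application dates set out in Article 113 of the Act (entry into force on August 1, 2024) and reports which obligations apply on a given day; it omits secondary provisions, and the function names are our own, so treat it as an illustration rather than a compliance tool.

```python
from datetime import date
from typing import Optional

# Simplified application dates from Article 113 of the EU AI Act.
# Some individual provisions follow other dates; this list is not exhaustive.
MILESTONES: list[tuple[date, str]] = [
    (date(2025, 2, 2), "Prohibited practices banned; AI literacy duties apply"),
    (date(2025, 8, 2), "Obligations for general-purpose AI (GPAI) models apply"),
    (date(2026, 8, 2), "Most remaining provisions, including Annex III high-risk rules"),
    (date(2027, 8, 2), "High-risk rules for AI in products regulated under Annex I"),
]


def obligations_in_force(on: date) -> list[str]:
    """Milestones whose application date has been reached on the given day."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    """The earliest milestone still ahead of the given day, or None."""
    upcoming = [m for m in MILESTONES if m[0] > on]
    return min(upcoming) if upcoming else None


check_date = date(2025, 9, 1)  # example date; substitute date.today()
for label in obligations_in_force(check_date):
    print("in force:", label)
print("next:", next_milestone(check_date))
```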

To comply, companies should assess their readiness and may need to go beyond the minimum requirements for reputational reasons. Exceptions apply to open-source AI unless a model is capable enough to pose systemic risk, which the Act presumes for models trained using more than 10^25 floating-point operations (FLOPs), roughly the scale of GPT-4. As open-source models grow, more of them may cross this threshold.
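The Act draws the systemic-risk line in terms of training compute: Article 51(2) presumes systemic risk for GPAI models trained with more than 10^25 floating-point operations. A common back-of-the-envelope estimate for dense transformer models is about 6 FLOPs per parameter per training token. The sketch below applies that approximation; the 6·N·D heuristic and the two model sizes are assumptions for illustration, not the Act's legal test or figures for any real model.

```python
# Article 51(2) threshold: cumulative training compute above which
# systemic risk is presumed for a general-purpose AI model.
SYSTEMIC_RISK_FLOPS = 1e25


def estimate_training_flops(n_params: float, n_tokens: float) -> float:
    """Rough dense-transformer estimate: ~6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens


def presumed_systemic_risk(n_params: float, n_tokens: float) -> bool:
    """True if the estimated training compute exceeds the threshold."""
    return estimate_training_flops(n_params, n_tokens) > SYSTEMIC_RISK_FLOPS


# Hypothetical model sizes (parameters, training tokens).
for name, params, tokens in [
    ("hypothetical 7B model", 7e9, 2e12),
    ("hypothetical 1T model", 1e12, 1e13),
]:
    flops = estimate_training_flops(params, tokens)
    print(f"{name}: ~{flops:.1e} FLOPs, systemic risk presumed: "
          f"{presumed_systemic_risk(params, tokens)}")
```

Because the estimate ignores architecture details and repeated training runs, a model near the threshold warrants a proper compute accounting rather than this shortcut.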

The Brussels Effect: Setting Global Standards

The EU AI Act might trigger the "Brussels Effect," making EU standards the global norm. This influence could encourage compliance beyond the EU's borders and imitation by governments worldwide. If enforcement proves effective, other countries could adopt policies that have already been tried and tested, much as the EU's GDPR became a global standard for data privacy.

The EU has also proposed an AI Liability Directive, intended to make it easier for people harmed by AI systems to receive financial compensation.

We've discussed AI liability and its meaning in legal systems in an earlier article. It is a question of both consumer protection and ownership that needs a comprehensive approach.

Comparing and Contrasting the EU AI Act with the United States Approach

The EU and the US have chosen different paths in regulating AI. The EU's AI Act sets stringent conditions for high-risk uses and prohibits some AI applications outright, such as real-time remote biometric identification in public spaces for law enforcement, apart from narrow exceptions.

The US, on the other hand, is taking a more decentralized route. Different agencies are developing AI guidelines, each grounded in existing legal frameworks. President Biden's strategy prioritized setting best practices and encouraging industry standards without firm legal obligations; binding obligations come mainly from individual states.

Both regions underline the need for thorough AI testing and close monitoring, and both emphasize safeguarding privacy and data in AI development. The EU aligns its AI strategy closely with the General Data Protection Regulation (GDPR), offering a more unified approach. The US, by comparison, has no comprehensive federal privacy law, let alone one specific to AI, though existing sector-specific data protection laws still apply.

The EU's restrictive AI Act enforces a strong compliance regime, including significant fines for violations: entities using prohibited AI practices may be fined €35 million or 7% of their global annual turnover, whichever is higher. In contrast, the US imposes no AI-specific federal penalties, though some states have tried to introduce them. In California, bill SB1047 proposed penalties of up to 10% of the cost of the computing power used to train a model for a first violation and up to 30% for each subsequent violation, but Governor Gavin Newsom vetoed the bill in September 2024.
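To make the difference in scale concrete, the sketch below computes the penalty ceilings mentioned here. It assumes the top tier of the EU AI Act (Article 99(3), prohibited practices), where the cap is the higher of €35 million and 7% of worldwide annual turnover, or the lower of the two for SMEs; other violations carry lower caps (€15 million or 3%). The SB1047 function models the vetoed bill's proposed ceilings as a share of training compute cost. Both functions are our own illustration, not legal advice or an official calculator.

```python
def eu_ai_act_max_fine(worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Ceiling for fines on prohibited practices under the EU AI Act.

    EUR 35 million or 7% of total worldwide annual turnover, whichever is
    higher; for SMEs and start-ups, whichever is lower.
    """
    fixed_cap = 35_000_000
    turnover_cap = 0.07 * worldwide_turnover_eur
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


def sb1047_max_penalty(training_compute_cost_usd: float, prior_violations: int) -> float:
    """Ceiling proposed in (vetoed) California SB1047: 10% of the cost of
    training compute for a first violation, 30% for each subsequent one."""
    rate = 0.10 if prior_violations == 0 else 0.30
    return rate * training_compute_cost_usd


# Hypothetical figures for illustration.
print(f"EU, EUR 2bn turnover:     EUR {eu_ai_act_max_fine(2e9):,.0f}")
print(f"EU, EUR 100m turnover:    EUR {eu_ai_act_max_fine(100e6):,.0f}")
print(f"EU, EUR 100m turnover SME: EUR {eu_ai_act_max_fine(100e6, is_sme=True):,.0f}")
print(f"SB1047, $100m model, 1st: ${sb1047_max_penalty(100e6, 0):,.0f}")
print(f"SB1047, $100m model, 3rd: ${sb1047_max_penalty(100e6, 2):,.0f}")
```

The contrast in design is visible in the inputs: the EU cap scales with a company's overall turnover, while SB1047 would have scaled with the cost of training the specific model.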

Prospects for EU-US collaboration on AI governance, primarily through the EU-US Trade and Technology Council (TTC), remain uncertain given potential policy shifts under changing administrations. Nonetheless, global organizations like the OECD and the G7 are working toward a unified approach to AI governance.

Striking a balance between innovation and ethical AI practices is a key future challenge for the EU and the US.

China's Evolving AI Regulatory Framework

China's strategy for AI regulation is incremental. It deals with different AI applications separately rather than regulating AI broadly, introducing specific rules as technologies like algorithmic recommendations and deepfakes grow.

The benefit of this method is its agility in addressing new and complex risks. It aims to protect users and the government from potential harm.

The most notable legislation is the Interim Measures for Managing Generative AI Services, which took effect on August 15, 2023. They are China's first set of rules focused specifically on generative AI. These rules apply to any generative AI service offered to the public in China, no matter where the provider is based.

Another set of measures, covering scientific and technological ethics reviews, took effect on December 1, 2023. The provisions on recommendation algorithms, in force since March 1, 2022, also continue to evolve.

This tailored approach to lawmaking has drawbacks. It makes it harder to form a clear, all-encompassing view of AI governance in China. Since 2016, China has also enacted complex laws on cybersecurity and data protection, and how these laws will interact with AI's growing prominence remains unclear.

Prospects for a Comprehensive Chinese AI Law

In June 2023, China's State Council announced its intention to draft a comprehensive AI law. This announcement signals a shift toward a more complete strategy for regulating AI. Scholars have proposed a draft law that prioritizes industry innovation and pushes for tax incentives and government contributions.

By the end of 2023, 22 organizations had registered their AI models with the Chinese authorities, a prerequisite for releasing a foundation model to the Chinese market. China considers its evolving AI regulations key to managing the dangers of misinformation and social disruption. These efforts underscore China's ambition to lead in global AI governance.

Emerging AI Strategies in Africa

The African Union (AU), comprising 55 member states, is formulating an AI policy draft. It targets industry-specific codes, regulation sandboxes, and the creation of national AI councils to oversee ethical AI deployment. This effort signals a significant step toward enhancing AI governance and fostering responsible AI development throughout Africa.

In South Africa, regulatory frameworks for AI are beginning to take shape. The Department of Communications and Digital Technologies tabled a discussion document on AI in April 2024. It's worth noting that AI in South Africa is currently governed by existing laws like the Protection of Personal Information Act (POPIA). The country has also launched the South African Centre for Artificial Intelligence Research (CAIR). With participation from nine established and two emerging research groups across eight universities, the center demonstrates South Africa's commitment to AI innovation.

With a significant presence in the African AI landscape, Nigeria relies on existing regulations, including the Nigeria Data Protection Act (NDPA), to oversee AI applications. One estimate suggests that with increased use of AI tools, Nigeria, Ghana, Kenya, and South Africa could collectively gain up to $136 billion in economic benefits by 2030.

Australian Approach to AI Regulation

Australia's approach to AI regulation is evolving. At the time of writing, there is no overarching law governing AI use. In the absence of specific legislation, the country has introduced voluntary AI ethics principles and guidelines, which aim to foster the responsible development and application of AI technologies.

Government Consultations and Reform Proposals

In June 2023, the Australian government launched several consultations to gather reform proposals on AI regulation and automated decision-making. The Department of Industry, Science and Resources' consultation on "Safe and Responsible AI in Australia" drew over 500 responses. The government's interim response highlighted possible regulatory gaps and discussed how essential protections could be enforced.

In September 2023, the government responded to the Privacy Act Review Report, agreeing to proposals on transparency in the use of personal information for automated decisions.

The authorities proposed a framework to manage AI risks and prevent harm, including safety measures, a toolkit, and voluntary labeling of AI-generated content. They also acknowledge that a purely risk-based approach may not anticipate unforeseen threats. The government therefore plans to introduce dedicated rules for high-risk AI, inspired by the EU's framework.

Australia, in general, is a good case study for what most developed countries are likely to do to steer their AI administration strategy in response to the development of global standards. As the discussion evolves, most of the world will likely use blueprints designed by the US, EU, and international initiatives.

Global Bodies and AI Governance Initiatives

The United Nations, the OECD, and the G20 each have their own AI governance initiatives. They work to set common principles on AI ethics, transparency, accountability, and fairness. These principles guide countries in aligning their AI plans and rules, with the aim of establishing global AI standards and practices.

The World Economic Forum and the IEEE also contribute to AI governance. For instance, the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has produced highly regarded standards for fair AI use in the technical and scientific communities.

The Hiroshima AI Process Friends Group, initiated by Japanese Prime Minister Kishida Fumio, is leading a global effort. With backing from 49 countries and regions, mainly OECD members, it marks a milestone in shaping inclusive governance for AI. It builds on the Hiroshima AI Process, the first international framework on advanced AI agreed by G7 leaders, which focuses on people's rights.

Overall, efforts for AI regulation are ongoing globally and domestically, with decisions influencing each other as specific principles and ideas are debated on different levels.

Preparing for Upcoming AI Regulatory Reforms

A proactive stance is essential for staying compliant with AI regulations and limiting risk exposure. Initiatives such as AI audits and robust AI governance frameworks are critical: they enable companies to navigate the intricate maze of evolving AI statutes at the state and federal levels, as well as the phased obligations of the EU AI Act. At Keymakr, we stay at the forefront of training data compliance to ensure that your models use clean and legal data.

Conducting AI Audits within Organizations

Organizations should conduct thorough AI audits to gain insights and identify areas for improvement. A detailed assessment of AI systems should focus on scenarios involving personal data, automated decision-making, or significant legal and financial consequences for individuals.

Inspect the origin and quality of your training data, assess your models for fairness, and be diligent about rooting out biases. These steps proactively mitigate risks and strengthen AI transparency and accountability.
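One concrete fairness check in such an audit is to compare how often a model produces a favorable outcome for different groups. The sketch below computes per-group selection rates and flags any group whose rate falls below 80% of the best-performing group's, a heuristic known as the "four-fifths rule" from US employment-selection guidelines. It is one illustrative metric under our own assumptions (binary outcomes, a single group attribute, hypothetical data), not a test prescribed by the AI laws discussed in this article.

```python
from collections import Counter


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, selected) pairs."""
    totals = Counter(group for group, _ in decisions)
    selected = Counter(group for group, ok in decisions if ok)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no group fares worse than another.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(g for g, ratio in impact_ratios(decisions).items() if ratio < threshold)


# Hypothetical screening outcomes for two applicant groups.
decisions = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)
print(selection_rates(decisions))  # {'A': 0.5, 'B': 0.3}
print(flag_groups(decisions))      # ['B']
```

A flagged group is a prompt for investigation (data provenance, labeling, feature choices), not proof of unlawful bias on its own.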

Developing and Implementing AI Governance Frameworks

Building resilient AI governance frameworks is essential for enabling responsible AI applications and building trust among stakeholders. You need precise guidelines, protocols, and checks for the initiation, execution, and supervision of AI solutions, including defined AI-related roles, benchmarks, and transparency measures. Here are some suggestions for creating governance frameworks:

- Defining roles and responsibilities for AI development and oversight

- Setting ethical principles and guidelines aligned with AI ethics frameworks

- Establishing mechanisms for AI transparency, explainability, and redress

- Regularly monitoring and updating AI systems to ensure ongoing compliance and performance

This readiness extends to compliance with the stipulations of the EU AI Act, which is especially important if you are creating systems that deal with sensitive data or interact with customers in ways that could become misleading.

Where the World of AI Regulations is Headed

Countries worldwide are actively discussing AI regulation. Recent examples include Egypt unveiling a national AI strategy, Turkey issuing guidelines on data protection, South Korea rolling out its own AI act, and Japan settling on principles for regulating AI. It's reasonable to expect most nations to develop specific laws in the near future.

Governments worldwide are struggling to define and regulate AI as organizations prepare for imminent legal changes. Companies are urged to conduct thorough AI risk assessments, create strong AI governance rules, and prioritize AI transparency and accountability. France, for instance, aims to become a global AI superpower and is courting US companies and their capital to make it happen. It has also set up an AI Commission, which issued 25 recommendations in March 2024.

Most countries will take the French approach, trying to become as attractive as possible for innovative AI companies while also harnessing AI for development. Ultimately, this is why compliance is becoming so important. Developers who can achieve it will open doors in various parts of the world, all vying to invest in transparent and proven solutions.

For businesses, staying ahead of the developing AI regulation is key. This could mean working closely with regulators, joining broader industry efforts, and instilling a culture of ethical AI within their ranks. Start with high-quality training data for your models and work up from there!

FAQ

What are the key components of the EU AI Act?

The EU AI Act classifies AI applications by risk level and sets rules for each group. For example, it bans certain AI practices, such as social scoring and most real-time biometric surveillance in public spaces. It also requires checks for high-risk AI to ensure these systems meet the required standards. The Act demands that AI companies be transparent about their technologies, and it requires high-risk AI to be trained and tested on high-quality, representative data, helping reduce hidden biases.

How does the US approach to AI regulation differ from the EU AI Act?

The US relies far more on state-specific legislation. At the federal level, various agencies issue rules and guidance tailored to their own sectors rather than following a single comprehensive law. This approach is more flexible and adaptable.

What is the current state of AI regulation in China?

China's approach to regulating AI is a patchwork that targets specific AI types. For instance, it has rules for deepfakes and recommendation algorithms. However, a national law covering all AI is in the works: it is on the State Council's legislative agenda, and scholars have already published a proposed draft. Such a law would move China closer to the EU AI Act's approach of bringing everything under one regulation.

What steps can organizations take to prepare for upcoming AI regulatory reforms?

Organizations can get ready by reviewing their current AI systems, looking for risks and opportunities to improve their practices. Setting up clear policies and procedures, along with ways to track and fix errors, is vital. In general, taking your AI out of the 'black box' will help in the compliance process. Make sure the origins of your data are documented and traceable, too.

What are some of the challenges in defining and regulating AI?

One big hurdle is agreeing on what AI is. Various US agencies and Congress have suggested definitions, which often leads to confusion. Finding the right balance is tough, too. We must keep sensitive data secure without blocking new ideas.

How can organizations ensure compliance with AI regulations and mitigate potential risks?

Organizations can ensure they follow the rules by conducting frequent AI audits. They should also establish strong leadership and policies that stress ethics. Transparent dealings and feedback channels are also key, as is staying up to date on rules and trends in their industry.

What role do global bodies play in shaping AI governance and standards?

International groups like the UN, OECD, and G20 play a pivotal role in shaping AI rules. They develop recommendations to guide AI use globally, aim to set standards that promote beneficial and fair AI, and help countries collaborate on their AI plans.