Reducing Manual Labeling Effort Through Smart Sample Selection

Reducing the manual labeling required in machine learning pipelines has become an increasingly important goal as data continues to scale in volume and complexity. One promising way to ease this burden is strategic data sampling, where only the most informative or influential examples are selected for labeling. Rather than treating every data point equally, this approach lets teams focus their effort where it matters. The result is a more efficient process that maintains or even improves the performance of the resulting models.

This idea is based on the notion that not all data contributes equally to model training, and some examples offer more value than others when shaping model behavior. This sampling strategy can also support faster iterations and feedback loops, making it well-suited for real-world applications where flexibility is often essential.

Key Takeaways

- Active learning reduces the amount of labeled training data required by prioritizing high-value samples.

- Strategic selection improves model accuracy while cutting annotation costs.

- Modern NLP techniques enable smarter identification of critical data patterns.

- Efficient training processes accelerate AI deployment timelines.

- Resource optimization directly impacts project ROI and scalability.

Overview of the Labeling Process

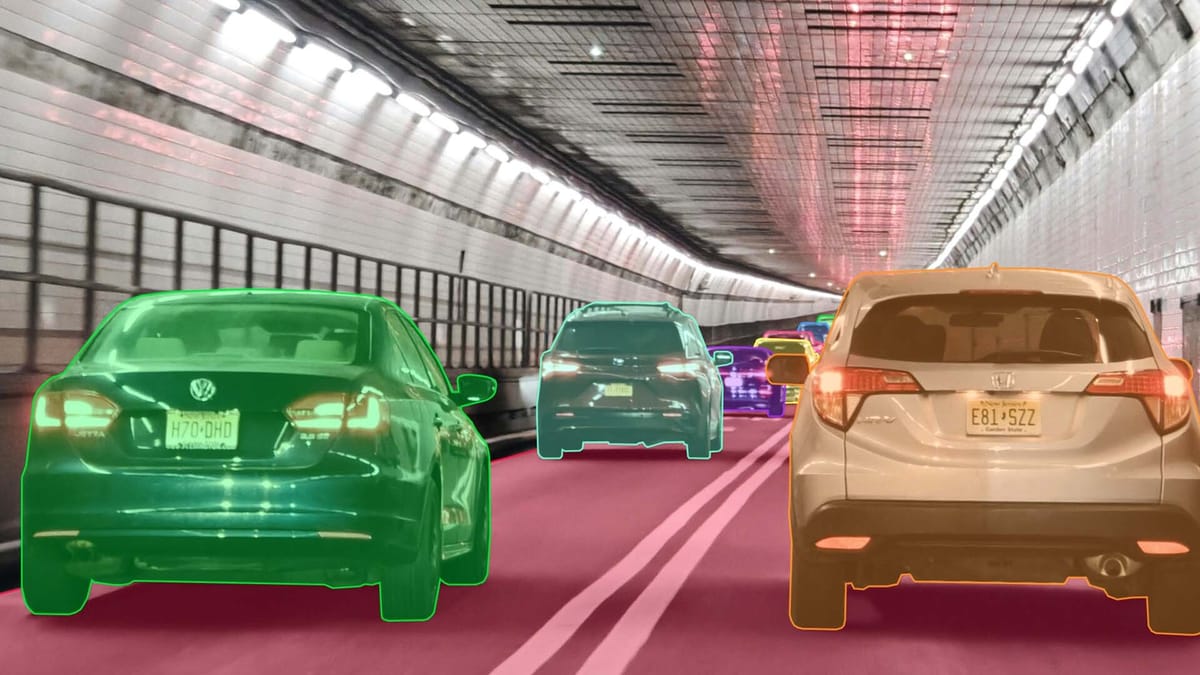

Data labeling typically involves humans reviewing examples, such as images, text, or audio, and assigning predefined labels representing the desired outcome. Whether performed by in-house experts or outsourced to large teams of annotators, the accuracy and consistency of this step are critical to the model's overall performance. However, as datasets become larger and more diverse, labeling efforts become increasingly time-consuming and expensive. Despite advances in automation, the need for high-quality human-labeled data remains a significant limitation in many fields.

In most workflows, labeling begins after data collection and pre-processing and follows guidelines to ensure consistency across annotators. The guidelines, often called labeling protocols, help minimize ambiguity and standardize how complex or subjective input data should be handled. The result is a labeled dataset that can be used to train, validate, and test machine learning models.

The labeling process is further complicated because large parts of the data may have little impact on model performance but still require the same annotation effort. In many cases, datasets contain repetitive, redundant, or uninformative examples that do not significantly improve the model's generalization ability.

Why Efficient Data Annotation Matters

The importance of efficient data annotation goes beyond saving time or money: it shapes the entire trajectory of a machine learning project. The quality and structure of annotated data determine how well a model can generalize, how quickly it can be iterated on, and how robust it will be when deployed in real-world environments. Efficient annotation ensures these benefits are achieved without wasting resources on labeling data with little value. A well-designed annotation process improves results in several ways:

- Model performance. High-quality labels applied to the most informative samples directly improve a model's ability to learn and generalize.

- Speed of development. Prioritizing what gets labeled allows teams to move faster, especially during experimentation and early prototyping.

- Cost-effectiveness. Reducing the number of redundant or ineffective labels helps minimize annotation costs without sacrificing accuracy.

- Resource allocation. Experienced annotators can focus their efforts where subject matter expertise is most needed rather than spreading themselves across the entire dataset.

- Scalability. An effective annotation strategy becomes essential to maintain a sustainable development pipeline as data volumes increase.

Understanding the Challenges in Manual Labeling

One of the most immediate challenges is the labor-intensive nature of the task, especially when large datasets require thousands or even millions of labels. Even with a well-trained team of annotators, maintaining consistency across such a volume of data is difficult, and errors can easily slip through the cracks. The more complex or subjective the labeling criteria, the higher the likelihood of variation between annotators, which can introduce noise into the dataset.

Another common problem is cost, especially when labeling requires specialized knowledge or detailed inspection. In fields such as medicine, law, or scientific research, access to skilled annotators is limited, and their time is expensive. Crowdsourcing can offer a more affordable alternative but often sacrifices accuracy if not managed carefully. In addition, writing clear annotation guidelines that reduce ambiguity while covering all necessary cases is a challenge in its own right.

Common Obstacles and Laborious Processes

Manual labeling is often associated with several obstacles and tedious processes that can slow down machine learning development and limit scalability. These challenges are not just about time or cost but reflect deeper structural inefficiencies in how data is prepared for model training. From managing annotation teams to ensuring consistency across complex datasets, the process requires constant oversight and adjustment. Some of the most common obstacles and time-consuming tasks include:

- Creating annotation guidelines. Developing thorough, unambiguous guidelines takes considerable time and effort, especially for tasks that involve subjective judgment.

- Training annotators. Ensuring that all annotators understand and consistently apply the instructions is an ongoing challenge that often involves repeated rounds of feedback and correction.

- Quality control. Maintaining labeling accuracy across large datasets requires careful review, spot-checking, and sometimes multiple annotators per item.

- Limitations of tools. Annotation platforms may not have the flexibility needed for complex tasks or integrate seamlessly into existing workflows, requiring manual data transfer and formatting.

- Excessive labeling. Much of the data often contains repetitive or uninformative examples that consume labeling resources without improving model performance.

Active Learning: A Game Changer in Data Annotation

Instead of treating all unlabeled examples as equally valuable, active learning allows the model to identify the samples it is least confident about and request labels for those only. This creates a more efficient loop where labeling efforts are focused on the data that contributes the most to model improvement. It also helps teams avoid wasting time on redundant or predictable examples that do little to improve learning.
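The selection step described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: it assumes the model already produces class probabilities for each unlabeled item, and it uses the common "least confidence" score (one minus the top class probability). The function names, sample IDs, and probabilities are all hypothetical.

```python
from typing import Dict, List, Sequence


def least_confidence(probs: Sequence[float]) -> float:
    """Uncertainty score: 1 minus the probability of the top predicted class."""
    return 1.0 - max(probs)


def select_for_labeling(
    pool_probs: Dict[str, Sequence[float]], budget: int
) -> List[str]:
    """Return the IDs of the `budget` most uncertain unlabeled samples."""
    ranked = sorted(
        pool_probs,
        key=lambda sample_id: least_confidence(pool_probs[sample_id]),
        reverse=True,  # most uncertain first
    )
    return ranked[:budget]


# Hypothetical class probabilities from a 3-class model on four unlabeled items.
pool = {
    "doc_a": [0.98, 0.01, 0.01],  # model is confident
    "doc_b": [0.40, 0.35, 0.25],  # model is unsure
    "doc_c": [0.70, 0.20, 0.10],
    "doc_d": [0.34, 0.33, 0.33],  # model is close to guessing
}

to_label = select_for_labeling(pool, budget=2)
print(to_label)  # IDs to send to annotators in this round
```

In a full loop, the newly labeled items would be added to the training set, the model retrained, and the remaining pool scored again.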

One of the most compelling aspects of active learning is how it makes annotation an interactive process between the model and the annotator. As the model develops, its understanding of the data improves, and the set of examples it needs help with becomes more focused. Active learning can significantly reduce the workload in domains where labeled data is difficult to obtain due to cost, expertise, or privacy concerns.

The broader impact of active learning goes beyond simply saving time or reducing costs. It encourages a more thoughtful, feedback-driven approach to training models, where data selection becomes part of the training process rather than a static input. As machine learning continues to move into areas with limited labeled data, active learning stands out as a key enabler of practical and scalable solutions.

How Active Learning Optimizes Sample Selection

The way active learning selects samples is central to its value: it reduces labeling effort while maintaining or even improving model performance. It introduces a feedback loop between the model and the data selection process, where the model helps decide which examples to label next. The result is a learning pipeline that is not only faster but also more responsive to the model's current state. Here are some of the ways that active learning optimizes sample selection:

- Uncertainty sampling. The model selects the data points it is least sure about, focusing on the areas where it needs the most help to improve.

- Query by committee. Multiple models or variations of a model evaluate the same data, and the samples on which they disagree most are selected for labeling, providing diversity and reducing bias.

- Diversity sampling. Instead of labeling similar examples, this strategy selects diverse data points to cover more of the input space and improve generalization.

- Iterative feedback loops. The model learns in cycles, refining its understanding and recalibrating which data is most informative for the next labeling round.

- Stopping criteria. Built-in mechanisms help determine when enough informative data has been labeled, preventing unnecessary annotation past the point of diminishing returns.
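Two of the ideas in the list above, committee-based selection and stopping criteria, can be made concrete with a short sketch. Vote entropy is one common way to measure committee disagreement, and the plateau check is only one simple stopping rule among many; the function names, the `min_gain` and `patience` parameters, and the sample data are assumptions made for illustration.

```python
import math
from collections import Counter
from typing import Sequence


def vote_entropy(votes: Sequence[str]) -> float:
    """Committee disagreement: entropy of the labels predicted by each member."""
    total = len(votes)
    counts = Counter(votes)
    return sum(-(c / total) * math.log(c / total) for c in counts.values())


def should_stop(
    accuracy_history: Sequence[float], min_gain: float = 0.005, patience: int = 2
) -> bool:
    """Stop when each of the last `patience` rounds gained less than `min_gain`."""
    if len(accuracy_history) <= patience:
        return False  # not enough rounds to judge yet
    recent = accuracy_history[-(patience + 1):]
    gains = [later - earlier for earlier, later in zip(recent, recent[1:])]
    return all(gain < min_gain for gain in gains)


# Hypothetical predictions from a three-model committee on three unlabeled items.
committee_votes = {
    "x1": ["cat", "cat", "cat"],    # full agreement
    "x2": ["cat", "dog", "cat"],    # partial disagreement
    "x3": ["cat", "dog", "bird"],   # full disagreement
}
most_contested = max(committee_votes, key=lambda k: vote_entropy(committee_votes[k]))
print(most_contested)

# Hypothetical validation accuracy after each labeling round.
history = [0.70, 0.78, 0.81, 0.812, 0.813]
print(should_stop(history))
```

The stopping rule here looks only at validation accuracy; in practice a labeling budget or a confidence-based criterion may be more appropriate.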

Smart Sample Selection for Improved Model Accuracy

Rather than assuming that more data always leads to better results, smart sample selection emphasizes the quality and relevance of the examples selected. By focusing on the right samples, models are exposed to outliers, ambiguities, and underrepresented patterns that random sampling might otherwise miss. This leads to richer learning and better generalization, especially in real-world settings where input data distribution is rarely uniform.

One of the key benefits of smart sampling is that it aligns the labeling effort with specific model weaknesses. Instead of building a dataset in isolation, the process becomes interactive, guided by model behavior, performance metrics, or sampling strategies designed to fill knowledge gaps. Such targeted selection helps avoid overfitting to redundant data and allows models to handle complex or rare scenarios better. It creates a more adaptive learning process where each newly labeled example serves a clear purpose. As a result, improvements in model accuracy can often be achieved with much less labeling effort than traditional approaches would require.

Ensuring Quality Control in Data Annotation

Without consistent and accurate annotations, models risk learning from imperfect or noisy data, which can lead to poor performance and unpredictable behavior. Quality control includes implementing processes to detect, correct, and prevent errors throughout the annotation workflow. This attention to detail helps maintain a high standard across the dataset, even as volumes grow and multiple annotators contribute to the labeling effort. Maintaining this consistency is especially important when dealing with subjective tasks or complex data types where interpretation can vary widely.

Several strategies are commonly used to maintain annotation quality, balancing the need for thoroughness with efficiency. Peer review or cross-checking, where multiple annotators label the same data and the results are compared, helps identify discrepancies early. Automated checks can identify obvious errors or inconsistencies, reducing the workload of human reviewers. Clear and detailed instructions that are regularly updated based on feedback and observed issues provide a framework for annotators and minimize ambiguity. In addition, ongoing training and communication with annotators ensure that everyone adheres to the project's goals and standards.
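When two annotators label the same items, their agreement can be summarized with a single number. The sketch below uses Cohen's kappa, a standard statistic that corrects raw agreement for agreement expected by chance. The choice of metric is an assumption made here for illustration, and the label data is hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item set.")
    n = len(labels_a)
    # Fraction of items on which the two annotators gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random with their own label rates.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical sentiment labels from two annotators on the same eight items.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))
```

A value near 1 indicates strong agreement, while a value near 0 means the annotators agree about as often as chance would predict, which usually signals that the guidelines need revisiting.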

Human-in-the-Loop: Enhancing Annotation Accuracy

Rather than relying solely on automated systems or manual labeling, a human-in-the-loop approach integrates human expertise at key points in the workflow to review, correct, or guide the annotation process. By selectively engaging humans, especially where the model is uncertain or likely to make mistakes, teams can improve data quality while benefiting from the efficiencies of automation. Such collaboration allows for a more nuanced understanding of complex or ambiguous data that machines may have difficulty interpreting independently.
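One simple way to engage humans selectively is to route predictions by confidence: labels the model is sure about are accepted automatically, and the rest go to a review queue. The sketch below assumes each prediction comes with a confidence score; the threshold of 0.9, the function name, and the example data are hypothetical and would need tuning for a real project.

```python
from typing import Dict, List, Tuple

# (item_id, predicted_label, confidence) as produced by some upstream model.
Prediction = Tuple[str, str, float]


def route_predictions(
    predictions: List[Prediction], threshold: float = 0.9
) -> Tuple[Dict[str, str], List[str]]:
    """Split predictions into auto-accepted labels and a human review queue."""
    auto_accepted: Dict[str, str] = {}
    review_queue: List[str] = []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_accepted[item_id] = label  # trusted without human review
        else:
            review_queue.append(item_id)  # needs a human decision
    return auto_accepted, review_queue


# Hypothetical model output for four items.
model_output = [
    ("img_001", "pedestrian", 0.97),
    ("img_002", "cyclist", 0.55),
    ("img_003", "pedestrian", 0.91),
    ("img_004", "vehicle", 0.62),
]

accepted, needs_review = route_predictions(model_output)
print(accepted)
print(needs_review)
```

Auto-accepted labels are still worth spot-checking, since a model can be confidently wrong.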

One of the main advantages of human-in-the-loop systems is their ability to dynamically adapt to the ever-changing needs of a machine learning project. Humans can provide feedback that helps improve models and annotation tools, creating a continuous improvement cycle. This approach also helps to identify outliers or rare events that automated processes may miss or mislabel.

Integrating Automated Processes into Labeling Workflows

Automation can handle repetitive, simple tasks, freeing human annotators to focus on more complex or ambiguous cases that require nuanced judgment. Integrating automation is not only about speeding up the process; it also improves overall quality by standardizing routine steps and allowing human input to be more focused and effective. Some common points in the labeling workflow where automated processes can be integrated include:

- Data pre-processing and cleaning. Automating raw data preparation, such as noise filtering, formatting, or normalizing input data before annotation begins.

- Initial labeling or weak supervision. Models or heuristics create preliminary labels that annotators can review and correct instead of starting from scratch.

- Consistency checking. Automatically flag inconsistencies or conflicts in annotations across multiple annotators for further review.

- Quality control and validation. Use automated tools to identify errors, missing labels, or anomalies requiring human attention.

- Post-annotation processing. Automate the aggregation, formatting, and integration of annotated data into training sets or databases to streamline the handoff to modeling teams.
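As an illustration of the consistency-checking and aggregation steps in the list above, the following sketch resolves each item by majority vote and flags items where annotators disagree too much. The `min_agreement` threshold of 0.6, the function name, and the example annotations are assumptions chosen for this example.

```python
from collections import Counter
from typing import Dict, List, Tuple


def aggregate_labels(
    annotations: Dict[str, List[str]], min_agreement: float = 0.6
) -> Tuple[Dict[str, str], List[str]]:
    """Resolve items by majority vote; flag items with weak or missing agreement."""
    resolved: Dict[str, str] = {}
    flagged: List[str] = []
    for item_id, labels in annotations.items():
        if not labels:
            flagged.append(item_id)  # nothing to aggregate
            continue
        top_label, top_count = Counter(labels).most_common(1)[0]
        if top_count / len(labels) >= min_agreement:
            resolved[item_id] = top_label
        else:
            flagged.append(item_id)  # send back for human adjudication
    return resolved, flagged


# Hypothetical labels from three annotators per item.
raw_annotations = {
    "img_1": ["car", "car", "car"],
    "img_2": ["car", "truck", "car"],
    "img_3": ["car", "truck", "bus"],
}

final_labels, conflicts = aggregate_labels(raw_annotations)
print(final_labels)
print(conflicts)
```

Only the flagged items need a second look, which keeps reviewer time focused on genuine disagreements.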

Summary

Reducing manual labeling efforts through smart sample selection reshapes how machine learning teams approach data annotation by making the process more efficient, targeted, and effective. Instead of labeling every data point, this approach focuses on selecting the most informative and impactful samples, which helps save time, reduce costs, and improve model accuracy. Techniques such as active learning and human-in-the-loop systems create dynamic feedback loops between models and annotators, allowing for continuous refinement and better use of human expertise. Integrating automation at key points in the workflow further streamlines the process, balancing speed with quality control. These innovations enable organizations to build high-performing models with less manual effort, making machine learning more accessible and scalable across diverse applications.

FAQ

What is the primary goal of reducing manual labeling through smart sample selection?

The main goal is to minimize the amount of data that needs to be manually labeled by focusing on the most informative and impactful samples. This approach saves time and resources while maintaining or improving model performance.

Why is manual labeling considered a bottleneck in machine learning projects?

Manual labeling is time-consuming, expensive, and often inconsistent, especially with large or complex datasets. It slows down development and can limit the scalability of machine-learning efforts.

How does active learning help in reducing labeling efforts?

Active learning allows the model to identify uncertain or ambiguous samples and request labels only for those. This targeted approach reduces redundant labeling and focuses human effort where it is needed most.

What role does human-in-the-loop play in data annotation?

Human-in-the-loop combines automated labeling with human review, allowing experts to correct or guide the process. This improves accuracy and helps handle complex or ambiguous cases that machines might misinterpret.

Where can automation be integrated into the labeling workflow?

Automation can be applied in pre-processing data, generating initial labels, checking annotation consistency, quality control, and post-annotation data processing. This reduces manual workload and speeds up the workflow.

Why is quality control important in data annotation?

Quality control ensures that labeled data is accurate and consistent, which is critical for training reliable machine learning models. Without it, errors can propagate and degrade model performance.

How does smart sample selection improve model accuracy?

Smart sample selection prioritizes challenging, diverse, and informative samples. This exposes models to data that helps them generalize better. It avoids redundancy and focuses learning on areas where improvement is most needed.

What are some common challenges in manual labeling?

Challenges include high time and cost requirements, inconsistency among annotators, difficulty handling complex or subjective data, and inefficiency when labeling redundant or low-value samples.

How does iterative feedback in active learning benefit the annotation process?

Iterative feedback allows the model to continually refine its understanding and adjust which samples it requests for labeling. This dynamic cycle leads to more efficient learning with fewer labeled examples.

What impact does integrating automated processes have on labeling workflows?

Integrating automation streamlines repetitive tasks, reduces human error, and accelerates annotation. It enables teams to scale labeling efforts while maintaining quality through a balanced human-machine collaboration.