LLM as a Judge for Automated Output Evaluation

Evaluating LLM responses is one of the most critical stages of development, because a product cannot be improved without an objective measure of quality. As models and their use cases have grown more complex, however, traditional verification methods have struggled to keep up.

Manual evaluation of responses by humans is considered the "gold standard," but it has significant drawbacks, the main one being the impossibility of scaling. Reviewing thousands of dialogues daily requires enormous human resources, making the process extremely expensive and slow. Additionally, there is the problem of consistency: different people may interpret the "politeness" or "usefulness" of a response differently, which introduces subjectivity into the final metrics.

Slow evaluation holds back development cycles, because developers cannot get prompt feedback after every change to model settings. This creates a need for an automated approach that combines the flexibility of human judgment with the throughput of a machine. It is against this backdrop that the concept of LLM-as-a-Judge emerged: one powerful model takes on the role of an expert and evaluates the outputs of other models, producing results quickly and relatively cheaply.

Quick Take

- An LLM judge can evaluate thousands of responses quickly, making evaluation much faster and cheaper than manual review.

- Unlike mathematical metrics, an AI judge understands context, logic, style, and complex user instructions.

- For high-quality evaluation, the judge requires a detailed prompt with a description of scores and examples of "ideal" responses.

- The best result is provided by a system where AI performs bulk verification and human experts analyze only the disputed cases.

- Real-time automatic evaluation allows AI systems to self-improve and pass through update cycles faster.

The Essence of the Judge Model's Work

The concept of using artificial intelligence to check another artificial intelligence is becoming a standard in the development of modern systems. This approach allows for the transformation of subjective feelings about text quality into concrete numbers and data that can be analyzed and compared across different model versions.

The Idea of One Model Evaluating Another

The approach is based on the idea of using a powerful model to check the output of smaller or specialized algorithms. Imagine a situation where an experienced teacher grades the essays of their students. In this case, the role of the teacher is performed by a large model, such as GPT-4, which has a deep understanding of language and context.

Such automated evaluation makes it possible to process thousands of responses in a short time. The judge analyzes the text not just for the presence of required words, but for logical coherence and the appropriateness of what was stated. This is often more accurate than older methods based on surface text comparison, because a large model can recognize synonyms and follow complex instructions.

Thanks to this, developers receive consistent, comparable LLM evaluation metrics that show how well the model is handling the assigned task. The process works like an automated conveyor: the first model generates a response, and the second model, the judge, examines it and renders a verdict. This provides the rapid feedback essential for the constant improvement of the technology.
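The conveyor described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: `Verdict`, `run_pipeline`, `fake_generate`, and `fake_judge` are hypothetical names, the 1 to 5 scale is an assumption, and the two stub functions stand in for real model calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    score: int       # 1 (poor) to 5 (excellent); the scale is an assumption
    reasoning: str   # short justification returned by the judge


def run_pipeline(
    prompt: str,
    generate: Callable[[str], str],
    judge: Callable[[str, str], Verdict],
) -> tuple[str, Verdict]:
    """Generate a response, then hand the prompt and response to the judge."""
    response = generate(prompt)
    verdict = judge(prompt, response)
    return response, verdict


# Stand-ins for real model calls (hypothetical, for illustration only).
def fake_generate(prompt: str) -> str:
    return "Paris is the capital of France."


def fake_judge(prompt: str, response: str) -> Verdict:
    # A real judge would be an LLM call; this stub only checks for a keyword.
    if "Paris" in response:
        return Verdict(score=5, reasoning="Names the correct city.")
    return Verdict(score=1, reasoning="Does not name the correct city.")


response, verdict = run_pipeline(
    "What is the capital of France?", fake_generate, fake_judge
)
print(verdict.score, verdict.reasoning)
```

Passing the generator and the judge in as plain callables keeps the pipeline independent of any particular model provider.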

Areas of Application for Automatic Evaluation

Automated evaluation covers a wide spectrum of text characteristics that previously only humans could verify. This makes model assessment a comprehensive tool that helps identify weaknesses in chatbot performance even before a real user sees them.

Here are the main parameters that a judge can analyze automatically:

- Factual correctness – checking whether the model invented non-existent facts or dates.

- Completeness of the response – analyzing whether all parts of the user’s query were taken into account.

- Logic and style – evaluating whether the text is consistent and whether it matches the specified tone of communication.

- Instruction following – checking whether the model adhered to constraints, such as word count or format.

- Safety – identifying harmful content or toxic statements.

Each of these points receives its own individual score, allowing one to see the full picture of quality. Such detailed LLM scoring helps developers understand exactly what needs to be adjusted: factual knowledge or the manner of communication. This makes the testing process clear and structured for the entire team.
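One way to turn per-criterion scores into a picture of where a model is weak is to average each criterion across many judged responses. The sketch below assumes the judge has already returned a 1 to 5 score for each of the five parameters listed above; the criterion keys, the `mean_per_criterion` helper, and the sample scores are made up for illustration.

```python
from statistics import mean

# Keys mirror the five parameters listed above (names are illustrative).
CRITERIA = [
    "factual_correctness",
    "completeness",
    "logic_and_style",
    "instruction_following",
    "safety",
]


def mean_per_criterion(judged: list[dict[str, int]]) -> dict[str, float]:
    """Average each criterion's score across all judged responses."""
    if not judged:
        raise ValueError("no judged responses")
    return {c: mean(scores[c] for scores in judged) for c in CRITERIA}


# Made-up judge output for two responses.
judged = [
    {"factual_correctness": 2, "completeness": 4, "logic_and_style": 5,
     "instruction_following": 4, "safety": 5},
    {"factual_correctness": 3, "completeness": 4, "logic_and_style": 4,
     "instruction_following": 5, "safety": 5},
]

means = mean_per_criterion(judged)
weakest = min(means, key=means.get)  # criterion with the lowest average
print(weakest, means[weakest])
```

In this toy data the lowest average falls on factual correctness, which would point the team toward factual knowledge rather than the manner of communication.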

Mechanics of Forming Evaluation Criteria

For a judge model to work effectively, it needs a clear instruction called an evaluation rubric. Without specific directions, even the smartest model can be too lenient or, conversely, too harsh. The process of setting up a judge begins with creating a special prompt that describes in detail what earns a high score and what earns a low one.

An important part of response quality evaluation is the use of examples. Developers add samples of "ideal" responses and examples of typical errors into the judge's prompt. This sets the correct scale for the system and helps avoid situations where the judge model evaluates texts randomly. It begins to orient itself toward the given standards, which makes the verification stable.

A specific scale is developed for each characteristic, for example, from one to five points. The judge must not simply provide a number but also explain its choice in text. Such justification helps humans understand the machine's logic and check that the score was given fairly. This creates a system of transparent control where every score is backed by explicit arguments.
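A rubric prompt with score descriptions, graded examples, and a required justification might be assembled as in the sketch below. The rubric wording, the JSON reply format, and the helper names `build_judge_prompt` and `parse_verdict` are assumptions chosen for illustration; a real setup would adapt them to its own criteria.

```python
import json

# Hypothetical rubric for a single characteristic (completeness).
RUBRIC = {
    5: "Fully answers every part of the question.",
    3: "Answers the main part but skips a secondary part.",
    1: "Does not answer the question.",
}


def build_judge_prompt(
    question: str, response: str, examples: list[tuple[str, int]]
) -> str:
    """Assemble rubric, graded examples, and the response to be judged."""
    lines = ["Grade the response for completeness on a 1-5 scale.", "Rubric:"]
    for score in sorted(RUBRIC, reverse=True):
        lines.append(f"  {score}: {RUBRIC[score]}")
    lines.append("Graded examples:")
    for example_text, example_score in examples:
        lines.append(f"  {example_text!r} -> {example_score}")
    lines.append(f"Question: {question}")
    lines.append(f"Response: {response}")
    lines.append('Reply with JSON: {"score": <1-5>, "reasoning": "<text>"}')
    return "\n".join(lines)


def parse_verdict(raw: str) -> tuple[int, str]:
    """Parse the judge's JSON reply; reject bad scores or empty reasoning."""
    data = json.loads(raw)
    score = int(data["score"])
    reasoning = str(data["reasoning"]).strip()
    if not 1 <= score <= 5:
        raise ValueError(f"score out of range: {score}")
    if not reasoning:
        raise ValueError("judge gave no justification")
    return score, reasoning


prompt = build_judge_prompt(
    "Name the capital of France and its population.",
    "The capital is Paris.",
    examples=[("Paris, about 2 million people.", 5), ("I like France.", 1)],
)
score, reasoning = parse_verdict(
    '{"score": 3, "reasoning": "Names the capital, omits the population."}'
)
print(score, reasoning)
```

Rejecting a reply that has no reasoning enforces the requirement that every score comes with an explanation.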

Limitations and Real Practice

Using artificial intelligence as an arbiter requires a critical approach. The judge model itself is a neural network, which means it can inherit the same errors it is intended to detect in others.

Typical Risks

One of the best-known risks is self-preference: a judge model tends to favor its own outputs or those of models similar to it. If the judge and the model being checked were developed by the same company, scores may be inflated because of a similar writing style. There is also a length (verbosity) bias: judge models often give higher scores to longer responses, even when they contain a lot of redundant information, simply because such text looks more substantial.
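A simple way to look for the length bias described above is to check whether judge scores rise with response length. The sketch below computes a Pearson correlation by hand; the `pearson` helper and the sample data are illustrative assumptions. A high value is only a warning sign, since longer answers can also be genuinely better.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Made-up data: word counts of responses and the scores the judge gave them.
word_counts = [40, 85, 120, 200, 310]
judge_scores = [2, 3, 3, 4, 5]

r = pearson(word_counts, judge_scores)
print(f"length/score correlation: {r:.2f}")
```

If the correlation stays high even among responses that human reviewers rated as equally good, the judge's prompt may need an explicit instruction not to reward length.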

Another challenge is hallucination by the judge itself. The model might invent an error where there is none or, conversely, miss a factual inaccuracy that is stated in a very confident tone. Additionally, evaluation quality depends heavily on how the instructions are phrased: even a small change in the judge's prompt can noticeably shift the final results. Finally, there are highly subjective tasks, such as writing poems or jokes, where even humans cannot always agree, and where an AI judge is correspondingly less reliable.

The Role of Annotation and Reference Data

In order to trust an automated judge, it must first be calibrated using human evaluations. Developers create small sets of "golden data" where every response has been checked by experienced assessors. This reference data serves as a mirror into which the judge model looks to understand if it is correctly interpreting the quality criteria.

Verifying the quality of an automatic evaluation comes down to comparing the model's scores with human scores. If the two coincide in the majority of cases, the system can be scaled to thousands of new tasks. Human annotation remains the foundation, as it is people who define what is ethical, useful, or correct in the long term. Only through constant comparison of automated scores with human judgment can a system be built that can truly be trusted in production.
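The comparison between judge scores and human scores on a golden set can be expressed as a simple agreement rate. In the sketch below, the `agreement_rate` helper, the optional `tolerance` parameter, and the sample scores are assumptions for illustration; what counts as "high enough" agreement is a decision each team has to make for itself.

```python
def agreement_rate(
    judge_scores: list[int], human_scores: list[int], tolerance: int = 0
) -> float:
    """Share of items where judge and human scores differ by at most `tolerance`."""
    if len(judge_scores) != len(human_scores) or not judge_scores:
        raise ValueError("need two non-empty score lists of equal length")
    matches = sum(
        abs(j - h) <= tolerance for j, h in zip(judge_scores, human_scores)
    )
    return matches / len(judge_scores)


# Made-up golden set: five responses scored by the judge and by assessors.
judge = [5, 4, 2, 3, 1]
human = [5, 3, 2, 3, 2]

exact = agreement_rate(judge, human)                  # identical scores only
within_one = agreement_rate(judge, human, tolerance=1)  # off by at most 1
print(exact, within_one)
```

Reporting both exact and within-one agreement helps separate small calibration drift from real disagreement about quality.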

Practical Usage Scenarios

Despite the limitations, the automated evaluation approach finds application at every stage of creating intelligent systems. This allows teams to move significantly faster and make decisions based on numbers rather than intuition.

Here is where this method works best:

- A/B testing of models. Quick comparison of two versions of an algorithm to understand which one better answers complex queries.

- Response filtering. Automatic weeding out of unsuccessful or dangerous text variants before they reach the end user.

- Continuous evaluation. Constant monitoring of the system's performance quality in real time after the product launch.

- Fine-tuning analysis. Rapid verification of how each new batch of training data affects the model's overall quality.

Using a judge model during fine-tuning allows developers to conduct dozens of experiments per day. This accelerates the evolution of artificial intelligence and enables the creation of safer, more useful tools for all of us.
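For the A/B testing scenario above, one minimal approach is to score both versions on the same prompts and count per-prompt wins. The `compare_versions` helper and the scores are hypothetical; a real comparison would use far more prompts and some check that the difference is not due to chance.

```python
from collections import Counter


def compare_versions(scores_a: list[int], scores_b: list[int]) -> Counter:
    """Count per-prompt wins for version A, wins for version B, and ties."""
    if len(scores_a) != len(scores_b):
        raise ValueError("both versions must be scored on the same prompts")
    outcome = Counter()
    for a, b in zip(scores_a, scores_b):
        if a > b:
            outcome["a"] += 1
        elif b > a:
            outcome["b"] += 1
        else:
            outcome["tie"] += 1
    return outcome


# Made-up judge scores for the same four prompts answered by two versions.
outcome = compare_versions([4, 3, 5, 2], [4, 4, 5, 4])
print(dict(outcome))
```

Counting wins per prompt rather than comparing overall averages makes it harder for a few extreme scores to decide the result.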

Coordination of Experts and Algorithms in Quality Control

The future of artificial intelligence evaluation lies in building efficient hybrid processes. This allows for utilizing the strengths of both sides to achieve an ideal result.

Hybrid Approaches to Data Verification

The most effective strategy is for the judge model to act as the first filter on the way to the final evaluation. It takes on the routine work, weeding out obviously correct or grossly erroneous responses. This frees up expert time to focus on complex cases where context is ambiguous or where a difficult ethical decision needs to be made.

Humans in such a system act as arbiters of last resort. They review only those responses where the judge model expressed doubt or where its evaluation diverged from expectations. This approach makes it possible to verify far more data with little loss of quality, as human attention is spent only on what truly requires human judgment.
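The routing logic of such a hybrid process can be sketched as a small triage function. Everything here is an assumption made for illustration: the `JudgedItem` fields, the idea that the judge reports a confidence value, and the thresholds, which would need tuning on real data.

```python
from dataclasses import dataclass


@dataclass
class JudgedItem:
    item_id: str
    score: int         # judge score on a 1-5 scale (assumed)
    confidence: float  # judge's self-reported confidence, 0-1 (hypothetical)


def route(
    item: JudgedItem, low: int = 2, high: int = 4, min_confidence: float = 0.7
) -> str:
    """Send clear cases through automatically and the rest to a human."""
    if item.confidence < min_confidence:
        return "human_review"   # the judge expressed doubt
    if item.score >= high:
        return "auto_accept"    # obviously good response
    if item.score <= low:
        return "auto_reject"    # grossly erroneous response
    return "human_review"       # middle of the scale: ambiguous


items = [
    JudgedItem("r1", score=5, confidence=0.95),
    JudgedItem("r2", score=1, confidence=0.90),
    JudgedItem("r3", score=3, confidence=0.90),
    JudgedItem("r4", score=5, confidence=0.40),
]
routes = {item.item_id: route(item) for item in items}
print(routes)
```

Checking confidence first means a high score never bypasses review when the judge itself was unsure.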

What This Means for the Future of AI Development

The transition to using artificial intelligence as a judge radically changes the speed of technological development. Instead of waiting weeks for testing results, development teams are moving to continuous automatic evaluation. This means that every new idea or change in code can be checked instantly, allowing for much more frequent model updates.

The quality of systems becomes more manageable and predictable. Developers can precisely tune the "behavior" of their models, guided by measurable numbers from automated judges. In the future, this will lead to the creation of systems that independently detect their own errors and learn from them in real time, making artificial intelligence much more reliable and safer for every user.

FAQ

How to avoid position bias?

AI judges often choose the first response out of two simply because of its place in the prompt. To fix this, "cross-evaluation" is conducted: the model evaluates a pair of responses twice, swapping their places each time, and the final result is averaged.

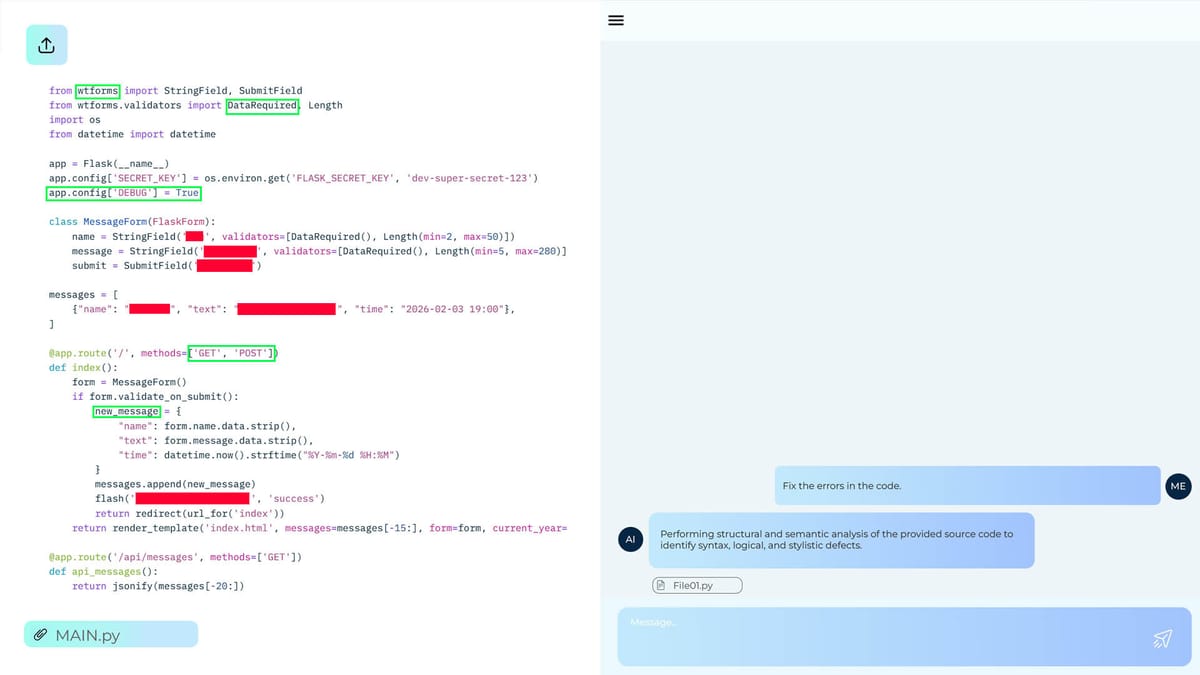

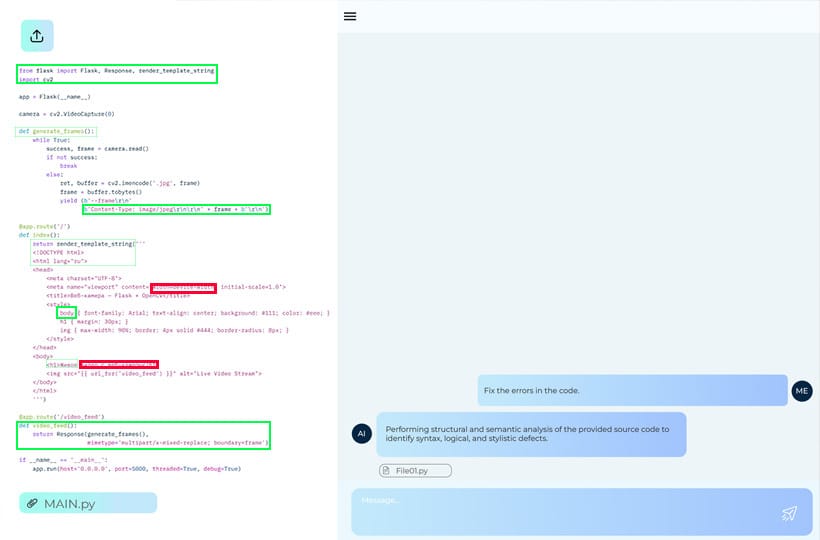
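The swap-and-average procedure from this answer can be sketched as follows. The `PairJudge` signature, the `swap_averaged_scores` helper, and the deliberately biased stub judge are assumptions for illustration; the stub simply adds a bonus to whichever response is shown first.

```python
from typing import Callable

# A pairwise judge takes (question, first, second) and returns a score for
# each response in the order shown. This signature is an assumption.
PairJudge = Callable[[str, str, str], tuple[float, float]]


def swap_averaged_scores(
    question: str, resp_a: str, resp_b: str, judge: PairJudge
) -> tuple[float, float]:
    """Judge the pair twice with positions swapped and average the scores."""
    a_first, b_second = judge(question, resp_a, resp_b)  # A shown first
    b_first, a_second = judge(question, resp_b, resp_a)  # B shown first
    return (a_first + a_second) / 2, (b_first + b_second) / 2


# Stub judge with a built-in position bias (for illustration only).
TRUE_QUALITY = {"short answer": 3.0, "detailed answer": 4.0}


def biased_judge(question: str, first: str, second: str) -> tuple[float, float]:
    return TRUE_QUALITY[first] + 1.0, TRUE_QUALITY[second]


single_pass = biased_judge("Q", "short answer", "detailed answer")
averaged = swap_averaged_scores(
    "Q", "short answer", "detailed answer", biased_judge
)
print(single_pass, averaged)
```

In a single pass the bias makes the two responses look equal; after swapping and averaging, the bonus is shared evenly and the stronger response comes out ahead.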

Is the judge model suitable for evaluating program code or mathematical expressions?

Such a check is quite possible, although it is more complicated than analyzing ordinary text. For code evaluation, it is better to provide the judge not only with the program text itself, but also with the results of its execution. In this case, the LLM analyzes not only the correctness of the syntax but also how effectively this code solves the task.
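Supplying execution results alongside the code might look like the sketch below, which handles Python snippets only. The helper names `run_snippet` and `build_code_judge_prompt` and the prompt wording are assumptions. Note that a subprocess is not a sandbox: untrusted code should be run in an isolated environment.

```python
import subprocess
import sys


def run_snippet(code: str, timeout: float = 5.0) -> dict:
    """Run Python code in a separate interpreter and capture the outcome.

    This is NOT a sandbox; isolate untrusted code before running it.
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return {"exit_code": None, "stdout": "", "stderr": "timed out"}
    return {
        "exit_code": proc.returncode,
        "stdout": proc.stdout,
        "stderr": proc.stderr,
    }


def build_code_judge_prompt(task: str, code: str, result: dict) -> str:
    """Give the judge the task, the code, and what happened when it ran."""
    return "\n".join([
        f"Task: {task}",
        "Submitted code:",
        code,
        f"Exit code: {result['exit_code']}",
        f"Stdout: {result['stdout'].strip()}",
        f"Stderr: {result['stderr'].strip()}",
        "Does the code solve the task? Give a 1-5 score and a short reason.",
    ])


snippet = "print(2 + 2)"
result = run_snippet(snippet)
prompt = build_code_judge_prompt("Print the sum of 2 and 2.", snippet, result)
print(prompt)
```

With the exit code and output in the prompt, the judge can reason about what the code actually did instead of guessing from the source alone.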

How does the cost of tokens affect the choice of a judge model?

For mass evaluation, teams often use smaller models that are specifically trained to evaluate text. This is cheaper than constantly calling massive models like GPT-4, while still maintaining an acceptable level of accuracy.
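The cost trade-off can be estimated with simple arithmetic. In the sketch below, the token counts and per-million-token prices are made-up placeholders, not real pricing for any provider, and `evaluation_cost` is a hypothetical helper.

```python
def evaluation_cost(
    num_responses: int,
    input_tokens: int,
    output_tokens: int,
    price_in_per_million: float,
    price_out_per_million: float,
) -> float:
    """Estimated cost of judging `num_responses` responses."""
    total_in = num_responses * input_tokens
    total_out = num_responses * output_tokens
    return (
        total_in / 1_000_000 * price_in_per_million
        + total_out / 1_000_000 * price_out_per_million
    )


# Placeholder numbers: 10,000 responses, 1,500 prompt tokens and
# 200 verdict tokens each. Prices are invented for the comparison.
large = evaluation_cost(10_000, 1_500, 200, 10.0, 30.0)
small = evaluation_cost(10_000, 1_500, 200, 0.5, 1.5)
print(f"large judge: ${large:.2f}, small judge: ${small:.2f}")
```

Input tokens usually dominate the bill, because the rubric and examples are resent with every response being judged.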

What is "agreement rate" and why is it important?

This is an indicator of how often the LLM judge's scores match those of human experts. If this indicator is low, developers revise the judge's prompt or add more examples for calibration.

How does an LLM judge fight the hallucinations of another model?

The judge can be given access to external sources of information or reference facts. Then it compares the response not with its own knowledge, but with a verified text, which minimizes the risk of missing invented information.
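Grounding the judge in reference facts can be as simple as placing the verified text in the prompt and instructing the judge to rely on it alone. The prompt wording and the `build_grounded_prompt` helper are assumptions for illustration.

```python
def build_grounded_prompt(response: str, reference_facts: list[str]) -> str:
    """Ask the judge to check a response against verified facts only."""
    if not reference_facts:
        raise ValueError("at least one reference fact is required")
    facts = "\n".join(
        f"{i}. {fact}" for i, fact in enumerate(reference_facts, start=1)
    )
    return (
        "Check the response using ONLY the reference facts below. "
        "Do not rely on your own knowledge.\n"
        f"Reference facts:\n{facts}\n"
        f"Response to check:\n{response}\n"
        "List every claim that is contradicted by, or absent from, "
        "the reference facts."
    )


prompt = build_grounded_prompt(
    "The Eiffel Tower was completed in 1899.",
    ["The Eiffel Tower was completed in 1889.", "It is located in Paris."],
)
print(prompt)
```

Numbering the facts lets the judge cite which one a claim contradicts, which makes its verdict easier for a human to verify.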