LLM Agents: Building Autonomous AI Systems That Reason and Act

The rapid development of large language models (LLMs) has significantly influenced modern approaches to building intelligent systems. Models capable of processing natural language, code, and multimodal data are increasingly used in applied tasks such as information analysis, decision support, and the automation of complex processes.

Interest in agent-based approaches built on large language models stems from both the practical need for automation and the theoretical challenges of interpretability, reliability, and control of autonomous behavior. Autonomous language agents are considered a promising step toward intelligent systems that combine the flexibility of statistical models with elements of purposeful action.

Why LLM Agents Matter Right Now

Agent-based approaches built on large language models matter now because several technological and methodological directions are developing at the same time. On the one hand, language models have reached a level where they can support complex chains of reasoning, work with heterogeneous sources of information, and generalize knowledge beyond individual tasks. On the other hand, there is a growing need for systems that go beyond passively generating answers and can operate in dynamic environments.

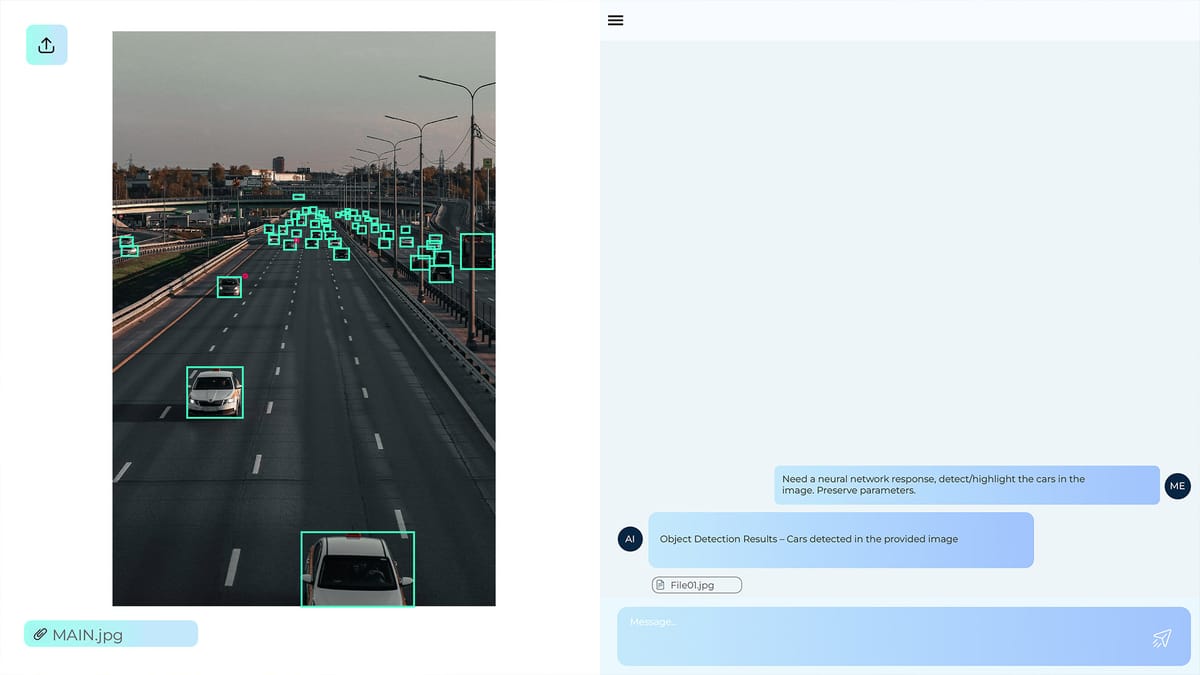

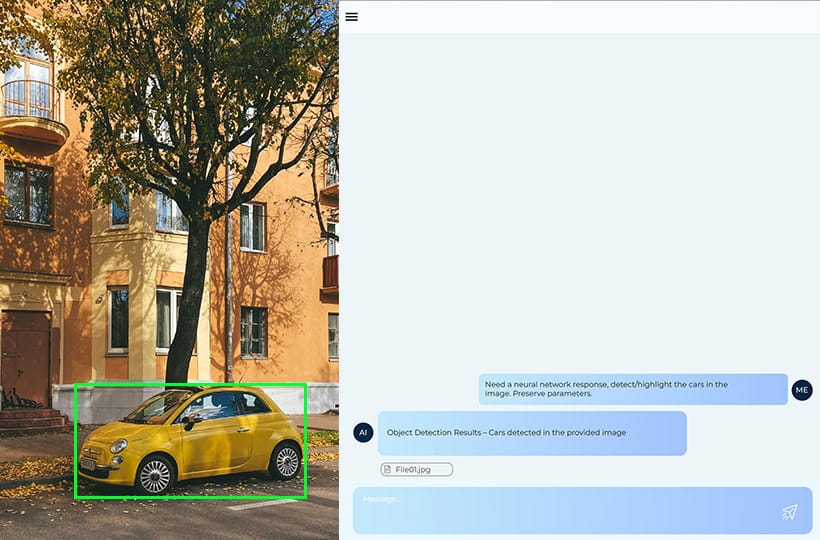

Modern autonomous AI agents are practically significant because they integrate language models with external components for execution, memory, and control. This enables systems not only to formulate plans but also to carry them out through agent tools that interact with software environments, services, and data.

Static pipelines and rigidly defined scenarios increasingly fail to meet the requirements of real-world environments, where flexibility, scalability, and the ability to self-correct are essential. LLM-based agent systems provide a generalized paradigm that unifies reasoning, data, and tools within a framework for autonomous behavior.

What Are LLM Agents

LLM agents represent a modern approach to building artificial intelligence systems in which large language models are combined with mechanisms for planning, memory, and interaction with the external environment. Such systems can not only process information but also perform multi-stage actions, adapting to a changing context and evaluating the results of their decisions. The LLM agent architecture integrates language models with agent tools, including APIs, databases, and software services, which significantly expands what autonomous agents can do.

Training data plays a key role. Agent training data determines how well the system maintains consistent logic and coherent actions, which in turn affects the stability and predictability of its behavior. Combined with planning algorithms and reasoning mechanisms, such systems form reasoning agents that can break complex tasks into subtasks and adjust their strategy based on intermediate results.

Integrating all these components makes LLM agents an example of autonomous AI agents that combine the adaptability and flexibility of language models with the practical ability to act in a real environment. This distinguishes them from traditional LLMs, which are limited to text generation, and lays the groundwork for widespread application in business, research, and complex technical tasks.

When Basic LLMs or Simple RAG Aren’t Enough

In many application scenarios, standard large language models or simple RAG (retrieval-augmented generation) approaches fall short because they lack consistency and adapt poorly. Such systems work well for text generation or information retrieval, but they cannot sustain long-term strategies or coordinate multi-step processes.
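The difference can be illustrated with a small sketch. Everything in it is hypothetical: the two-document `CORPUS`, the keyword-matching `retrieve` function, and the rule that the agent's follow-up query is simply the last document it read (a stand-in for an LLM writing its own next query). A one-shot RAG pipeline retrieves once with the user's question, while the agent-style loop keeps retrieving until it stops learning anything new, which is what a multi-hop question needs.

```python
from typing import Optional

# Hypothetical toy corpus: answering the question below needs two linked documents.
CORPUS = {
    "acme widget": "The Acme Widget is made by Globex.",
    "globex": "Globex was founded by Jane Doe.",
}

def retrieve(query: str, seen: set[str]) -> Optional[tuple[str, str]]:
    """Naive keyword retriever: return the first unseen document whose key occurs in the query."""
    text = query.lower()
    for key, doc in CORPUS.items():
        if key in text and key not in seen:
            return key, doc
    return None

def one_shot_rag(question: str) -> list[str]:
    """Simple RAG: a single retrieval with the user's question, then generation."""
    hit = retrieve(question, set())
    return [hit[1]] if hit else []

def iterative_agent(question: str, max_steps: int = 3) -> list[str]:
    """Agent-style loop: each retrieved document shapes the next query."""
    seen: set[str] = set()
    evidence: list[str] = []
    query = question
    for _ in range(max_steps):
        hit = retrieve(query, seen)
        if hit is None:
            break  # nothing new found, so stop gathering evidence
        key, doc = hit
        seen.add(key)
        evidence.append(doc)
        query = doc  # stand-in for the LLM writing a follow-up query from what it learned
    return evidence

question = "Who founded the company that makes the Acme Widget?"
print(one_shot_rag(question))     # only the Acme Widget document
print(iterative_agent(question))  # both documents, enough to answer the question
```

With a single retrieval, the pipeline learns only who makes the product; the loop follows that link to the company's founder.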

The quality of agent training data also plays a crucial role, as it determines the agent’s ability to maintain consistency and stability in its behavior. The combination of data, tools, and architectural solutions enables the creation of autonomous AI agents capable of performing tasks that previously required human intervention or numerous manual integrations.

Core Architecture of Agentic Systems

- LLM reasoning engine. The heart of the system provides natural language processing, logical reasoning, and action planning. This component forms the basis of reasoning agents, enabling them to analyze complex tasks and determine the sequence of steps required to achieve their goals.

- Memory and context management. Memory mechanisms store the interaction history and execution context, which keeps actions consistent in long-running scenarios. Agent training data further improves the system's ability to adapt and refine its strategies.

- Planning (decision) module. The planning component breaks complex tasks into subtasks and determines the optimal order of actions. Integrating it with the LLM makes autonomous AI agents more effective in dynamic environments.

- Tool integration layer. This layer lets the agent use agent tools to perform operations in external systems, including APIs, databases, web services, and specialized software environments.

- Feedback and evaluation loop. The feedback loop analyzes the results of performed actions and corrects further behavior, ensuring the consistency and reliability of autonomous task execution.

- Safety and control mechanisms. Control and constraint mechanisms ensure that agent systems operate within acceptable limits, minimizing risks and unintended consequences, which is especially important for autonomous AI agents.
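The components above can be wired together in a minimal sketch. It is not a production design: `reasoning_engine` is a hard-coded stand-in for an LLM call, the tools are local functions rather than real APIs, and the names `planner`, `Memory`, `ALLOWED_TOOLS`, `MAX_STEPS`, and `evaluate` are illustrative assumptions. What it shows is where each component sits in one execution loop.

```python
from dataclasses import dataclass, field
from typing import Callable

def reasoning_engine(goal: str) -> str:
    """Hypothetical stand-in for the LLM: returns a plan as 'tool:argument' lines."""
    if goal == "report total":
        return "sum_numbers:3,4,5\nformat_report:"
    return "delete_files:/tmp"  # an unsafe suggestion the safety layer should block

def planner(goal: str) -> list[tuple[str, str]]:
    """Planning (decision) module: parse the engine's text plan into ordered steps."""
    steps = []
    for line in reasoning_engine(goal).splitlines():
        tool, _, arg = line.partition(":")
        steps.append((tool.strip(), arg.strip()))
    return steps

@dataclass
class Memory:
    """Memory and context management: an ordered log of (tool, argument, result)."""
    history: list[tuple[str, str, str]] = field(default_factory=list)

    def add(self, tool: str, arg: str, result: str) -> None:
        self.history.append((tool, arg, result))

    def last_result(self) -> str:
        return self.history[-1][2] if self.history else ""

# Tool integration layer: local functions standing in for APIs, databases, or services.
def sum_numbers(arg: str, memory: Memory) -> str:
    return str(sum(int(x) for x in arg.split(",")))

def format_report(arg: str, memory: Memory) -> str:
    return f"Total: {memory.last_result()}"  # reads the previous step's output from memory

TOOLS: dict[str, Callable[[str, Memory], str]] = {
    "sum_numbers": sum_numbers,
    "format_report": format_report,
}

# Safety and control: an explicit allowlist and a hard step budget.
ALLOWED_TOOLS = {"sum_numbers", "format_report"}
MAX_STEPS = 5

def evaluate(result: str) -> bool:
    """Feedback loop: a deliberately simple check that the step produced usable output."""
    return bool(result) and not result.startswith("error")

def run_agent(goal: str) -> Memory:
    memory = Memory()
    for step, (tool, arg) in enumerate(planner(goal)):
        if step >= MAX_STEPS or tool not in ALLOWED_TOOLS:
            memory.add(tool, arg, "error: blocked by safety policy")
            break
        try:
            result = TOOLS[tool](arg, memory)
        except Exception as exc:  # tool failures become feedback instead of crashes
            result = f"error: {exc}"
        memory.add(tool, arg, result)
        if not evaluate(result):
            break  # a fuller agent would replan here instead of stopping
    return memory
```

Running `run_agent("report total")` leaves two entries in memory ending with `Total: 12`, while `run_agent("clean up")` is stopped by the allowlist before any tool runs.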

Planning in Practice: CoT, ToT, and Feedback Loops
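Chain of Thought (CoT) has a model reason through a problem as a single step-by-step chain, while Tree of Thoughts (ToT) generates several candidate next steps, scores them, and keeps only the most promising branches. The sketch below illustrates the ToT idea as breadth-first search with a beam on a toy puzzle (reach 10 from 1 using `+3` and `*2`). It is a simplification under stated assumptions: `propose` and `score` are ordinary functions standing in for LLM calls, and the distance-to-target heuristic is invented for the example. The scoring step also acts as a feedback loop, since each round of evaluation decides which branches survive.

```python
from typing import NamedTuple, Optional

class Thought(NamedTuple):
    value: int              # toy state reached by this line of reasoning
    steps: tuple[str, ...]  # the path of operations that led here

TARGET = 10
# Hypothetical "moves" available at each reasoning step.
OPERATIONS = {"+3": lambda v: v + 3, "*2": lambda v: v * 2}

def propose(thought: Thought) -> list[Thought]:
    """Thought generator: stand-in for asking an LLM for candidate next steps."""
    return [Thought(op(thought.value), thought.steps + (name,))
            for name, op in OPERATIONS.items()]

def score(thought: Thought) -> int:
    """State evaluator: stand-in for an LLM judging how promising a partial solution is."""
    return -abs(TARGET - thought.value)

def tree_search(start: int, beam_width: int, max_depth: int) -> Optional[tuple[str, ...]]:
    """Breadth-first, ToT-style search that keeps the best `beam_width` branches per level."""
    frontier = [Thought(start, ())]
    for _ in range(max_depth):
        candidates = [child for t in frontier for child in propose(t)]
        for c in candidates:
            if c.value == TARGET:
                return c.steps  # goal reached: return the reasoning path
        # Evaluate every branch and prune all but the most promising ones.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return None
```

With `beam_width=1` the search behaves like a single greedy chain and misses the answer (it goes 4, 8, 11), while `beam_width=2` keeps a second branch alive and finds `+3, +3, +3`.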

Designing Memory for Agents

Memory design involves several aspects. First, designers need to determine how much context to store and how to structure it, which governs how effectively the agent can build on its agent training data to generalize. Second, the system needs mechanisms for retrieving and updating information in real time, which give the agent flexibility when interacting with agent tools and external services.

Integrating memory with planning and feedback loops makes AI agents more autonomous. Preserving the interaction history lets agents not only react to the current state of the environment but also anticipate the consequences of future actions, optimizing their behavior toward complex goals.
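The two design questions above (how much context to keep, and how to retrieve and update it) can be sketched as a two-tier memory. The class name `AgentMemory`, the bounded `deque` for recent turns, and the word-overlap ranking in `recall` are illustrative assumptions; a real system would more likely rank stored facts by embedding similarity.

```python
from collections import deque

class AgentMemory:
    """Two-tier memory sketch: a bounded working context plus a keyed long-term store."""

    def __init__(self, context_size: int = 3) -> None:
        # Volume: the working context keeps only the most recent turns.
        self.context: deque[str] = deque(maxlen=context_size)
        # Structure: long-term facts live under a key so they can be updated in place.
        self.facts: dict[str, str] = {}

    def observe(self, turn: str) -> None:
        self.context.append(turn)  # the oldest turn is evicted automatically when full

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value  # real-time update: newer information overwrites older

    def recall(self, query: str, k: int = 2) -> list[str]:
        """Extraction: rank stored facts by word overlap between the query and the fact key."""
        words = set(query.lower().split())
        ranked = sorted(
            self.facts.items(),
            key=lambda item: len(words & set(item[0].lower().split())),
            reverse=True,
        )
        # Drop facts with no overlap at all so irrelevant entries never reach the prompt.
        return [value for key, value in ranked[:k] if words & set(key.lower().split())]

    def build_prompt(self, query: str) -> str:
        """Assemble what the reasoning engine would see: relevant facts plus recent turns."""
        facts = "\n".join(self.recall(query))
        recent = "\n".join(self.context)
        return f"Facts:\n{facts}\nRecent:\n{recent}\nTask: {query}"
```

Because `remember` overwrites by key, a fact updated mid-conversation (for example, a user's new city) replaces the stale value instead of competing with it at recall time.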

Tools and External Actions
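A common pattern for external actions is to have the model emit a structured tool call, which the agent runtime validates and executes. The sketch assumes a JSON format with `tool` and `arguments` fields and a small `@tool` registry; these names are hypothetical and do not correspond to any specific vendor API. Errors are returned as text rather than raised, so they can be fed back to the model as an observation.

```python
import json
from typing import Any, Callable

# Registry: tool name -> (function, declared parameter types). Hypothetical design.
TOOLS: dict[str, tuple[Callable[..., Any], dict[str, type]]] = {}

def tool(**params: type):
    """Register a function as an agent tool with declared parameter types."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[fn.__name__] = (fn, params)
        return fn
    return decorator

@tool(city=str)
def get_weather(city: str) -> str:
    # Hypothetical tool: a real one would call a weather API.
    return f"Sunny in {city}"

@tool(a=int, b=int)
def add(a: int, b: int) -> int:
    return a + b

def dispatch(model_output: str) -> str:
    """Parse a JSON tool call emitted by the model, validate it, and run it.

    Every failure is returned as text so the agent can read it and correct its next call.
    """
    try:
        call = json.loads(model_output)
        name, args = call["tool"], call.get("arguments", {})
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError) as exc:
        return f"error: malformed tool call ({exc})"
    if not isinstance(args, dict):
        return "error: arguments must be a JSON object"
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    fn, params = TOOLS[name]
    for param, expected in params.items():
        if not isinstance(args.get(param), expected):
            return f"error: argument {param!r} must be {expected.__name__}"
    return str(fn(**{p: args[p] for p in params}))
```

For example, `dispatch('{"tool": "add", "arguments": {"a": 2, "b": 3}}')` returns `"5"`, while a misspelled tool name returns an error string the model can react to.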

Summary

Modern LLM agents mark a shift from reactive language models to autonomous systems that combine reasoning and action. Their effectiveness stems from an architecture that integrates memory mechanisms, planning, tool use, and feedback loops. High-quality agent training data increases the consistency and reliability of their behavior, while agent tools give them the ability to affect the real environment.

This combination of components forms reasoning agents that can adapt to changes in context and perform multi-step tasks. Thanks to such capabilities, autonomous AI agents open up new prospects for automating complex processes, integrating with external systems, and creating more flexible intelligent solutions.

FAQ

What is the main advantage of an LLM agent over a standard LLM?

LLM agents combine reasoning with action, allowing them to execute multi-step tasks in dynamic environments. Their architecture integrates planning, memory, and external tools.

How does memory influence the behavior of LLM agents?

Memory stores context and past interactions, enabling reasoning agents to maintain coherent strategies. Proper design ensures adaptability and long-term task consistency.

What role do agent tools play in autonomous systems?

Agent tools allow LLM agents to interact with external APIs, databases, and software environments. This expands the system’s capabilities beyond text generation.

Why is agent training data important for LLM agents?

Agent training data determines the reliability and generalization of agent behavior. High-quality data supports consistent reasoning and effective decision-making.

What is the purpose of planning techniques like CoT and ToT?

Chain of Thought (CoT) guides a reasoning agent through a problem step by step, while Tree of Thoughts (ToT) explores several branching lines of reasoning and evaluates them before committing to one. Both improve problem-solving on multi-step tasks.

How do feedback loops enhance autonomous AI agents?

Feedback loops enable agents to assess the outcomes of their actions and adjust their plans accordingly. This mechanism increases adaptability and autonomy in dynamic environments.

What components are essential in LLM agent architecture?

Key components include the reasoning engine, memory, planner, tool integration layer, and feedback mechanisms. Together, they enable autonomous AI agents to perform complex tasks reliably.

In what scenarios are simple LLMs or RAG insufficient?

Standard LLMs or basic RAG systems struggle with multi-step reasoning and dynamic decision-making. LLM agents handle complex workflows that require planning and adaptive execution.

How does tool integration improve agent performance?

Integration with agent tools allows agents to act in the real world, execute commands, query databases, and automate processes. It bridges reasoning with practical actions.

What distinguishes reasoning agents from reactive LLMs?

Reasoning agents use context, memory, and planning to anticipate outcomes and adjust strategies. Reactive LLMs only respond to immediate prompts without long-term coherence.