How to Train an LLM: A Guide for Enterprise Teams

Enterprise teams are increasingly turning to large language models (LLMs) to automate workflows, improve decision-making, and engage with customers. Successful LLM training requires not only access to a pre-trained model but also a structured, step-by-step approach that aligns the model with your organization's specific data, processes, and compliance requirements.

This guide provides practical information for training, configuring, and integrating LLMs, ensuring they deliver reliable, high-quality results in real-world business scenarios.

Quick Take

- Extracting high-quality data from a diverse range of sources is essential for reliable model outputs.

- Multi-level deduplication and FAIR documentation reduce the risk of contamination.

- LoRA and adapter methods outperform full fine-tuning in cost and speed.

- Benchmarking and business metric evaluation ensure secure and compliant deployment.

Prerequisites and Architecture Selection for Large Enterprise-Level Language Models

Unlike public LLMs, enterprise models must work with internal data, adhere to privacy policies, comply with regulatory standards, and integrate with existing IT systems. This calls for a controlled deployment environment, predictable inference costs, and fine-tuning for specific business processes.

The choice of architecture begins with an assessment of the tasks the model must perform, such as document analysis, customer support, code generation, working with domain-specific knowledge, and automating internal processes. For complex scenarios, teams choose transformer architectures, whose attention mechanisms scale well and support contextual processing of large volumes of text. At the enterprise level, balancing efficiency and quality usually means fine-tuning a pretrained model and adapting it to specific business processes.

Infrastructure is another important factor: the availability of GPUs or dedicated accelerators, latency requirements, the ability to scale out, and support for MLOps processes. The architecture should include secure data management, logging, model versioning, and the ability to upgrade without stopping business services.
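
As a rough illustration of accelerator sizing, the sketch below estimates memory from parameter count alone. The byte counts are assumptions: 2 bytes per parameter for fp16 weights, and about 16 bytes per parameter for full fine-tuning with mixed-precision Adam (fp16 weights and gradients plus fp32 master weights and two optimizer moments). Activations, KV cache, and framework overhead are deliberately left out, so real requirements will be higher. The function names are hypothetical.

```python
# Bytes needed to store one parameter at a given precision (weights only).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Assumed cost of full fine-tuning with mixed-precision Adam:
# fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
# + Adam first moment (4) + Adam second moment (4) = 16 bytes per parameter.
TRAINING_BYTES_PER_PARAM = 16


def weights_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Memory to hold the model weights only, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def full_finetune_memory_gb(num_params: int) -> float:
    """Lower bound for full fine-tuning; excludes activations and overhead."""
    return num_params * TRAINING_BYTES_PER_PARAM / 1e9


if __name__ == "__main__":
    params = 7_000_000_000  # an illustrative 7B-parameter model
    print(f"fp16 weights:     {weights_memory_gb(params):.0f} GB")
    print(f"full fine-tuning: {full_finetune_memory_gb(params):.0f} GB or more")
```

Under these assumptions, the gap between serving and fully fine-tuning the same model is roughly a factor of eight, which is one reason parameter-efficient methods are attractive for enterprise teams.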

Designing Training Datasets

Designing training datasets is a key step in LLM training that significantly affects the quality, manageability, and security of the model. In addition to collecting domain data, teams need to write accurate instruction examples, align the model's behavior with business preferences, and scale the data using synthetic augmentation.

Taken together, these approaches enable stable results and adapt LLMs to real-world enterprise scenarios.
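
One way to make the three data types concrete is to give each a fixed record shape and store records as JSON Lines. The sketch below is only an illustration: the class names, field names, and the `source` flag that marks synthetic examples are assumptions, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class InstructionExample:
    """One instruction-tuning record: a task and the desired answer."""
    instruction: str
    response: str
    source: str = "human"  # hypothetical flag: "human" or "synthetic"


@dataclass
class PreferenceExample:
    """One preference record: two answers to the same prompt, one preferred."""
    prompt: str
    chosen: str
    rejected: str


def to_jsonl(records) -> str:
    """Serialize dataclass records to JSON Lines, one record per line."""
    return "\n".join(json.dumps(asdict(r), ensure_ascii=False) for r in records)


if __name__ == "__main__":
    data = [
        InstructionExample("Summarize the refund policy.", "Refunds are ..."),
        InstructionExample("Summarize the travel policy.", "Travel is ...",
                           source="synthetic"),
    ]
    print(to_jsonl(data))
```

Keeping the origin of each example in the record makes it possible to audit or down-weight synthetic data later.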

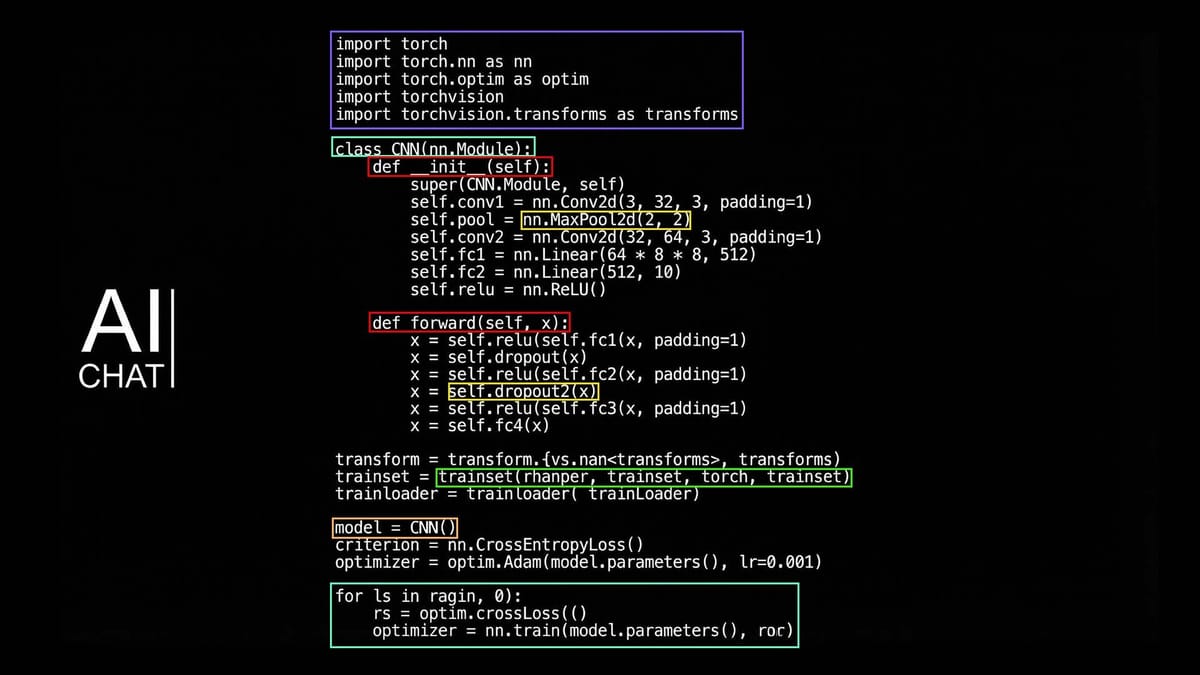

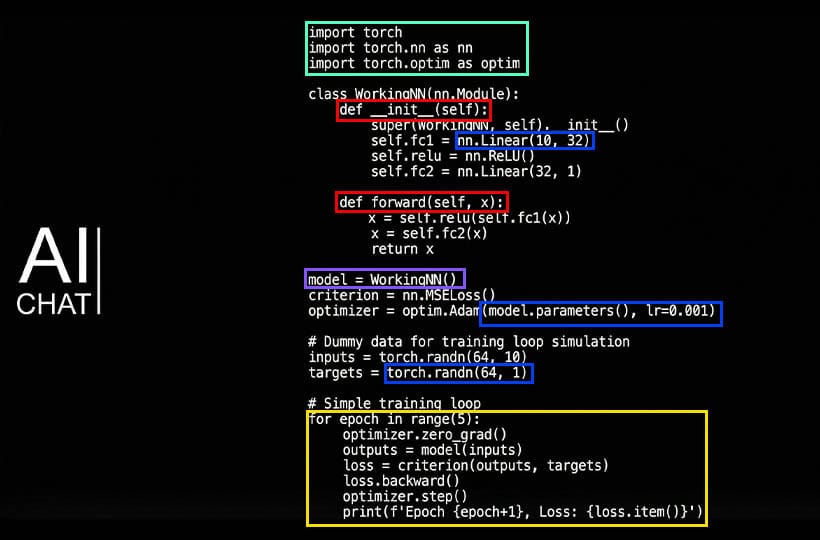

Training an LLM: A Step-by-Step Workflow

Training a large language model in an enterprise environment is a practical workflow that teams can implement today, using available tools and infrastructure.

1. Formulate business goals and use cases.

The process begins with a clear definition of tasks, including customer support, document analysis, internal search engines, code generation, or analytics.

2. Audit and prepare data.

Corporate teams analyze internal data sources. The data is cleaned, deduplicated, anonymized, and brought to a single format for training the LLM.

3. Design the training dataset.

Create instructions with examples and query scenarios to provide clear guidance. Add data for preference tuning and synthetic examples to cover rare situations as needed.

4. Select the base model and training strategy.

Teams select a foundation model and determine the approach: fine-tuning a pretrained model, LoRA/PEFT, instruction tuning, or retrieval-augmented generation (RAG). These methods adapt the model to the business without training an LLM from scratch.

5. Training and iterative refinement.

The model is trained on prepared data with short iterations. After each cycle, the results are analyzed, the dataset is adjusted, and the instructions are refined to improve the quality of the answers.

6. Quality and security assessment.

Automatic and manual evaluation is performed: accuracy, hallucinations, policy compliance, and resistance to unwanted requests. Domain experts are involved to verify the business relevance of the answers.

7. Deployment in a controlled environment.

The model is integrated into internal systems via APIs, chat interfaces, or plugins. Access control, logging, monitoring, and data usage restrictions are configured.

8. Monitoring and continuous learning.

After launch, teams collect user feedback, analyze errors, and gradually update the datasets and models. This turns the LLM into a living system that evolves in tandem with the business.
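
The data preparation in step 2 (cleaning, deduplication, anonymization) can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: duplicates are detected by exact match after whitespace and case normalization, and anonymization only masks email addresses with a simple regular expression. Production pipelines typically add near-duplicate detection and broader PII handling.

```python
import hashlib
import re

# Simplified email pattern; an assumption for illustration, not full PII coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())


def anonymize(text: str) -> str:
    """Replace email addresses with a placeholder token."""
    return EMAIL_RE.sub("[EMAIL]", text)


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, compared after normalization."""
    seen: set[str] = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


def prepare(docs: list[str]) -> list[str]:
    """Deduplicate first, then anonymize the surviving documents."""
    return [anonymize(doc) for doc in deduplicate(docs)]


if __name__ == "__main__":
    raw = ["Contact bob@example.com", "contact  bob@example.com", "Other text"]
    print(prepare(raw))
```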

Hyperparameters, PEFT, and Environment Optimization

Hyperparameter tuning and parameter-efficient fine-tuning (PEFT) techniques are essential for LLM training. They adapt the model by updating only a small share of its parameters, which reduces computational cost and shortens training while keeping response quality consistent, without retraining the entire model.
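
To see why PEFT is cheaper, consider LoRA, which freezes a weight matrix of shape d_out × d_in and trains a low-rank update made of two small matrices of shapes d_out × r and r × d_in. The sketch below simply counts trainable parameters for one such matrix; the dimensions and rank used in the example are illustrative assumptions, not recommendations.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Parameters updated when the whole weight matrix is trained."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two LoRA factors: (d_out x rank) and (rank x d_in)."""
    return rank * (d_in + d_out)


def trainable_fraction(d_in: int, d_out: int, rank: int) -> float:
    """Share of the matrix's parameter count that LoRA actually trains."""
    return lora_trainable_params(d_in, d_out, rank) / full_params(d_in, d_out)


if __name__ == "__main__":
    d, r = 4096, 8  # illustrative hidden size and rank
    print(full_params(d, d))               # 16777216
    print(lora_trainable_params(d, d, r))  # 65536
    print(f"{trainable_fraction(d, d, r):.4%}")
```

The rank is the main knob: a higher rank gives the update more capacity at the cost of more trainable parameters and optimizer state.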

Evaluation, Deployment, and Monitoring in Real-World Conditions

After training, the model is evaluated using metrics such as accuracy, recall, F1 score, and hallucination rate, complemented by manual review from domain experts. The evaluation also considers compliance with security policies and regulatory requirements, as corporate data frequently contains sensitive information.
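
For tasks with a clear right or wrong label, such as routing a support ticket, these metrics can be computed directly. The sketch below assumes binary labels (1 for positive, 0 for negative) and assumes that hallucinations have already been flagged by reviewers; how those flags are produced is outside its scope.

```python
def precision_recall_f1(y_true: list[int], y_pred: list[int]) -> tuple[float, float, float]:
    """Compute precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


def hallucination_rate(flags: list[bool]) -> float:
    """Share of responses that reviewers flagged as hallucinated."""
    return sum(flags) / len(flags) if flags else 0.0


if __name__ == "__main__":
    print(precision_recall_f1([1, 1, 0, 0], [1, 0, 1, 0]))
    print(hallucination_rate([True, False, False, False]))
```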

Deploying the model in a production environment requires careful planning of infrastructure and integration, including APIs for interaction with internal systems, access control, request logging, data usage restrictions, and disaster recovery and backup plans. Phased launch strategies, such as canary deployment or A/B testing, minimize risk and let teams evaluate the model's impact on business processes in real time.
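
A phased launch needs a stable way to decide which requests reach the new model. One common approach, sketched here as an assumption rather than a prescribed method, hashes a user identifier into one of 100 buckets so that each user consistently sees the same model version. The function name and the percentage parameter are hypothetical.

```python
import hashlib


def route_to_canary(user_id: str, canary_percent: int) -> bool:
    """Return True if this user should be served by the canary model.

    The user ID is hashed into a bucket from 0 to 99; users whose bucket
    falls below canary_percent go to the canary. The assignment is
    deterministic, so a user stays on the same side between requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < canary_percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    share = sum(route_to_canary(u, 10) for u in users) / len(users)
    print(f"share routed to canary: {share:.1%}")
```

Raising the percentage in small steps while watching quality and error metrics lets the team roll back before most users are affected.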

Monitoring is an ongoing process that includes tracking model performance, response latency, and error rates, as well as analyzing user feedback. Based on this data, the model is regularly updated and retrained, and the instructions and data are adjusted accordingly.
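
A minimal health check over a window of recent requests might look like the following. The thresholds (a 2000 ms 95th-percentile latency and a 2% error rate) are placeholder assumptions to be replaced with your own service-level targets, and the percentile uses the simple nearest-rank definition.

```python
import math


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_health(latencies_ms: list[float], errors: int, total: int,
                 max_p95_ms: float = 2000.0,
                 max_error_rate: float = 0.02) -> list[str]:
    """Return the names of the thresholds that the current window violates."""
    alerts = []
    if percentile(latencies_ms, 95) > max_p95_ms:
        alerts.append("latency")
    if total and errors / total > max_error_rate:
        alerts.append("error_rate")
    return alerts


if __name__ == "__main__":
    window = [120.0, 340.0, 290.0, 2500.0]
    print(check_health(window, errors=1, total=200))
```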

Security, Governance, and Compliance by Design

Security, governance, and compliance are essential for the development and deployment of large enterprise-level language models. Security encompasses the protection of data used for training and inference, including encryption, anonymization, and access control. This protects confidential company information, customer data, and internal documents from unauthorized access or leaks.

Model governance encompasses version control, change logging, performance monitoring, and supporting MLOps processes to ensure stable operation. It is essential to implement audit procedures, internal policies, and development standards to track changes and respond promptly to any incidents or deviations from expected model behavior.
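
Change logging is easier to audit when entries cannot be silently edited. The sketch below shows one possible technique, a hash-chained log in which each entry stores the hash of the previous one; it is an illustrative assumption, not a required design. The field names are hypothetical, and a real log would also record timestamps and be kept in durable storage.

```python
import hashlib
import json


def _entry_hash(payload: dict) -> str:
    """Hash an entry's content in a stable key order."""
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_audit_event(log: list[dict], actor: str, action: str,
                       model_version: str) -> dict:
    """Append an entry that is linked to the previous entry by its hash."""
    payload = {
        "actor": actor,
        "action": action,
        "model_version": model_version,
        "prev_hash": log[-1]["hash"] if log else "",
    }
    entry = {**payload, "hash": _entry_hash(payload)}
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Check that no entry was altered, removed, or reordered."""
    prev_hash = ""
    for entry in log:
        payload = {k: v for k, v in entry.items() if k != "hash"}
        if payload["prev_hash"] != prev_hash:
            return False
        if _entry_hash(payload) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log: list[dict] = []
    append_audit_event(log, "ml-engineer", "deploy", "v1.2.0")
    append_audit_event(log, "ml-engineer", "rollback", "v1.1.0")
    print(verify_chain(log))
```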

Compliance ensures adherence to regulatory and legal requirements, such as the GDPR and CCPA, as well as other industry standards. This includes data management, control of model usage, transparency of decisions, and documentation of training and testing processes. The combination of security, governance, and compliance creates a reliable infrastructure for enterprise LLM applications, allowing businesses to integrate intelligent systems without the risk of violating regulations or compromising user trust.

FAQ

What are the main steps in training a large language model for enterprise use?

The main steps are defining business goals, preparing and cleaning data, designing the training dataset, selecting a base model and training method, iterative training and refinement, quality and security assessment, deployment, and ongoing monitoring.

What compute planning approaches work for teams with limited GPU resources?

For teams with limited GPU resources, effective approaches include parameter-efficient fine-tuning (such as LoRA), mixed-precision training, distributing compute across multiple GPUs, and offloading part of the workload to the CPU.

How should enterprises ingest and preprocess different types of documents?

Enterprises should collect documents of different formats centrally, standardize their structure, remove redundant data, and anonymize them before further processing or model training.

What are the most important hyperparameters and PEFT settings?

The learning rate, batch size, and choice of PEFT method (LoRA, adapters, or prompt tuning) have the greatest impact on results, as they determine training speed and the stability of the gradients.

What benchmarks and monitoring should be used in evaluation and production?

Evaluation should use benchmarks that measure accuracy, F1 score, error rate, and hallucination rate. In production, teams should also track response latency, user feedback, and compliance with security policies.

How can security, governance, and compliance be built into the pipeline?

Security, governance, and compliance can be integrated into the pipeline by incorporating access control, logging, auditing, and compliance checks at every stage of data preparation, training, deployment, and model monitoring.