Creating Feedback Loops: Using Annotation Insights to Improve Models Iteratively

Feedback helps teams work in a coordinated manner, minimizing misunderstandings and ensuring that each iteration leads to improved results. A key benefit of such feedback is the rapid detection and correction of errors, allowing models to become more accurate with each iteration.

Effective feedback raises the quality of project work and of model training alike. Integrating annotation results gives a clear picture of what to fix next, making model improvement more structured and predictable. Platforms built around iterative feedback show that teams can work efficiently without sacrificing quality.

Key Takeaways

- Feedback loops are essential for iterative model improvement and accuracy.

- Systematic feedback reduces miscommunication and guides teams effectively.

- Consistent feedback leads to refined AI models by quickly addressing errors.

- Feedback loops contribute to efficient project management and reliable model training performance.

- Integration of annotation insights provides a roadmap for continuous machine learning improvement.

Introduction to Annotation Feedback Loops

An annotation feedback loop involves regularly checking already annotated data, adjusting it based on new insights, and rerunning the model for validation. It's a cyclical process in which each step not only improves the accuracy of the data but also helps you assess whether the model is learning correctly and whether its performance has changed.

When creating a basic model, you can initially annotate the data manually, but as the model learns, it becomes obvious that certain labels are unclear or incomplete. This is where feedback comes in. Iterating with revisions and adjustments to the data allows you to create a more accurate model that better understands the context and makes fewer mistakes.

This process helps to avoid systematic errors that could arise from inattention or incorrect annotations. When feedback is set up correctly, models become much more reliable because they are continually adjusted based on new data.
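One common way to decide which annotations to recheck is to look for items where a trained model confidently disagrees with the existing label. The following is a minimal sketch of that idea, not a prescribed workflow: the `Example` record, the `flag_for_review` helper, and the 0.9 threshold are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Example:
    item_id: str
    label: str         # current human annotation
    predicted: str     # model prediction for the same item
    confidence: float  # model confidence in its prediction, 0..1


def flag_for_review(examples, min_confidence=0.9):
    """Return ids whose label disagrees with a confident model prediction."""
    return [
        ex.item_id
        for ex in examples
        if ex.predicted != ex.label and ex.confidence >= min_confidence
    ]


# Hypothetical batch used only to illustrate the selection rule.
batch = [
    Example("img-001", "cat", "cat", 0.98),  # agreement, nothing to do
    Example("img-002", "dog", "cat", 0.95),  # confident disagreement
    Example("img-003", "dog", "cat", 0.55),  # disagreement, low confidence
]
review_queue = flag_for_review(batch)
print(review_queue)
```

A flagged item is not necessarily mislabeled. The model may be the one that is wrong, so the queue is a prompt for human review rather than an automatic correction.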

Why Feedback Loops Matter for AI Model Improvement

Clear metrics and timelines are essential to keep teams on track and reduce the risk of misunderstandings. Keymakr applies a clear project development plan, providing functionality for easy annotation and feedback collection. Here's how it works:

- Real-time feedback: lets you correct errors quickly, improving data quality.

- Teamwork tools: improve interaction between process participants, ensuring consistency and accuracy.

- Automation: speeds up the annotation process and reduces the likelihood of errors.

The Role of Annotation in Enhancing Model Performance

High-quality annotations are the foundation of successful machine learning models. They ensure that the data the models are trained on is accurate, consistent, and free of biases, which is critical to achieving accurate predictions.

Errors in the annotation process can seriously affect model accuracy and increase bias. For example, incorrectly labeled data can mislead the model and degrade its performance. Finding a balance between manual verification and automated annotation tools is necessary to achieve high standards. Automation speeds up the process, while human review safeguards accuracy.

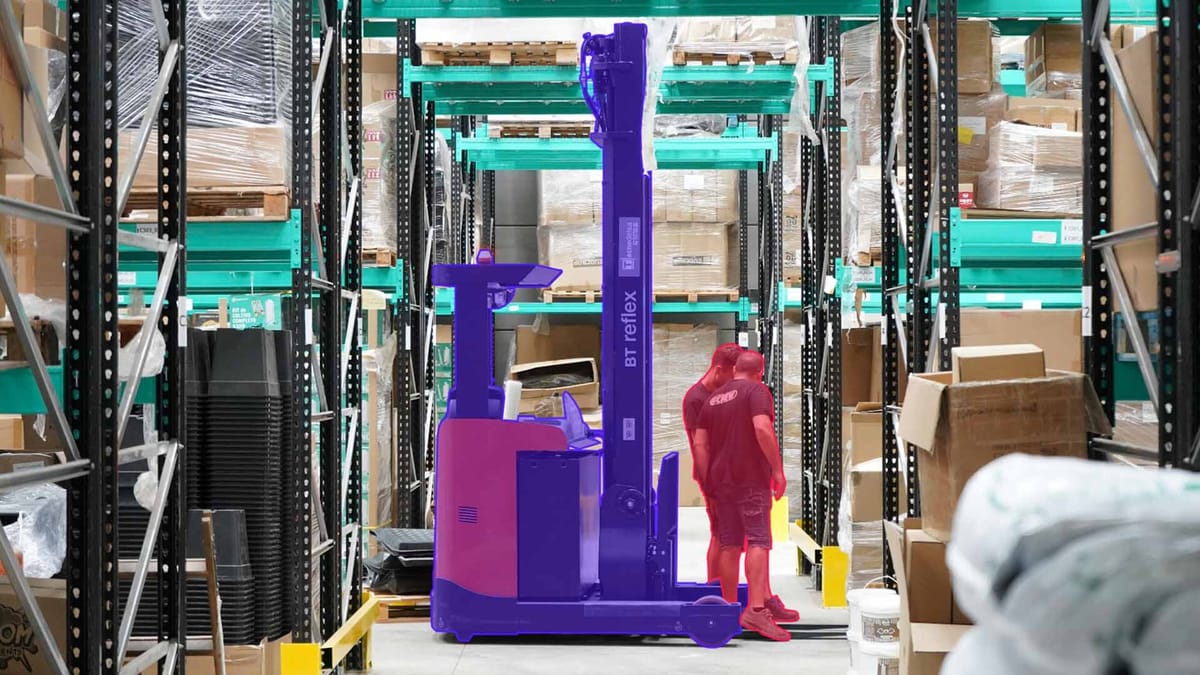

Well-established workflows and constant feedback can improve model results. For example, accurately labeling objects in image annotations allows models to recognize patterns better and increases overall performance.

Step-by-Step Process

- Project planning: First, set clear goals and deadlines for your annotation project. This helps everyone involved understand their responsibilities and timelines, preventing misunderstandings and delays.

- Data preparation: Before you start annotating, organize your dataset. This includes cleaning up the information and removing redundant or incorrect data that may interfere with labeling accuracy.

- Annotation: Use specialized tools to simplify the annotation process. They can automate repetitive tasks and provide opportunities for real-time collaboration and quality checks.

- Quality review: Create a robust review process to ensure the accuracy of annotated data. This can include both automatic checks and manual checks by experienced annotators.

- Iteration and improvement: Use feedback from the validation phase to improve the annotation workflow. This iterative approach helps identify and correct errors early, improving the overall quality of work.
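The quality review step can be partly automated by comparing a batch against a small set of expert-verified "gold" labels. The sketch below assumes annotations are stored as plain dictionaries from item id to label. The `review_batch` function and its 0.95 pass threshold are hypothetical choices for illustration.

```python
def review_batch(annotations, gold, pass_threshold=0.95):
    """Spot-check a batch against gold labels.

    Only items present in both dictionaries are checked.
    """
    checked = [item_id for item_id in gold if item_id in annotations]
    if not checked:
        raise ValueError("no overlap between the batch and the gold set")
    mismatches = [i for i in checked if annotations[i] != gold[i]]
    accuracy = 1 - len(mismatches) / len(checked)
    return {
        "accuracy": accuracy,
        "passed": accuracy >= pass_threshold,
        "to_fix": sorted(mismatches),  # feeds the iteration step
    }


# Hypothetical data: item "b" was labeled "bus" but the gold label is "car".
batch_labels = {"a": "car", "b": "bus", "c": "car", "d": "bike"}
gold_labels = {"a": "car", "b": "car", "c": "car", "z": "bus"}
report = review_batch(batch_labels, gold_labels)
print(report["passed"], report["to_fix"])
```

The `to_fix` list is what closes the loop: it goes back to annotators, and recurring mismatches are a signal that the guidelines themselves need clarifying.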

Implementing Effective Annotation Feedback Loops

When a model is created, it needs to recognize objects in images or interpret text correctly. To be accurate and reliable, this model needs high-quality training data. This is where feedback comes into play: it allows teams to correct mistakes early on, which can significantly improve the quality of the result.

Each annotation cycle analyzes potential errors in the model and the data. This allows us to identify weaknesses quickly and correct them at subsequent stages.

The key aspect here is continuity. By constantly receiving feedback, teams can quickly adjust annotations and data so that the model gradually learns to recognize objects more accurately.

This continuous process allows us to pinpoint exactly where and why the model makes the most mistakes. For example, if the model often makes mistakes when recognizing objects in difficult lighting conditions, annotators can specifically mark such images to help the model better navigate such situations.
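Finding where a model fails most often usually comes down to grouping evaluation results by some condition and comparing error rates. Here is a rough sketch, assuming each evaluation record carries a slice name such as a lighting condition. The record layout and the `error_rate_by_slice` helper are assumptions, not part of any particular tool.

```python
from collections import defaultdict


def error_rate_by_slice(records):
    """records: iterable of (slice_name, true_label, predicted_label)."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for slice_name, label, predicted in records:
        totals[slice_name] += 1
        if label != predicted:
            errors[slice_name] += 1
    return {name: errors[name] / totals[name] for name in totals}


# Hypothetical evaluation results tagged by lighting condition.
results = [
    ("daylight", "car", "car"),
    ("daylight", "car", "car"),
    ("daylight", "bus", "car"),
    ("low_light", "car", "bus"),
    ("low_light", "bus", "bus"),
]
rates = error_rate_by_slice(results)
worst_slice = max(rates, key=rates.get)
print(worst_slice, rates[worst_slice])
```

The slice with the highest error rate is a natural candidate for extra annotation effort, for example collecting and labeling more images taken under that condition.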

Best Practices for Continuous Improvement

Automated tools allow for real-time error tracking, while manual checks add the necessary level of quality control, especially for complex tasks.

Collaboration tools and structured review systems allow teams to respond quickly to emerging issues. Regular feedback helps maintain high standards, fostering a culture of continuous improvement. Features and benefits:

- Automatic feedback: enables real-time detection and correction of errors.

- Manual review: ensures high-quality annotations through expert supervision.

- Collaborative tools: facilitate teamwork and ensure consistency of annotations.

Integrating Annotation into MLOps Pipelines

MLOps pipelines are not just a technical tool but a true architecture for managing the development of machine learning models. They are designed to automate, optimize, and simplify the entire process, from data preparation to model training and deployment. However, as with any complex process, the main difficulties are often hidden in the data processing stage. This is where the integration of annotations into pipelines comes in.

With annotation integrated into the pipeline, all stages of data processing, from data collection to pre-processing, have a clear structure, and data quality stays high throughout the model's life cycle. For example, when training a model, you can track which annotations helped improve accuracy and which need to be improved.

But it's not just about data quality. Integrating annotations into your pipelines also helps you solve scaling issues. Automating the annotation process makes it possible to handle vast amounts of data that are constantly coming in.
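Tracking which annotations contributed to a given result requires some way to tie each training run to the exact label set it used. One lightweight option, sketched here under the assumption that labels fit in a JSON-serializable dictionary, is to log a content hash of the annotations next to each metric. The `annotation_fingerprint` and `record_run` helpers are hypothetical, and the accuracy figures are placeholders rather than measured results.

```python
import hashlib
import json


def annotation_fingerprint(annotations):
    """Short, order-independent hash identifying one version of the labels."""
    canonical = json.dumps(annotations, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


run_log = []


def record_run(annotations, accuracy):
    """Store the label version alongside the metric it produced."""
    entry = {"labels": annotation_fingerprint(annotations), "accuracy": accuracy}
    run_log.append(entry)
    return entry


labels_v1 = {"img-001": "car", "img-002": "bus"}
labels_v2 = {"img-001": "car", "img-002": "truck"}  # img-002 corrected in review

# Placeholder accuracies, for illustration only.
record_run(labels_v1, accuracy=0.81)
record_run(labels_v2, accuracy=0.84)
print(run_log)
```

Dedicated data versioning tools cover the same need at scale. The point of the sketch is only that every metric should be traceable to a specific version of the labels.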

Automation and API Integration

API integration allows for real-time feedback and quick detection and correction of errors. This is convenient and ensures the stability and speed of the process, as each data update is automatically integrated into the model.

Automated endpoints make it easier to deliver annotated data continuously, so models can be updated without unnecessary delays. Used well, API integration lets you manage data flow effectively and increase operational efficiency while keeping strict quality control.

For everything to run smoothly, the annotation workflow must be reliable and fit into existing MLOps systems.

Utilizing Advanced Tools and Techniques for Annotation

Modern tools have changed how we work with data, making the process faster and more accurate. Thanks to the integration of machine learning technologies, these tools can automatically detect patterns, prompt users where labeling needs to be clarified, and even correct some errors.

But it's not just about speed and automation. These tools can also improve accuracy. For example, when processing large amounts of data, where human error is more likely, they help catch mistakes that manual annotation alone would miss.

What's more, modern annotation tools have an intuitive interface and support a variety of formats, making them accessible even to those without deep technical knowledge.

Leveraging Machine Learning for Enhanced Labeling

Today, machine learning-based tools significantly reduce the human workload by automatically creating bounding boxes or labels, allowing specialists to focus on more complex cases.

Automatic annotation reduces routine work and minimizes errors, speeding up the process. Thanks to collaborative dashboards and visual indicators, the team can work in a coordinated manner and ensure high consistency of results. In practice, machine learning can significantly increase both the accuracy and the speed of annotation.
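A typical way to split work between the model and specialists is to accept confident pre-labels automatically and send the rest to human review. The following sketch illustrates that routing. The tuple layout, the `route_prelabels` name, and the 0.9 cutoff are assumptions chosen for the example.

```python
def route_prelabels(predictions, auto_accept=0.9):
    """Split model pre-labels into auto-accepted and needs-human-review.

    predictions: iterable of (item_id, label, confidence).
    """
    accepted, needs_review = [], []
    for item_id, label, confidence in predictions:
        target = accepted if confidence >= auto_accept else needs_review
        target.append((item_id, label))
    return accepted, needs_review


# Hypothetical model output for three images.
prelabels = [
    ("img-101", "pedestrian", 0.97),
    ("img-102", "cyclist", 0.62),
    ("img-103", "pedestrian", 0.91),
]
accepted, needs_review = route_prelabels(prelabels)
print(len(accepted), "accepted;", len(needs_review), "sent to annotators")
```

The cutoff is a trade-off: a higher value sends more items to people and costs more time, while a lower value lets more model errors into the dataset. Periodically sampling the auto-accepted items for manual review helps verify that the threshold is still appropriate.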

Overcoming Challenges in the Annotation Process

While extremely important, the annotation process faces a number of challenges. Some of the biggest are data bias, inconsistent results, and scalability issues. To ensure high-quality training data and accurate AI models, teams need to work carefully to overcome these barriers.

Data bias can significantly distort the results, and incorrect or inconsistent annotation makes the model less reliable. Scalability, in turn, becomes a problem when dealing with large amounts of information, which can require significant resources and time. Therefore, it is essential to understand these challenges and actively seek solutions to make the results accurate and scalable for broader applications.

Managing Data Bias and Inconsistency

Data bias and inconsistency can significantly affect the performance of models. For example, facial recognition systems may produce false results due to insufficiently diverse training data, leading to racial or gender bias. To mitigate these risks, it is essential to develop clear guidelines, train annotators to apply them, and make sure the data being labeled is sufficiently diverse. Regular checks and review checkpoints help maintain consistency by ensuring that all annotators interpret the guidelines in the same way.
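Consistency between annotators can be measured rather than assumed. A standard statistic for two annotators labeling the same items is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The implementation below is a minimal sketch, and the sample labels are made up for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # Chance agreement: probability both pick the same class independently.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical class throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(kappa)  # 0.5
```

A value of 1 means perfect agreement and a value near 0 means agreement no better than chance. A low score on a shared sample is a cue to revisit the guidelines or retrain annotators before labeling continues.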

Summary

In summary, strategic use of feedback and high-quality annotation practices is key to success in developing machine learning models. By integrating continuous feedback, teams can identify errors early on, providing accurate and reliable data for training. Clearly defined goals, structured workflows, and advanced tools are essential to achieving high results.

Managing data bias and scalability challenges is crucial to maintaining model accuracy. Constant feedback and iterative improvement remain key success factors in AI projects, enabling teams to refine their approaches and improve their results continuously. Implementing these practices into existing MLOps processes allows organizations to simplify development and deliver high-quality annotation.

As feedback systems show, consistent, high-quality annotation is the foundation for achieving breakthrough results in AI. The future lies in seamlessly integrating this feedback into ongoing data annotation and machine learning efforts, driving innovation and increased industry efficiency.

FAQ

What is the role of feedback loops in improving AI models?

Feedback loops are critical in enhancing AI model performance by allowing continuous refinement. They enable teams to identify gaps, improve annotation quality, and iteratively fine-tune models for better accuracy and reliability.

How does annotation contribute to machine learning workflows?

Annotation is essential for training accurate machine learning models. It provides the labeled data from which models learn patterns, understand context, and make informed decisions.

What are the key differences between automated and manual annotation?

Automated annotation uses algorithms to label data quickly, while manual annotation relies on human input for precision. Automated methods are efficient for large datasets, but manual annotation is often required for complex or nuanced tasks to ensure accuracy.

How can teams maintain consistency in annotation projects?

Consistency in annotation can be achieved through clear guidelines, extensive annotator training, and regular quality reviews. Implementing standardized practices helps ensure uniformity across the dataset.

What steps can be taken to address data bias in annotation?

Addressing data bias involves diversifying the dataset, ensuring balanced representation, and regularly auditing for bias. Clear guidelines and consistent labeling practices also help mitigate bias in annotation projects.

How does active learning improve the annotation process?

Active learning streamlines the annotation process by prioritizing the most valuable data points for model training. This approach reduces the workload while improving model performance by focusing on uncertain or high-impact data.
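Uncertainty sampling is one common active learning strategy: rank unlabeled items by how unsure the model is, and annotate the most uncertain ones first. The sketch below uses prediction entropy as the uncertainty measure. The `select_for_annotation` helper and the example probabilities are illustrative assumptions.

```python
import math


def entropy(probs):
    """Shannon entropy of a predicted class distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(pool, budget):
    """Pick the `budget` items whose predictions are most uncertain.

    pool: iterable of (item_id, class_probabilities).
    """
    ranked = sorted(pool, key=lambda item: entropy(item[1]), reverse=True)
    return [item_id for item_id, _ in ranked[:budget]]


# Hypothetical model outputs for three unlabeled items.
unlabeled = [
    ("a", [0.98, 0.02]),  # model is confident
    ("b", [0.50, 0.50]),  # model is maximally unsure
    ("c", [0.70, 0.30]),
]
to_label = select_for_annotation(unlabeled, budget=2)
print(to_label)  # ['b', 'c']
```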