Beginner's Guide to Semantic Segmentation

Three types of image annotation can be used to train your computer vision model: image classification, object detection, and segmentation. Image classification and object detection are fairly straightforward — the former involves labeling the object featured in an image while the latter takes the same process a step further by introducing multiple objects.

But what about segmentation? Image segmentation is the most difficult—and the most precise — type of image annotation. That’s because it involves delineating the exact boundary of each object featured in an image.

The story doesn’t end there. What exactly are the differences between instance segmentation vs. semantic segmentation? And can deep learning semantic segmentation improve the performance of your next computer vision project? We’ve done the legwork and put together a comprehensive guide on image semantic segmentation and its many uses.

What Is Image Segmentation Used For?

Here are a few popular use cases for image segmentation that you may not have heard of:

- Portrait Mode. Google’s Portrait Mode feature on its line of Pixel phones automatically separates foreground and background through image segmentation.

- Virtual try-on. Have you ever tried on a new outfit or makeup look through the web? Virtual transformations wouldn’t be possible without image segmentation.

- Visual search. Image retrieval algorithms rely on image segmentation to deliver visually similar results and recommendations on sites like Google, Amazon, and Pinterest.

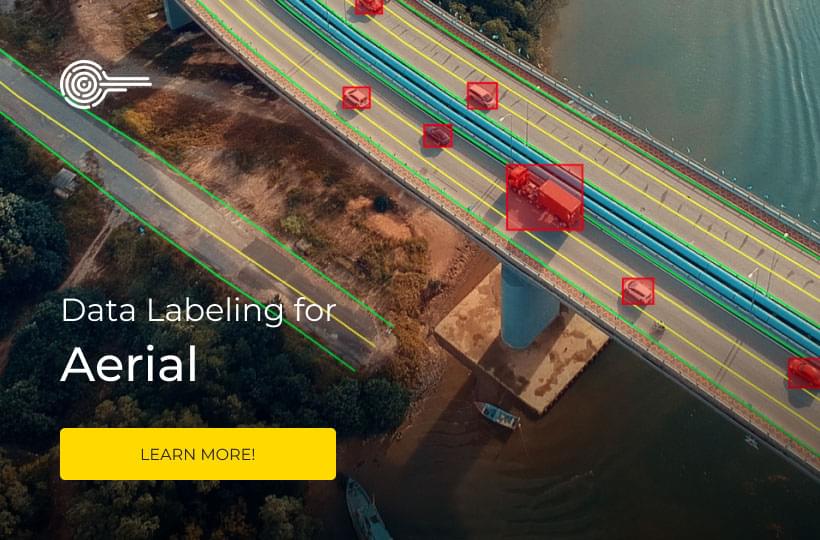

- Self-driving cars. Autonomous vehicles and drones require pixel-perfect levels of accuracy to guarantee safety. That’s where image segmentation comes in.

Sometimes bounding boxes simply aren’t accurate enough. But there’s a difference between instance and semantic segmentation models, and when they’re appropriate. Let’s start with semantic segmentation.

What Is Semantic Segmentation?

Semantic segmentation outlines the boundaries between similar objects and groups them under the same label. Semantic annotation tells you the presence and shape of objects, but not necessarily the size or shape.

Data annotators typically rely on semantic segmentation when they want to group objects. In cases where objects must be counted or tracked across more than one image, instance segmentation — which we’ll talk about later — is the preferred technique.

Let’s walk through an example. A data annotator must annotate an image that features a hand holding onto four balloons. Image classification would indicate the presence of balloons, while object classification would count four balloons and show each balloon's location. However, semantic segmentation would group the hand and the balloons into separate categories, telling us only that balloons the following pixels contain balloons.

Here’s another example. Let’s say you’re annotating an image of a concert. Using semantic segmentation, you might annotate the crowd to segment the seating from the stage. While conveying two categories (“crowd” and “stage”), semantic segmentation doesn’t count the number of people in the crowd or reveal their individual outlines. Similarly, semantic video analysis would track the outline of the entire crowd over time.

What Is Instance Segmentation?

Instance segmentation takes semantic segmentation to the next level by revealing the presence, shape, size, count, and location of the objects featured in an image.

If we refer to our balloon example from earlier, instance segmentation would tell us that there are four balloons of this size and shape, found in their exact locations. In our concert example, instance segmentation may reveal the presence of each individual in the crowd.

Whether you’re using semantic or instance segmentation, you can perform pixel-wise segmentation, which includes every pixel within the outline of an object, or boundary segmentation, which only considers border coordinates.

High-Quality Data Annotation Services for Computer Vision

Get to market faster with Keymakr. We provide pixel-perfect image and video annotations that meet your deadlines and suit your budget. We employ a combination of high-quality tools, verified workflows, and trained annotators to produce, structure, and label high volumes of training and testing data.

Are you interested in consistent, high-quality training data for your next computer vision project?

Contact a team member to book your personalized demo today.