Annotating for OCR: Preparing Datasets for Text Extraction in Images

In the emerging field of computer vision, the ability to extract text from images has opened the door to automation, accessibility, and data-driven insights in countless industries. From digitizing handwritten archives to real-time translation using mobile devices, systems built on optical character recognition (OCR) have become more sophisticated and widespread. Before any model can read, it must first learn what the text looks like in all its various forms.

Annotation is the foundation of OCR, shaping the accuracy, adaptability, and scope of what machines can recognize. It is a technical and human-centered job combining visual judgment with structured data. Preparing datasets becomes more critical and nuanced as OCR expands to new languages, formats, and use cases. The right annotation approach improves productivity and reduces the need for costly corrections and retraining later.

Key Takeaways

- High-quality datasets are crucial for accurate OCR models.

- OCR technology enhances data accessibility for NLP applications.

- Multilingual OCR faces challenges with memorable characters and diacritics.

- Factors like handwriting and complex fonts impact OCR accuracy.

- Clear annotation guidelines improve data quality and reduce errors.

Understanding Optical Character Recognition (OCR)

OCR (optical character recognition) is a technology that allows computers to detect and interpret text in images. It works by analyzing the visual patterns of letters, numbers, and symbols in an image (such as a scanned document, photograph, or screenshot) and converting them into machine-readable text. OCR can recognize printed or handwritten characters, depending on how the system is trained and the quality of the input. It is the main engine for document digitization, automatic data entry, license plate reading, and real-time text translation.

How OCR Works

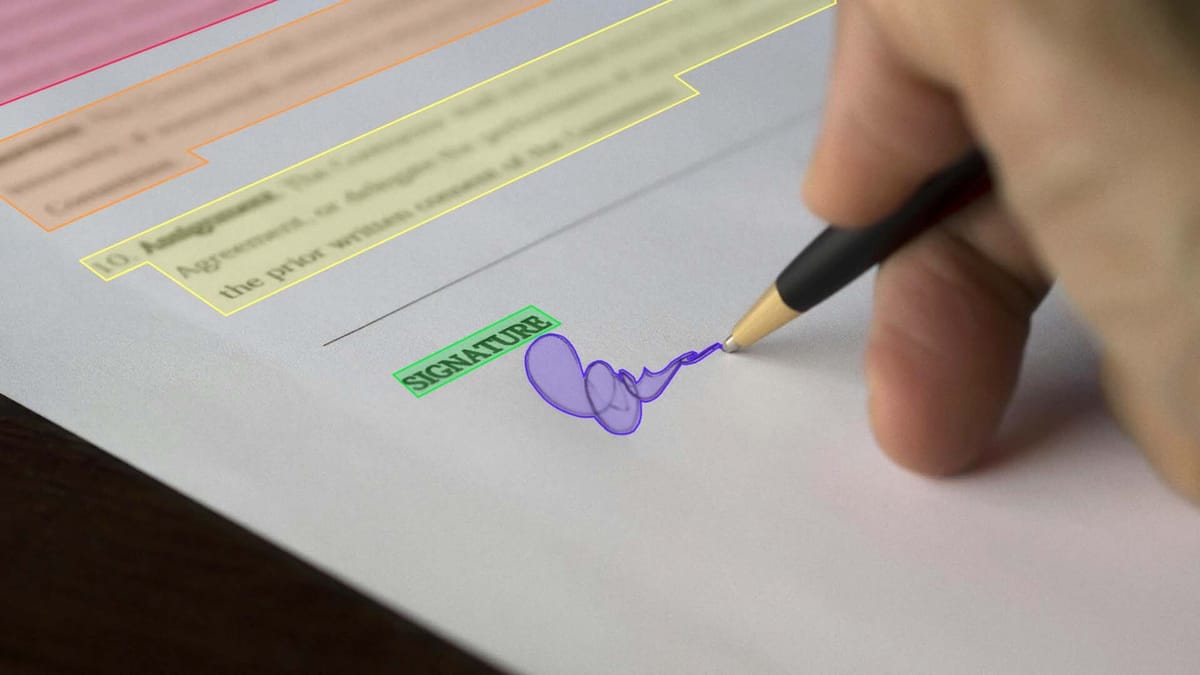

OCR annotation typically starts with text images, such as scanned documents, road signs, receipts, or screenshots. Annotators use tools to mark areas where text appears by drawing rectangles (or sometimes more detailed shapes) around each text segment. They enter the correct text transcription for each marked area, allowing the model to learn what the visual symbols represent. These labeled images and their transcriptions are then compiled into a dataset used to train or fine-tune the optical character recognition model to learn to find and read text in similar photos.

Key Steps in the OCR Process

- Image acquisition. The process begins with capturing an image of the text using a scanner, camera, or mobile device. This image becomes the input for OCR.

- Pre-processing. The image is enhanced to improve the clarity of the text. This may include noise reduction, binarization (converting to black and white), contrast adjustment, and correction of skew or blur.

- Text detection. The system determines which parts of the image contain text. Using algorithms or machine learning models, it finds lines, words, or individual characters.

- Character recognition. Detected text areas are analyzed to identify specific letters, numbers, or symbols. A trained model classifies these shapes into readable characters.

- Post-processing. The output of raw text is enhanced to improve accuracy. This may involve correcting errors, formatting text, and applying language rules or dictionaries to ensure consistency.

Applications of OCR

OCR has a wide range of practical applications across various industries, helping to automate tasks and unlock data trapped in physical or visual formats. One of the most common applications is document digitization, where printed materials such as books, invoices, forms, or records are converted into searchable and editable digital text. In banking and finance, OCR enables automated check processing, data extraction from receipts, and identity verification using scanned documents. Healthcare systems use optical character recognition (OCR) to digitize patient records, prescriptions, and insurance forms, improving accessibility and reducing manual data entry. In transportation and logistics, OCR reads license plates, tracks parcels by scanning labels, and processes customs documents quickly and accurately.

Other well-known applications include real-time translation using mobile apps that read foreign text through a smartphone camera, accessibility tools for visually impaired users that convert printed text to speech, and legal or government archiving, where historical documents are stored and made searchable. OCR supports automated inventory management in retail by reading product labels and barcodes.

Importance of High-Quality Datasets

OCR models rely on annotated data to understand how different characters and words look in different fonts, sizes, languages, and layouts. Suppose the training data is inconsistent, poorly labeled, or lacks variety. In that case, the model will have difficulty working with real-world examples, especially those involving handwriting, low-resolution scans, or complex background images. A well-matched dataset ensures that the model is exposed to a wide range of visual scenarios during training, helping it to generalize better and make fewer errors in production.

They also support better handling edge cases such as distorted text, overlapping characters, or unusual document structures. This means fewer manual corrections, smoother automation, and more confidence in the system's results. The data set's quality affects recognition accuracy and determines the overall performance, reliability, and adaptability of the OCR system.

Measuring OCR Accuracy

One of the most common metrics is the character error rate (CER), which calculates the number of insertions, deletions, and substitutions required to convert an OCR result to a correct transcription and then divides that total by the number of characters in the underlying truth. A lower CER means higher accuracy. Word Error Rate (WER) is another important metric that follows the same logic but at the word level, which is particularly useful when assessing readability or use in natural language contexts.

Accuracy can also be evaluated using precision metrics, which measure the percentage of lines or entire texts that the system gets perfectly correct without any errors. Even minor deviations matter in some applications, especially in form processing or legal documentation, so these stricter evaluations become more relevant. Comparative analysis of OCR systems in different data sets, such as scanned books and handwritten notes, can reveal strengths and weaknesses in specific scenarios.

Common Types of OCR Data

OCR systems are trained and evaluated on various data types, each reflecting real-world scenarios and problems. One of the most common is printed text documents, such as books, articles, and reports, which usually have clear layouts and consistent fonts, making them ideal for basic OCR training. Handwritten text is another major category that is significantly more complex due to differences in individual writing styles, letter shapes, and spacing.

Another commonly used type is scene text, which includes natural images where text appears on signs, posters, packaging, or displays. This data is particularly challenging due to background clutter, lighting changes, angles, and distortions. OCR also often deals with forms and structured documents, such as invoices, receipts, passports, and ID cards, combining printed text with tables, logos, and handwriting.

Different Languages and Fonts

OCR systems must recognize various languages and fonts, each presenting unique challenges. Languages differ in vocabulary and writing, character shape, writing direction, and text structure. For example, Latin-based languages such as English and Spanish are relatively straightforward because of their common numeric representation. At the same time, Arabic, which flows from right to left and connects characters, or Chinese, with thousands of different characters, requires special modeling.

A model that works well with standard printed fonts may have trouble with handwritten text, vintage fonts, or creative fonts found in advertising or logos. Some OCR systems are trained to normalize visual variations in fonts, but others benefit from being exposed to as many font styles as possible during training.

Essential Capabilities in OCR Annotation Tools

One important feature is support for a flexible bounding box, allowing annotators to mark text at the word, line, or character level and, in some cases, draw polygons for non-standard or curved layouts. The tool should also support text transcription directly associated with each annotation, providing a clear link between the visual area and its textual content.

A powerful OCR annotation tool will also include quality control features such as validation rules, duplicate checks, and version history to maintain consistency across large teams or extended annotation efforts. Batch processing and keyboard shortcuts are essential for speed and scalability, allowing users to work quickly without sacrificing accuracy. Integration with dataset formats used within machine learning, such as COCO, PASCAL VOC, or custom JSON/XML schemas, is another key capability that eases the transition from annotation to learning. Some tools also offer semi-automated features, such as AI-assisted window generation or predictive text suggestions, which can reduce manual effort and speed up annotation creation without compromising quality.

Summary

The quality and effectiveness of OCR systems are primarily determined by the datasets on which they are trained. These datasets need to be carefully prepared and annotated, as the more diverse and accurate the data, the better the model can learn to work with different fonts, languages, and real-world conditions.

OCR applications span many industries, making it a versatile tool for document scanning, data extraction, and even real-time language translation tasks. To build robust OCR systems, it is essential to focus on features such as adapting to different text formats, handling a variety of languages, and ensuring that the system can handle text from complex images or layouts.

FAQ

What is Optical Character Recognition (OCR)?

Optical Character Recognition (OCR) is a technology that converts text in images or scanned documents into machine-readable format.

Why is high-quality data important for OCR?

High-quality data is crucial for OCR because it directly impacts the accuracy and performance of OCR models. Well-annotated, diverse datasets help OCR systems handle various text types, fonts, and languages, improving their ability to recognize and extract text accurately.

What are the key steps in the OCR process?

The key steps in the OCR process include pre-processing, character segmentation, feature extraction, and classification. These steps work together to transform image-based text into editable, searchable data.

How does OCR handle different types of text data?

OCR systems are designed to handle various types of text data, including printed and handwritten text. Printed text is generally easier to recognize, while handwritten text requires more sophisticated algorithms and training data to achieve accurate results.

What challenges does multilingual OCR face?

Multilingual OCR faces challenges such as recognizing different writing systems (e.g., Latin, Cyrillic, Arabic, Asian scripts), handling diverse fonts and styles, and understanding language-specific nuances.

What is data annotation in the context of OCR?

Data annotation for OCR involves labeling and categorizing text elements within images to create structured training data.