Video Annotation For Machine Learning: A Practical Guide

Video annotation supports many machine learning tasks, such as object detection, face detection, emotion recognition, and speech analysis. Machine learning is a field in which computers learn patterns from data instead of following rules that programmers write explicitly.

With careful preparation, you can make video annotation go smoothly. This practical guide will help you get started with labeling videos.

How video annotation for machine learning works

Video annotation is a way to label parts of a video so that machine learning algorithms can better understand it. The annotations are stored alongside the video as text or numbers, such as class names and coordinates, and can be read by machine learning algorithms or other software applications.
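To make "text or numbers" concrete, here is a minimal sketch of one way a bounding-box annotation could be stored. The `BoxAnnotation` class and its field names are assumptions chosen for illustration, not a standard format; real tools each define their own schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class BoxAnnotation:
    """One labeled box on one frame (hypothetical schema)."""
    frame_index: int  # which frame of the video the label applies to
    label: str        # text label, e.g. "car"
    x: float          # left edge of the box, in pixels
    y: float          # top edge of the box, in pixels
    width: float      # box width, in pixels
    height: float     # box height, in pixels


annotations = [
    BoxAnnotation(frame_index=0, label="car", x=120.0, y=80.0, width=64.0, height=48.0),
    BoxAnnotation(frame_index=1, label="car", x=124.0, y=80.0, width=64.0, height=48.0),
]

# Serialize to JSON so other software can read the labels.
serialized = json.dumps([asdict(a) for a in annotations], indent=2)
print(serialized)
```

Keeping the frame index next to each label is what lets a training script match an annotation to the right image.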

Video annotation has many applications. A common one is drawing bounding boxes around objects or people in the video, which produces training data for object detection. Related applications include facial recognition and emotion analysis.


Collect or create video training data

You can use existing video content or create your own for training. If you want to collect data, it's easiest to start with an existing collection of annotated videos, and many online repositories offer them. You can also gather large amounts of unlabeled video from the web through an API.

The advantage of existing video data is that finding it is often easier than creating your own. However, you may be unable to find enough data that exactly matches your needs, in which case you can create synthetic data.

Synthetic data is generated by machine learning algorithms trained on real video data. For example, a generative model trained on a large set of labeled images or clips can produce new video clips that show the same kinds of objects.

Once you have the video data, you can split it into training and testing sets. The training set is used to train your model, and the testing set is used to evaluate how well it performs on unseen data.
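A minimal sketch of such a split follows. It assigns whole videos, not individual frames, to each set. That is a design choice assumed here, not something prescribed above: neighboring frames of one video look almost identical, so mixing them across the two sets would make the test score look better than it should. The function name and the `clip_000`-style IDs are hypothetical.

```python
import random


def split_by_video(video_ids, test_fraction=0.2, seed=0):
    """Split unique video IDs into (train_ids, test_ids)."""
    ids = sorted(set(video_ids))
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(ids)
    # Hold out at least one video for testing.
    n_test = max(1, round(len(ids) * test_fraction))
    return ids[n_test:], ids[:n_test]


train_ids, test_ids = split_by_video([f"clip_{i:03d}" for i in range(10)])
print(len(train_ids), "training videos,", len(test_ids), "testing videos")
```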

Prepare your data

Preparing your data for use with a learning model is integral to developing that model. You can prepare your data by cleaning it, normalizing it, and labeling it with appropriate features.

Cleaning your data means removing errors and invalid entries. Normalizing your data means scaling and transforming it into a consistent format that is suitable for annotation. Finally, labeling your data means associating each piece of input with an appropriate label, which could be a number or another value.
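One possible normalization step is bringing clips recorded at different frame rates down to a common rate before annotation. The sketch below only computes which frame indices to keep. The function name and the example rates are assumptions for illustration, and reading the frames themselves would need a video library that is not shown here.

```python
def sample_frame_indices(total_frames, source_fps, target_fps):
    """Return the frame indices to keep when reducing source_fps to target_fps."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    if target_fps >= source_fps:
        return list(range(total_frames))  # nothing to drop
    step = source_fps / target_fps  # e.g. 30 fps -> 10 fps keeps every 3rd frame
    indices = []
    i = 0
    while int(i * step) < total_frames:
        indices.append(int(i * step))
        i += 1
    return indices


kept = sample_frame_indices(total_frames=10, source_fps=30, target_fps=10)
print(kept)  # [0, 3, 6, 9]
```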

Organize your team

Organizing your team is an essential step in an annotation project. Start by clarifying roles and responsibilities. Then create a schedule for meetings and establish channels for sharing information.

Depending on the size of your dataset, you may need to hire multiple annotators. The annotation process can be time-consuming and requires attention to detail, so before starting, consider whether your team has the capacity for it. You should also have an analyst who can interpret results from the machine learning model.

Create a list of labels

Video annotation for machine learning involves creating a list of labels (or tags) and applying them to the frames of each video clip. Each frame is labeled with a set of tags that describe its content or meaning. For example, a person could identify all scenes involving cars by labeling them as "car scenes." Once all the frames are labeled, you can use them as input data for training AI models.
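The relationship between per-frame tags and scenes can be sketched in a few lines. This example assumes the tags are stored as one set per frame, in frame order, and groups consecutive frames that share a tag into (first frame, last frame) ranges. The function name and the sample tags are hypothetical.

```python
def frames_to_scenes(frame_tags, tag):
    """Group consecutive frames carrying `tag` into (start, end) index ranges."""
    scenes = []
    start = None
    for index, tags in enumerate(frame_tags):
        if tag in tags and start is None:
            start = index  # a new scene begins
        elif tag not in tags and start is not None:
            scenes.append((start, index - 1))  # the scene ended on the previous frame
            start = None
    if start is not None:
        scenes.append((start, len(frame_tags) - 1))  # scene runs to the last frame
    return scenes


frame_tags = [{"car"}, {"car"}, set(), {"pedestrian"}, {"car", "pedestrian"}, {"car"}]
car_scenes = frames_to_scenes(frame_tags, "car")
print(car_scenes)  # [(0, 1), (4, 5)]
```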

The following are examples of labels for autonomous vehicle machine-learning projects:

- frequency of use of lane markings or curb markings

- speed limit sign location

- pedestrian crosswalk sign location

- traffic light signal's color, location, and shape

- stop sign and yield sign location

- school zone location

- directionality of traffic flow (one-way or two-way)

The label you use for an object in a video depends on the goal of your project. For example, if you are building a system that classifies whole videos, general labels may be enough. But if you are building a system that detects pedestrians, cars, and other objects in each frame, you will want a list of specific labels.
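Once the list is agreed on, it helps to keep it in one place and check annotations against it. The sketch below is a minimal illustration: the label names are adapted from the examples above, while the snake_case spelling, the numeric IDs, and the `find_unknown_labels` helper are assumptions, not part of any particular tool.

```python
# Agreed label list for the project (illustrative subset).
LABELS = [
    "speed_limit_sign",
    "pedestrian_crosswalk_sign",
    "traffic_light",
    "stop_sign",
    "yield_sign",
    "school_zone",
]

# Models usually work with integer class IDs instead of strings.
LABEL_TO_ID = {name: index for index, name in enumerate(LABELS)}


def find_unknown_labels(frame_labels):
    """Return labels used by annotators that are not in the agreed list."""
    used = {label for labels in frame_labels.values() for label in labels}
    return sorted(used - set(LABEL_TO_ID))


frame_labels = {
    0: ["stop_sign"],
    1: ["stop_sign", "traffic_light"],
    2: ["trafic_light"],  # typo an annotator might make
}
unknown = find_unknown_labels(frame_labels)
print(unknown)  # ['trafic_light']
```

A check like this catches spelling drift early, before inconsistent labels reach the training set.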

Pick your tools

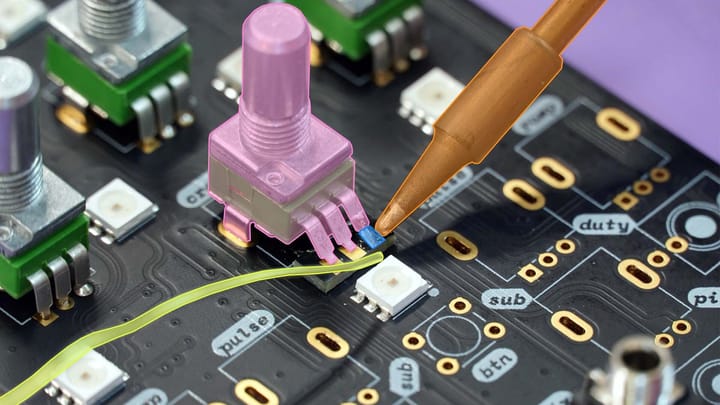

Features are the key to any machine learning model. You need to identify what features you want to include and how to extract them from your video. You can then use these features in a training data set to train your model. The annotation tool you pick determines which features you can capture. Video annotation tools include:

- bounding box

- polygon

- semantic segmentation

- key points

- skeletal

- lane

- instance segmentation

- custom
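These tools differ mainly in how precisely they outline an object. As a small illustration, the sketch below derives the tightest axis-aligned bounding box from a polygon annotation, which shows that a box is a coarser summary of the same shape. The function name and the sample coordinates are hypothetical.

```python
def polygon_to_bounding_box(points):
    """Return (x, y, width, height) of the tightest box around polygon points."""
    if not points:
        raise ValueError("polygon needs at least one point")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))


polygon = [(10, 20), (40, 15), (55, 60), (12, 50)]
box = polygon_to_bounding_box(polygon)
print(box)  # (10, 15, 45, 45)
```

The conversion only works in one direction: a box cannot be turned back into the polygon, so choose the more detailed tool if you may need the outline later.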

Semantic segmentation is one of the most detailed tasks in video annotation. It labels every pixel of a video frame with a specific category, such as "person" or "car."

First, a model such as a convolutional neural network (CNN) learns from training data what each pixel represents. It can then classify the pixels of new frames.
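A semantic segmentation label for one frame can be pictured as a grid with one class ID per pixel. The sketch below uses a tiny hand-written mask and counts the pixels in each class. The class IDs and the 3x4 mask are made up for illustration, and real masks have the same height and width as the frame.

```python
from collections import Counter

# Hypothetical mapping from class ID to category name.
CLASS_NAMES = {0: "background", 1: "person", 2: "car"}

# One class ID per pixel (3 rows x 4 columns).
mask = [
    [0, 0, 1, 1],
    [0, 2, 2, 1],
    [0, 2, 2, 0],
]

# Count how many pixels belong to each category.
pixel_counts = Counter(CLASS_NAMES[class_id] for row in mask for class_id in row)
print(dict(pixel_counts))
```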

The role of ML in video annotation

ML is involved in many aspects of video annotation. For example, it can help you extract key points from a video that are likely to be relevant to your task. In addition, it can generate bounding boxes around objects or people in the video.

The dataset should contain videos that are similar enough to each other for the model to generalize, but varied enough for it to learn how to distinguish between them.

Two types of machine learning models are commonly involved in video annotation. The first is a feature extraction model, which learns what features to extract from the video and how to use them for classification. The second is the classification model itself, which labels each frame with a specific category.

In practice, the feature extraction model turns each frame into a set of features, which are passed to the classification model to produce the frame's label. You can build both models by starting from pre-trained neural networks.
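The two-stage flow can be sketched without any neural network. In this toy stand-in, the "feature extraction" step uses two hand-written features (mean brightness and brightness range) in place of learned ones, and the "classification" step is a nearest-centroid rule. The frames, the "day" and "night" labels, and all function names are assumptions for illustration only.

```python
import math


def extract_features(frame):
    """Stage 1: reduce a grayscale frame (list of pixel rows) to two numbers."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    spread = max(pixels) - min(pixels)
    return (mean, spread)


def fit_centroids(frames, labels):
    """Average the feature vectors of the training frames for each label."""
    grouped = {}
    for frame, label in zip(frames, labels):
        grouped.setdefault(label, []).append(extract_features(frame))
    return {
        label: tuple(sum(values) / len(values) for values in zip(*features))
        for label, features in grouped.items()
    }


def classify(frame, centroids):
    """Stage 2: assign the label whose centroid is closest in feature space."""
    features = extract_features(frame)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


train_frames = [
    [[10, 20], [15, 5]],
    [[30, 25], [20, 35]],
    [[200, 220], [210, 230]],
    [[180, 240], [250, 190]],
]
train_labels = ["night", "night", "day", "day"]

centroids = fit_centroids(train_frames, train_labels)
prediction = classify([[190, 205], [215, 225]], centroids)
print(prediction)  # day
```

A real pipeline would replace `extract_features` with a pre-trained network and the centroid rule with a trained classifier, but the hand-off between the two stages has the same shape.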

Conclusion

Video annotation for machine learning is an essential process in developing visual recognition systems. It captures how humans perceive and interpret the world around us in a form that models can learn from. This data can then be used to build better AI models that respond appropriately to situations and objects in real time without explicit instructions.