Maximizing Performance: AI Model Optimization Techniques

Getting the most out of an AI system depends on optimizing its performance. This section covers key strategies for making models accurate, efficient, and scalable.

AI model performance optimization encompasses a range of factors, including model selection, training data quality, hyperparameter optimization, regularization techniques, hardware infrastructure, and more. By focusing on these aspects, organizations can unlock the true potential of their AI models and drive significant business value.

Keep in mind:

- Choosing the right AI model is crucial for optimal performance.

- Acquiring high-quality training data is essential for accurate AI model outcomes.

- Optimizing model hyperparameters enhances precision and efficiency.

- Regularization techniques help prevent overfitting and improve model generalization.

- Selecting the right hardware infrastructure, such as GPUs and cloud-based platforms, accelerates AI model processing.

Fine-Tune Model Selection

When optimizing AI model performance, choosing the right model is the fundamental step towards achieving desired outcomes. While off-the-shelf models offer convenience, their generic nature may not always meet specific requirements. To ensure optimal performance, thorough evaluation of the model architecture, data sources, and relevance to the problem at hand is necessary.

Custom development of neural network models tailored to specific tasks and data domains can often yield superior results compared to repurposing generic or outdated models. These specialized models can incorporate domain-specific knowledge and nuances, allowing for more accurate predictions and efficient decision-making.

By carefully selecting or developing the appropriate model, organizations can enhance the performance of their AI systems and achieve better outcomes in various applications.

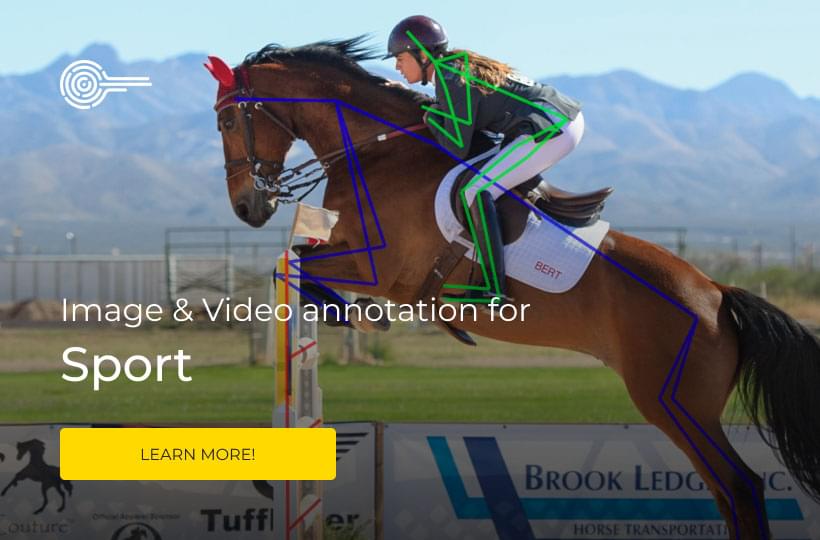

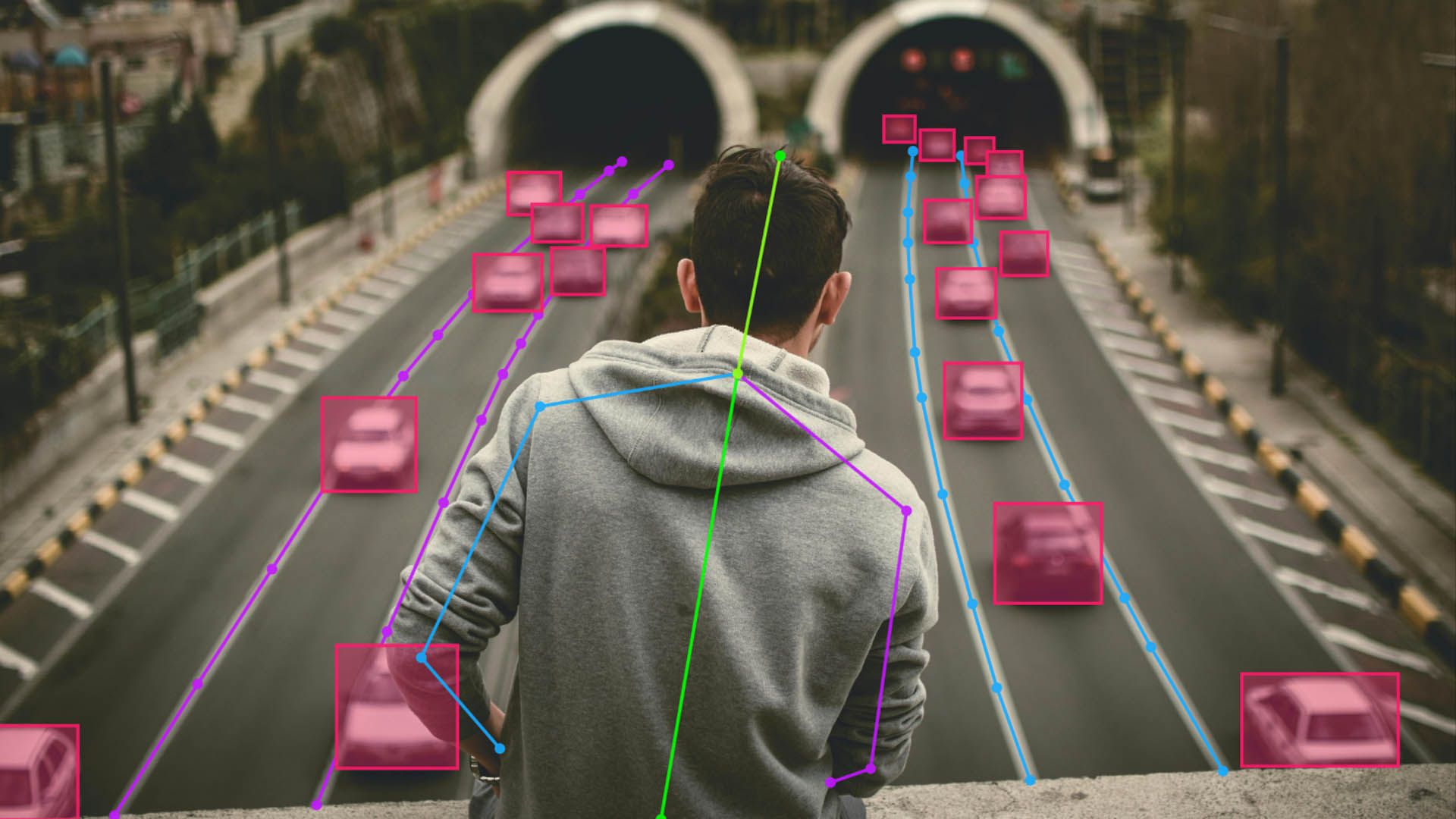

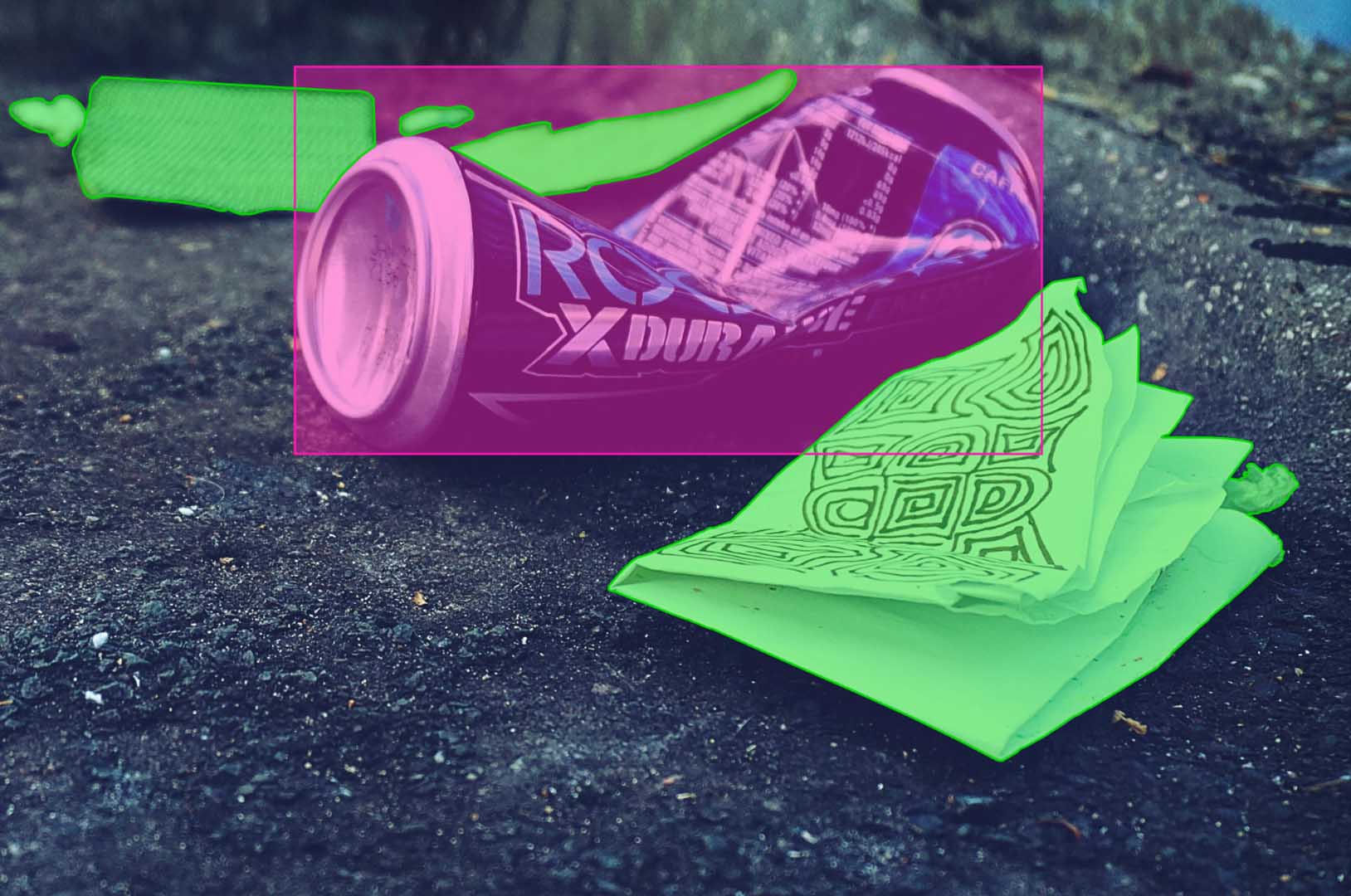

Acquire Quality Training Data

In the world of AI model training, the adage "garbage in, garbage out" holds true. The quality of training data directly influences the performance of the model. To optimize AI model performance, it is imperative to prioritize the acquisition of high-volume, high-quality, and relevant training data.

When acquiring training data, it is crucial to ensure that it is representative of the problem space at hand. This means collecting data from diverse sources that cover a wide range of scenarios and variables. By incorporating a variety of data sources, AI models can be trained to handle real-world situations effectively.

Data integrity plays a vital role in training data quality. It is essential to review the data for errors, inconsistencies, and biases that could skew the model's understanding and performance. Rigorous data hygiene practices, such as cleaning, processing, labeling, and splitting the data into training and evaluation sets, set the foundation for a robust AI model.

Data sources:

- Publicly available datasets relevant to the problem domain.

- Third-party data providers specializing in specific industries or niches.

- Crowdsourcing platforms for additional data collection.

- Internal data repositories, including customer databases and historical records.

Data integrity and hygiene:

- Implement data validation procedures to identify and rectify errors or inconsistencies.

- Adopt rigorous data cleaning techniques to remove duplicate or irrelevant data points.

- Label the data accurately to provide meaningful annotations for model training.

- Ensure proper data splitting to separate training and evaluation datasets for model testing.

By acquiring quality training data from diverse sources, ensuring data integrity and hygiene, and adopting best practices, organizations can equip their AI models with the necessary foundation for optimal performance.
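The hygiene steps above (validation, cleaning, and splitting) can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pipeline: the `(text, label)` record shape, the `clean_and_split` helper, and the sample records are all hypothetical.

```python
import random

def clean_and_split(records, eval_fraction=0.2, seed=0):
    """Validate, de-duplicate, and split (text, label) records.

    The record shape is an assumption made for this illustration.
    """
    seen = set()
    cleaned = []
    for text, label in records:
        # Validation: skip records with missing text or a missing label.
        if not text or label is None:
            continue
        # Cleaning: treat records as duplicates after trimming and lowercasing.
        key = text.strip().lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append((text.strip(), label))

    # Shuffle with a fixed seed so the split is reproducible.
    rng = random.Random(seed)
    rng.shuffle(cleaned)
    n_eval = max(1, int(len(cleaned) * eval_fraction))
    return cleaned[n_eval:], cleaned[:n_eval]

# Hypothetical sample data.
records = [
    ("fever and cough", "flu"),
    ("Fever and cough ", "flu"),   # duplicate after normalization
    ("", "unknown"),               # missing text
    ("joint pain", None),          # missing label
    ("rash on arms", "allergy"),
    ("persistent headache", "migraine"),
    ("shortness of breath", "asthma"),
    ("chest tightness", "asthma"),
]
train, evaluation = clean_and_split(records)
print(len(train), len(evaluation))
```

Keeping the evaluation set separate from the start means that later tuning decisions are never scored on data the model has already seen.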

Example: Acquiring Quality Training Data

To exemplify the importance of training data quality, consider a healthcare AI model tasked with diagnosing rare diseases. Data from both public sources, such as medical research papers and public health databases, and private sources, such as hospital records and patient lab results, should be collected. This diverse data collection approach enables the model to learn from a comprehensive range of medical cases, contributing to better accuracy and performance in diagnosing rare conditions.

Optimize Model Hyperparameters

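Hyperparameters play a critical role in determining the performance of AI models. These settings, such as the learning rate, batch size, or number of layers, are chosen before training rather than learned from the data. They shape both the model's architecture and its training process, and can significantly influence accuracy, efficiency, and generalization. Optimizing hyperparameters is an essential step in maximizing model performance.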

Several techniques can be employed to fine-tune hyperparameters and tailor the model architecture to the specific dataset and use case. Some common methods include:

- Grid search: A systematic approach that exhaustively evaluates a predefined set of hyperparameter combinations and selects the best configuration among them based on specified evaluation metrics.

- Random search: An alternative technique that randomly samples hyperparameter values within predefined ranges, allowing for a broader exploration of the hyperparameter space.

- Bayesian optimization: A more advanced approach that fits a probabilistic model of the relationship between hyperparameters and evaluation metrics, then uses that model to decide which configuration to try next, typically reaching a good configuration in fewer trials.

Before conducting hyperparameter tuning, it is crucial to establish clear evaluation metrics that align with the desired outcome. These metrics can include accuracy, precision, recall, F1-score, or any other relevant performance measure based on the problem domain.
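For a binary classifier, the metrics named above follow directly from counts of true positives, false positives, and false negatives. The sketch below is illustrative only; the helper name `classification_metrics` and the sample labels are made up for the example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical labels and predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Which metric to tune against depends on the cost of each error type: recall matters more when missed positives are expensive, precision when false alarms are.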

Hyperparameter tuning is a resource-intensive process that may require significant computational power and time. However, the benefits of optimizing hyperparameters can be substantial. Models that are well-tailored to the data and use case tend to exhibit improved generalization, precision, and efficiency.

Example: Comparison of Hyperparameter Tuning Techniques

Let's consider an example of optimizing hyperparameters in a deep learning model for image classification. The goal is to achieve the highest possible accuracy on a specific dataset.
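Training an actual image classifier does not fit in a short snippet, so the sketch below substitutes a hypothetical `validation_accuracy` function for the train-and-evaluate step. Its shape, its peak at a learning rate of 1e-3 and a dropout rate of 0.3, and the search ranges are all arbitrary assumptions; only the search logic is the point. Bayesian optimization is omitted because it usually relies on a dedicated library.

```python
import itertools
import math
import random

def validation_accuracy(learning_rate, dropout_rate):
    """Hypothetical stand-in for training a model and scoring it on validation data."""
    # Arbitrary smooth surface that peaks at learning_rate=1e-3, dropout_rate=0.3.
    lr_term = (math.log10(learning_rate) + 3) ** 2
    drop_term = (dropout_rate - 0.3) ** 2
    return 0.95 - 0.05 * lr_term - 0.5 * drop_term

def grid_search(learning_rates, dropout_rates):
    """Evaluate every combination and return the best one."""
    candidates = itertools.product(learning_rates, dropout_rates)
    return max(candidates, key=lambda c: validation_accuracy(*c))

def random_search(n_trials, seed=0):
    """Sample configurations at random and return the best one."""
    rng = random.Random(seed)
    candidates = [
        # Learning rate is sampled on a log scale, dropout uniformly.
        (10 ** rng.uniform(-5, -1), rng.uniform(0.0, 0.6))
        for _ in range(n_trials)
    ]
    return max(candidates, key=lambda c: validation_accuracy(*c))

best_grid = grid_search([1e-4, 1e-3, 1e-2], [0.1, 0.3, 0.5])
best_random = random_search(n_trials=9)
print("grid:  ", best_grid, validation_accuracy(*best_grid))
print("random:", best_random, validation_accuracy(*best_random))
```

Both searches here spend nine evaluations. The grid only ever tries three distinct values per hyperparameter, while random search tries nine distinct values of each, which is why it often covers the space better when only a few hyperparameters matter.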

Optimizing hyperparameters is crucial for maximizing AI model performance. By employing suitable techniques such as grid search, random search, or Bayesian optimization, organizations can tailor the model architecture to the data and use case. This process demands computational resources but can significantly enhance model generalization, precision, and efficiency.

Regularize to Prevent Overfitting

Overfitting is a common challenge in AI model development, where a model becomes overly sensitive to the training data and performs poorly on new, unseen data. To address this issue and enhance the model's ability to generalize well, regularization techniques are employed. These techniques selectively constrain the model's learning capacity, preventing it from fitting noise or irrelevant patterns in the training data.

One popular regularization technique is L1/L2 regularization, which adds a penalty term to the loss function. The L2 penalty (the sum of squared weights) discourages large weights, while the L1 penalty (the sum of absolute weights) pushes some weights to exactly zero, effectively selecting the most relevant features. Bias terms are usually left unpenalized. Both penalties reduce the model's susceptibility to overfitting. By controlling the regularization strength with a hyperparameter, the model can strike a balance between fitting the training data and generalizing to new examples.
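As a minimal sketch, the penalty can be written as an addition to whatever data loss the model already computes. The function name `regularized_loss`, the strengths `l1` and `l2`, and the sample weights are illustrative assumptions.

```python
def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Add L1 and L2 penalty terms to an already computed data loss."""
    l1_penalty = l1 * sum(abs(w) for w in weights)   # sum of absolute weights
    l2_penalty = l2 * sum(w * w for w in weights)    # sum of squared weights
    return data_loss + l1_penalty + l2_penalty

# Hypothetical weights and data loss.
weights = [0.5, -2.0, 0.0, 1.5]
print(regularized_loss(0.40, weights))            # no penalty
print(regularized_loss(0.40, weights, l2=0.01))   # L2 only
print(regularized_loss(0.40, weights, l1=0.01))   # L1 only
```

Because the squared term grows fastest for the largest weights, the L2 penalty here is dominated by the weight of -2.0, which is the one the optimizer would be pushed hardest to shrink.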

Another effective technique is dropout, where certain neurons are randomly and temporarily ignored during training. This forces the model to rely on a more diverse representation of the input, reducing the reliance on specific features and improving generalization. Dropout layers can be inserted within the model architecture to regularize specific layers or applied globally across the entire model.
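A common formulation is "inverted" dropout, where surviving activations are scaled up during training so that nothing needs to change at inference time. The sketch below shows that variant on a plain list of activations; it is an illustration under that assumption, not the implementation of any particular framework.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate` while training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time the layer passes activations through unchanged.
        return list(activations)
    keep = 1.0 - rate
    # Scale survivors by 1/keep so the expected activation stays the same.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
hidden = [1.0] * 10
dropped = dropout(hidden, rate=0.5, rng=rng)
print(dropped)                                            # mix of 0.0 and 2.0
print(dropout(hidden, rate=0.5, rng=rng, training=False)) # unchanged
```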

Early stopping is a powerful regularization strategy that involves monitoring a validation metric, such as validation loss or accuracy, during training. The training process is stopped when the validation metric starts to deteriorate, indicating that the model has started to overfit. This prevents the model from continuing to optimize its performance on the training data at the expense of generalization.
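In practice, early stopping is usually given some patience, so that a single noisy epoch does not end training. The sketch below applies that rule to a list of per-epoch validation losses; the `patience` and `min_delta` parameters and the loss values are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Training ran to completion without triggering the stop rule.
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses: improving, then deteriorating.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print(stop_epoch, best_epoch)  # stops at epoch 5, best weights from epoch 3
```

Returning the best epoch as well as the stop epoch reflects the usual practice of restoring the weights saved at the best validation score rather than keeping the last ones.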

Noise injection is another technique that promotes model generalization. Synthetic noise, such as random perturbations added to the input data or during the training process, helps the model learn to be more robust and resilient to variations in the input. By training on noisy data, the model becomes less susceptible to overfitting and more adaptable to different scenarios.
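One simple form of noise injection adds zero-mean Gaussian noise to each input feature before a training step. The sketch below assumes inputs are lists of floats; the helper name `add_gaussian_noise` and the standard deviation are hypothetical choices.

```python
import random

def add_gaussian_noise(batch, std, rng):
    """Return a copy of the batch with zero-mean Gaussian noise added to each feature."""
    return [[x + rng.gauss(0.0, std) for x in row] for row in batch]

rng = random.Random(0)
batch = [[0.2, 0.8], [0.5, 0.1]]
# A fresh noisy copy would typically be drawn for every training step.
noisy_batch = add_gaussian_noise(batch, std=0.05, rng=rng)
print(noisy_batch)
```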

Monitoring Model Robustness

To ensure the effectiveness of regularization techniques in reducing overfitting, it's essential to monitor the model's robustness over time. Validation loss and accuracy are commonly used metrics to evaluate the model's performance on unseen data. By regularly evaluating these metrics during training, developers can ensure that the model is not underfitting or overfitting the data.

To further evaluate the model's robustness, synthetic noise testing can be incorporated. By introducing synthetic noise to the input data during testing, performance degradation can be assessed. A model whose performance stays stable as noise increases is likely to generalize better and to be less affected by overfitting.
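A noise test of this kind can be sketched by scoring the same model at increasing noise levels. Here `predict` is a hypothetical stand-in for a trained model, and the data, noise levels, and seed are arbitrary.

```python
import random

def predict(x):
    """Hypothetical stand-in for a trained model: class 1 if the feature sum is positive."""
    return 1 if sum(x) > 0 else 0

def accuracy_under_noise(inputs, labels, std, rng):
    """Accuracy when Gaussian noise with the given std is added to every feature."""
    correct = 0
    for x, y in zip(inputs, labels):
        noisy = [v + rng.gauss(0.0, std) for v in x]
        correct += predict(noisy) == y
    return correct / len(inputs)

rng = random.Random(0)
inputs = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(200)]
labels = [predict(x) for x in inputs]  # clean predictions serve as ground truth here

for std in (0.0, 0.1, 0.5, 1.0):
    print(std, accuracy_under_noise(inputs, labels, std, rng))
```

A gradual decline in accuracy as `std` grows is the pattern one hopes to see; a sharp drop at small noise levels suggests the model depends on fragile details of its inputs.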

Regularization Techniques to Prevent Overfitting

| Technique | Description |
|---|---|
| L1/L2 Regularization | Adds a penalty term to the loss function that discourages large weights, reducing overfitting. |
| Dropout | Randomly ignores neurons during training to encourage the model to rely on a more diverse representation of the input. |
| Early Stopping | Stops the training process when a validation metric starts deteriorating, preventing overfitting. |
| Noise Injection | Introduces synthetic noise to the input data or during the training process to enhance the model's robustness. |

By leveraging regularization techniques and closely monitoring model performance, overfitting can be mitigated, enabling AI models to generalize better and perform well on unseen data.

Select the Right Hardware Infrastructure

Optimizing hardware infrastructure is a critical aspect of enhancing AI model performance. By leveraging advanced hardware capabilities, organizations can accelerate AI model training and inference, leading to improved efficiency and speed. This section explores key considerations for hardware optimization, including the CPU vs GPU dilemma and the benefits of cloud-based AI development platforms.

CPU vs GPU: Unleashing the Power of Parallel Processing

When it comes to AI workloads, graphics processing units (GPUs) have emerged as powerful tools for hardware optimization. GPUs excel at parallel processing, allowing for the simultaneous execution of multiple compute-intensive tasks. This parallel processing capability is particularly advantageous for deep learning workloads, which often involve complex calculations and large datasets.

Compared to central processing units (CPUs), which prioritize sequential processing, GPUs can significantly speed up AI model training and inference. By harnessing the immense computational power of GPUs, organizations can reduce training times and obtain faster results.

Cloud-based AI Development Platforms: Scalability and Accessibility

The cloud has revolutionized AI development by offering flexible and scalable infrastructure solutions. Cloud-based AI development platforms, such as Google Cloud, AWS, and Azure, provide accessible and cost-effective options for optimizing hardware infrastructure.

These platforms offer easy scalability of GPU power, enabling organizations to adjust computing resources based on their needs. Whether it's ramping up GPU capabilities during intense training periods or scaling down during lighter workloads, cloud-based platforms offer the agility required for efficient AI model development and optimization.

Furthermore, cloud-based AI development platforms eliminate concerns about physical hardware limitations and maintenance. Organizations can leverage these platforms' infrastructure as a service (IaaS) offerings, relying on the expertise of cloud providers to manage their hardware resources. This allows for focus on AI development and optimization rather than worrying about infrastructure management.

Specialized Hardware: Accelerating AI Performance

Beyond CPUs and GPUs, specialized hardware has emerged to further optimize AI model performance. Field-programmable gate arrays (FPGAs), which can be reconfigured for a particular workload, and application-specific integrated circuits (ASICs) designed for AI workloads offer optimizations tailored to neural networks.

These specialized hardware solutions can provide significant performance improvements by accelerating computations and reducing power consumption. However, the adoption of specialized hardware requires careful evaluation of its compatibility with AI models and associated costs.

When selecting the right hardware infrastructure, organizations must consider their processing needs, budgetary constraints, and accessibility requirements. Weighing these factors will help create an optimal hardware environment that maximizes AI model performance.

Beyond hardware, the choice of model deployment strategy also affects how well an optimized model performs in production.

Conclusion

Optimizing AI model performance requires a systematic and iterative approach. By fine-tuning model selection and acquiring quality training data, organizations can lay a strong foundation for maximizing performance. Taking the time to optimize hyperparameters and select the right hardware infrastructure further enhances the efficiency and accuracy of AI models.

Regular monitoring and error analysis are essential for maintaining optimal performance. It's crucial for organizations to continuously improve their AI models by incorporating feedback and making necessary adjustments. By following these optimization techniques and best practices, businesses can deploy AI models that unleash their full potential and achieve excellence in artificial intelligence.

Model deployment is the final step in the AI model optimization process. When deploying AI models, it's important to consider factors such as scalability, security, and integration with existing systems. Effective deployment ensures that the optimized AI models can be seamlessly integrated into production environments and deliver their intended benefits.

FAQ

What is AI model optimization?

AI model optimization refers to the process of enhancing the performance of artificial intelligence models to achieve accurate, efficient, and scalable outcomes.

How important is model selection in AI model optimization?

Model selection is crucial for AI model optimization. Off-the-shelf generic models may not deliver the desired outcomes, so it is essential to evaluate their architecture, data sources, and similarity to the problem at hand. Custom development of specialized neural network models often leads to superior performance compared to repurposing generic or outdated models.

Why is acquiring quality training data important for AI model optimization?

Acquiring high-volume, high-quality, and relevant training data is essential for optimizing AI model performance. This data should be representative of the problem space and provide sufficient examples for the model to learn meaningful patterns and relationships. Ensuring data integrity by reviewing for errors, inconsistencies, and biases and practicing data hygiene techniques such as cleaning, processing, labeling, and splitting the data into training and evaluation sets lays the foundation for a robust AI model.

How can hyperparameters be optimized to enhance AI model performance?

Hyperparameters are settings chosen before training, such as the learning rate or number of layers, that significantly impact performance. Techniques like grid search, random search, or Bayesian optimization can be used to optimize these hyperparameters, tailoring the model architecture to the data and use case. Clear evaluation metrics should be established, and hyperparameters should be tuned based on their impact on these metrics. This process requires computational resources but can enhance model generalization, precision, and efficiency.

What is overfitting, and how can it be prevented in AI model optimization?

Overfitting occurs when a model excessively conforms to the training data, compromising its ability to handle new, unseen data. Regularization techniques such as L1/L2 regularization, dropout layers, early stopping criteria, and noise injection can help reduce overfitting. These techniques selectively constrain the model, allowing it to generalize better. Monitoring metrics like validation loss and incorporating synthetic noise testing can also contribute to model robustness.

How does hardware optimization contribute to AI model optimization?

Hardware optimization plays a crucial role in speeding up AI model training and inference. GPU parallel processing capabilities often enable faster execution of deep learning workloads. Cloud-based AI development platforms like Google Cloud, AWS, and Azure allow for easy scalability of GPU power. Furthermore, specialized hardware such as FPGAs and ASIC chips designed for AI can further accelerate performance through optimizations tailored to neural networks. Infrastructure decisions should consider processing needs, costs, and accessibility.

What is the importance of regularly monitoring and improving AI model performance?

Optimizing AI model performance requires a systematic and iterative approach. From fine-tuning model selection and acquiring quality training data to optimizing hyperparameters and selecting the right hardware infrastructure, each step contributes to improving efficiency and accuracy. Regular monitoring, error analysis, and continuous improvement are necessary for maintaining optimal performance. By following these optimization techniques and best practices, organizations can unlock the full potential of their AI models and achieve excellence in artificial intelligence.