How 3D Point Cloud Segmentation Will Make The Future Hands-Free

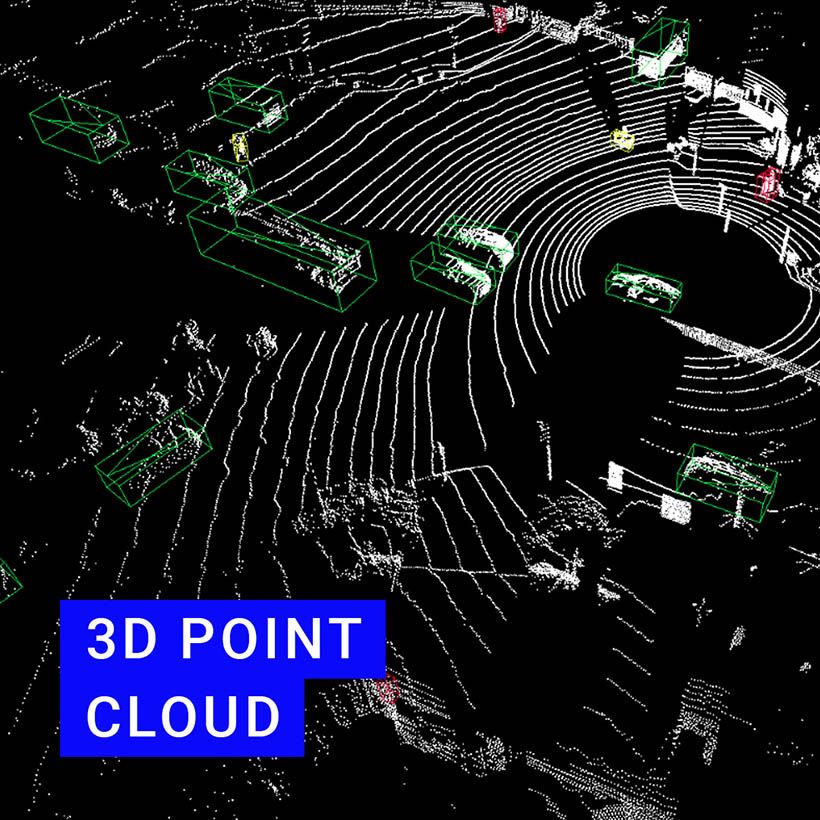

What is a 3D point cloud? Human eyes automatically define the objects we see. We measure their three-dimensional shape at the same time. On your commute, you pass pedestrians, vehicles, and road features. When objects enter your vision, you identify their 3D shapes and classify them. That’s 3D point cloud segmentation from the human perspective. AI technology might be ahead of humans in many areas, but not this one.

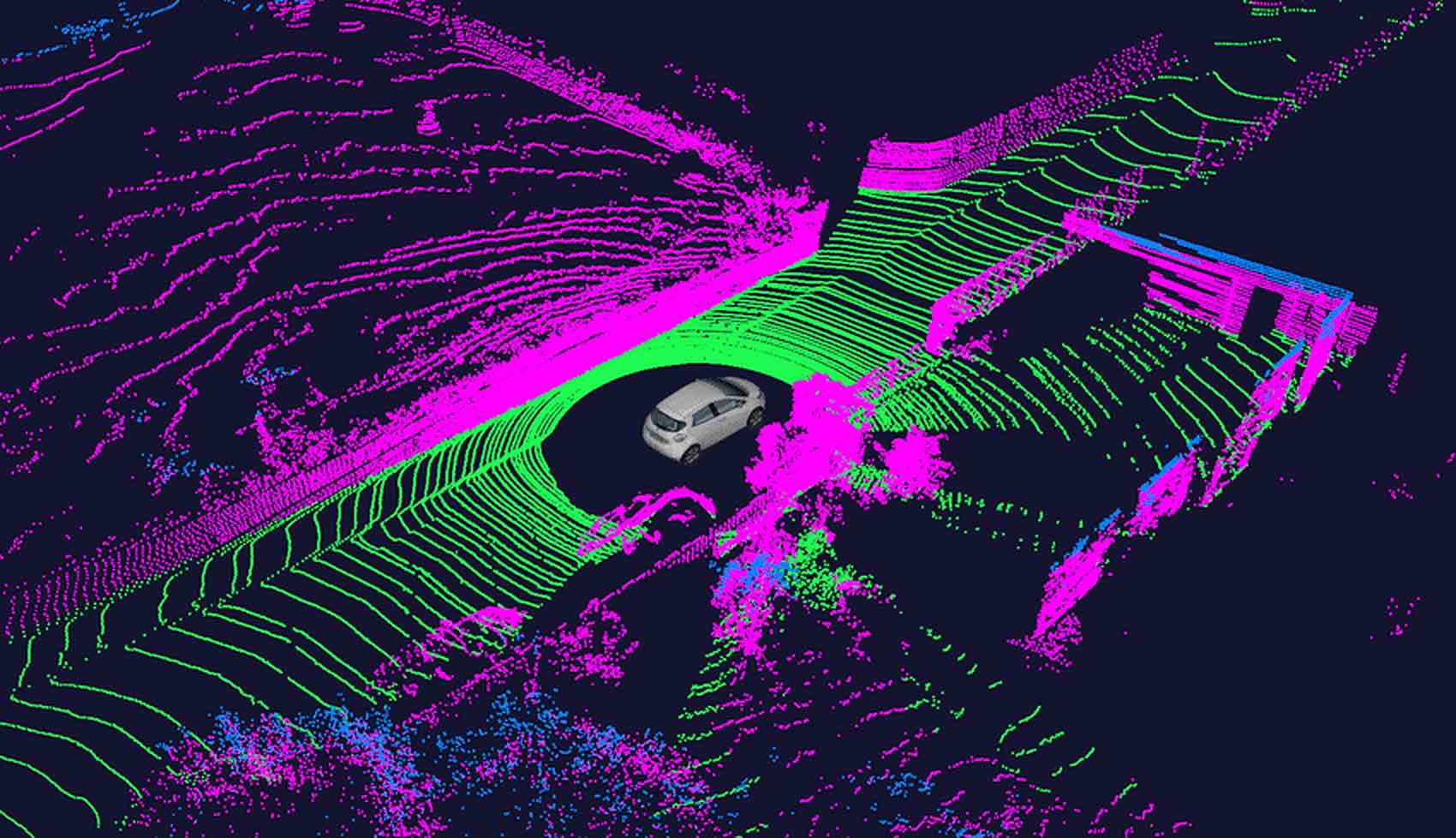

Millions of laser pulses are sent out from a sensor, then measured as they return. Each return is given XYZ values, and together the points form a 3D outline of whatever the laser hit. This is LiDAR, which stands for “light detection and ranging”. It’s used in autonomous vehicle data collection to create 3D point clouds. Machines need advanced object detection and instance segmentation to drive us safely. Self-driving vehicles, medical imaging, and agricultural monitoring all benefit from developing this technology.
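To make the XYZ idea concrete, here is a minimal sketch of how one laser return could become one point. It assumes the sensor reports a round-trip time plus the beam’s azimuth and elevation angles; the function name `return_to_xyz` and that input format are illustrative assumptions, not any particular LiDAR vendor’s API.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def return_to_xyz(round_trip_s, azimuth_deg, elevation_deg):
    """Convert one laser return into an (x, y, z) point in metres.

    Hypothetical helper: assumes the sensor sits at the origin and
    reports round-trip time, azimuth, and elevation for each return.
    """
    # The pulse travels out and back, so halve the total path length.
    distance_m = SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    # Spherical-to-Cartesian conversion.
    x = distance_m * math.cos(elevation) * math.cos(azimuth)
    y = distance_m * math.cos(elevation) * math.sin(azimuth)
    z = distance_m * math.sin(elevation)
    return (x, y, z)


# A point cloud is simply many of these points collected together.
returns = [(6.0e-8, 0.0, 0.0), (6.5e-8, 15.0, 2.0), (7.1e-8, 30.0, -1.0)]
point_cloud = [return_to_xyz(t, az, el) for t, az, el in returns]
```

Real sensors also correct for their own motion and mounting position, which this sketch leaves out.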

3D Point Clouds And Our World’s Autonomous Future

When our machines have a three-dimensional understanding of their environment, they can do a lot for us. That being said, we expect a high standard of AI precision before we’ll hand over the reins. We’re still struggling in several areas of point cloud processing. Point clouds captured with present-day methods are highly redundant and unevenly sampled. In addition, processing sometimes fails to produce explicit polygon structures.
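One widely used way to reduce redundancy and even out sampling is voxel-grid downsampling: divide space into small cubes and keep one averaged point per cube. The article does not name a specific method, so treat the following as an illustrative sketch; `voxel_downsample` and its parameters are assumed names.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Return one centroid per occupied voxel (cube of side voxel_size).

    Illustrative helper, not taken from a specific library.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Group points by the integer index of the voxel they fall into.
    buckets = defaultdict(list)
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        buckets[key].append((x, y, z))

    # Replace each group with its centroid.
    downsampled = []
    for group in buckets.values():
        n = len(group)
        downsampled.append(tuple(sum(axis) / n for axis in zip(*group)))
    return downsampled


# Densely sampled regions collapse to a few points, while sparse
# regions are left nearly untouched.
dense_and_sparse = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (5.0, 5.0, 5.0)]
thinned = voxel_downsample(dense_and_sparse, voxel_size=1.0)
```

The voxel size is a trade-off: larger cubes remove more redundancy but also blur fine detail.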

Challenges aside, the future couldn’t be brighter. When AI can navigate itself through different environments, processes get optimized. There’s growing concern that human workers will be less necessary. That’s undeniably true, but the avenues AI opens might be a solution. Let’s take a digital step further and examine how society will benefit.

Autonomous Driving

There’s a lot of focus on this area for a good reason. Having AI systems behind the wheel of our vehicles could raise safety standards around the globe. Efficiency rises, too, along with reliability. The Centre for Data Ethics and Innovation says that safety alone isn’t enough. The public might be unwilling to tolerate self-driving car crashes, even if autonomous vehicles are safer overall than human drivers.

To earn that trust, autonomous vehicle data collection occurs on a massive scale. Humans make decisions on the road automatically, but machines require vast datasets. Among many things, the data holds object detection and instance segmentation labels. When combined with 3D point clouds, this data trains autonomous driving programs.

Medical Imaging

Doctors are highly trained but relatively scarce. Medical AI could offer the knowledge and object segmentation skills of surgeons without their scarcity. We need programs that create an accurate segmentation polygon for each object in medical imagery. Just like in other areas of automation, the standards are uncompromising.

Medical point clouds have already proven to be more accurate than traditional methods. For example, in the case of a spinal CT scan, point cloud models give unmatched detail. Precise bone reconstruction and advanced diagnoses are just two of the areas that benefit. With this technology, we’ll save more lives and improve others.

Aerial Crop Surveying

Assessing how crops perform is easier with 3D point clouds. Most farmers use drones or small aircraft to survey crops manually. When AI gets involved, the whole process speeds up. First, farmers will check crop health, size, and density by building point clouds. Then, with great precision, autonomous drones will collect agricultural data. A segmentation polygon around a plant species can feed farmers a lot of data.

Quality Inspections

Creating a reference point cloud for a product makes for consistent production. For example, an assembly line can use it to enforce higher quality standards. By forming a segmentation polygon around each manufactured object, we can compare it to our reference point cloud polygon and flag any deviation.
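The comparison against a reference can be sketched as a nearest-neighbor check: for every scanned point, find the closest reference point and see how far away it is. This is a simplified illustration that assumes the object has already been segmented and aligned to the reference; the function names and the tolerance value are hypothetical.

```python
import math


def nearest_distance(point, cloud):
    """Distance from one point to its closest neighbor in a cloud."""
    return min(math.dist(point, other) for other in cloud)


def deviation_from_reference(scan, reference):
    """Return (mean, worst) nearest-neighbor distance from scan to reference.

    Brute force for clarity; a production system would likely use a
    spatial index such as a k-d tree.
    """
    distances = [nearest_distance(p, reference) for p in scan]
    return sum(distances) / len(distances), max(distances)


def passes_inspection(scan, reference, tolerance):
    """Accept the part if no scanned point strays beyond the tolerance."""
    _, worst = deviation_from_reference(scan, reference)
    return worst <= tolerance


reference_part = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
scanned_part = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.1), (0.0, 1.0, 0.1)]
ok = passes_inspection(scanned_part, reference_part, tolerance=0.2)
```

This check is one-sided: it catches material where none should be, but not a missing piece. Running it in both directions (scan to reference, then reference to scan) covers both cases.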

What This Means For The Human Workforce

There’s no question about it. The employment landscape is changing before our eyes. Automation provides and takes indiscriminately, and our only choice is to adapt. Generally speaking, technological advancements outshine their repercussions. Still, we aren’t forging ahead without considering how we’ll be affected. Governments around the world are beginning to address this future issue. There’s no doubt that workforces will shrink and labor markets will be disrupted.

The industries in the last section benefit but will experience staff redundancies. In many cases, employees will transition into supervisory roles. For local machines, this supervision often happens through a Human Machine Interface (HMI). An HMI is the panel or software through which a human worker monitors and manages a machine process.

AI systems will need human management and guidance for some time yet. One example is road semantic segmentation, the process of labeling each part of a road scene by category, such as different road surfaces. We still need human input when AI segmentation makes errors.