Enhancing Autonomous Driving with Semantic Segmentation

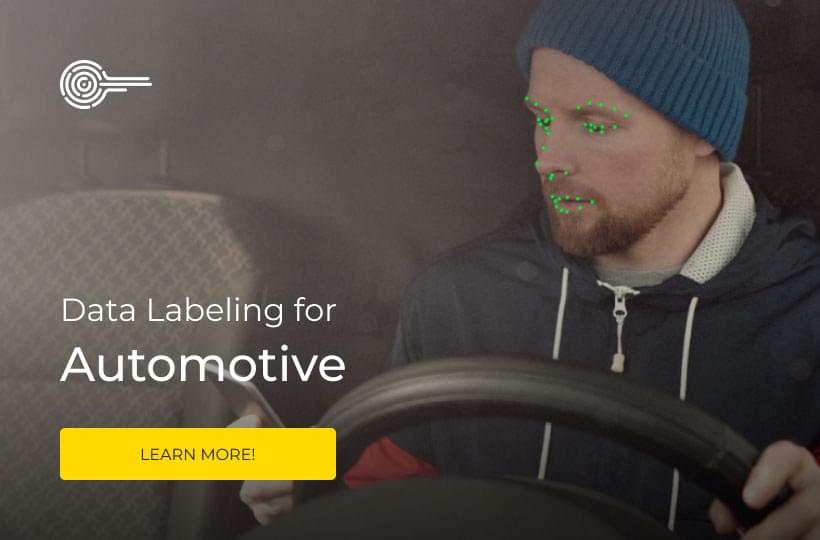

Autonomous driving technology has revolutionized the automotive industry, promising safer and more efficient transportation. Central to this technology is the perception system, which allows autonomous vehicles to understand and interpret their surroundings. One crucial component of the perception system is semantic segmentation, an advanced computer vision technique that enables accurate object classification at the pixel level.

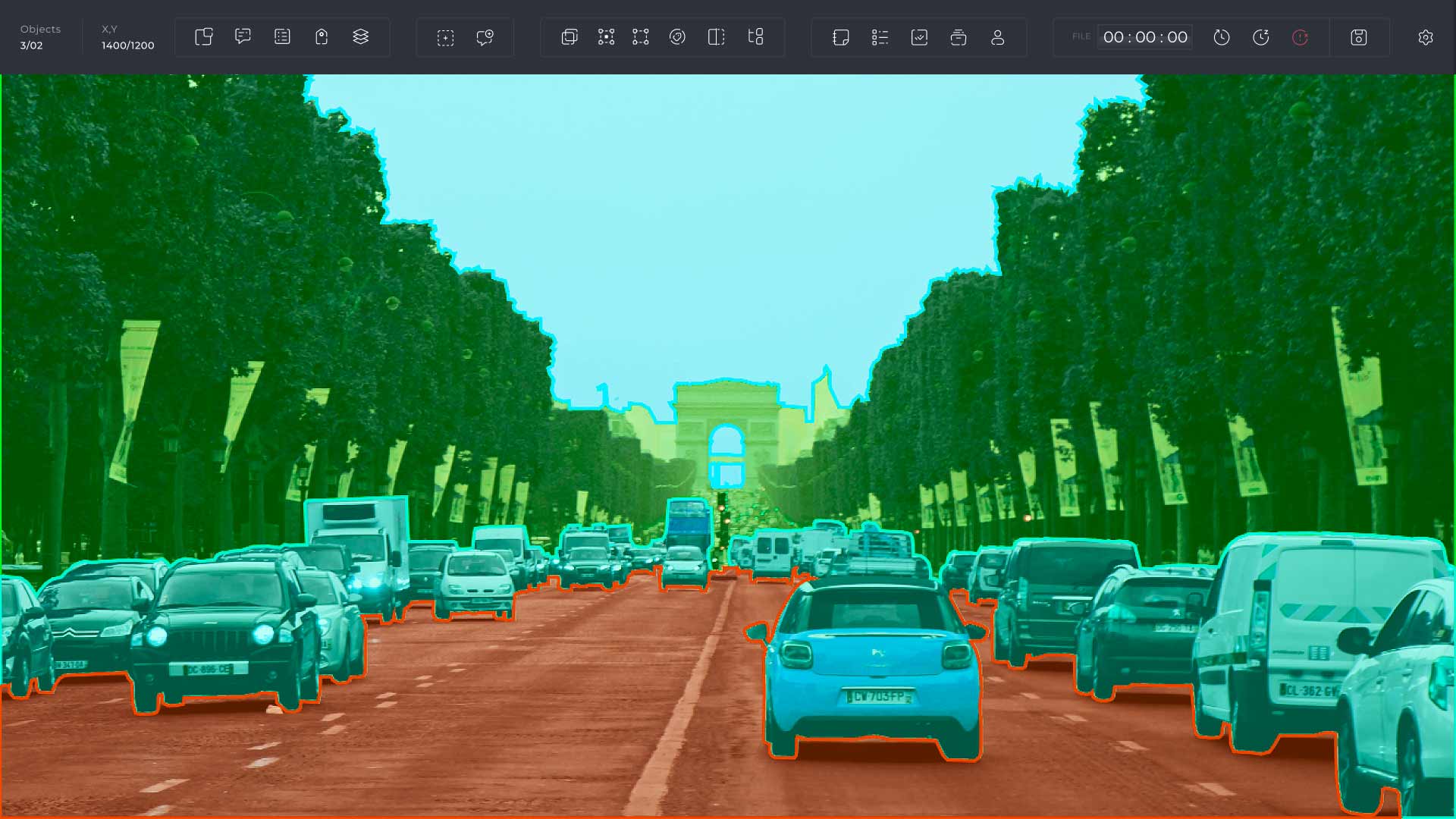

Semantic segmentation in autonomous driving involves dividing an image into distinct segments and assigning each segment a specific label, such as vehicles, pedestrians, road signs, and obstacles. This precise understanding of the environment is essential for autonomous vehicles to navigate safely and make informed decisions on the road.

- Semantic segmentation enhances the perception system of autonomous vehicles, enabling accurate object classification at the pixel level.

- It plays a crucial role in enhancing the safety and efficiency of autonomous driving technology.

- By accurately identifying objects in real-time, semantic segmentation enables autonomous vehicles to make informed decisions on the road.

- Advanced computer vision techniques, attention mechanisms, and synthetic data augmentation contribute to the improvement of semantic segmentation in autonomous driving.

- The future of autonomous driving relies heavily on advancements in semantic segmentation technology.

Challenges of Semantic Segmentation in Autonomous Driving

The accuracy of semantic segmentation models in autonomous driving heavily relies on supervised learning methods. These models are trained on large annotated datasets, enabling them to classify predefined objects with high accuracy. However, semantic segmentation faces several challenges when applied to real-world driving scenarios, particularly in dangerous situations.

Hazardous driving scenarios often involve variations and anomalies in the environment that may go beyond the predefined categories. These variations can include unexpected objects, unusual conditions, or dynamic elements that pose risks to the safety of autonomous vehicles. While supervised learning excels in classifying known objects, it struggles to identify and handle these unforeseen variations effectively.

Collecting training datasets that encompass every possible variation in hazardous scenarios is impractical, as it would require extensive effort, time, and resources. Therefore, semantic segmentation models need to address the challenge of detecting and adapting to variations and anomalies that may arise in real-world driving environments.

Current approaches to address variations and anomalies in semantic segmentation utilize uncertainty estimation techniques and augmenting the training pipeline with additional tasks. Uncertainty estimation seeks to quantify the uncertainty of the model's predictions, allowing it to identify regions or instances where the model may struggle or make errors. Augmenting the training pipeline with additional tasks aims to expose the model to a wider range of variations and anomalies during training, enhancing its robustness.

However, these methods are not without limitations. Uncertainty estimation techniques may introduce noisy results and require careful tuning to strike the right balance between uncertainty and accuracy. Augmenting the training pipeline with additional tasks can increase the computational complexity and training time, making it challenging to deploy these models in real-time autonomous driving systems.

Human Attention Mechanisms and Perception Skills

Human attention mechanisms are an integral part of our cognitive processes, allowing us to selectively focus on specific stimuli while filtering out irrelevant information. Our remarkable environmental perception skills enable us to effortlessly identify invariant elements even amidst variations and anomalies. These skills play a critical role in semantic segmentation for autonomous driving scenes, where accurately classifying objects is essential for safe navigation. By leveraging the intricacies of human attention mechanisms and aligning them with feature attributes associated with variations and anomalies, we can enhance the accuracy and reliability of semantic segmentation models.

Our ability to selectively attend to relevant information and disregard distractions can be attributed to the remarkable cognitive processes of our attention mechanisms. These mechanisms enable us to prioritize important cues and make sense of complex visual scenes. In the context of semantic segmentation for autonomous driving, our environmental perception skills allow us to recognize objects across different variations and anomalies, contributing to a safer and more efficient driving experience.

| Human Attention Mechanisms | Environmental Perception Skills |

|---|---|

| The ability to selectively focus on specific stimuli | Effortlessly identifying invariant elements amidst variations and anomalies |

| Filtering out irrelevant information | Recognizing objects across different variations and anomalies |

| Prioritizing important cues in visual scenes | Contributing to a safer and more efficient driving experience |

Through ongoing research and development in this area, we can continue to push the boundaries of autonomous driving technology, ensuring safer and more reliable systems on our roads. By modeling human attention mechanisms and perception skills, we aim to refine semantic segmentation algorithms, enhancing their ability to accurately detect and classify objects in a wide range of driving scenarios.

Scale Attention Mechanism for Semantic Segmentation

In autonomous driving scenarios, objects in the environment can vary significantly in size and shape, posing a challenge for accurate recognition and classification. To address this issue, we employ a scale attention mechanism that operates over multiple image scales within the network architecture. This mechanism enhances the accuracy and robustness of semantic segmentation, providing more reliable information for autonomous driving scenarios.

The scale attention mechanism works by integrating the results from different scales to improve object recognition at varying resolutions. By considering multiple image scales, the model can effectively capture both small and large objects in the scene, enhancing the overall semantic segmentation performance. This approach enables the perception system to adapt to the substantial scale changes commonly encountered in autonomous driving.

The scale attention mechanism operates within the network architecture, allowing the model to dynamically adjust its attention and focus on the most relevant features at each scale. This adaptive mechanism enhances the model's ability to accurately classify objects across different scales, enhancing its versatility and performance.

The scale attention mechanism is a valuable component of the perception system in autonomous vehicles, enabling them to accurately classify objects at varying scales. By effectively addressing the challenge of scale change, semantic segmentation models can provide reliable information for autonomous driving, contributing to safer and more efficient mobility.

Integration of Spatial and Channel Attention

Semantic segmentation models require a robust understanding of object positions and importance across different scales. To achieve this, two critical attention mechanisms, spatial attention and channel attention, are integrated into the model architecture. These attention mechanisms enable the model to focus on relevant areas and prioritize key channels for accurate segmentation.

Spatial Attention: Enhancing Focus and Overcoming Occlusions

Spatial attention plays a vital role in guiding the model's focus towards the most relevant regions within an image. By leveraging spatial attention, the model can effectively localize objects and overcome challenges such as occlusions. This attention mechanism enables the model to handle complex scenes by ensuring that the most essential regions are accurately identified and segmented.

Channel Attention: Prioritizing Key Appearance Features

Channel attention allows the model to prioritize channels that contain critical appearance features for identifying specific objects. Each channel represents a distinct feature, and by carefully selecting and weighting these channels, the model can enhance its ability to differentiate between different object classes. By utilizing channel attention, semantic segmentation models can effectively capture the unique visual characteristics of objects, leading to more accurate segmentation results.

By integrating both spatial and channel attention, semantic segmentation models can effectively handle diverse object characteristics and improve their performance in challenging scenarios. This fusion of attention mechanisms enables the model to capture both spatial and appearance features, resulting in more accurate and reliable segmentation results.

Multi-Scale Adaptive Attention Mechanism

The Multi-Scale Adaptive Attention Mechanism (MSAAM) is a cutting-edge approach that revolutionizes semantic segmentation in autonomous driving scenarios. By integrating multiple scale features and adaptively selecting the most relevant ones, MSAAM achieves precise and accurate semantic segmentation, enhancing the discriminative power of features.

The attention module of MSAAM combines three attention mechanisms - spatial, channel-wise, and scale-wise - to effectively identify and highlight important visual elements for segmentation. This comprehensive attention framework enables the model to focus on the most critical regions and object attributes, significantly improving performance.

Now, let's explore the MSAAM's attention mechanisms in more detail:

Spatial Attention

Spatial attention focuses on understanding the relative positions and importance of objects at different scales in the input image. By selectively attending to key spatial locations, the model can effectively handle occlusions and better identify objects in complex scenes.

Channel Attention

Channel attention allows the model to prioritize important appearance features by assigning varying weights to different channels. By emphasizing channels that contain critical discriminative information, the model achieves more accurate and robust segmentation results.

Scale Attention

Scale attention addresses the challenge of substantial scale changes in environmental objects, which can vary greatly in size and shape. By operating over multiple image scales, the model captures diverse representations of objects and intelligently combines them, resulting in enhanced discriminative power and segmentation accuracy.

The integration of these attention mechanisms within the MSAAM framework enables it to leverage contextual information and adaptively select the most relevant features for each segmentation task. The result is a groundbreaking approach that significantly advances the field of semantic segmentation in autonomous driving environments.

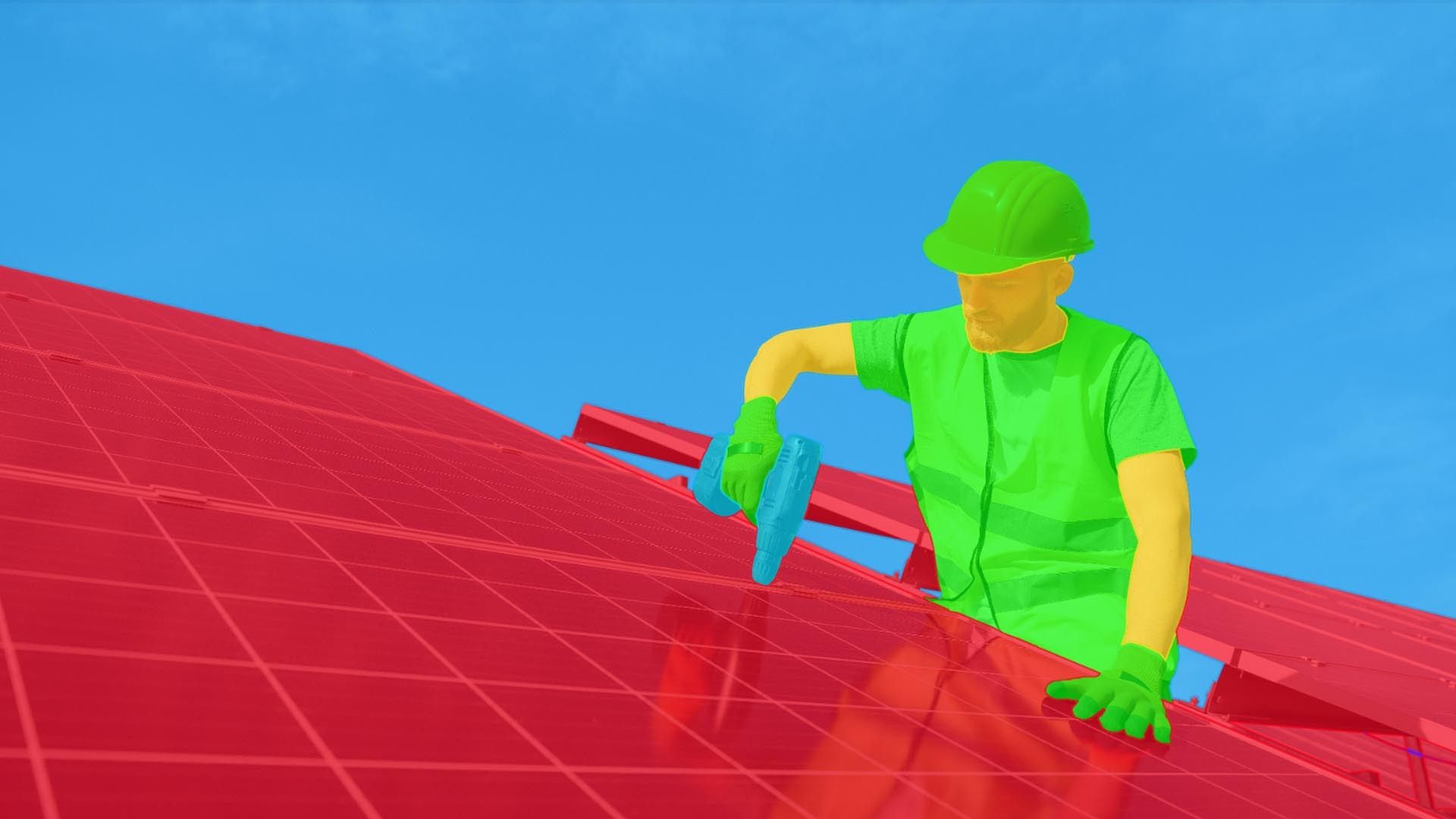

Synthetic Data for Semantic Segmentation

Synthetic data has emerged as a promising solution to address the scarcity of labeled real-world images for training semantic segmentation models. Open-source driving simulators like CARLA provide us with the ability to generate diverse and large-scale synthetic datasets that closely resemble real-world environments.

By augmenting real-world datasets with synthetic data, we can significantly improve the accuracy of semantic segmentation models. Synthetic data offers several advantages, including lower costs and the ability to generate diverse data on a large scale. This allows us to overcome the limitations posed by the limited availability of labeled real-world data.

Open-source driving simulators like CARLA provide a powerful platform for generating synthetic data that captures the complexities of real-world driving scenarios. These simulators offer a range of customization options to create diverse datasets that encompass a wide variety of driving conditions, weather scenarios, and traffic patterns. The generated synthetic images can then be combined with real-world datasets to create augmented training sets.

By incorporating synthetic data, we can enhance the performance and generalization capabilities of semantic segmentation models. The combination of synthetic and real-world data allows the models to learn from a more comprehensive and diverse set of examples, enabling them to accurately classify objects in various challenging scenarios.

Advantages of Using Synthetic Data:

- Cost-effectiveness: Synthetic data generation is a more affordable alternative to manually collecting and labeling large quantities of real-world images.

- Diverse and large-scale data: Open-source driving simulators enable the generation of diverse datasets on a large scale, providing a wide range of examples for training semantic segmentation models.

- Flexibility in data augmentation: Synthetic data allows for the generation of data with specific characteristics or variations that may be challenging to capture in real-world scenarios.

While synthetic data offers significant advantages, there are also challenges to consider. One of the main challenges is ensuring the photorealism of the synthetic images. It's important to evaluate the quality and realism of the generated synthetic data, as models trained solely on synthetic data may struggle to generalize to real-world scenarios that exhibit different characteristics.

Additionally, developing a pipeline for generating synthetic images and accessing assets from video games can be time-consuming and technically challenging. It requires expertise in computer graphics and simulation to create realistic and accurate synthetic datasets.

| Advantages of Synthetic Data | Challenges of Synthetic Data |

|---|---|

| 1. Cost-effective | 1. Ensuring photorealism |

| 2. Diverse and large-scale | 2. Technical challenges in pipeline development |

| 3. Flexibility in data augmentation | 3. Accessing assets from video games |

Despite the challenges, the integration of synthetic data with real-world datasets offers a promising avenue for improving the accuracy and performance of semantic segmentation models in the context of autonomous driving. As advancements in open-source driving simulators continue and techniques for generating high-quality synthetic data evolve, the use of synthetic data is expected to play a crucial role in the development of robust and reliable perception systems for autonomous vehicles.

Advantages and Challenges of Synthetic Data

Synthetic data offers numerous advantages in the context of training semantic segmentation models for autonomous driving. However, it also presents some challenges that need to be considered. In this section, we will explore the advantages and challenges of using synthetic data, highlighting its potential and limitations.

Advantages of Synthetic Data

There are several advantages to utilizing synthetic data in training semantic segmentation models:

- Cost-effectiveness: Synthetic data generation is a cost-effective alternative to collecting and annotating large volumes of real-world images. It allows us to create diverse datasets without incurring the high costs associated with data collection and annotation processes.

- Diverse data generation: Synthetic data provides the flexibility to generate diverse training datasets that encompass a wide range of environmental conditions. Researchers can modify parameters such as weather conditions, lighting, and object placement to create realistic but controlled scenarios.

- Ease of augmentation: Synthetic data can be easily augmented with real-world datasets to increase the size and diversity of the training data. This augmented dataset can enhance the performance and generalization capabilities of semantic segmentation models.

These advantages make synthetic data a valuable resource for training semantic segmentation models, especially in scenarios where real-world data may be limited or difficult to obtain.

Challenges of Synthetic Data

While synthetic data offers numerous advantages, it also presents several challenges that need to be addressed:

- Photorealism: Synthetic images may not always be entirely photorealistic, which can limit their usefulness for training semantic segmentation models. The lack of photorealism can affect the model's ability to accurately generalize to real-world scenarios.

- Time-consuming pipeline development: Developing a pipeline for generating high-quality synthetic images can be a time-consuming process. It requires careful consideration of various factors such as object placement, lighting conditions, and realistic textures.

- Access to assets: Obtaining high-quality assets, such as 3D models and textures, from video games or other sources can pose challenges. It may require extensive research and negotiation to gain access to the necessary assets for generating realistic synthetic images.

Despite these challenges, the use of synthetic data, particularly in combination with real-world datasets, holds immense potential for improving the performance of semantic segmentation models in autonomous driving.

Experimental Results with Synthetic Data

When it comes to enhancing the accuracy of semantic segmentation in autonomous driving, experimental results have shown that synthetic data augmented with real-world datasets can be a game-changer. Deep neural network (DNN) models trained on these augmented datasets consistently outperform models trained exclusively on real-world images.

Integrating synthetic data from open-source driving simulators like CARLA proves to be a promising approach in enhancing the quality of semantic segmentation in autonomous driving scenes. By leveraging the diverse and large-scale synthetic datasets generated by these simulators, we can provide the models with additional examples that closely resemble real-world environments, improving their ability to accurately classify objects.

Future Directions and Applications

The proposed approach in semantic segmentation for autonomous driving opens up exciting possibilities for future research and development. We envision a range of advancements that can further enhance the safety, efficiency, and versatility of autonomous driving technology. Here are some key future directions and potential applications:

Exploring More Advanced Attention Mechanisms

Attention mechanisms play a crucial role in improving the accuracy of semantic segmentation models. To push the boundaries of autonomous driving technology, we can delve deeper into the development of more advanced attention mechanisms. By incorporating cutting-edge techniques such as self-attention and transformer-based architectures, we can optimize the model's ability to focus on relevant details and handle complex driving scenarios with even greater precision.

Optimizing the Use of Synthetic Data

Synthetic data has shown promise in addressing the scarcity of labeled real-world images for training semantic segmentation models. To harness the full potential of synthetic data, further optimization is necessary. This includes refining the generation process to improve the photorealism of synthetic images and ensuring that the synthetic data accurately represents the diverse range of real-world driving environments. By fine-tuning the integration of synthetic data, we can enhance the performance and robustness of semantic segmentation models in various scenarios.

Expanding the Applicability of the Method

While semantic segmentation plays a crucial role in enhancing the perception system of autonomous driving, its potential is not limited to this specific task. We can explore the broader applicability of the proposed method to other perception tasks in autonomous driving systems. By adapting and extending the approach, we can improve object detection, instance segmentation, and other critical tasks that contribute to a comprehensive perception system. This will pave the way for safer and more reliable autonomous vehicles in a wide range of driving environments, including urban areas, highways, and off-road terrains.

Applications in Autonomous Driving Technology

The improved accuracy of semantic segmentation has significant implications for the future of autonomous driving technology. By effectively classifying objects at the pixel level, autonomous vehicles can make more informed decisions and navigate complex driving scenarios with enhanced safety and efficiency. Here are some key applications:

- Traffic Management: Accurate semantic segmentation enables autonomous vehicles to understand and respond to traffic conditions in real-time. This can lead to improved traffic flow, reduced congestion, and enhanced overall transportation efficiency.

- Pedestrian Safety: Semantic segmentation can help autonomous vehicles detect and track pedestrians, ensuring their safety in both urban and suburban environments. This technology has the potential to significantly reduce pedestrian accidents and improve the overall safety of autonomous driving.

- Environmental Adaptation: With precise semantic segmentation, autonomous vehicles can adapt to diverse driving environments, including changing weather conditions, varying road surfaces, and complex urban landscapes. This adaptability enhances the overall performance and reliability of autonomous driving systems.

In summary, the future of semantic segmentation in autonomous driving holds immense potential. By exploring advanced attention mechanisms, optimizing synthetic data usage, and expanding the applicability of our method to various perception tasks, we can continue to push the boundaries of autonomous driving technology. The improved accuracy and reliability of semantic segmentation contribute to safer and more efficient autonomous vehicles, revolutionizing transportation in the years to come.

Conclusion

In conclusion, semantic segmentation plays a vital role in enhancing the perception system of autonomous driving. By accurately classifying objects at the pixel level, semantic segmentation enables autonomous vehicles to navigate safely and efficiently. Addressing the challenges of variations and anomalies in hazardous scenarios is crucial for ensuring the safety of both passengers and pedestrians.

Integrating attention mechanisms, such as scale attention, spatial attention, and channel attention, enhances the accuracy and robustness of semantic segmentation models. These attention mechanisms enable the models to focus on the most relevant information and overcome challenges such as occlusions and variations in object size and shape.

Furthermore, the use of synthetic data from open-source driving simulators like CARLA offers a cost-effective solution for training semantic segmentation models. Augmenting real-world datasets with synthetic data improves the performance and accuracy of the models, providing a more comprehensive understanding of autonomous driving scenes.

The proposed multi-scale adaptive attention mechanism demonstrates superior performance compared to state-of-the-art techniques. It effectively combines multiple scale features and adaptively selects the most relevant information for precise semantic segmentation. This advancement in semantic segmentation technology contributes to the development of safer and more efficient autonomous driving systems, making autonomous vehicles more reliable and trustworthy. With further research and development, semantic segmentation will continue to pave the way for the widespread adoption of autonomous driving technology in the future.

FAQ

What is semantic segmentation in the context of autonomous driving?

Semantic segmentation is a computer vision technique used in autonomous driving to classify objects at the pixel level. It plays a crucial role in accurately identifying and understanding the road scene, enabling safe navigation for autonomous vehicles.

What challenges does semantic segmentation face in the context of autonomous driving?

Semantic segmentation models trained on large annotated datasets have limitations in classifying objects that deviate from conventional categories. In hazardous driving scenarios, variations and anomalies pose safety risks, making it challenging to develop models that can adapt to dynamic environments.

How do human attention mechanisms influence semantic segmentation in autonomous driving?

Human attention mechanisms allow us to selectively focus on specific stimuli and filter out irrelevant information. By analyzing feature attributes associated with variations and anomalies and aligning them with attention mechanisms, the accuracy of semantic segmentation models can be enhanced.

How does the scale attention mechanism improve semantic segmentation in autonomous driving?

The scale attention mechanism operates over multiple image scales within the network architecture, addressing the challenge of recognizing objects at different resolutions. By integrating results from different scales, it provides more reliable information for accurate semantic segmentation in autonomous driving scenarios.

What is the significance of integrating spatial and channel attention in semantic segmentation?

Spatial attention helps the model understand the relative positions and importance of objects, while channel attention prioritizes key appearance features for identifying specific objects. By combining spatial and channel attention, semantic segmentation models can handle diverse object characteristics and improve performance.

What is the Multi-Scale Adaptive Attention Mechanism (MSAAM) in semantic segmentation?

The MSAAM integrates multiple scale features and adaptively selects the most relevant features for precise semantic segmentation. It incorporates spatial, channel-wise, and scale-wise attention mechanisms, enhancing the discriminative power of features and outperforming state-of-the-art techniques in terms of performance.

How does synthetic data contribute to improving semantic segmentation in autonomous driving?

Synthetic data, generated using driving simulators like CARLA, offers cost-effective means to generate diverse and large-scale datasets resembling real-world environments. By augmenting real-world datasets with synthetic data, the accuracy of semantic segmentation models can be improved.

What are the advantages and challenges of using synthetic data for semantic segmentation?

Synthetic data is cost-effective, allows for diversity, and can be easily augmented. However, it may not be entirely photorealistic, limiting its value for training. Generating synthetic images can be time-consuming, and accessing assets from video games can be challenging. The quality of synthetic data should be carefully evaluated for its suitability in training semantic segmentation models.

What are the future directions and applications of semantic segmentation in autonomous driving?

Future directions include exploring advanced attention mechanisms, optimizing the use of synthetic data, and expanding the applicability of the method to other perception tasks in autonomous driving systems. Semantic segmentation advancements contribute to safer and more efficient autonomous driving in various scenarios.