Data Augmentation Strategies: Scaling a Dataset Without Additional Annotations

Data augmentation is a technique that artificially expands datasets and creates different variations of samples without the need for additional annotations.

In deep learning, data augmentation increases the amount of training data, acts as a regularizer that limits how closely the model can fit the quirks of the training set, and improves performance. Well-chosen augmentation strategies can improve both the accuracy and the robustness of the AI model.

Data augmentation is widely used in healthcare, where collecting more data is time-consuming and expensive and requires access to patients and skilled professionals. With data augmentation, it is possible to scale datasets without additional annotation effort.

Quick Take

- Data augmentation improves AI model performance.

- It expands the dataset without requiring additional annotations.

- Augmentation methods vary depending on the data type (image, text, audio).

- Data augmentation helps address class imbalance and poor generalization.

- Used correctly, it can help save on data collection costs.

Understanding Data Augmentation

Data augmentation is a method of artificially increasing the size of a training dataset by creating new examples based on existing data. This improves the overall quality of training machine learning models, especially when the initial dataset is small or unbalanced.

Benefits of data augmentation:

- Reduces overfitting and increases the robustness of an AI model.

- Improves generalization of the model to unseen data.

- Helps correct unbalanced datasets by generating more examples of underrepresented classes.

- Reduces the cost of data collection and labeling.

Machine learning applications

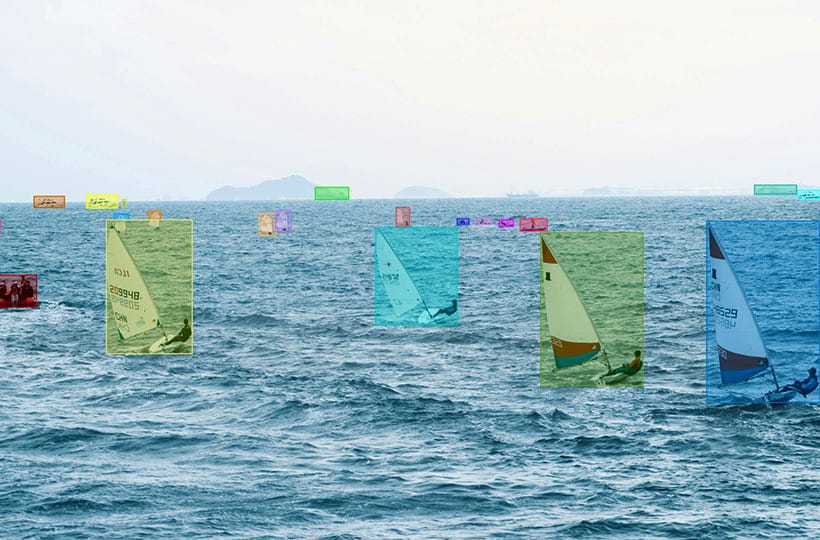

- Computer vision tasks such as object detection and image classification.

- Natural language processing.

- Healthcare visualization.

- Autonomous driving simulations.

Data augmentation is a primary tool for scaling datasets without additional annotations, improving AI models' performance.

Data Augmentation Methods

- Image transformations. Geometric transformations such as horizontal and vertical mirroring, rotation by a random angle, scaling, and cropping change spatial characteristics. Photometric transformations such as changing brightness, contrast, saturation, and hue, adding random noise, and blurring adjust visual appearance. These methods can improve object recognition accuracy.

- Text data augmentation includes synonym substitution and back translation (translating a sentence into another language and back again). Random word substitution produces new variants of a sentence. These methods improve the performance of text models on unseen data.

- Audio data augmentation uses time-domain and frequency-domain methods. Speech rate adjustment and pitch shifting help models adapt to different speaking rates and voice pitches, making them more robust in real-world tasks.

Image Data Augmentation Strategies

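Image data augmentation expands image datasets for machine learning models by applying transformations that increase their size and diversity. Because the label of a transformed image can be carried over from the original (or, for bounding boxes, recomputed from the transformation), no additional annotation effort is required.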
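The geometric and photometric transformations described above can be sketched with NumPy alone. This is a minimal illustration under stated assumptions: the function name augment_image, the parameter ranges, and the pad-and-crop variant of random cropping are choices made for this example, not a standard API. Arbitrary-angle rotation, scaling, and hue or saturation changes need interpolation or color-space conversion and are usually taken from an imaging library such as torchvision or Albumentations.

```python
import numpy as np


def augment_image(image: np.ndarray, rng: np.random.Generator,
                  pad: int = 4, noise_std: float = 5.0) -> np.ndarray:
    """Return a randomly transformed copy of an H x W x C uint8 image.

    Parameter ranges are illustrative assumptions; tune them per dataset.
    """
    out = image.astype(np.float32)
    h, w = out.shape[:2]

    # Geometric: horizontal mirroring with probability 0.5.
    if rng.random() < 0.5:
        out = out[:, ::-1, :]

    # Geometric: reflect-pad by `pad` pixels, then crop back to H x W at a
    # random offset (shifts the content while keeping the original size).
    padded = np.pad(out, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    top = int(rng.integers(0, 2 * pad + 1))
    left = int(rng.integers(0, 2 * pad + 1))
    out = padded[top:top + h, left:left + w, :]

    # Photometric: random contrast around the mean, then a brightness offset.
    contrast = rng.uniform(0.8, 1.2)
    brightness = rng.uniform(-0.2, 0.2) * 255.0
    mean = out.mean()
    out = (out - mean) * contrast + mean + brightness

    # Photometric: additive Gaussian noise.
    out = out + rng.normal(0.0, noise_std, size=out.shape)

    # Clip back to the valid pixel range and restore the original dtype.
    return np.clip(out, 0, 255).astype(np.uint8)


# Usage: five new variants of one (random stand-in) image; each variant
# reuses the original label, so no extra annotation is needed.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32, 3)).astype(np.uint8)
variants = [augment_image(image, rng) for _ in range(5)]
```

Working in float32 and clipping only at the end avoids the wrap-around that uint8 arithmetic would cause when brightness or noise pushes values past 0 or 255.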

Text data augmentation strategies

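Text data augmentation improves natural language processing (NLP) models by exposing them to different wordings of the same content. Common strategies include synonym substitution, back translation, and random word substitution.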
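A minimal, dependency-free sketch of word-level text augmentation follows. It assumes a hypothetical toy synonym table (SYNONYMS); real pipelines usually draw synonyms from a lexical resource such as WordNet or from a library like NLPAug. Besides synonym substitution, it shows random swap and random deletion, two further word-level operations popularized by Easy Data Augmentation (EDA). Back translation is omitted because it needs a machine translation model.

```python
import random

# Hypothetical toy synonym table used only for illustration.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}


def synonym_substitution(words: list[str], n: int, rng: random.Random) -> list[str]:
    """Replace up to n words that have an entry in SYNONYMS."""
    out = list(words)
    candidates = [i for i, w in enumerate(out) if w.lower() in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        out[i] = rng.choice(SYNONYMS[out[i].lower()])
    return out


def random_swap(words: list[str], n: int, rng: random.Random) -> list[str]:
    """Swap two randomly chosen positions, n times."""
    out = list(words)
    if len(out) < 2:
        return out
    for _ in range(n):
        i, j = rng.sample(range(len(out)), 2)
        out[i], out[j] = out[j], out[i]
    return out


def random_deletion(words: list[str], p: float, rng: random.Random) -> list[str]:
    """Drop each word with probability p, always keeping at least one word."""
    if not words:
        return []
    kept = [w for w in words if rng.random() >= p]
    return kept if kept else [rng.choice(words)]


rng = random.Random(0)
sentence = "the quick dog looks happy".split()
augmented = [
    " ".join(synonym_substitution(sentence, 1, rng)),
    " ".join(random_swap(sentence, 1, rng)),
    " ".join(random_deletion(sentence, 0.2, rng)),
]
```

Each augmented sentence keeps the label of the original, which is what makes these operations annotation-free; aggressive swaps or deletions can, however, change the meaning, so small values of n and p are the usual starting point.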

Audio and Speech Augmentation

Audio augmentation helps build robust speech recognition and audio processing models and improves model performance across different speakers, recording devices, and noise conditions.
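The sketch below shows three simple time-domain audio augmentations with NumPy: adding background noise at a chosen signal-to-noise ratio, shifting the clip in time, and changing its speed. The function names are hypothetical, and the speed change is a naive resampling that alters tempo and pitch together; it is not a pitch-preserving time stretch.

```python
import numpy as np


def add_noise(signal: np.ndarray, snr_db: float, rng: np.random.Generator) -> np.ndarray:
    """Add white Gaussian noise at a target signal-to-noise ratio in dB."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise


def time_shift(signal: np.ndarray, max_shift: int, rng: np.random.Generator) -> np.ndarray:
    """Circularly shift the waveform by up to max_shift samples either way."""
    shift = int(rng.integers(-max_shift, max_shift + 1))
    return np.roll(signal, shift)


def change_speed(signal: np.ndarray, rate: float) -> np.ndarray:
    """Resample by linear interpolation; rate > 1 gives a shorter, faster clip.

    Like playing a tape faster, this changes tempo and pitch together.
    """
    n_out = int(round(len(signal) / rate))
    old_positions = np.arange(len(signal))
    new_positions = np.linspace(0, len(signal) - 1, n_out)
    return np.interp(new_positions, old_positions, signal)


# Usage on a synthetic one-second 440 Hz tone sampled at 16 kHz.
rng = np.random.default_rng(0)
sr = 16_000
tone = 0.5 * np.sin(2 * np.pi * 440 * np.arange(sr) / sr)
noisy = add_noise(tone, snr_db=20, rng=rng)
shifted = time_shift(tone, max_shift=sr // 10, rng=rng)
faster = change_speed(tone, rate=1.25)
```

For pitch-preserving time stretching and for pitch shifting on their own, phase-vocoder implementations such as librosa.effects.time_stretch and librosa.effects.pitch_shift are commonly used instead of the naive resampling above.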

Building an Augmentation Pipeline

Integrating data augmentation into your machine learning workflow requires planning. First, determine the transformation techniques for your dataset. These depend on the data type (image, audio, or text). For images, consider flipping or rotating; for text, use synonym substitution; for audio, add noise or change the playback speed.
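One way to structure such a pipeline is as an ordered list of transforms, each applied with some probability, run only on the training split. The sketch below assumes hypothetical names (AugmentationPipeline, augment_dataset, and two toy audio transforms operating on plain lists of samples); in practice each step would be one of the data-type-specific transformations chosen above.

```python
import random
from typing import Any, Callable, Optional, Sequence

# A transform takes a sample and a random generator and returns a new sample.
Transform = Callable[[Any, random.Random], Any]


class AugmentationPipeline:
    """Apply each (transform, probability) step in order."""

    def __init__(self, steps: Sequence[tuple[Transform, float]], seed: Optional[int] = None):
        self.steps = list(steps)
        self.rng = random.Random(seed)

    def __call__(self, sample: Any) -> Any:
        for transform, p in self.steps:
            if self.rng.random() < p:
                sample = transform(sample, self.rng)
        return sample


def augment_dataset(samples, labels, pipeline, copies):
    """Return the originals plus `copies` augmented variants of each sample.

    Labels are copied unchanged, which is why no new annotation is needed.
    Apply this to the training split only; keep validation data untouched.
    """
    out_x, out_y = list(samples), list(labels)
    for x, y in zip(samples, labels):
        for _ in range(copies):
            out_x.append(pipeline(x))
            out_y.append(y)
    return out_x, out_y


# Hypothetical toy audio transforms on a list of float samples.
def jitter(signal, rng):
    return [s + rng.gauss(0.0, 0.01) for s in signal]


def gain(signal, rng):
    g = rng.uniform(0.8, 1.2)
    return [s * g for s in signal]


audio_pipeline = AugmentationPipeline([(jitter, 0.8), (gain, 0.5)], seed=0)
X, y = augment_dataset([[0.0, 0.5, -0.5]], ["yes"], audio_pipeline, copies=3)
```

Keeping the pipeline as data (a list of steps with probabilities) makes it easy to swap transforms per data type and to log exactly which configuration produced a given model.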

Testing helps maintain data integrity. Evaluate augmented samples to confirm that they remain relevant and realistic. Compare a portion of the original data with its augmented versions, and check for distortions that could hurt the AI model's training.
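A cheap automated check can complement visual inspection. The sketch below is an assumption-laden heuristic for images, not an established standard: it flags augmented versions whose global brightness drifts too far from the original or whose contrast collapses (for example, an image washed out by an extreme brightness shift). Because it compares global statistics rather than pixel positions, flips and small crops are not penalized. The function name and both thresholds are hypothetical and would need tuning on real data.

```python
import numpy as np


def flag_distorted(original: np.ndarray, augmented: list[np.ndarray],
                   max_mean_shift: float = 40.0,
                   min_std_ratio: float = 0.5) -> list[int]:
    """Return indices of augmented images that fail simple sanity checks.

    - mean intensity differs from the original by more than max_mean_shift
    - pixel standard deviation falls below min_std_ratio * original std
    Thresholds are illustrative assumptions for 8-bit images.
    """
    ref = original.astype(np.float32)
    ref_mean, ref_std = ref.mean(), ref.std()
    flagged = []
    for i, aug in enumerate(augmented):
        a = aug.astype(np.float32)
        too_shifted = abs(a.mean() - ref_mean) > max_mean_shift
        washed_out = a.std() < min_std_ratio * ref_std
        if too_shifted or washed_out:
            flagged.append(i)
    return flagged


rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(32, 32, 3)).astype(np.uint8)
candidates = [
    original[:, ::-1, :],          # horizontal flip: should pass
    np.full_like(original, 255),   # fully washed out: should be flagged
]
flagged = flag_distorted(original, candidates)  # → [1]
```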

Monitor metrics to assess the effectiveness of your augmentation pipeline. Effective data augmentation should increase the AI model's accuracy on held-out, non-augmented validation data and reduce overfitting, so compare results against a baseline trained without augmentation.
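The comparison against a baseline can be reduced to a simple decision rule. The sketch below assumes hypothetical names (RunMetrics, augmentation_helped) and one possible rule: augmentation is kept only if it raises validation accuracy without widening the gap between training and validation accuracy, which serves as a rough overfitting signal.

```python
from dataclasses import dataclass


@dataclass
class RunMetrics:
    train_acc: float
    val_acc: float  # measured on original, non-augmented validation data

    @property
    def gap(self) -> float:
        """Train/validation gap; a large positive value suggests overfitting."""
        return self.train_acc - self.val_acc


def augmentation_helped(baseline: RunMetrics, augmented: RunMetrics,
                        min_gain: float = 0.0) -> bool:
    """True if validation accuracy improved by more than min_gain and the
    generalization gap did not widen."""
    gained = augmented.val_acc - baseline.val_acc > min_gain
    return gained and augmented.gap <= baseline.gap
```

For the comparison to be meaningful, both runs should share the same model, training budget, and validation split, so that augmentation is the only variable; repeating each run with several random seeds makes the result less noisy.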

Data augmentation problems

- Low-quality synthetic data harms an AI model when transformations alter the meaning or semantics of the original examples. For example, rotating an image of the digit 6 by 180 degrees turns it into a 9 while the label still says 6.

- Excessive or uneven augmentation leads to a skewed sample, where some data variations appear more often than others. This leads to data imbalance and reduces generalizability.

- Task-domain mismatch: not every method works equally well across domains. For example, adding noise may benefit a model that recognizes human speech but can harm a medical audio diagnostic system, where subtle acoustic cues matter.
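To avoid the skew described above, the number of synthetic copies can be planned per class and spread evenly across the originals. The sketch below is one possible scheme (the function name augmentation_plan is hypothetical): it tops every class up to the size of the largest class, so minority classes receive more augmented samples while no single original is copied much more often than the others in its class.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def augmentation_plan(labels: Sequence[Hashable],
                      target: Optional[int] = None) -> dict[int, int]:
    """Map each sample index to the number of augmented copies to create.

    Every class is topped up to `target` samples (default: the size of the
    largest class). Within a class, copies are spread as evenly as possible.
    """
    counts = Counter(labels)
    if target is None:
        target = max(counts.values())
    by_class: dict[Hashable, list[int]] = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)

    plan = {}
    for indices in by_class.values():
        needed = max(0, target - len(indices))
        base, extra = divmod(needed, len(indices))
        for k, i in enumerate(indices):
            # The first `extra` samples of the class get one extra copy.
            plan[i] = base + (1 if k < extra else 0)
    return plan


labels = ["cat"] * 5 + ["dog"] * 2
plan = augmentation_plan(labels)
```

Here the two "dog" samples receive three copies between them, bringing both classes to five samples, while the majority "cat" class is left unaugmented.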

Tools and frameworks for data augmentation

- One well-known framework is NLPAug. It is a Python tool that supports various augmentation methods, from simple synonym substitution to complex transformations using deep models. It integrates with other NLP libraries and combines different types of augmentations.

- TextAttack is a library designed to create, test, and improve NLP models through synthetic example generation. It contains a set of transformations that swap words, insert tokens, or delete them, which test the model's robustness to such changes.

- Hugging Face Datasets is a library for loading and processing datasets; its map method can apply augmentation functions and store the new text variants alongside the originals.

- Snorkel allows you to create weakly labeled datasets using programmatic labeling functions.

Future directions in data augmentation

One direction is adaptive augmentation, where the system selects or creates new data based on the weaknesses of the AI model. This allows the model to be trained with fewer examples.
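A simple way to illustrate the idea is to divide a fixed augmentation budget among classes in proportion to each class's validation error, so the classes the model gets wrong most often receive the most new examples. This is a toy allocation rule under that assumption, not a specific published adaptive-augmentation method; the function name allocate_budget and the error figures in the usage line are hypothetical.

```python
def allocate_budget(per_class_error: dict[str, float], budget: int) -> dict[str, int]:
    """Split `budget` new samples across classes in proportion to their
    validation error rates, using largest-remainder rounding so the
    allocations sum exactly to `budget`."""
    classes = list(per_class_error)
    total = sum(per_class_error.values())
    if total == 0:
        # No measured weakness: fall back to an even split.
        weights = {c: 1.0 for c in classes}
        total = float(len(classes))
    else:
        weights = per_class_error
    raw = {c: budget * weights[c] / total for c in classes}
    alloc = {c: int(raw[c]) for c in classes}
    leftover = budget - sum(alloc.values())
    # Give the remaining units to the classes with the largest fractional parts.
    by_remainder = sorted(classes, key=lambda c: raw[c] - alloc[c], reverse=True)
    for c in by_remainder[:leftover]:
        alloc[c] += 1
    return alloc


# Hypothetical per-class error rates from a validation run.
allocation = allocate_budget({"cat": 0.05, "dog": 0.15, "bird": 0.30}, budget=1000)
```

In a real adaptive loop, the error rates would be re-measured after each training round and the budget re-allocated, so augmentation keeps following the model's current weaknesses.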

Cross-modal augmentation, which combines multiple data types (e.g., text + image or text + audio), is gaining significant traction, generating multimodal examples that reflect the real world. This is needed for tasks such as visual question answering (VQA) and multimodal sentiment analysis.

There is also a rise in personalized augmentation, where synthetic examples are generated based on a specific user, cultural context, or application. This is used in education, medical applications, or dialect-specific speech recognition.

FAQ

What is data augmentation, and why is it important?

Data augmentation is the process of expanding a dataset by creating new examples based on existing ones. It improves the accuracy of AI models, reduces overfitting, and helps work with limited or unbalanced data.

What are the methods for augmenting image data?

These include rotation, cropping, mirroring, brightness adjustment, scaling, and adding noise.

What are the strategies for augmenting audio data?

Audio augmentation includes time stretching, pitch shifting, and adding background noise.

What are the challenges with data augmentation?

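Transformations that are too aggressive can change the meaning of a sample or introduce artifacts, while excessive or uneven augmentation can skew the data toward some variations and reduce its real diversity, so the model may still overfit. It is important to find a balance and regularly evaluate the quality and relevance of the augmented data.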

Are there any tools or frameworks designed for data augmentation?

Yes. NLPAug and TextAttack provide ready-made text augmentation methods, and Hugging Face Datasets can store and process augmented examples alongside the original data. All three are Python libraries.