Bridging Data Science and Machine Learning: Projects to Explore

Did you know that GitHub hosts over 100,000 data science and machine learning projects?

For those excited about data science and machine learning, GitHub is a goldmine. It's filled with groundbreaking projects. Exploring these can improve your skills and give you hands-on experience.

This article surveys data science and machine learning projects you can find on GitHub. It's aimed at students, professionals, and anyone intrigued by data and algorithms. The insights and resources from these projects can be quite valuable. So, let's dive in!

Key Takeaways:

- GitHub repositories offer a wide range of advanced machine learning projects.

- Exploring these projects can enhance your skills and provide practical experience.

- The eight projects covered here span a variety of topics, techniques, and applications.

- These projects contribute to the latest developments in data science and machine learning.

- GitHub repositories provide a vibrant community and valuable resources for learning and collaboration.

Exploring the Enron Email Dataset

The Enron Email Dataset project delves into a vast collection of company emails from the Enron Corporation, known for one of the most notorious corporate fraud scandals. The project aims to identify patterns and classify emails as legitimate or fraud-related. It requires data preprocessing, exploratory data analysis, and statistical analysis. Related repositories on GitHub offer tools and knowledge for researchers.

For instance, the Athena repository is a standout. It offers a rich set of tools for digging into the dataset. There, you'll find aids for getting the data ready, exploring it closely, and employing advanced statistical methods. Using this repository, data scientists and analysts enhance their understanding of fraud detection, benefiting from collective insights.

The Enron Email Dataset

This dataset is a gold mine for examining corporate fraud and crafting new fraud-detection algorithms. It holds over 500,000 emails exchanged among Enron's staff, painting a detailed picture of their internal conversations. This includes messages from all company sectors, showcasing a wide view of Enron’s communications.

| Data Preprocessing | Exploratory Data Analysis | Statistical Analysis |
|---|---|---|
| Data cleaning and transformation | Identifying patterns in email content and metadata | Measuring email frequency and response times |
| Removing duplicates and irrelevant information | Visualizing email networks and social relationships | Exploring email timestamps and distribution |
| Tokenizing and normalizing text data | Analyzing sentiment and tone of emails | Examining email length and word count |
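
The preprocessing steps in the table can be sketched with Python's standard library. This is a minimal illustration, not the method of any particular repository: the raw messages, addresses, and helper names (`parse_email`, `tokenize`, `deduplicate`) are hypothetical, and the real corpus would be read from files rather than from an inline list.

```python
import re
from email import message_from_string

# Hypothetical raw messages in RFC 822 style; the real corpus would be read from disk.
RAW_EMAILS = [
    "From: alice@example.com\nTo: bob@example.com\nSubject: Q3 numbers\n\nPlease review the Q3 numbers today.",
    "From: alice@example.com\nTo: bob@example.com\nSubject: Q3 numbers\n\nPlease review the Q3 numbers today.",
    "From: carol@example.com\nTo: alice@example.com\nSubject: Lunch\n\nLunch at noon?",
]


def parse_email(raw):
    """Split a raw message into the header fields and body used for analysis."""
    msg = message_from_string(raw)
    return {
        "sender": msg["From"],
        "recipient": msg["To"],
        "subject": msg["Subject"],
        "body": msg.get_payload(),
    }


def tokenize(text):
    """Lowercase the text and keep runs of letters, digits, and apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())


def deduplicate(records):
    """Drop messages whose sender, recipient, subject, and body all repeat."""
    seen = set()
    unique = []
    for rec in records:
        key = (rec["sender"], rec["recipient"], rec["subject"], rec["body"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


emails = deduplicate([parse_email(raw) for raw in RAW_EMAILS])
tokens = [tokenize(e["body"]) for e in emails]
word_counts = [len(t) for t in tokens]  # per-email word count, as in the table's last row
```

The token lists and word counts produced here are the kind of inputs that the exploratory and statistical columns of the table build on.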

GitHub repositories support applying state-of-the-art data mining methods to this dataset. They offer code snippets, libraries, and instructions that help researchers and developers get started.

Predicting Housing Prices with Machine Learning

This project uses predictive modeling with machine learning to forecast housing prices. The aim is to build a model that accurately estimates a property's value from attributes such as lot size, building type, and the year it was built. Such predictions help buyers and sellers make well-informed decisions and arrive at realistic market valuations.

Data preprocessing is pivotal in this approach. It involves cleaning and transforming the dataset into a form that machine learning algorithms can use. Essential tasks include handling missing data, encoding categorical variables, and normalizing numerical attributes. This phase removes irregularities and improves the prediction model's accuracy.
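
The three preprocessing tasks can be sketched in plain Python as follows. The listings and column names are made up for illustration, and median imputation and min-max scaling are just one reasonable choice for each task; in practice, libraries such as pandas and scikit-learn provide equivalent, more robust tools.

```python
from statistics import median

# Hypothetical listings; None marks a missing value.
listings = [
    {"lot_size": 8000.0, "building_type": "house"},
    {"lot_size": None, "building_type": "condo"},
    {"lot_size": 4000.0, "building_type": "house"},
    {"lot_size": 6000.0, "building_type": "townhouse"},
]


def impute_median(rows, column):
    """Replace missing values in a numeric column with the column median."""
    fill = median(r[column] for r in rows if r[column] is not None)
    return [{**r, column: fill if r[column] is None else r[column]} for r in rows]


def one_hot(rows, column):
    """Replace a categorical column with one 0/1 indicator column per category."""
    categories = sorted({r[column] for r in rows})
    encoded = []
    for r in rows:
        new_row = {k: v for k, v in r.items() if k != column}
        for cat in categories:
            new_row[f"{column}_{cat}"] = 1 if r[column] == cat else 0
        encoded.append(new_row)
    return encoded


def min_max_scale(rows, column):
    """Rescale a numeric column to the range [0, 1]."""
    values = [r[column] for r in rows]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero for constant columns
    return [{**r, column: (r[column] - lo) / span} for r in rows]


clean = impute_median(listings, "lot_size")
clean = one_hot(clean, "building_type")
clean = min_max_scale(clean, "lot_size")
```

After these steps every row is fully numeric, which is what most regression models expect as input.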

Feature engineering is equally important. It means creating new attributes or transforming existing ones to expose more useful signal, for instance deriving a property's age from its year of construction and the current year. Well-chosen features improve model performance by making important patterns in the data easier to learn.
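
The property-age example might look like this. The column names `year_built` and `age` and the helper `add_property_age` are assumptions made for the sketch.

```python
from datetime import date


def add_property_age(row, current_year=None):
    """Return a copy of the row with an 'age' feature derived from 'year_built'."""
    if current_year is None:
        current_year = date.today().year
    return {**row, "age": current_year - row["year_built"]}


# Passing the year explicitly keeps the result reproducible.
example = add_property_age({"year_built": 1995, "lot_size": 6000}, current_year=2024)
```

Fixing `current_year` when building a training set is a deliberate choice here: it keeps the feature stable if the same data is reprocessed in a later year.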

Choosing the right model from the range of machine learning algorithms is fundamental. Options include linear regression, decision trees, and random forests. Each has its own strengths and weaknesses, so candidates should be compared on held-out data to find the one best suited to the dataset.
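
One simple way to compare candidates is to fit each on training data and score it on a held-out set. The sketch below does this for a single-feature linear regression against a mean-only baseline, using root mean squared error. The data is invented, and the baseline stands in for the tree-based models mentioned above only to keep the example dependency-free; the comparison loop itself would work the same way with any model.

```python
from math import sqrt

# Hypothetical (lot size in thousands of sq ft, price in thousands of dollars) pairs.
train = [(2.0, 210.0), (3.0, 290.0), (4.0, 410.0), (5.0, 490.0), (6.0, 610.0)]
test = [(2.5, 255.0), (4.5, 445.0), (5.5, 560.0)]


def fit_mean(data):
    """Baseline model: always predict the mean training price."""
    mean_y = sum(y for _, y in data) / len(data)
    return lambda x: mean_y


def fit_linear(data):
    """Ordinary least squares with a single feature."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    sxx = sum((x - mean_x) ** 2 for x, _ in data)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def rmse(model, data):
    """Root mean squared error of a model's predictions on (x, y) pairs."""
    return sqrt(sum((model(x) - y) ** 2 for x, y in data) / len(data))


candidates = {"mean baseline": fit_mean(train), "linear regression": fit_linear(train)}
scores = {name: rmse(model, test) for name, model in candidates.items()}
best = min(scores, key=scores.get)  # lowest held-out error wins
```

Scoring on data the models never saw during fitting is what makes the comparison meaningful; scoring on the training set would favour whichever model memorizes best.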

Platforms such as GitHub hold a wealth of project-related material, including tutorials and practical examples on data cleaning, feature engineering, and model selection. For newcomers to data science and machine learning, these repositories are a practical aid to implementing the project.

Identifying Fraudulent Credit Card Transactions

The aim is to create a model that pinpoints deceitful credit card spending. As fraud rises, protecting consumers' money is vital. Businesses need strong tools to catch these activities.

The project starts with exploring the dataset in detail, which helps distinguish fraudulent transactions from legitimate ones. Next, the data is cleaned to make it ready for model training.

- We explore the dataset thoroughly for patterns that may hint at fraud.

- The dataset goes through cleaning to prep it for model learning.

- Models such as logistic regression and neural networks are trained to detect fraud accurately.

A model that catches fraud without flagging legitimate customers is essential. Tuning the model, for example by adjusting its decision threshold, is key to reducing false alerts while still catching most fraud.
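
The trade-off between catching fraud and raising false alerts can be made concrete by measuring precision and recall at different decision thresholds. The scores and labels below are invented for illustration; in a real project they would come from a trained model's predicted probabilities on held-out transactions.

```python
# Hypothetical model scores (estimated fraud probability) paired with true labels; 1 = fraud.
scored = [
    (0.95, 1), (0.80, 1), (0.70, 0), (0.60, 1),
    (0.40, 0), (0.30, 0), (0.20, 0), (0.10, 0),
]


def precision_recall(scored_labels, threshold):
    """Flag transactions scoring at or above the threshold, then evaluate the flags."""
    tp = sum(1 for s, y in scored_labels if s >= threshold and y == 1)
    fp = sum(1 for s, y in scored_labels if s >= threshold and y == 0)
    fn = sum(1 for s, y in scored_labels if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


low = precision_recall(scored, 0.5)    # catches every fraud but raises one false alert
high = precision_recall(scored, 0.75)  # no false alerts, but one fraud slips through
```

Which threshold is preferable depends on the relative cost of a missed fraud versus an inconvenienced customer, which is a business decision rather than a purely technical one.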

Resources on GitHub can also make the project smoother, speeding up everything from finding patterns in the data to setting up models.

Example GitHub repositories for Fraud Detection

| Repository Name | Description |
|---|---|
| Fraud-Detection-Papers | Compiles papers on fraud that share insights on the latest fraud detection methods. |
| Fraud-Detection-Models | Holds ML models and techniques specifically for spotting credit card fraud. |
| Fraud-Detection-Tools | Lists resources that support building and evaluating fraud detection models. |

Image Classification with Convolutional Neural Networks

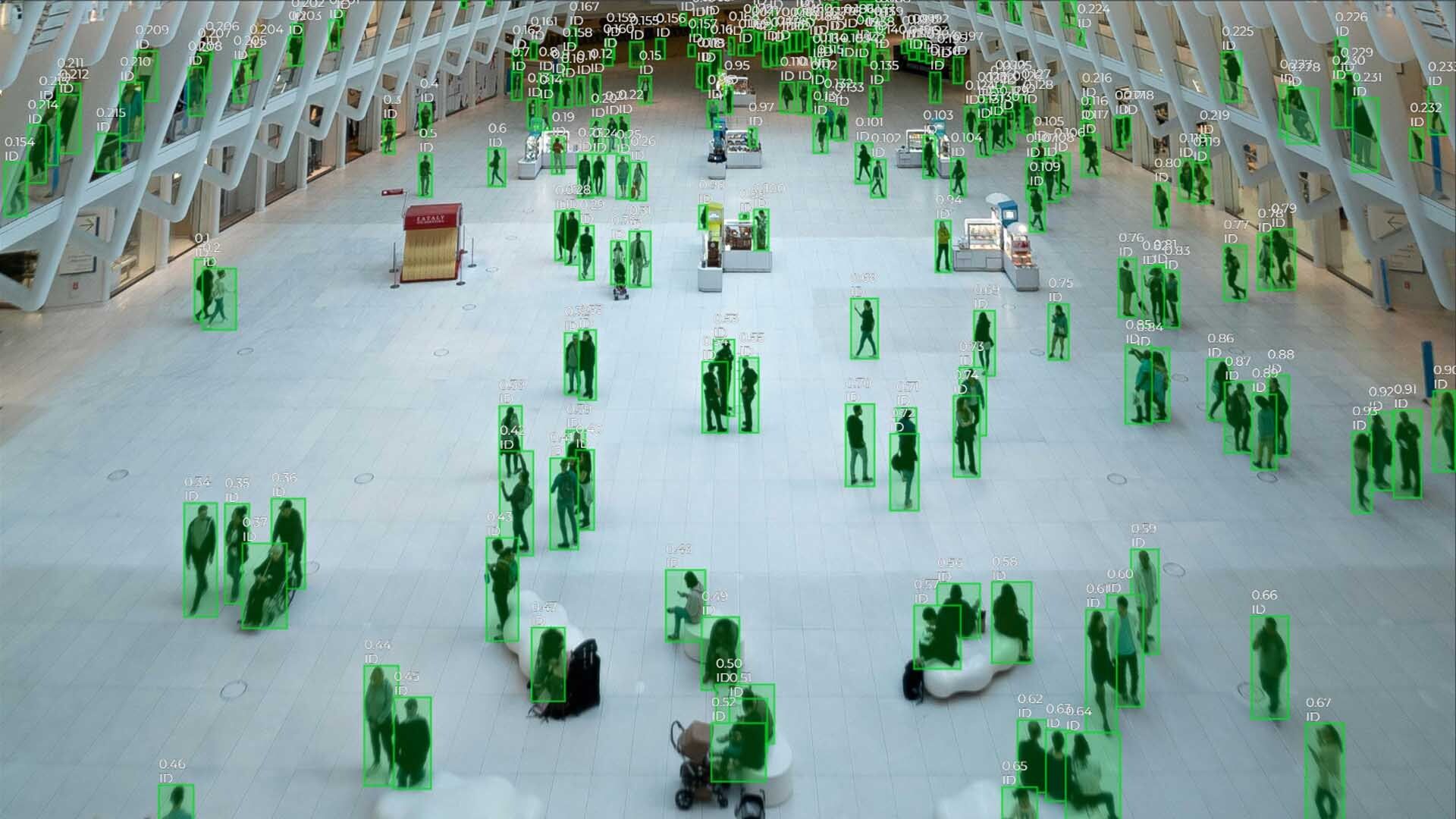

Image classification is key in computer vision, crucial in many areas like object recognition and autonomous driving. Convolutional neural networks (CNNs) are central to these tasks.

CNNs classify images by learning features directly from pixels. Convolutional layers slide small filters across the image to detect local patterns such as edges and textures, and fully connected layers then combine the detected features into a class prediction.
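
To show what a convolutional layer computes, here is a minimal pure-Python sketch of one filter applied to a tiny image, followed by a ReLU activation. The image and the hand-picked vertical-edge filter are illustrative assumptions; a real CNN learns its filter values during training and would use a framework rather than nested lists.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Zero out negative responses, keeping only positive activations."""
    return [[max(0, v) for v in row] for row in feature_map]


# A 4x4 image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(conv2d(image, kernel))
```

The resulting feature map is non-zero only in the middle column, where the filter window straddles the edge, which is the sense in which a filter "detects" a pattern.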

Preparing data is vital for successful image classification. This step includes resizing images to a common size and scaling pixel values. It also involves data augmentation, which expands the dataset with modified copies of existing images, making the model more accurate and less prone to overfitting.
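
These preparation steps can be sketched on a tiny grayscale image represented as nested lists. The helper names are hypothetical, and nearest-neighbour resizing and horizontal flipping are only two of many possible choices; image libraries offer higher-quality versions of both.

```python
def scale_pixels(image):
    """Map 8-bit pixel values (0-255) to floats in [0, 1]."""
    return [[p / 255 for p in row] for row in image]


def flip_horizontal(image):
    """Mirror the image left to right, a common augmentation."""
    return [row[::-1] for row in image]


def resize_nearest(image, new_h, new_w):
    """Resize by copying the nearest source pixel for each output position."""
    h, w = len(image), len(image[0])
    return [
        [image[i * h // new_h][j * w // new_w] for j in range(new_w)]
        for i in range(new_h)
    ]


image = [
    [0, 255],
    [51, 102],
]

resized = resize_nearest(image, 4, 4)
# The original plus its mirrored copy doubles the number of training examples.
augmented = [scale_pixels(image), scale_pixels(flip_horizontal(image))]
```

Whether a flip is a valid augmentation depends on the task: it is harmless for most natural photos but would change the meaning of, say, images of text.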

Model training then occurs, where the CNN refines its understanding. It's crucial to optimize settings like the learning rate to achieve the best results. This fine-tuning is essential for the model to perform at its peak.

Post-training, the model must be rigorously tested with a new set of data. This step uncovers the model's strengths and areas where it may fall short. Metrics like accuracy and F1 score help in this evaluation.

GitHub is a hub for tools and knowledge related to CNNs for image classification. It has pre-built models, datasets, and code. This serves the computer vision community, enabling better collaboration.

To put it simply, image classification with CNNs combines deep learning and vision in solving intricate tasks. It requires thorough data preparation, strategic model training, and utilizing community resources. These steps allow developers to create systems that categorize images accurately.

Sentiment Analysis on X Data

Studying emotions in X (Twitter) content is a captivating effort for data scientists. It sheds light on whether the text carries feelings of positivity, negativity, or neutrality. Such insights are crucial for understanding public views, feedback from customers, and perceptions of brands.

Through sentiment analysis, organizations can better shape their strategies, understand how consumers feel about their offerings, and make smarter choices. The work begins with data preparation, because X data is noisy: posts are full of hashtags, mentions, and URLs. Cleaning the data is vital for accurate analysis. This stage includes steps such as removing stop words and tokenizing the text.

Next comes feature extraction: deciding what to measure in the text. Common choices include word frequencies, sequences of adjacent words (n-grams), and lexicons of words that signal emotion. These features capture the content of each post and form the basis for the analysis.
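
Cleaning, tokenization, stop-word removal, and n-gram extraction can be sketched with the standard library alone. The sample post, the regular expressions, and the very short stop-word list are illustrative assumptions; real projects typically use a fuller stop-word list and a tokenizer designed for social media text.

```python
import re

# A tiny illustrative stop-word list; real projects use a much fuller one.
STOP_WORDS = {"the", "a", "an", "is", "to", "and", "of", "this", "so", "i"}


def clean_post(text):
    """Remove URLs and @mentions, keep the word part of hashtags, and lowercase."""
    text = re.sub(r"https?://\S+", " ", text)
    text = re.sub(r"@\w+", " ", text)
    text = text.replace("#", " ")
    return text.lower()


def tokenize(text):
    """Split cleaned text into word tokens and drop stop words."""
    return [t for t in re.findall(r"[a-z']+", text) if t not in STOP_WORDS]


def ngrams(tokens, n):
    """Return every run of n adjacent tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


post = "@acme I love the new phone! So fast #happy https://example.com/x"
tokens = tokenize(clean_post(post))
bigrams = ngrams(tokens, 2)
```

Keeping the word inside a hashtag (here `happy`) rather than deleting the whole tag is a design choice: hashtags often carry the clearest sentiment signal in a post.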

With the data ready, the core task of sentiment analysis begins: text classification. This means training a model to sort posts by their sentiment. Various models can do this, each with strengths that depend on the project's needs and the dataset's complexity.
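
As one example of such a model, the sketch below implements a small multinomial Naive Bayes classifier with add-one smoothing. Naive Bayes is chosen here only because it is compact enough to write from scratch; it is not necessarily the best model for the task, and the five training posts are invented and far too few for real use.

```python
from collections import Counter, defaultdict
from math import log


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, documents, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for tokens, label in zip(documents, labels):
            self.word_counts[label].update(tokens)
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, tokens):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, float("-inf")
        for label, n_docs in self.class_counts.items():
            total_words = sum(self.word_counts[label].values())
            score = log(n_docs / total_docs)  # log prior of the class
            for w in tokens:
                if w in self.vocab:  # ignore words never seen in training
                    count = self.word_counts[label][w]
                    score += log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical, already-tokenized training posts.
train_docs = [
    (["love", "great", "phone"], "positive"),
    (["great", "service", "happy"], "positive"),
    (["terrible", "battery", "sad"], "negative"),
    (["hate", "slow", "phone"], "negative"),
    (["phone", "arrived", "today"], "neutral"),
]

model = NaiveBayes().fit([doc for doc, _ in train_docs], [label for _, label in train_docs])
prediction = model.predict(["great", "happy", "phone"])
```

Working in log space avoids numerical underflow when many word probabilities are multiplied together, and the smoothing term keeps a single unseen word from forcing a class probability to zero.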

To tackle such a project effectively, practitioners can draw on the wealth of resources on GitHub, which hosts a variety of tools, datasets, and models for sentiment analysis. It fosters a collaborative environment that supports learning and contributions to the field.

The confluence of data preparation, feature selection, text analysis, and community resources arms data scientists with the essentials for robust sentiment investigations.

Analyzing Netflix Movies and TV Shows

Streaming services have become a staple in many homes, with Netflix leading the pack. It's known for a broad collection of movies and TV shows. This includes everything from big hits to shows that have won critical acclaim. The vast content on Netflix is not just for entertainment. It's a goldmine for data analysis and insights. In this study, data scientists use advanced methods to dissect Netflix's content.

Data analysis breathes life into raw data, turning it into meaningful patterns. With tools like statistical analysis and machine learning, experts unlock valuable insights. They track trends by studying the distribution of genres, viewer ratings, and when shows were released. This helps identify what viewers like and why.

Data isn't useful unless we can see its story. That's where data visualization steps in, making complex data easy to understand. It transforms numbers into visual charts and graphs. These visuals help us understand what content on Netflix is popular. From viewership patterns to top genres, it opens our eyes to Netflix's diverse range of offerings.

Exploring Netflix's data goes beyond the surface. It entails digging into each title's details, such as cast and crew. This deep dive reveals unexpected connections between shows and movies. Netflix is known for its high-quality recommendations, and this kind of exploration forms the basis for enhancing user experiences further.

"Data analysis enables us to understand Netflix's content landscape, while data visualization brings the insights to life. Data exploration unveils hidden relationships and opportunities for content personalization."

For data scientists, GitHub repositories offer a treasure trove of resources for Netflix data analysis. These repositories contain datasets and tools specifically for this purpose. It's a place where enthusiasts can find, contribute, and improve models to learn more about Netflix's content landscape.

Example of a Data Analysis Table:

| Title | Genre | Release Year | Average Rating |
|---|---|---|---|
| Stranger Things | Science Fiction, Horror, Drama | 2016 | 8.8 |
| The Crown | Drama, History, Biography | 2016 | 8.7 |
| Narcos | Crime, Drama, Thriller | 2015 | 8.8 |
| Black Mirror | Science Fiction, Drama, Thriller | 2011 | 8.8 |
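
A first pass at this kind of analysis could look like the sketch below, which uses the four rows of the example table to compute the genre distribution, the number of titles per release year, and the average rating per genre. The variable names are arbitrary, and a real analysis would load a full dataset, typically with a library such as pandas.

```python
from collections import Counter, defaultdict

# Rows copied from the example table: (title, genres, release year, average rating).
titles = [
    ("Stranger Things", ["Science Fiction", "Horror", "Drama"], 2016, 8.8),
    ("The Crown", ["Drama", "History", "Biography"], 2016, 8.7),
    ("Narcos", ["Crime", "Drama", "Thriller"], 2015, 8.8),
    ("Black Mirror", ["Science Fiction", "Drama", "Thriller"], 2011, 8.8),
]

# How often each genre appears; a title with several genres counts once for each.
genre_counts = Counter(genre for _, genres, _, _ in titles for genre in genres)

# How many titles were released in each year.
titles_per_year = Counter(year for _, _, year, _ in titles)

# Average rating per genre.
ratings_by_genre = defaultdict(list)
for _, genres, _, rating in titles:
    for genre in genres:
        ratings_by_genre[genre].append(rating)
avg_rating_by_genre = {g: sum(r) / len(r) for g, r in ratings_by_genre.items()}

most_common_genre = genre_counts.most_common(1)[0][0]
```

Counts and averages like these are the usual inputs to the bar charts and histograms that the visualization step produces.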

Customer Segmentation with K-Means Clustering

K-means clustering is a widely used unsupervised learning technique for customer segmentation. The goal is to group customers into clusters based on shared characteristics and profiles. This helps businesses understand their customer base and design tailored marketing strategies that improve customer satisfaction.

The first step is data preparation: cleaning and structuring the data. This involves handling missing values, encoding categorical variables, and normalizing features so that all of them are on a comparable scale. Because K-means relies on distances between points, this preprocessing directly affects the reliability of the resulting segments.

The next step is selecting the features that matter most for segmentation. Good feature selection improves both the quality and the interpretability of the clusters. Techniques such as correlation analysis and feature importance scores can help identify the most relevant attributes.

With the data prepared and the key features chosen, the K-means algorithm partitions the customers into clusters. It works iteratively: each customer is assigned to the nearest cluster centroid, the centroids are recomputed as the mean of their members, and the two steps repeat until the assignments stop changing. The objective is to minimize within-cluster variance, so that each cluster groups similar customers.
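
The iterative procedure can be written in a few lines of plain Python. This is a minimal sketch: the customer data is invented, and the centroids are initialized from the first `k` points to keep the example deterministic, whereas practical implementations usually use random or k-means++ initialization and several restarts.

```python
def kmeans(points, k, iterations=100):
    """Alternate between assigning points to centroids and moving the centroids."""
    centroids = [list(p) for p in points[:k]]  # simple deterministic start (an assumption)
    assignments = None
    for _ in range(iterations):
        # Assignment step: each point joins the cluster with the nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        if new_assignments == assignments:
            break  # converged: no point changed cluster
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignments


# Hypothetical customers: (annual spend in thousands, visits per month).
customers = [(1.0, 2.0), (9.0, 10.0), (1.5, 1.5), (8.5, 9.5), (1.2, 2.2), (9.2, 10.4)]
centroids, labels = kmeans(customers, k=2)
```

Each entry of `labels` is the segment index for the corresponding customer, and each centroid can be read as the "typical" customer of its segment.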

GitHub hosts many repositories that demonstrate customer segmentation with K-means clustering. They offer code snippets, examples, and tutorials that make it easier to understand and apply the algorithm.

Benefits of Customer Segmentation with K-Means Clustering

- Improved Marketing Strategies: Segmentation lets businesses tailor their messaging to different customer groups. With insight into each group's specific needs, they can run focused campaigns that are more effective and leave customers more satisfied.

- Enhanced Customer Experience: Customer segments allow firms to tailor offerings and communications to the tastes and habits of each cluster. This customization improves the customer experience and builds lasting relationships, positioning businesses for long-term success.

- Better Resource Allocation: Segmentation helps organizations spend their resources more wisely by concentrating on the segments that offer the highest returns. Marketing effort can then be directed toward the most profitable channels.

- Identifying New Opportunities: Segmentation can reveal unexplored market niches. By surfacing patterns in customer behavior and preferences, it points to new product or service opportunities and avenues for growth.

To wrap up, K-means clustering gives businesses a clearer picture of their customers and supports better-informed decisions. With the resources available on GitHub, organizations can carry out segmentation projects and extract insights that inform marketing, sales, and overall business strategy.

Medical Diagnosis with Deep Learning

Deep learning has brought remarkable change to medical diagnostics. It allows for precise and efficient recognition of various conditions with the help of advanced neural networks. Medical professionals benefit greatly, making more informed decisions.

Data preprocessing is key in deep learning for medical diagnosis. It includes cleaning, standardizing, and improving medical images for the best model performance. Techniques like image normalization, noise reduction, and image augmentation enhance the dataset's quality for better predictions.

Model training stands as another key phase. Through exposure to large datasets of labeled medical images, deep learning models learn to identify patterns and features. Hence, they become capable of accurately categorizing unseen images. During training, the neural network hones its performance by adjusting weights and biases.
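
The idea of adjusting weights and biases during training can be shown with the smallest possible network: a single sigmoid unit trained by gradient descent. The one-feature dataset, learning rate, and epoch count are assumptions chosen for illustration; a real diagnostic model would be a deep network trained on images with a framework.

```python
from math import exp


def sigmoid(z):
    return 1 / (1 + exp(-z))


def train(samples, epochs=2000, lr=0.5):
    """Fit the weight w and bias b of one sigmoid unit by gradient descent on log loss."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in samples:
            error = sigmoid(w * x + b) - y  # gradient of log loss w.r.t. the pre-activation
            grad_w += error * x
            grad_b += error
        # Step against the average gradient to reduce the loss.
        w -= lr * grad_w / len(samples)
        b -= lr * grad_b / len(samples)
    return w, b


# Hypothetical one-feature data (a normalized measurement); label 1 = condition present.
samples = [(0.1, 0), (0.2, 0), (0.3, 0), (0.7, 1), (0.8, 1), (0.9, 1)]
w, b = train(samples)


def predict(x):
    """Classify as positive when the predicted probability is at least 0.5."""
    return sigmoid(w * x + b) >= 0.5
```

Deep networks repeat exactly this update for millions of weights, with backpropagation supplying the gradient for each one.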

Rigorous evaluation is necessary to gauge a deep learning model's medical diagnosis capability. This involves assessing accuracy, precision, recall, and the F1 score. These measures offer insights into the model's real-world applicability and performance.
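
These four measures can be computed directly from the counts of true and false positives and negatives. The labels and predictions below are invented for illustration, with 1 meaning the condition is present.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical ground truth and model predictions for eight scans.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = classification_metrics(y_true, y_pred)
```

In diagnosis, recall deserves particular attention because a false negative means a missed condition, while accuracy alone can look high on datasets where most cases are healthy.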

"Applying deep learning techniques to medical diagnosis has the potential to revolutionize healthcare by enabling more accurate and early detection of diseases."

GitHub is a valuable resource for those keen on medical diagnosis using deep learning. It offers datasets, pre-trained models, and example code to jumpstart custom diagnostic system creation. Open-source collaboration fosters faster development and research in this area.

Benefits of Medical Diagnosis with Deep Learning

The adoption of deep learning in medical diagnosis brings numerous advantages:

- It can accurately classify medical images, improving early detection and disease treatment.

- This leads to faster, more efficient diagnosis processes, lessening the workload on healthcare workers.

- Remote diagnosis becomes feasible, providing expert insights regardless of where the patient is located.

- It supports better decision-making in healthcare, which can enhance patient outcomes.

With deep learning, medical diagnosis sees a new era of precision and efficiency. Future developments promise even more accurate and widely accessible diagnostic capabilities.

| Advantages of Medical Diagnosis with Deep Learning | Benefits |
|---|---|
| Accurate classification of medical images | Aiding in early detection and treatment of diseases |
| Improved efficiency and speed of diagnosis | Reduction in healthcare professional workload |
| Potential for remote diagnosis | Access to expert opinions globally |
| Enhanced decision-making support | Better patient outcomes |

By integrating deep learning, medical diagnosis ushers in the potential for enhanced patient care. GitHub's wide resources empower newcomers and experts to join the healthcare revolution through deep learning.

Summary

Projects in data science and machine learning on GitHub are more than learning opportunities. They let you showcase your expertise. They cover a broad array of subjects, from spotting fraud to understanding how people feel. By working on them, you gain hands-on skills and help push the field forward.

Stay curious and keep exploring the vibrant world of data science and machine learning. With GitHub's vast reservoir of projects and a community of like-minded enthusiasts, there’s no limit to what you can achieve. The field is at a tipping point, and your knowledge and skills can help move mountains. Good luck on your journey to creating cutting-edge data science projects!

FAQ

What is the Enron Email Dataset project?

The Enron Email Dataset project dives into a vast collection of internal Enron communications. This data comes from a corporate titan tainted by fraud. It looks to identify patterns and sort emails, seeking out those linked to fraud. The project steps include preparing the data, exploring it deeply, and applying statistical scrutiny. For those intrigued, GitHub houses a wealth of supplementary materials and advice.

What does the housing price prediction project entail?

The aim here is to predict housing prices using machine learning. It relies on a dataset with attributes such as lot size, building type, and year built. Essential tasks range from preparing the data to engineering new features and picking the right model. For practical insights, related GitHub repositories offer example code and instructions.

What is the goal of the project on identifying fraudulent credit card transactions?

The objective is to build a model that detects fraudulent credit card transactions. It uses a dataset of transactions made by European cardholders. The workflow includes exploring the data, preparing it for analysis, and training the model. Useful resources and tools are available on GitHub.

What does the image classification project involve?

At its core, this venture is about categorizing images, tapping into the power of convolutional neural networks (CNNs). These are the go-to for image-related tasks and are quite adept at inferring information from visuals. The journey involves getting the data ready, honing models through training, and thorough examination. GitHub's resources are poised to assist, offering both code snippets and models ready for action.

What is the sentiment analysis project about?

This project analyzes X (Twitter) posts for sentiment using text mining. It assesses the emotional content of short texts such as tweets. The pipeline includes preparing the data, extracting features, and applying a classification model. GitHub resources offer a solid starting point with examples and data.

What does the project on analyzing Netflix movies and TV shows entail?

This project focuses on Netflix's vast entertainment catalog. The tasks involve searching for trends, creating visualizations, and drawing out insights about the content. GitHub repositories provide both datasets and analytical tools for this undertaking.

What is the customer segmentation project about?

The plan here is to group customers using K-means clustering, ideal for such tasks. It starts with preparing the customer data, choosing pertinent features, and running the clustering algorithm. For those eager to try it out, GitHub hosts instructional materials and practical samples.

What does the project on medical diagnosis with deep learning involve?

Deep learning steps in to aid in medical diagnostics in this initiative. It relies on models like CNNs to scrutinize medical images for insights. The roadmap includes readying the data, tuning the model, and assessing its performance. For accessible starting points, GitHub is a goldmine for datasets and model blueprints.