How to Annotate Reports for Automated ESG Scoring

In the modern world, investors and society demand transparency from companies not only regarding profits but also regarding their impact on the environment, society, and governance. These aspects are united under the concept of ESG.

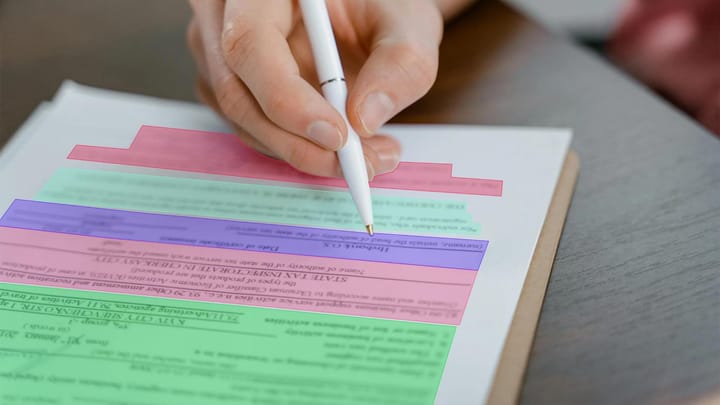

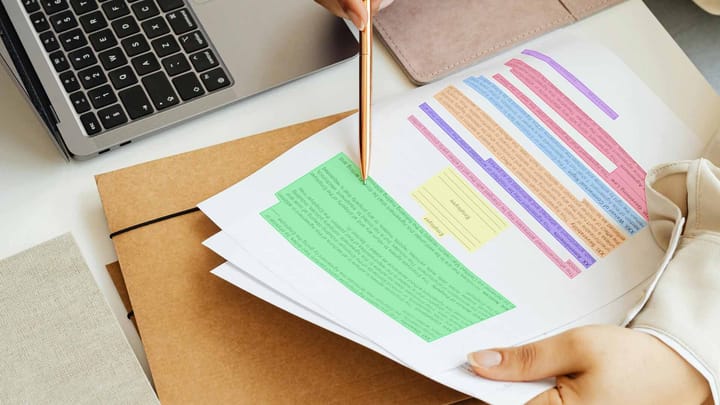

The problem is that companies present their ESG reports as long, unstructured texts, such as