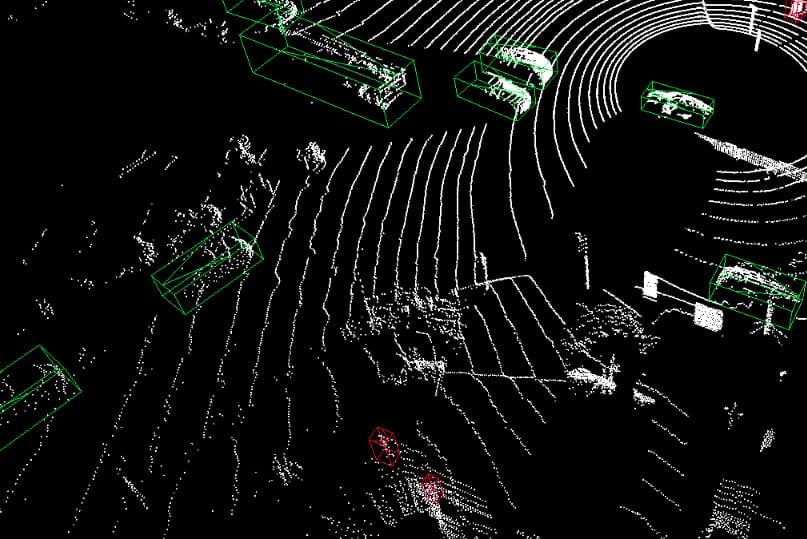

What is LiDAR 3D annotation and why is it relevant for autonomous vehicles?

LiDAR 3D annotation is the process of labeling 3D point clouds collected by LiDAR sensors: identifying vehicles, pedestrians, road edges, and other elements so that AI models can be trained for spatial perception. With these labels, systems learn to interpret their surroundings in three dimensions, which substantially improves object detection, distance estimation, and navigation. This precision is especially important in low-light or adverse weather conditions. Major trends in 2025 emphasize AI-powered automatic LiDAR annotation, trajectory labeling, and the use of synthetic data to reduce manual work.
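To make "labeling a point cloud" concrete, a 3D object label is often stored as a box center, its dimensions, and a heading (yaw) angle. A common sanity check is to count how many LiDAR points actually fall inside each labeled box, since an empty box usually signals a labeling mistake. The sketch below assumes a hypothetical `Box3D` format (center `x, y, z`, size `length, width, height`, `yaw` in radians around the vertical axis); public driving datasets use similar ideas, but their axis and angle conventions differ.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    """Hypothetical 3D label: center (x, y, z), size (length, width, height), yaw in radians."""
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float
    label: str


def points_in_box(points, box):
    """Return the (x, y, z) points that fall inside the yaw-rotated box."""
    cos_y, sin_y = math.cos(box.yaw), math.sin(box.yaw)
    inside = []
    for px, py, pz in points:
        # Translate the point into the box-centered frame.
        dx, dy, dz = px - box.x, py - box.y, pz - box.z
        # Rotate by -yaw so the box's length axis aligns with local x.
        local_x = dx * cos_y + dy * sin_y
        local_y = -dx * sin_y + dy * cos_y
        if (abs(local_x) <= box.length / 2
                and abs(local_y) <= box.width / 2
                and abs(dz) <= box.height / 2):
            inside.append((px, py, pz))
    return inside


if __name__ == "__main__":
    # A car label rotated 90 degrees, so its 4.5 m length runs along the y-axis.
    car = Box3D(10.0, 2.0, 0.8, 4.5, 1.8, 1.6, math.pi / 2, "car")
    cloud = [(10.0, 2.0, 0.8), (10.0, 4.0, 0.8), (12.0, 2.0, 0.8), (10.5, 2.0, 0.5)]
    print(len(points_in_box(cloud, car)), "points inside the", car.label, "box")
```

In a real pipeline the same check would run over every label in a frame, flagging boxes with zero or suspiciously few points for human review.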

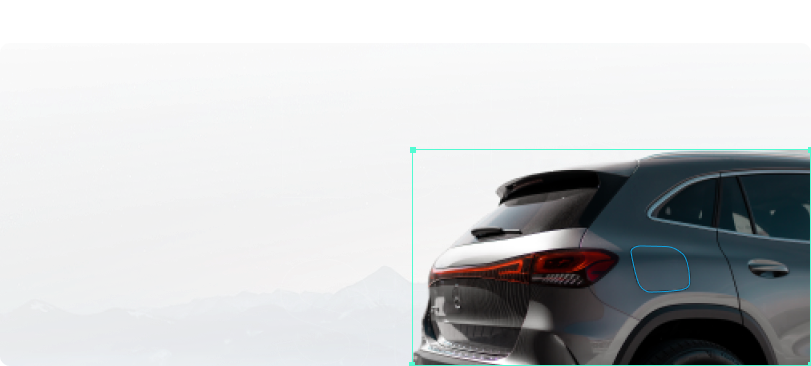

How does ADAS data annotation contribute to vehicle safety?

ADAS data annotation involves tagging sensor data from cameras, radar, and LiDAR so that vehicles learn to recognize road signs, lane markings, cyclists, and other dynamic elements. These labels establish the “ground truth” for the machine learning models behind driver-assistance features such as lane keeping, automatic braking, and adaptive cruise control. High-quality annotated datasets improve real-world adaptability, support regulatory compliance, and reduce prediction errors, all of which contribute to safer and more reliable assisted driving.
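Ground truth is also what lets teams measure prediction errors. One common approach is to match a model's detections to the annotated boxes using intersection-over-union (IoU) and then compute precision and recall. The sketch below assumes a single object class, axis-aligned 2D boxes given as `(x_min, y_min, x_max, y_max)`, and a 0.5 IoU threshold; the function names are illustrative, and production benchmarks typically use more elaborate protocols such as average precision over several thresholds.

```python
def iou(a, b):
    """Intersection-over-union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, threshold=0.5):
    """Greedily match (score, box) predictions, highest score first, to unmatched ground truth."""
    matched = set()
    true_positives = 0
    for _score, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_idx, best_iou = None, threshold
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue  # each ground-truth box can be matched only once
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is not None:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


if __name__ == "__main__":
    labels = [(0, 0, 10, 10), (20, 20, 30, 30)]           # annotated ground truth
    detections = [(0.9, (1, 1, 10, 10)), (0.8, (50, 50, 60, 60))]
    print(precision_recall(detections, labels))
```

Here one detection overlaps a labeled box well and one is a false positive, while one labeled object is missed, so both precision and recall come out at 0.5.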

What are current trends in AI-assisted annotation for LiDAR and ADAS?

In 2025, annotation workflows are shifting in several directions:

- AI-driven pre-annotation reduces manual effort and speeds up labeling.

- Semi-supervised learning uses both labeled and unlabeled data to improve efficiency.

- Interpolation fills the gaps between annotated frames, enabling high-quality object tracking in sensor fusion scenarios.

- Timeline annotation: adding temporal information to 3D data streams supports trajectory and motion prediction.

These trends support scalable annotation pipelines for autonomous systems.
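Interpolation is easiest to see with two keyframes: an annotator labels an object's 3D box in frames 0 and 10, and the frames in between are filled in automatically for later review. The sketch below assumes a hypothetical keyframe format (a dict with `frame`, `center`, `size`, and `yaw`), linear motion between keyframes, and a rigid object whose size does not change; heading is interpolated along the shortest arc so a box crossing the ±π boundary does not spin the wrong way.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians into the range [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def interpolate_track(key_a, key_b, frame):
    """Estimate a 3D box at `frame`, which lies between two annotated keyframes."""
    t = (frame - key_a["frame"]) / (key_b["frame"] - key_a["frame"])
    # Shortest signed angular difference, so 3.0 -> -3.0 rad turns by ~0.28 rad, not ~6 rad.
    delta_yaw = wrap_angle(key_b["yaw"] - key_a["yaw"])
    return {
        "frame": frame,
        "center": tuple(lerp(a, b, t) for a, b in zip(key_a["center"], key_b["center"])),
        "size": key_a["size"],  # assumption: rigid object, dimensions stay constant
        "yaw": wrap_angle(key_a["yaw"] + delta_yaw * t),
    }


if __name__ == "__main__":
    start = {"frame": 0, "center": (0.0, 0.0, 0.0), "size": (4.5, 1.8, 1.6), "yaw": 3.0}
    end = {"frame": 10, "center": (10.0, 5.0, 0.0), "size": (4.5, 1.8, 1.6), "yaw": -3.0}
    for f in range(1, 10):
        print(interpolate_track(start, end, f))
```

Linear interpolation is only a first guess; vehicles that brake or turn sharply between keyframes still need an annotator to add more keyframes or correct the filled-in boxes.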

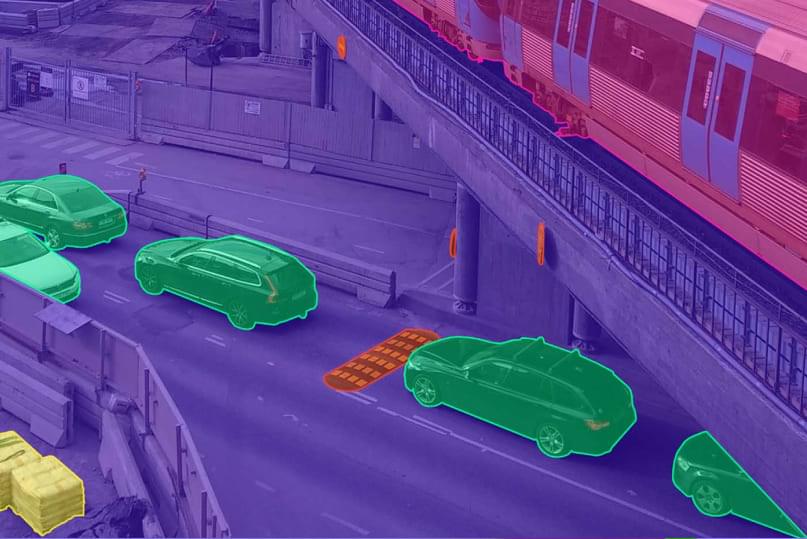

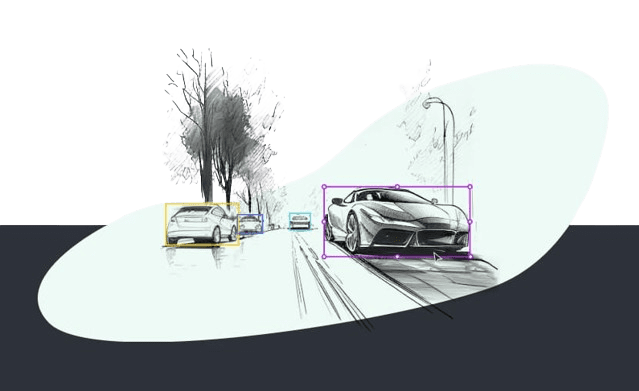

What types of annotation techniques are used in autonomous driving?

Common annotation types include:

- 2D/3D bounding boxes for basic object tagging.

- Polygons and semantic segmentation for detailed shape mapping and road segmentation.

- Instance segmentation for labeling discrete elements like lanes and crosswalks.

- Keypoint and skeletal annotation for facial recognition and pose estimation in pedestrians/cyclists.

- LiDAR point-cloud labeling for 3D object and movement detection.

Together, these techniques supply the training data ADAS need to build robust models of their environment.
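Polygon annotations are frequently converted into per-pixel semantic segmentation masks before training. A simple way to do this is to test each pixel center against the polygon with the even-odd (ray casting) rule. The sketch below assumes polygons given as lists of `(x, y)` vertices in pixel coordinates and a hypothetical integer `class_id` per class, with 0 meaning background; real tooling would use an optimized rasterizer rather than a per-pixel Python loop.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd (ray casting) test: is (x, y) inside the polygon [(x0, y0), (x1, y1), ...]?"""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed
        # (this also guarantees y2 != y1, so the division below is safe).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:  # the ray going right from (x, y) crosses this edge
                inside = not inside
    return inside


def rasterize_polygon(polygon, width, height, class_id):
    """Semantic mask: pixels whose centers fall inside the polygon get class_id, others 0."""
    return [
        [class_id if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


if __name__ == "__main__":
    crosswalk = [(1, 1), (3, 1), (3, 3), (1, 3)]  # a tiny square region for illustration
    for row in rasterize_polygon(crosswalk, 4, 4, class_id=7):
        print(row)
```

Sampling at pixel centers (`+ 0.5`) is one convention among several; tools differ on how boundary pixels are assigned, which matters when comparing masks produced by different pipelines.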

How do large language models (LLMs) integrate with LiDAR and ADAS annotation workflows?

LLMs are increasingly used to auto-generate annotation instructions, assist with quality assurance, and formulate annotation schemas for unstructured textual metadata. Sensor logs, driver behavior notes, and basic descriptions can all be processed by LLMs with relatively high accuracy.

While vision and sensor annotation pipelines still keep humans in the loop, LLMs help with documentation, error detection, and process automation, creating more efficient workflows across multimodal data types.
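Because LLM output is not guaranteed to be well-formed, a common pattern is to validate any structured labels an LLM produces before they enter the dataset, and route failures to a human. The sketch below does not call any specific LLM API; it only checks a JSON string that some model is assumed to have returned. The schema (`frame_id`, `label`, `confidence`) and the `ALLOWED_CLASSES` taxonomy are hypothetical placeholders for a project's own annotation guidelines.

```python
import json

# Hypothetical taxonomy; a real project would load this from its annotation guidelines.
ALLOWED_CLASSES = {"car", "pedestrian", "cyclist", "traffic_sign"}


def validate_llm_label(raw_text):
    """Return a list of problems in an LLM-generated JSON label (an empty list means accept)."""
    try:
        record = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(record, dict):
        return ["top-level value must be a JSON object"]

    errors = []
    frame_id = record.get("frame_id")
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(frame_id, int) or isinstance(frame_id, bool):
        errors.append("frame_id must be an integer")

    label = record.get("label")
    if not isinstance(label, str) or label not in ALLOWED_CLASSES:
        errors.append(f"unknown label: {label!r}")

    confidence = record.get("confidence")
    if (isinstance(confidence, bool)
            or not isinstance(confidence, (int, float))
            or not 0.0 <= confidence <= 1.0):
        errors.append("confidence must be a number in [0, 1]")
    return errors


if __name__ == "__main__":
    print(validate_llm_label('{"frame_id": 12, "label": "cyclist", "confidence": 0.93}'))
    print(validate_llm_label('{"frame_id": "12", "label": "bicycle", "confidence": 1.4}'))
```

Records that return an empty list can be accepted automatically, while anything else is sent back to an annotator, which keeps the human-in-the-loop step focused on the cases the LLM got wrong.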